flood wrote (15 May 2020, 17:21):

IMO the only thing I care about is how much slower a display is than a "perfect display", if both are receiving the same video signal (and hence running at the same refresh rate and whatever).

Yup, this is excellent, and I agree with flood here.

Lag standards also need to be adjustable to permit using real life as a valid lag reference, because displays sometimes have to be benchmarked against real life.

Sometimes we definitely need the IDMS method. It's perfect for the right job. But the toolbox is big, and for some jobs the IDMS standard is total crap, just like a Phillips screwdriver isn't good for flat-head screws. Or worse, like trying to use a staple gun as a screwdriver, or a hammer as a knife. Flatly, sometimes the IDMS lag standard is the wrong tool for the job.

Bottom line, IDMS can be perfect for some applications, but woefully imperfect for others. The IDMS standard is not futureproofed for the refresh rate race to retina refresh rates, for many esports scenarios, or for many real-life emulation scenarios. IDMS error margins do shrink at higher refresh rates, but we're not going to be eliminating a lot of those error margins for a long time.

IDMS is chiefly a 0-framebuffer-depth, VSYNC ON, GtG 50% lag measurement standard, and does not accommodate scanout-bypassing sub-refresh latency methods (virtual reality raster beam racing techniques, VSYNC OFF techniques, variable-scanout-velocity displays, interactions between sequential cable delivery and global strobe-flash backlights, etc). It also doesn't account for weird lag-interaction behaviours (both beneficial and deleterious) between different components of the GPU-to-display chain. Some scalers/TCONs have scanout-distorting mechanics (more visible on plasma/DLP than on LCDs, but some LCDs occasionally have this too, e.g. 240Hz panels that need to buffer a lower-Hz signal in order to scan it out at the full 1/240sec velocity), and these distort the lag mechanics of VSYNC OFF or "virtual reality beam racing" techniques, like NVidia VRWorks front-buffer techniques. Some of these behaviours are fully co-operative between GPU and display.

Yesterday it was analog at a perfect constant pixel clock. Today it's digital. Now it's micropackets. Then we've got VRR and VSYNC OFF. There's now some DSC too. And tomorrow, additional layers such as frame rate amplification layers (e.g. display co-GPUs). There is slowly increasing latency co-dependence between GPU and display that cannot always be siloed. Sometimes we DO need to silo it, but sometimes we need to measure Present()-to-photons for every single pixel of the display, to get the proper gametime:photontime relativity to the real world. The gametime:photontime relationship can jitter differently for different sync technologies and monitor settings, meaning lower lag but worse lag jitter, and different lag-jitter ranges for different pixels: e.g. a worse lag range at the bottom edge than the top edge, or vice versa, such as a [0...4.1ms] lag-jitter range for one edge of the screen and [0...16.7ms] for the opposite edge. This comes from multi-layered, unexpected interactions between technologies, including scanout-compression artifacts (e.g. scan-converting TCONs). Anyway, I've successfully thought through and formulated most of these common interactions. I've seen almost all scanout patterns and latency interactions.
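To make the "GtG 50%" stopwatch-stop point concrete, here is a minimal sketch. It assumes a photodiode luminance trace sampled at known times, and takes the first and last samples as the start and end levels of the transition. The function name and sample values are hypothetical, and this is not the IDMS procedure itself, which specifies much more. It finds the first time the trace crosses 50% of the way from the start level to the end level, interpolating linearly between samples.

```python
def gtg_crossing_time_ms(times_ms, levels, fraction=0.5):
    """Return the time at which a luminance trace first crosses
    start + fraction * (end - start), or None if it never does.

    Hypothetical helper: `times_ms` and `levels` are photodiode samples,
    and the first/last samples are taken as the start/end GtG levels.
    """
    if len(times_ms) != len(levels) or len(levels) < 2:
        raise ValueError("need two equal-length sequences of >= 2 samples")
    start, end = levels[0], levels[-1]
    threshold = start + fraction * (end - start)
    rising = end >= start
    for i in range(1, len(levels)):
        a, b = levels[i - 1], levels[i]
        crossed = b >= threshold if rising else b <= threshold
        if crossed:
            if b == a:
                return times_ms[i]
            # Linear interpolation between the two samples bracketing the threshold.
            t0, t1 = times_ms[i - 1], times_ms[i]
            return t0 + (threshold - a) * (t1 - t0) / (b - a)
    return None


# Hypothetical rising transition sampled every 1 ms.
print(gtg_crossing_time_ms([0, 1, 2, 3, 4], [0, 10, 40, 80, 100]))  # 2.25
```

The lag number is then this crossing time minus whatever stopwatch-start event the methodology uses (e.g. VSYNC or Present()). Picking a different start event or a different `fraction` yields a different number for the same display.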

Also, erratic stutter is a sub-branch of lag-volatility mathematics. Mathed correctly, latency volatility and erratic stutter both come from the same gametime:photontime divergences. (I'm not talking about regular stutter, like a perfectly framepaced low frame rate of 20fps at exactly 1/20sec intervals, but erratic stutter.) In the Vicious Cycle Effect, during virtual reality, even a 1ms stutter can become human-visible.
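The link between latency volatility and erratic stutter can be sketched as a minimal example. It assumes we have each frame's gametime and the time its photons appeared, both hypothetical inputs in milliseconds. Per-frame latency is photon time minus gametime. Erratic stutter shows up as frame-to-frame changes in that latency, while a perfectly framepaced low frame rate (like 20fps at exactly 1/20sec) produces none.

```python
def latencies_ms(game_times_ms, photon_times_ms):
    """Per-frame gametime:photontime latency (photon time minus gametime)."""
    if len(game_times_ms) != len(photon_times_ms):
        raise ValueError("need one photon time per gametime")
    return [p - g for g, p in zip(game_times_ms, photon_times_ms)]


def erratic_stutter_ms(game_times_ms, photon_times_ms):
    """Frame-to-frame change in latency; all zeros means no erratic stutter."""
    lat = latencies_ms(game_times_ms, photon_times_ms)
    return [b - a for a, b in zip(lat, lat[1:])]


game = [0, 50, 100, 150]  # 20fps, framepaced exactly 1/20sec (hypothetical)
print(erratic_stutter_ms(game, [30, 80, 130, 180]))  # [0, 0, 0]: regular, no erratic stutter
print(erratic_stutter_ms(game, [30, 81, 130, 182]))  # [1, -1, 2]: 1-2ms erratic stutter
```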

For example, a head turn in virtual reality at 8000 pixels/sec is a slow head turn on an 8K VR headset (even 8K is not sharp enough to be retina when used for VR, because it's blown-up to bigger-than-IMAX sizes). 1ms translates to 8 pixels at 8000 pixels/sec. So a 1ms stutter is an 8 pixel jump. Assuming motion blur is sufficiently low (e.g. 0.3ms MPRT like Valve Index), that 1ms stutter can be visible. 1ms stutter is mostly invisible on a 60Hz 240p CRT Nintendo game because of low resolution.
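The arithmetic above is simply speed × time. Here is a minimal sketch, built on a simple heuristic of our own (not from the post): a stutter jump is likely noticeable when it is larger than the motion-blur trail (speed × persistence). The 2000 pixels/sec speed for the 60Hz LCD case is a hypothetical value chosen only for illustration.

```python
def jump_px(speed_px_per_s, stutter_ms):
    """Pixels skipped by a stutter of `stutter_ms` during motion."""
    return speed_px_per_s * stutter_ms / 1000.0


def blur_px(speed_px_per_s, persistence_ms):
    """Motion-blur trail length for a given persistence (MPRT)."""
    return speed_px_per_s * persistence_ms / 1000.0


def stutter_likely_visible(speed_px_per_s, stutter_ms, persistence_ms):
    """Hypothetical heuristic: the jump must stand out from the blur trail."""
    return jump_px(speed_px_per_s, stutter_ms) > blur_px(speed_px_per_s, persistence_ms)


# 8K VR head turn at 8000 px/s, 0.3ms MPRT: 8px jump vs ~2.4px blur.
print(jump_px(8000, 1), stutter_likely_visible(8000, 1, 0.3))  # 8.0 True
# 60Hz sample-and-hold LCD (~16.7ms persistence) at a hypothetical 2000 px/s.
print(stutter_likely_visible(2000, 1, 1000 / 60))  # False
```

The low-resolution CRT case fits the same formula: the same on-screen motion covers far fewer pixels per second, so a 1ms jump can shrink to a fraction of a pixel.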

1ms stutter is mostly invisible on a 60Hz 1080p LCD because of high persistence of LCD.

But 1ms stutter is very human-visible on a low-persistence 8K VR display.

So tiny sub-refresh lag error margins are important, and we've seen displays where top-vs-bottom lag error margins are quite noticeable (e.g. the top edge having varying absolute lag samples of [15ms...19ms] relative to the computer's frame Present(), and the bottom edge having varying absolute lag samples of [4ms...19ms] relative to the computer's frame Present()) because of all those multilayered lag interactions. These milliseconds often don't matter to most people, but can be critical when we're trying to develop a display that mimics real life.
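To see how a lower average lag can still come with worse lag jitter, here is a minimal sketch that summarizes per-edge lag samples. The sample values are hypothetical, picked only to span the [15ms...19ms] and [4ms...19ms] ranges quoted above.

```python
from statistics import mean


def lag_summary(samples_ms):
    """Min, max, lag-jitter range, and mean of Present()-to-photon samples."""
    lo, hi = min(samples_ms), max(samples_ms)
    return {"min": lo, "max": hi, "range": hi - lo, "mean": mean(samples_ms)}


top = [15, 16, 17, 19]    # hypothetical samples within [15ms...19ms]
bottom = [4, 9, 14, 19]   # hypothetical samples within [4ms...19ms]
print(lag_summary(top))     # {'min': 15, 'max': 19, 'range': 4, 'mean': 16.75}
print(lag_summary(bottom))  # {'min': 4, 'max': 19, 'range': 15, 'mean': 11.5}
```

Here the bottom edge has the lower average lag but almost four times the lag-jitter range. A single averaged lag number would hide that.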

(It's also a small part of the generic complaint "Lightboost looks stuttery/jittery during VSYNC OFF gameplay". Even opposite edges of the screen have a different stutter feel. Most people don't notice it, but it's noticeable if you pay attention, and measurable when taking 1000 lag samples during 120Hz strobing at 240Hz VSYNC OFF on a panel with a scan-converting TCON/scaler. There's an 8ms lag-volatility difference between top and bottom, which matters if you're beam racing or doing scanout-lag-bypassing VSYNC OFF.)

The IDMS lag measurement standard is a narrow lag tool in a big lag toolbox.

Even the Blur Busters display lag standard initiative won't necessarily be perfect, but it would provide more futureproof standardization upgrade paths, simply by allowing the introduction of more optional variables (e.g. strobing, strobe crosstalk, faint pre-strobes that cheat lag tests). The first standard will cover the most common reference profiles.

AndreasSchmid wrote (17 May 2020, 13:09):

As far as I can tell, this simplifies the problem a bit too much:

1. A "perfect display" would be a display with zero lag, wouldn't it? This obviously does not exist, so if we were to do a comparison, we would have to compare to a "non-perfect" display.

However, we need a "perfect display" reference. It's a necessary part of a future lag standard.

The problem is we now have virtual reality and Holodeck simulators.

The fact is that a "perfect display" already exists: It's called "real life".

And guess what a display sometimes does? It emulates real life.

The great news is that real life is mathematically simple, and a lot of things about real life can be calculated.

The IDMS is a simplistic lag standard whose Venn diagram is not big enough to include this reference.

I am cross-posting here:

Chief Blur Buster wrote:

hmukos wrote (17 May 2020, 12:20):

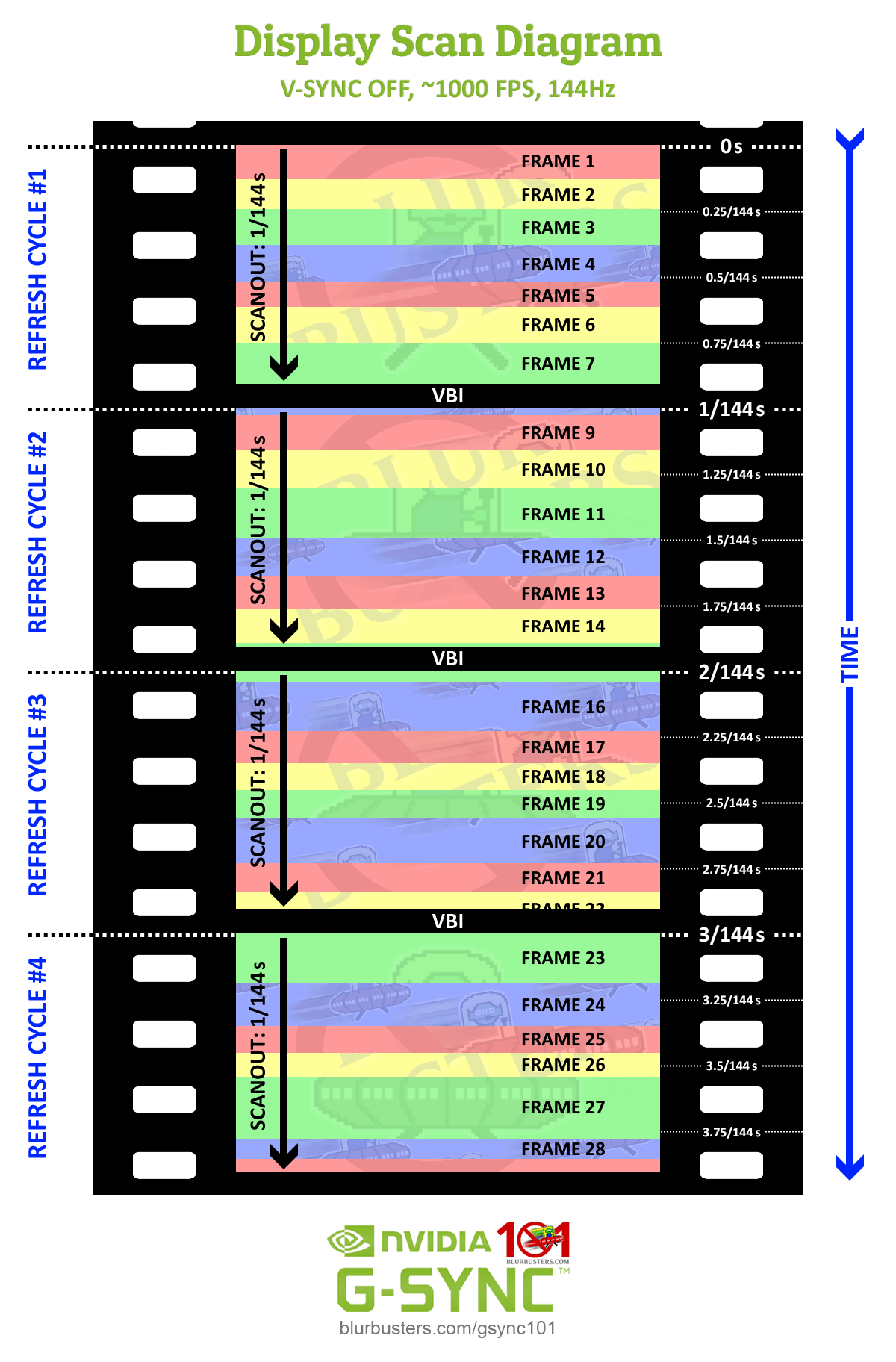

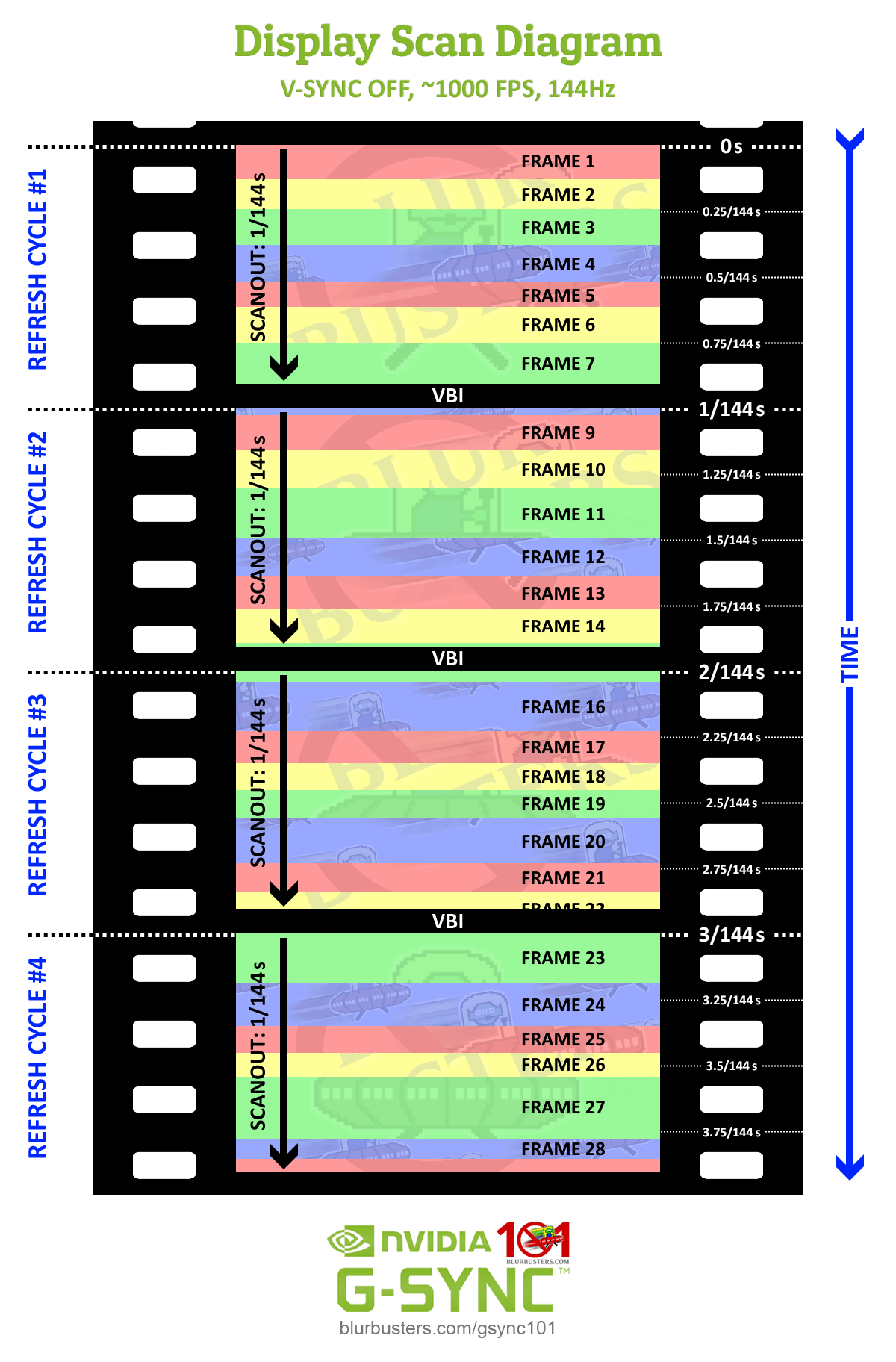

That's what I don't understand. Suppose we made an input right before "Frame 2", and then it rendered and its torn part was scanned out. How is that different in terms of input lag from doing the same for "Frame 7"? Doesn't V-SYNC OFF mean that as soon as our frame is rendered, its torn part is instantly shown at the place where the monitor is currently scanning?

Yes, VSYNC OFF frameslices splice into cable scanout (usually within microseconds), and some monitors have panel scanout that is in sync with cable scanout (sub-refresh latency pixel-for-pixel).

What I think Jorim is saying is that higher refresh rates reduce the latency error margin caused by scanout. 60Hz produces a 16.7ms range, while 240Hz produces a 4.2ms range.

For a given frame rate (e.g. 500fps), each frame outputs 4x more frameslice pixels at 240Hz than at 60Hz. That's because the scanout is 4x faster, so each frame spews out a 4x-taller frameslice. Each frameslice is a latency sub-gradient within the scanout lag gradient. So for 100fps, a frameslice has a lag of [0ms...10ms] from top to bottom. For 500fps, a frameslice has a lag of [0ms...2ms] from top to bottom. We're assuming a 60Hz display with a 1/60sec scan velocity and a 240Hz display with a 1/240sec scan velocity, with identical processing lag, and thus identical absolute lag.
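The scanout and frameslice arithmetic can be sketched as follows, under the same assumptions as the paragraph (scan velocity of 1/Hz, identical processing lag) plus one of ours: the vertical blanking interval is ignored. The function names are hypothetical. The lag gradient inside a frameslice equals the frametime (1/500sec = 2ms at 500fps), capped at one full scanout when the frame rate is below the refresh rate.

```python
def scanout_ms(refresh_hz):
    """Time for one top-to-bottom scanout, assuming a 1/Hz scan velocity
    and ignoring the blanking interval."""
    return 1000.0 / refresh_hz


def frameslice(refresh_hz, fps, vertical_lines=1080):
    """Return (frameslice height in lines, top-to-bottom lag gradient in ms)
    for VSYNC OFF; a frame slower than one scanout covers the whole screen."""
    frametime = 1000.0 / fps
    fraction = min(1.0, frametime / scanout_ms(refresh_hz))
    return vertical_lines * fraction, min(frametime, scanout_ms(refresh_hz))


for hz in (60, 240):
    height, gradient = frameslice(hz, 500)
    print(f"{hz}Hz @ 500fps: {height:.1f} lines, lag gradient [0...{gradient:.1f}ms]")
# 60Hz @ 500fps: 129.6 lines, lag gradient [0...2.0ms]
# 240Hz @ 500fps: 518.4 lines, lag gradient [0...2.0ms]
```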

Even if average absolute lag is identical for a perfect 60Hz monitor and a perfect 240Hz monitor, the lagfeel is lower at 240Hz because more pixel update opportunities occur per pixel, allowing earlier visibility per pixel.

Now, if you measured the average lag of all 1920x1080 pixels, from all possible (infinitely many) gametime offsets to all actual photons (including situations where NO refresh cycles are happening and NO frames are happening), the average visibility lag for a pixel is actually lower at 240Hz, thanks to the more frequent pixel excitations.

Understanding this properly requires decoupling latency from frame rate, and thinking of gametime as an analog continuous concept instead of a digital value stepping from frame to frame. Once you math this correctly and properly, this makes sense.

Metaphorically, and mathematically (in photon visibility math), it is akin to wearing shutter glasses flickering at 60Hz versus 240Hz while walking around in real life (not staring at a screen). Real life will feel slightly less lagged if the shutters of your glasses are flashing at 240Hz instead of 60Hz. That's because of the more frequent retina-excitement opportunities for the real-world photons hitting your eyeballs, reducing the average lag of the photons you actually see.

Once you use proper mathematics (treat a display as an infinite-refreshrate display) and calculate all lag offsets from a theoretical analog gametime, there's always more lag at lower refresh rates, despite having the same average absolute lag.
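Here is a quick numeric check of the "more frequent pixel excitations" argument, as a minimal sketch. It assumes events happen at uniformly random analog gametimes and that each pixel can show the latest state at every refresh (think ideal VSYNC OFF at very high frame rates). Both displays share the same hypothetical 5ms processing lag. The expected extra wait until a pixel's next refresh is half a refresh period, so with identical absolute lag, 60Hz still trails 240Hz by about 6ms relative to an infinite-Hz reference.

```python
import random


def mean_visibility_lag_ms(refresh_hz, processing_ms, trials=100_000, seed=1):
    """Monte Carlo: average lag from a random analog event time to the first
    photon, versus an infinite-Hz reference that shows the event instantly."""
    period = 1000.0 / refresh_hz
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        event = rng.uniform(0.0, period)  # event time within one refresh period
        wait = period - event             # pixel refreshes at multiples of the period
        total += wait + processing_ms
    return total / trials


def expected_visibility_lag_ms(refresh_hz, processing_ms):
    """Closed form: processing lag plus half a refresh period."""
    return processing_ms + 500.0 / refresh_hz


for hz in (60, 240):
    print(hz, round(mean_visibility_lag_ms(hz, 5.0), 2), expected_visibility_lag_ms(hz, 5.0))
```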

Most lag formulas don't factor this in, not even absolute lag. Nearly all lag test methodologies neglect the more-frequent-pixel-excitation-opportunity factor, which matters if you're trying to use the real world as the scientific lag reference.

The existing lag numbers are useful for comparing displays, but they fail to reveal the lag advantage of 240Hz over 60Hz at identical absolute lag, which comes from the more frequent sampling rate along the analog domain. Doing this math correctly requires a lag test method that decouples from digital references and calculates against a theoretical infinite-Hz, instant-scanout mathematical reference (i.e. real life as the lag reference). Once the lag formula does this, suddenly the "same absolute lag number" feels like it's hiding many lag secrets that many humans do not understand.

We have a use for this type of lag reference too, as part of a future lag standard, because VR and Holodecks are trying to emulate real life, and thus we need lag standards with a Venn diagram big enough to use this as a reference.

Lag is a complex science.

So you see, two different-Hz displays can have identical lag numbers with certain sync technologies, but the higher-Hz display is less laggy relative to real life, thanks to more frequent photon opportunities.

We need an ironclad lag-measurement standard flexible and powerful enough to meet the needs of the 21st century.

Displays are getting more complex. Sync technologies are getting more complex (e.g. the invention of variable refresh rate), injecting GPU-display co-operative behaviors that cannot be siloed like in classical fixed-Hz displays. Also, some future displays will have a co-GPU to help with frame rate amplification technologies, creating a more intimate connection between the display and the GPU for some important applications. Most researchers are still stuck in "fixed-Hz think", while Blur Busters is thinking far beyond.

Also, you might have seen some of my Tearline Jedi work, which controls VSYNC OFF tearlines to create microsecond latency for TOP/CENTER/BOTTOM by generating pixels streamed out of the GPU output in real time (bypassing the framebuffer); see the Tearline Jedi Thread. This is how the Atari 2600 did it: since the Atari 2600 did not have a frame buffer, it had to generate pixels in real time. This is possible with GPUs by using VSYNC OFF to bypass frame buffers, since VSYNC OFF tearlines are just scan line rasters. With these tricks, I'm able to create sub-millisecond Present()-to-photons for the entire screen surface of a display (at least on CRT), with none of the TOP/CENTER/BOTTOM lag dissonances. It also partially explains why some esports players use VSYNC OFF at ultrahigh frame rates (500fps) in CS:GO, to create a consistent TOP-to-BOTTOM lagfeel. Most lag formulas neglect to acknowledge the real-time streaming of VSYNC OFF frameslices as a scanout-lag-bypassing mechanism, and this is why Leo Bodnar numbers (and IDMS numbers) are useless for CS:GO players who win thousands of dollars in esports.
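The TOP/CENTER/BOTTOM lag dissonance can be illustrated with a simplified model, as a minimal sketch. The assumptions are ours and this is not a Blur Busters formula. With VSYNC ON, the frame content is sampled once at the start of each refresh and scanned out top-to-bottom over one refresh period. With idealized VSYNC OFF at very high frame rates, every row shows the latest state the moment the scanout reaches it. Events occur at uniformly random times, and `processing_ms` is a hypothetical fixed lag.

```python
def row_mean_lag_ms(row_fraction, refresh_hz, processing_ms=0.0, mode="vsync_on"):
    """Mean event-to-photon lag for a row (0.0 = top, 1.0 = bottom)."""
    if not 0.0 <= row_fraction <= 1.0:
        raise ValueError("row_fraction must be between 0.0 and 1.0")
    period = 1000.0 / refresh_hz
    if mode == "vsync_on":
        # Half-period average wait for the next frame sample, then scanout delay.
        return processing_ms + period / 2 + row_fraction * period
    if mode == "vsync_off_ideal":
        # Each row is refreshed once per period with fresh content: half-period wait.
        return processing_ms + period / 2
    raise ValueError(f"unknown mode: {mode}")


for mode in ("vsync_on", "vsync_off_ideal"):
    lags = [round(row_mean_lag_ms(y, 60, mode=mode), 1) for y in (0.0, 0.5, 1.0)]
    print(mode, "TOP/CENTER/BOTTOM:", lags)
# vsync_on TOP/CENTER/BOTTOM: [8.3, 16.7, 25.0]
# vsync_off_ideal TOP/CENTER/BOTTOM: [8.3, 8.3, 8.3]
```

In this model, VSYNC ON spreads the lag by one full refresh period from top to bottom, while idealized VSYNC OFF gives every row the same average lag.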

Now, this lag math becomes complex when we involve scan-converting TCONs. For example, 240Hz fixed-horizontal-scanrate panels like the BenQ XL2546 (DyAc OFF) can only refresh a 60Hz refresh cycle in 1/240sec, unlike scanrate-multisync panels (like the BenQ XL2411P), whose scanout slows down to match the Hz and scanrate of the cable signal. It becomes even more complex when we add strobing (which converts scanout visibility to global visibility). We can have up to 3 different multilayered vertical latency gradients and lag-gradient compressions (some of them running in the opposite direction from the others), caused by sync technology settings at the GPU level and by things like the monitor's VRR or strobing setting, all interacting simultaneously to create latency behaviours that never reveal themselves in VESA / IDMS / etc.
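One such lag-gradient compression can be sketched for a scan-converting panel, as a minimal example. It hypothetically assumes a TCON that receives a 60Hz signal over a full 1/60sec cable scanout but scans the panel in 1/240sec, starting its panel scanout as late as necessary so it never overtakes incoming data. Real scalers may buffer differently (e.g. a full frame), which would shift these numbers.

```python
def scan_conversion_extra_lag_ms(row_fraction, input_hz, panel_scan_hz):
    """Extra lag for a row (0.0 = top, 1.0 = bottom) from cable arrival to panel refresh."""
    t_cable = 1000.0 / input_hz       # cable delivery time for one refresh
    t_panel = 1000.0 / panel_scan_hz  # panel's fixed top-to-bottom scan time
    if t_panel > t_cable:
        raise ValueError("this sketch only covers panels scanning faster than the cable")
    panel_start = t_cable - t_panel   # latest start that never overtakes the cable
    arrives = row_fraction * t_cable
    shown = panel_start + row_fraction * t_panel
    return shown - arrives


for y in (0.0, 0.5, 1.0):
    print(y, round(scan_conversion_extra_lag_ms(y, 60, 240), 2))
# 0.0 12.5
# 0.5 6.25
# 1.0 0.0
```

Under this policy the top edge waits 12.5ms while the bottom edge waits nothing, a gradient running opposite to the cable's own top-to-bottom delivery gradient. With a multisync panel (`panel_scan_hz` equal to `input_hz`), the extra lag is zero for every row.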

The lag formula needs to be flexible and futureproof enough to accommodate such situations too. The great news is that there's a path to such a lag standard, and I would like to help work on it.

That's why some classic lag measurement formulas are garbage for some real-world lag-measurement applications. But we say this for an excellent and legitimate scientific reason.

We're the people who conceptualized the idea of the "Holodeck Turing Test": real life matching virtual reality and vice versa, e.g. a blind test between VR goggles and transparent ski goggles. It will be a long time before this succeeds, but one component is ultrahigh refresh rates, and another is measurement standards flexible enough that adjusting a variable lets you use "real life" as a valid lag measurement reference, not only a CRT. (Real life = mathematically infinite Hz and infinite frame rate, for all practical intents and purposes.)

The bottom line is that "real life" is a valid lag measurement reference. Sometimes we need that. The problem is that IDMS hides a lot of lag (which varies a lot between displays with identical IDMS numbers), and that prevents the IDMS lag measurement method from being used in all the lag-measurement applications we need.

Use Case #1: Two displays with identical IDMS numbers can swap lag rankings under different sync settings, making one display superior for a console game (one sync setting) but worse for a PC esports game (a different sync setting), or vice versa.

Conclusion #1: IDMS is an insufficient standard when professional gamers need to shop for a display for a specific game. This is due to the inseparable interaction/integration between GPU and display lag behaviors, which is de facto co-operative.

Use Case #2: Two displays with identical IDMS numbers can have different lag relative to real life when we're trying to emulate real life (VR or Holodeck applications).

Conclusion #2: IDMS is an insufficient standard when we need to compare against real life as the chosen lag reference.

There's even a #3, #4, or #5, but I think I've dropped enough microphones (slam dunk!) to hush this debate about the need for superior latency measurement standards/formulas. But there exist GPU interactions where "Display A has higher lag than Display B" at one setting becomes "Display B has higher lag than Display A" at a different GPU setting on the SAME display. Yes, GPU-side setting. Yes, SAME display. Inseparable interaction!

The boom of the billion-dollar esports industry and VR industry revealed massive shortcomings of current lag-measurement standards.

IDMS is a great standard. It is useful when it is Right Tool for Right Job.

But, unfortunately, it is merely one tool in a huge "display lag measurement" toolbox.

Blur Busters is often the Skunkworks or Area 51 of the future of displays, and so lag measurement standards are far behind Blur Busters.

Also, if any papers or research are modified because you read something on Blur Busters, we'd appreciate having my name (Mark Rejhon) and Blur Busters credited in any future lag papers. We're looking to increase our lag credentials over the long term, and we're happy to provide research for free in exchange for peer-review credit, much like I did for a lot of other things, like the pursuit camera.

A universal lag standard (metaphorically, the equivalent of a grand unifying theory of physics in importance for the latency-measuring universe) is sorely needed. The universal latency standard is intended to let you choose a preferred lag reference, and to be adjustable to match the majority of past lag measuring standards (SMTT, Leo Bodnar, IDMS, VESA, etc). That way, existing monitor reviewers can publish numbers backwards-compatible with their existing test methodologies, WHILE also publishing superior standardized numbers alongside. Otherwise, it becomes like the xkcd cartoon where a new standard just becomes one more competing standard, because it won't be adopted. To be the real standard, it has to be powerful and unifying, to wide critical acclaim (like other Blur Busters initiatives).

We have successfully found a formula that becomes a superset of nearly all past lag measurement standards combined (within knowable error margins, of course: e.g. the SMTT method is subject to camera sensor scanout artifacts as well as human-vision subjectivity).

We believe Blur Busters / TestUFO has enough brand recognition in the testing industry to trailblaze a popular new display lag standard that is compatible with everybody's lag methodologies (simply by tweaking the variables in the standard), while also converging into unified industry standards that allow long-term cross-comparison (making Site A lag data easier to compare to Site B lag data). Colorimetry finally achieved that (universal standards now consistently used by all testers), and Blur Busters aims to bring the same unification to the temporal domain.

One can easily continue to use the IDMS standard as a subset within the Blur Busters display lag testing standard initiative, simply by disclosing the lag stopwatch variables that are plugged into the Blur Busters display lag testing standard. So it doesn't have to replace IDMS; it can be a superset standard that helps improve latency understanding. Much like people still use Newtonian mathematics for things that aren't fast enough to generate relativity error margins, or simply stick to geometry for things that don't require complex calculus. My thinking is very left-field different, much like geometry-vs-physics, and that's how I innovate with Blur Busters.
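As a hypothetical illustration of "disclosing the lag stopwatch variables", here is a minimal sketch of a profile record. The field names and both example profiles are our own assumptions. The IDMS-like values only echo this post's description of IDMS (0-framebuffer-depth, VSYNC ON, GtG 50%) and are not taken from the IDMS document. No actual Blur Busters standard format is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LagProfile:
    """Hypothetical disclosure record for the variables behind a lag number."""
    name: str
    stopwatch_start: str    # event that starts the stopwatch
    stopwatch_stop: str     # photon threshold that stops it
    sync_mode: str
    framebuffer_depth: int
    reference: str          # what "zero lag" is compared against

    def disclosure(self) -> str:
        return (f"{self.name}: start={self.stopwatch_start}; stop={self.stopwatch_stop}; "
                f"sync={self.sync_mode}; buffer depth={self.framebuffer_depth}; "
                f"reference={self.reference}")


IDMS_LIKE = LagProfile("IDMS-like", "signal refresh start (assumed)", "GtG 50%",
                       "VSYNC ON", 0, "same video signal")
REAL_LIFE = LagProfile("Real-life reference", "analog gametime event", "first photon",
                       "any", 0, "infinite-Hz instant-scanout (real life)")

for profile in (IDMS_LIKE, REAL_LIFE):
    print(profile.disclosure())
```

Publishing such a record next to every lag number is what would let readers convert or compare results across sites, much like naming "sRGB" or "Rec.2020" next to a colorimetry measurement.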

Even competitors to Blur Busters products have adopted several Blur Busters ideas and trailblazing work (with our full blessing). So even the Blur Busters initiative for a unified-but-flexible latency measurement standard will quickly be copied by many competitors, and/or their methods will become selectable sub-profiles within the Blur Busters display lag measurement standard (profiles, much like how you select "Rec.2020", "sRGB", or "Adobe" for colorimetry). Thanks to such known standards, profiles are formula-convertible to each other (within limited circumstances), but today's lag measurement standards are like thousands of opaque numbers that cannot be compared across different websites, for open source devices and commercial devices alike. A proper lag standard with a lag-variables disclosure requirement also puts pressure on commercial devices to comply with the standards, and forces full disclosure of latency measurement methods by commercial devices and commercial reviewers, too. Part of the intent of the new Blur Busters display lag standard initiative is disclosure honesty, by both tester manufacturers and display reviewers.

To some brains, it feels like Greek/Chinese/calculus. To me, it has turned into a simplified geometry problem. The industry needs it badly, and I'm happy to help lead the way.

If there are researchers and university students here, I welcome participation in a collaboration on a more universal latency standard that allows retargeting the latency reference: mark[at]blurbusters.com ....

The IDMS work is fascinating and extremely important. We just need to be cognizant of the limitations of the latency-measurement portion of the IDMS standard, at least as an acknowledgement of known error margins.