Wow, that’s a lot of information. Thanks. I’ve been trying to target latency as much as possible because I’ve been thinking about playing more competitively, since I’ve always been a fairly top-tier COD player.

Alpha wrote: ↑02 Dec 2020, 07:22

TCP and network optimizations are by and large some of the easiest and best things a person can do for a competitive edge. I do a lot of professional tournaments, so competing for money makes it a big deal, which is why I have a commercial-grade network. As for other optimizations, I won't sacrifice image quality down to potato settings like some will, but all my OS deployments are custom built by yours truly using Microsoft's framework. These are essentially stripped of everything with the exception of security protocols. However, until we see something more serious, like an AI-based solution that doesn't do signature scanning, I live with Defender. Hoping to see some commercial solutions roll out soon. I have my CEH, so I'm delicately paranoid about the risk associated with those doors being open. It's unreal how easy it is to hit systems and own them. I can handle the security at the firewall level, but running deep packet inspection, geo filtering, and other intrusion prevention methods costs the microseconds we fight for, and that can cost (and has cost) me big money. I'm a career IT guy by trade, though this year I have seriously considered retiring due to some dumb luck and gaming (more dumb luck, but I am pretty fast). I piggyback off some of the big brains out in the world, especially here on these boards of all places, because there is a legit collection of awesome people and Chief has masterfully managed to keep the community amazing. I implement whatever changes make sense, or where I can tell a difference by feel even if I can't quantify it with a test. It could all be complete BS; maybe some light reflected off something hits the eye just right without even being noticed, helps focus or whatever, and bam, fragging out lol. No idea here, truth be told.

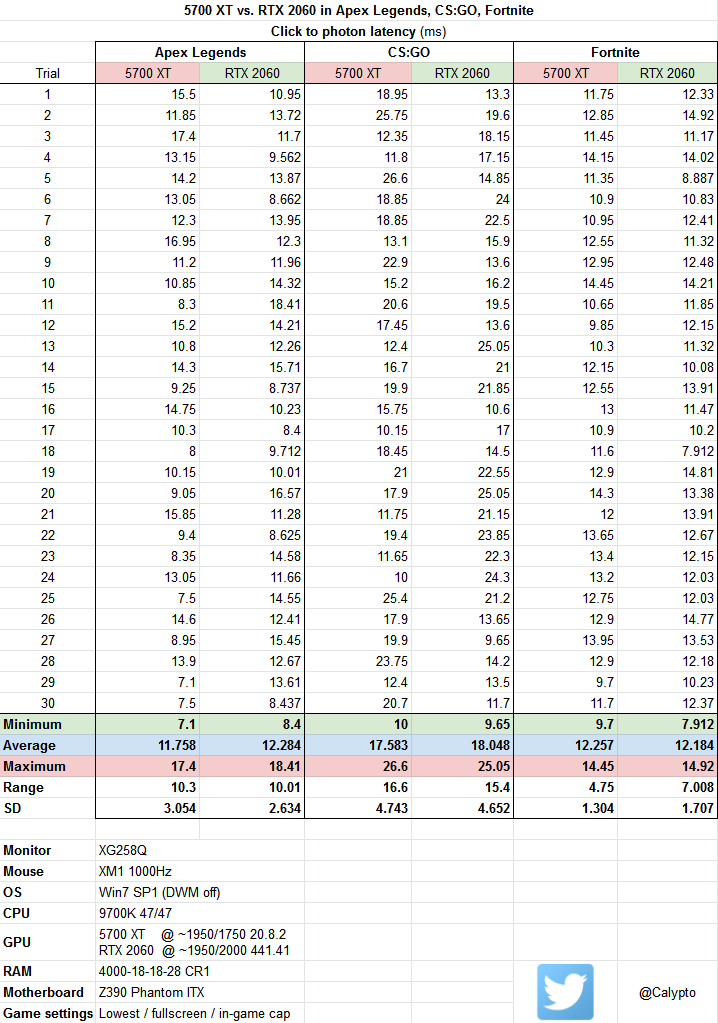

The issue with AMD GPUs is simply that they won't get the frames (excluding the 6000 series, which may make sense with its rasterization muscle at 1080p). In addition, when you're sponsored, you're sponsored. I wouldn't run with a mouse that isn't what I prefer. It probably wouldn't make a difference, but it was no good in my hands. I don't need the income and I'm already established career-wise, but if you're living in a team house and Logitech's bringing G Pro X Wireless Superlights, that's what you're fragging with (just ordered mine while I wait for Razer to drop the 8000 Hz mouse). Chief or someone would have to explain why something feels "ahead", because I clearly don't fully understand it. On the 5700 system, even with an easily 60+ fps difference (that's a moving target), it's almost like being ahead of the 2080 Ti face to face. From network tests I know it's not packets landing first, so it's something else. I am hoping to hear some reasons for this. My theory was that the pipeline was organized in a way that prioritizes inputs. Literally no idea if that's even possible.

I really want to get my hands on the 6000 series for just a few minutes. The 6900XT hits in a few days, and if the 3090 is faster that'd be my choice, but if the 6900XT feels like the 5700 I'd go that direction and buy a 3080 Ti as a backup or throw it in my other machine. I'd do this even if the 6900XT was a bit slower. No issues with the 5700, but it'll take a while to build confidence in AMD's GPU drivers.

My experience with Boost is not so good. Maybe my brain is fired up at times and less so at others, but with Boost enabled it can hurt or feel OK depending on the day. I turn it off now and stick to the older recommended NCP settings.

For the Windows deployments, are there any pre-made ones you could recommend with everything stripped out of them?

What do you mean by “boost”? I saw another one of your comments on the 360 Hz monitor review thread. You were saying your reaction time test was 20 ms slower with Reflex. What do you mean by that? I thought Reflex was something that had to be enabled on a per-game basis in the game’s settings. There’s also ultra low latency mode in the control panel.

I actually have also been looking at all of these optimizations because when I first got my 240 Hz AW2518H, my reaction time tests were around 145 ms and now I struggle to get much under 160 ms. I’m not sure if it is something with Windows or Chrome or just me. I’ve tried using a C++ version running without full screen optimizations (no DWM) and I can easily get in the 130 ms range.
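
One way to tell whether a 145 ms versus 160 ms gap is real or just run-to-run noise is to compare the two sets of trials statistically. Below is a rough Python sketch, not a definitive method: the sample numbers are made up purely for illustration, and the helper names `summarize` and `difference_is_clear` are hypothetical. It treats the gap as meaningful only when it exceeds about two combined standard errors, which is a crude rule of thumb.

```python
import statistics


def summarize(samples_ms):
    """Return (mean, standard error of the mean) for reaction times in ms."""
    mean = statistics.mean(samples_ms)
    sem = statistics.stdev(samples_ms) / len(samples_ms) ** 0.5
    return mean, sem


def difference_is_clear(before_ms, after_ms, z=2.0):
    """Crude check: is the gap between means larger than z combined standard errors?"""
    mean_b, sem_b = summarize(before_ms)
    mean_a, sem_a = summarize(after_ms)
    combined = (sem_b ** 2 + sem_a ** 2) ** 0.5
    return abs(mean_a - mean_b) > z * combined


if __name__ == "__main__":
    # Made-up numbers for illustration only, not real measurements.
    before = [144, 147, 143, 149, 145, 146, 142, 148]
    after = [158, 163, 160, 157, 162, 161, 159, 164]
    print(summarize(before), summarize(after))
    print(difference_is_clear(before, after))
```

With only a handful of trials per setting the standard error is large, so it probably takes a few dozen attempts on each configuration before a 10 to 15 ms gap can be trusted.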

Do you recommend any of the popular changes to the platform clock and disabledynamictick? Basically, any of the settings you can find in bcdedit /enum. Also, is there any tool you use to see if the changes you’re making are actually doing anything? I’ve used LatencyMon, but I know DPC latency is not directly related to input lag. Also, my DPC latency is usually 7-15 us. I managed to get it down a lot by switching GPU and audio drivers to MSI mode.
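
For checking whether timer-related tweaks such as the platform clock or disabledynamictick settings change anything measurable, one simple approach is to record how late the OS wakes a sleeping thread before and after the change. The sketch below is only a proxy under stated assumptions: it measures wake-up overshoot for short sleeps, not input lag, and the function names `sleep_overshoot_us` and `report` are hypothetical. Python's own overhead adds some noise, so only compare runs taken the same way on the same machine.

```python
import statistics
import time


def sleep_overshoot_us(requested_s=0.001, runs=200):
    """Sleep repeatedly and record how far each wake-up missed the target, in microseconds."""
    overshoots = []
    for _ in range(runs):
        start = time.perf_counter()
        time.sleep(requested_s)
        elapsed = time.perf_counter() - start
        overshoots.append((elapsed - requested_s) * 1e6)
    return overshoots


def report(overshoots):
    """Summarize overshoot samples as median, approximate 99th percentile, and max."""
    ordered = sorted(overshoots)
    return {
        "median_us": statistics.median(ordered),
        "p99_us": ordered[int(0.99 * (len(ordered) - 1))],
        "max_us": ordered[-1],
    }


if __name__ == "__main__":
    print(report(sleep_overshoot_us()))
```

Running it several times before a change and several times after (with a reboot in between for bcdedit changes) gives a before/after comparison; if the median and tail values do not move, the tweak is probably not doing much for timer behavior on that system.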