Hi guys,

After some research into how low latency mode (pre-rendered frames) works, I'm a bit confused on one last point.

LLM = On is like max pre-rendered frames = 1, and LLM = Ultra is like real-time pre-rendering, so effectively 0: no frame is queued in the buffer and the CPU sends each frame directly to the GPU. But if the CPU isn't fast enough to deliver a frame to the GPU "on time," the result is stutter, because no frame is queued in the buffer (or maybe Ultra allows a maximum of 1 pre-rendered frame when the CPU isn't fast enough).

I've got 2 scenarios. The first is GTA 5: I play with V-SYNC on at 60Hz (RTSS FPS cap at 61 for input lag). In this case I'm never GPU- or CPU-bound; GPU usage is around 70% and CPU usage is around 20%. So in this case, could I use LLM = Ultra as long as my CPU isn't maxed out (stutter)? And would LLM = On (max pre-rendered frames = 1) result in more input lag (1 more frame queued vs. Ultra), but better performance if the CPU were maxed out?

The other case is CS:GO: I play with uncapped FPS, the CPU is obviously the bottleneck, FPS is around 250-300, and the GPU is never maxed out.

So in this case, LLM = Ultra shouldn't be good, right? The CPU is maxed out, so it should stutter, if I'm right.

Should I use LLM = On in this case, then?

Also, when LLM = Off, there is no max pre-rendered frames limit; does this mean the game itself chooses how many frames are queued?

Thanks.

LLM On vs ultra

Re: LLM On vs ultra

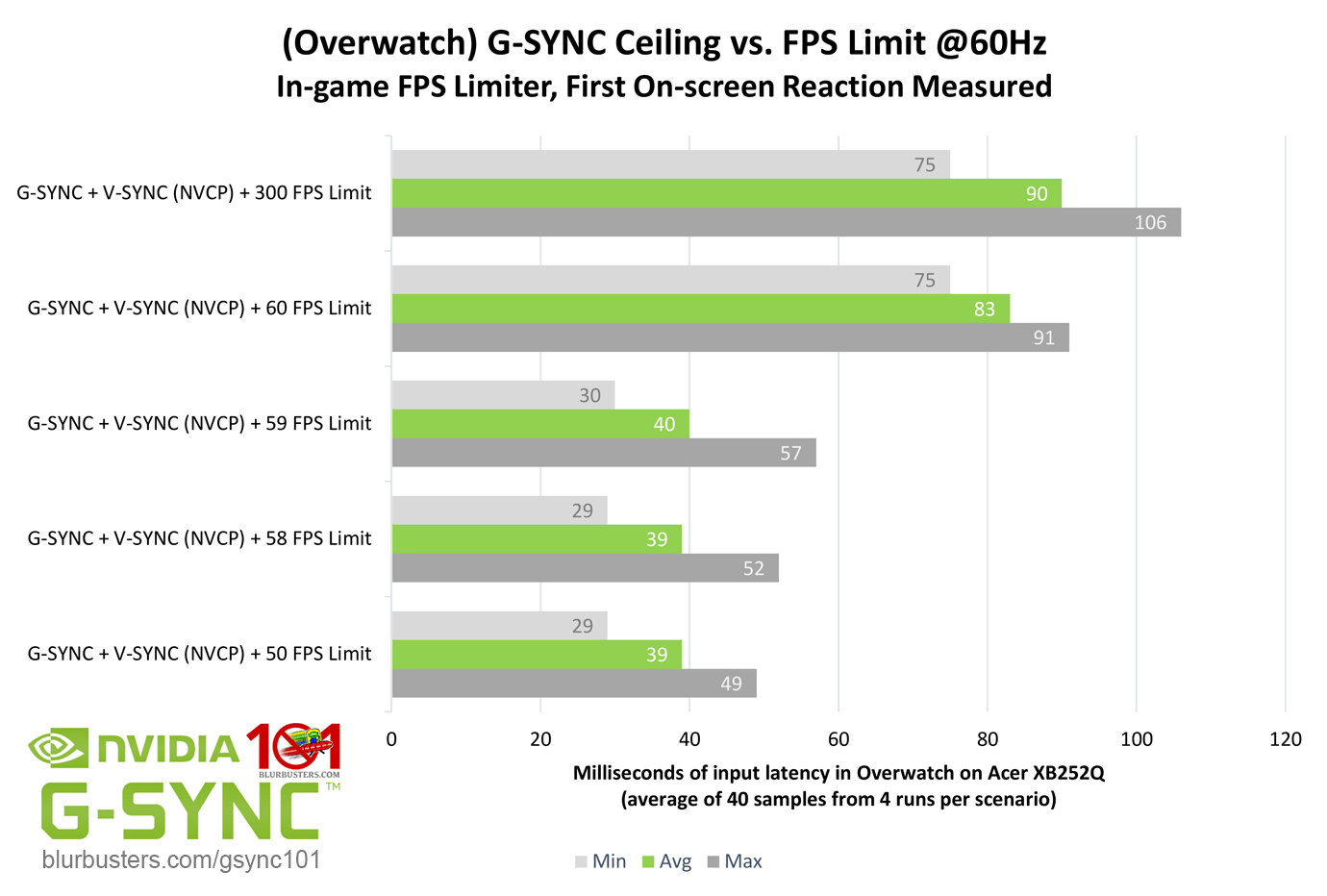

I'm not going to bother trying to explain pre-rendered frames to you further, as I think I confused it more than I clarified it for you in the other thread, but I will point out here that a 61 FPS cap is not enough to prevent V-SYNC input lag @60Hz. The below chart is from my G-SYNC article, but still applies to my specific point, as G-SYNC + V-SYNC behaves the same way as standard V-SYNC with framerates above the refresh rate:

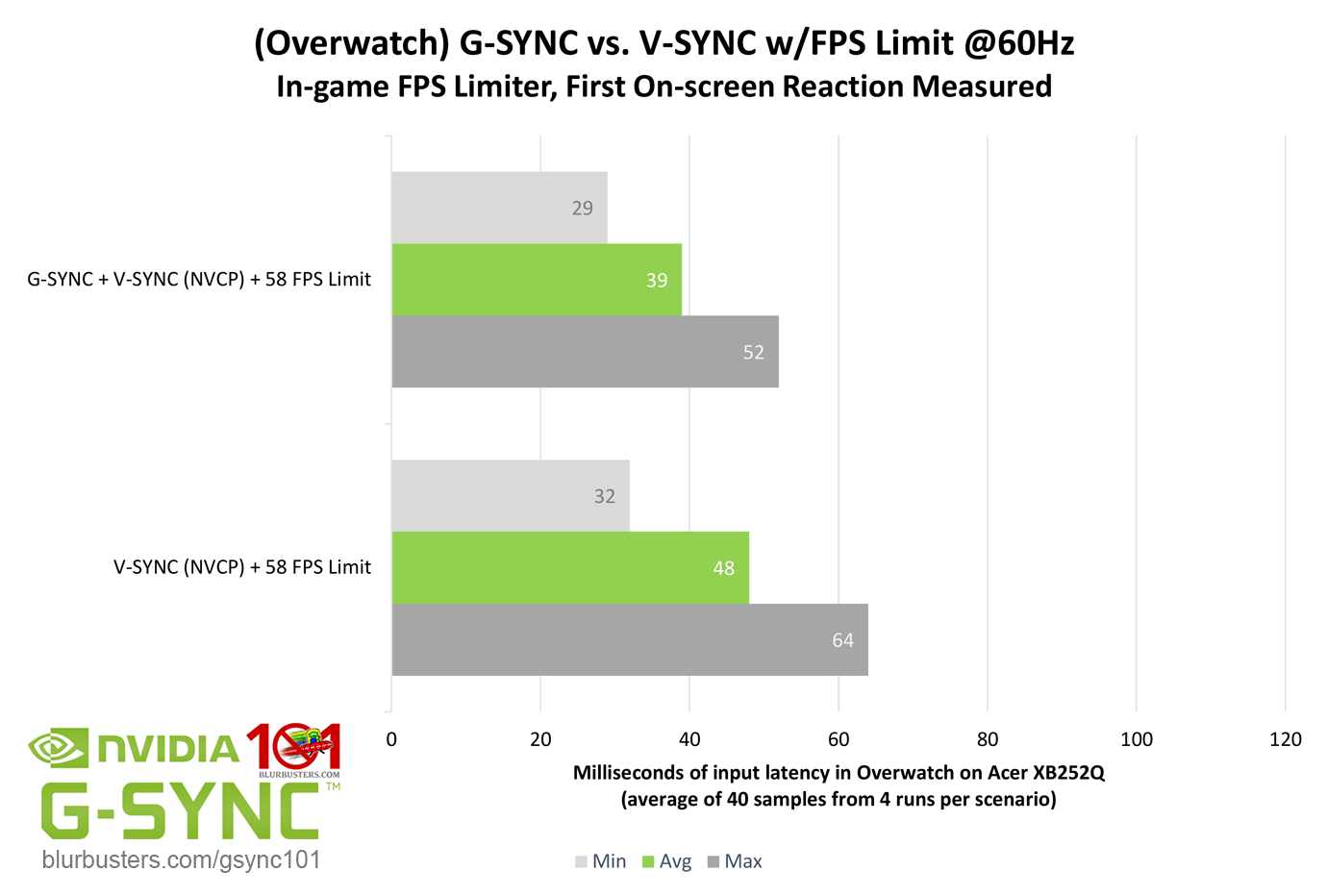

Now, since the above chart is for G-SYNC, it's still going to be lower lag when capped below the refresh rate than when V-SYNC is capped below the refresh rate:

But the bottom-line is, you don't want your FPS cap above the refresh rate when using V-SYNC, you want it below for the lowest input lag possible.

For low lag V-SYNC methods that are not Scanline Sync, refer to this article:

https://blurbusters.com/howto-low-lag-vsync-on/

(jorimt: /jor-uhm-tee/)

Author: Blur Busters "G-SYNC 101" Series

Displays: ASUS PG27AQN, LG 48CX VR: Beyond, Quest 3, Reverb G2, Index OS: Windows 11 Pro Case: Fractal Design Torrent PSU: Seasonic PRIME TX-1000 MB: ASUS Z790 Hero CPU: Intel i9-13900k w/Noctua NH-U12A GPU: GIGABYTE RTX 4090 GAMING OC RAM: 32GB G.SKILL Trident Z5 DDR5 6400MHz CL32 SSDs: 2TB WD_BLACK SN850 (OS), 4TB WD_BLACK SN850X (Games) Keyboards: Wooting 60HE, Logitech G915 TKL Mice: Razer Viper Mini SE, Razer Viper 8kHz Sound: Creative Sound Blaster Katana V2 (speakers/amp/DAC), AFUL Performer 8 (IEMs)


Re: LLM On vs ultra

jorimt wrote: ↑12 Apr 2020, 09:18
"But the bottom-line is, you don't want your FPS cap above the refresh rate when using V-SYNC, you want it below for the lowest input lag possible."

Thanks again. For sure, capping under the refresh rate gives the best input lag, but I can't cap under it because I use adaptive V-SYNC, so if FPS drops to 58, V-SYNC turns off and I get tearing.

I just wanted to know which LLM mode is better for input lag in the specific scenarios I described.

I will stay with LLM = On; input lag is pretty decent IMO.

As for the low lag V-SYNC method, that's what I used before Scanline Sync was released. Blur Busters says to cap 0.01 under the real refresh rate, another guy said 0.006. I've tried both but I still feel the input lag, and trying it with Fast Sync always resulted in stutter.

Re: LLM On vs ultra

Yes, the game sets the queue size itself with LLM off, but most games don't have an unlimited queue, they instead usually default to a 0-3 pre-rendered frames queue. This means the pre-rendered frames queue could have anywhere from 0 to 3 frames queued at any time. It's not a static "1" or "3," it changes at any given point from a variety of performance factors.

If your goal is to reduce input lag in that specific scenario, you're looking to the wrong setting to achieve it.

To put it in perspective, simply switching Nvidia adaptive V-SYNC to normal V-SYNC, and capping your FPS slightly below the refresh rate can reduce your current input lag levels by up to 100ms @60Hz, whereas LLM can only reduce input lag in similar situations by up to 16.6ms.
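To put those two figures on the same scale, here is a quick sketch (Python; the helper names are made up for illustration, not taken from any tool) that converts between frames and milliseconds at a given refresh rate:

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one refresh cycle in milliseconds."""
    return 1000.0 / refresh_hz


def ms_to_frames(latency_ms: float, refresh_hz: float) -> float:
    """How many refresh cycles a given latency spans."""
    return latency_ms * refresh_hz / 1000.0


print(f"{frame_time_ms(60):.2f} ms per frame at 60Hz")
print(f"100 ms is about {ms_to_frames(100, 60):.1f} frames at 60Hz")
```

In other words, the 16.6ms figure is one refresh cycle at 60Hz, while 100ms spans roughly six of them.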

And since you're not GPU-bound in the GTA scenario, LLM won't reduce input lag at all.

You have a lot of your individual facts straight, but you're applying some of them to the wrong situations. For instance, V-SYNC is causing most of your input lag in that GTA scenario, not your LLM settings, and, as for stutter, it can be caused by more than different LLM settings. For all you know, the stutter could be caused by disk access from background asset loading (and so on).

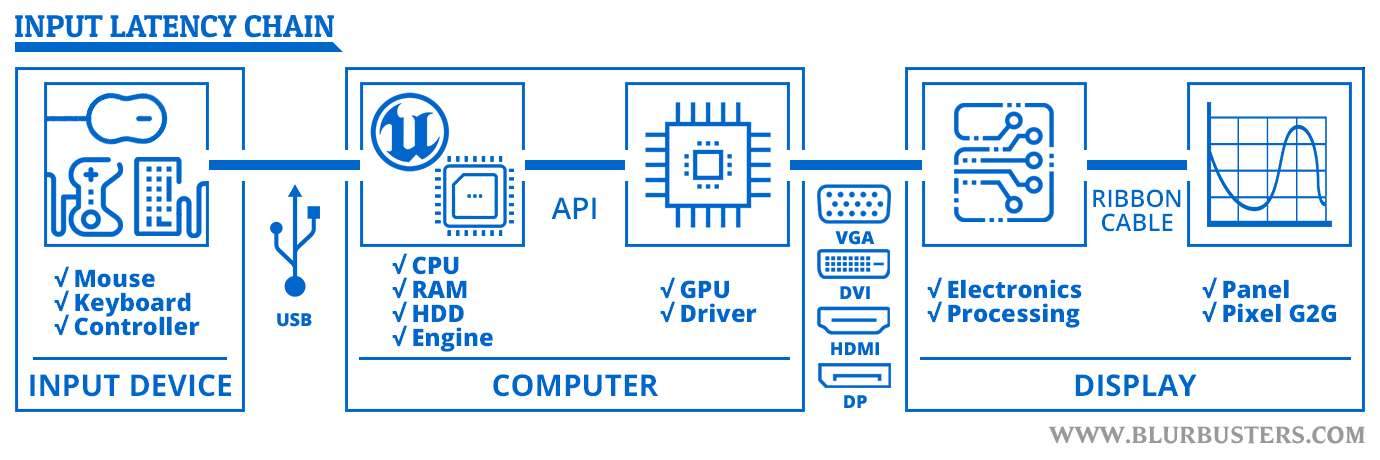

The pre-rendered frames queue is only a small part of a much bigger input latency chain:

(jorimt: /jor-uhm-tee/)


Re: LLM On vs ultra

jorimt wrote: ↑12 Apr 2020, 12:16
"The pre-rendered frames queue is only a small part of a much bigger input latency chain:"

All right, very useful picture, this is just what I need! Thanks!

For GTA 5, I use the MSI Afterburner OSD to monitor frametime as a graph, and that's where I've seen frametime fluctuation while the framerate doesn't drop, when pre-rendered frames is higher than 1 and the cap is higher than 61 FPS. But as you said, that's normal behavior with unlocked FPS and at least 2 frames in the buffer. I haven't noticed stutter, just higher input lag.

I'm curious: when you cap to 58 with V-SYNC, is there any issue with smoothness?

Re: LLM On vs ultra

That was just to compare V-SYNC directly against G-SYNC. The 58 FPS limit @60Hz only applies to G-SYNC scenarios.

For normal standalone V-SYNC, ideally, you want to cap your FPS just slightly below the refresh rate at the decimal-level with RTSS (or use Scanline Sync instead, where possible).

See this article for details:

https://blurbusters.com/howto-low-lag-vsync-on/

Relevant instructions from said article below:

We found that only one frame-rate capping software, called RTSS (RivaTuner Statistics Server) was able to reduce VSYNC ON lag without adding visible stutters. Other frame rate limiter software (in-game, NVInspector, etc) were not as accurate.

Download and install RTSS.

Go to TestUFO Refresh Rate and measure your exact refresh rate to at least 3 decimal digits.

Subtract 0.01 from this number.

Use that as your RTSS frame rate cap for that specific game.

For fractional caps, you need to edit two lines in the RTSS config file. Remove the decimal point for the “Limit” line, and configure a divisor in the “LimitDenominator” line. Example of 59.935fps cap:

[Framerate]

Limit=59935

LimitDenominator=1000

IMPORTANT: Replace “59935” with TestUFO Refresh Rate, subtract 0.01, and remove the decimal point.
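As a rough illustration of the arithmetic in those steps, the sketch below (Python; the function name and the example measurement of 59.945Hz are assumptions, only the `Limit` and `LimitDenominator` keys come from the quoted article) derives the two config values from a measured refresh rate. It uses `Decimal` so that subtracting 0.01 does not pick up floating-point noise.

```python
from decimal import Decimal


def rtss_fractional_cap(measured_hz: str, offset: str = "0.01",
                        denominator: int = 1000) -> tuple[int, int]:
    """Return (Limit, LimitDenominator) for a cap of measured_hz - offset.

    measured_hz is passed as a string (e.g. the TestUFO reading) so the
    decimal digits are preserved exactly.
    """
    cap = Decimal(measured_hz) - Decimal(offset)
    limit = int((cap * denominator).to_integral_value())
    return limit, denominator


# Hypothetical TestUFO reading of 59.945Hz, giving a 59.935 FPS cap.
limit, denom = rtss_fractional_cap("59.945")
print("[Framerate]")
print(f"Limit={limit}")
print(f"LimitDenominator={denom}")
```

With the assumed 59.945Hz reading this prints the same `Limit=59935` and `LimitDenominator=1000` values as the article's example.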

(jorimt: /jor-uhm-tee/)


Re: LLM On vs ultra

jorimt wrote: ↑12 Apr 2020, 14:02
"For normal standalone V-SYNC, ideally, you want to cap your FPS just slightly below the refresh rate at the decimal-level with RTSS (or use Scanline Sync instead, where possible)."

Thanks again for all your clarifications. I used the low lag V-SYNC method before Scanline Sync came out, but now, when input lag is my priority, I use Scanline Sync. For all solo games, LLM = On seems a good alternative.

Re: LLM On vs ultra

I think the author's main point is to know the overhead of Low Latency Mode on Ultra in scenarios where it is not needed (bottlenecked by the limiter or the CPU). I didn't find any significant difference in my tests, so I just leave it on Ultra in all cases.

Some people claim it adds significant input lag (many ms), but their methodology is not reliable in my opinion, as they usually test input lag using events that depend on tickrate and network, such as strafing or gunfire, instead of instantaneous engine events like camera angle. Those tickrates are usually a bottleneck (7.8ms for a high quality 128 tickrate) and have other side effects. You could see a huge difference in response between a cap of 127.5 and a cap of 128.5, depending on how the engine handles cmd throttling.

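For reference, the 7.8ms figure is just the length of one tick at 128 tickrate. The small sketch below (Python; the function name is made up for illustration) also compares the frame interval of the two caps mentioned against that tick length. What the engine does with the difference is not modeled here.

```python
def interval_ms(rate_hz: float) -> float:
    """Length of one cycle (tick or frame) in milliseconds."""
    return 1000.0 / rate_hz


tick = interval_ms(128)
for cap in (127.5, 128.5):
    frame = interval_ms(cap)
    relation = "longer" if frame > tick else "shorter"
    print(f"{cap} FPS cap: {frame:.3f} ms per frame, "
          f"{relation} than the {tick:.4f} ms tick")
```

A 127.5 FPS cap makes each frame slightly longer than a tick and a 128.5 FPS cap slightly shorter, which is the kind of boundary where tick-dependent measurements could shift.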

Re: LLM On vs ultra

andrelip wrote: ↑13 Apr 2020, 04:27
"I didn't find any significant difference on my tests so I just leave it on ultra on all cases."

Personally, I didn't notice any input lag benefit from LLM = Ultra compared to LLM = On. What's more, in my scenario the CPU never bottlenecks because frames are limited, and the GPU is never maxed out either, so I don't see the point of using LLM = Ultra over LLM = On.

And I don't see how LLM = Ultra can be useful in a CPU-bound scenario. I mean, LLM = Ultra = 0 pre-rendered frames, so no buffer, which should be the heaviest on the CPU. So if you're already CPU-bottlenecked and use LLM = Ultra, hmmm....

On the other hand, I don't understand why LLM = Ultra has to be used in a GPU-bound scenario. Why couldn't it work with low GPU usage? Does low GPU usage mean it's CPU-limited, or framerate-limited? In that case, does LLM = Ultra have to be used with unlocked FPS? Depending on the game, uncapped FPS will make it GPU-bound, but in CS:GO, for example, uncapped FPS gives a CPU bottleneck, so that's not good for LLM = Ultra.

Sorry for all my odd questions. This has become a bit of an obsession for me; I really want to know how it works from A to Z.

Re: LLM On vs ultra

bapt337 wrote: ↑13 Apr 2020, 05:09
"On the other hand, I don't understand why LLM = Ultra has to be used in a GPU-bound scenario. Why couldn't it work with low GPU usage?"

You're continuing to complicate it. LLM can reduce input lag, and typically by only 1 frame, when you're GPU-bound. When you're not, it has little to no effect on input lag. That said, it should be safe to leave on when you're not GPU-bound, just don't expect it to do anything for input lag when you aren't GPU-bound.

Also, the pre-rendered frames queue and LLM aren't always the same thing. The pre-rendered frames queue is always present, it's just not always filled. LLM only controls how much the PRF queue can be filled, and only in certain situations (the PRF queue simply stays filled more when the GPU is maxed, because the CPU has to wait longer for the GPU to be ready for the next frame).

And believe it or not, even some DX11 and DX9 games don't support LLM settings and use their own PRF values (many of which are already at "1"). And no, there's no easy way to tell.

And again, if the pre-rendered frames queue is at "1," it's not a constant "1" frame, it's anywhere from 0-1 frames, or 0-3 frames, or 0-5 frames; the "x" number is the "max" number PRF can be, not the constant. That's why the setting used to be called "Maximum pre-rendered frames."
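To make the "max, not constant" point a bit more concrete, here is a deliberately simplified toy model (Python). Everything in it is an assumption for illustration: the function name, the fixed CPU and GPU frame times, and the way the queue cap is enforced. It is not how the NVIDIA driver works internally, and it does not model Ultra's just-in-time scheduling, V-SYNC, or the display. It only shows why a queue cap matters when the GPU is the slower stage and stops mattering when the CPU is.

```python
def steady_state_latency_ms(cpu_ms: float, gpu_ms: float,
                            max_queued: int, frames: int = 200) -> float:
    """Toy pipeline: time from the CPU starting a frame to the GPU finishing it.

    Assumes fixed per-frame CPU and GPU costs and returns the latency of the
    last simulated frame, once the pipeline has settled.
    """
    if max_queued < 1:
        raise ValueError("max_queued must be at least 1 in this toy model")
    cpu_done, gpu_start, gpu_done = [], [], []
    latency = 0.0
    for i in range(frames):
        # The CPU prepares one frame at a time.
        start = cpu_done[i - 1] if i > 0 else 0.0
        # Queue cap: frame i may not begin until frame (i - max_queued)
        # has left the queue and started rendering on the GPU.
        if i >= max_queued:
            start = max(start, gpu_start[i - max_queued])
        done = start + cpu_ms
        # The GPU renders frames in order, one at a time.
        g_start = max(done, gpu_done[i - 1] if i > 0 else 0.0)
        g_done = g_start + gpu_ms
        cpu_done.append(done)
        gpu_start.append(g_start)
        gpu_done.append(g_done)
        latency = g_done - start
    return latency


for label, cpu_ms, gpu_ms in [("GPU-bound", 5.0, 16.0), ("CPU-bound", 16.0, 5.0)]:
    for max_queued in (1, 3):
        ms = steady_state_latency_ms(cpu_ms, gpu_ms, max_queued)
        print(f"{label}, max queued {max_queued}: {ms:.1f} ms")
```

In this toy model the GPU-bound case drops from 64 ms with a cap of 3 to 32 ms with a cap of 1, because the queue is always full and each extra slot costs one GPU frame time. The CPU-bound case stays at 21 ms with either cap, because the queue never fills. The absolute numbers mean nothing; only the pattern is the point.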

Anyway, we keep talking about input lag here, but the pre-rendered frames queue actually has more to do with how high or low of an average framerate the CPU can output than it does with input lag, that's why setting PRF values too low can sometimes reduce average framerate. Just try any modern Battlefield game, and then set its "RenderAheadLimit" value to "1"; you'll see how an actual max pre-rendered frame of "1" can impact average framerate.

Good luck, because it's the hardest setting to understand, the hardest setting to test for, and the hardest setting to see the effects of, especially on the user-side. And even if you can, in situations where your settings can actually make a difference, you're more likely to see it affect average framerate first, as opposed to input lag directly.

I'll close with a couple other sources on LLM:

https://www.nvidia.com/en-us/geforce/ne ... dy-driver/

The NVIDIA Control Panel has -- for over 10 years -- enabled GeForce gamers to adjust the “Maximum Pre-Rendered Frames”, the number of frames buffered in the render queue. By reducing the number of frames in the render queue, new frames are sent to your GPU sooner, reducing latency and improving responsiveness.

With the release of our Gamescom Game Ready Driver, we’re introducing a new Ultra-Low Latency Mode that enables ‘just in time’ frame scheduling, submitting frames to be rendered just before the GPU needs them. This further reduces latency by up to 33%.

Low Latency modes have the most impact when your game is GPU bound, and framerates are between 60 and 100 FPS, enabling you to get the responsiveness of high-framerate gaming without having to decrease graphical fidelity.

And:

https://www.howtogeek.com/437761/how-to ... -graphics/

Graphics engines queue frames to be rendered by the GPU, the GPU renders them, and then they’re displayed on your PC. As NVIDIA explains, this feature builds on the “Maximum Pre-Rendered Frames” feature that’s been found in the NVIDIA Control Panel for over a decade. That allowed you to keep the number of frames in the render queue down.

With “Ultra-Low Latency” mode, frames are submitted into the render queue just before the GPU needs them. This is “just in time frame scheduling,” as NVIDIA calls it. NVIDIA says it will “further [reduce] latency by up to 33%” over just using the Maximum Pre-Rendered Frames option.

This works with all GPUs. However, it only works with DirectX 9 and DirectX 11 games. In DirectX 12 and Vulkan games, “the game decides when to queue the frame” and the NVIDIA graphics drivers have no control over this.

Here’s when NVIDIA says you might want to use this setting:

“Low Latency modes have the most impact when your game is GPU bound, and framerates are between 60 and 100 FPS, enabling you to get the responsiveness of high-framerate gaming without having to decrease graphical fidelity.”

In other words, if a game is CPU bound (limited by your CPU resources instead of your GPU) or you have very high or very low FPS, this won’t help too much. If you have input latency in games—mouse lag, for example—that’s often simply a result of low frames per second (FPS) and this setting won’t solve that problem.

Warning: This will potentially reduce your FPS. This mode is off by default, which NVIDIA says leads to “maximum render throughput.” For most people most of the time, that’s a better option. But, for competitive multiplayer gaming, you’ll want all the tiny edges you can get—and that includes lower latency.

(jorimt: /jor-uhm-tee/)
