My monitor, an Acer XF252Q, has a Low Input Lag option. However, this option is greyed out when FreeSync is enabled. So I was wondering: does that mean it is disabled or enabled when using FreeSync? I believe it should be enabled, but when it is greyed out, the menu says the option is Off, which I find odd.

On my AOC C24G1, the same option exists, but it is instead always greyed out (and showing On) unless I'm using a custom resolution.

Low-Input-Lag mode Monitor?

- RedCloudFuneral

- Posts: 40

- Joined: 09 May 2020, 00:23

Re: Low-Input-Lag mode Monitor?

My ViewSonic is the same: greyed out when using FreeSync. It makes sense, because VRR adds some input lag; if you're prioritizing input lag above all else, you will likely want FreeSync off.

I also lose dynamic contrast, the blue-light filter, and other features when using the low lag mode on my monitor. I'm thinking the low lag is accomplished by switching off parts of the circuitry, trading features for a more direct processing route. Do you notice anything else disabled on yours?

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

Re: Low-Input-Lag mode Monitor?

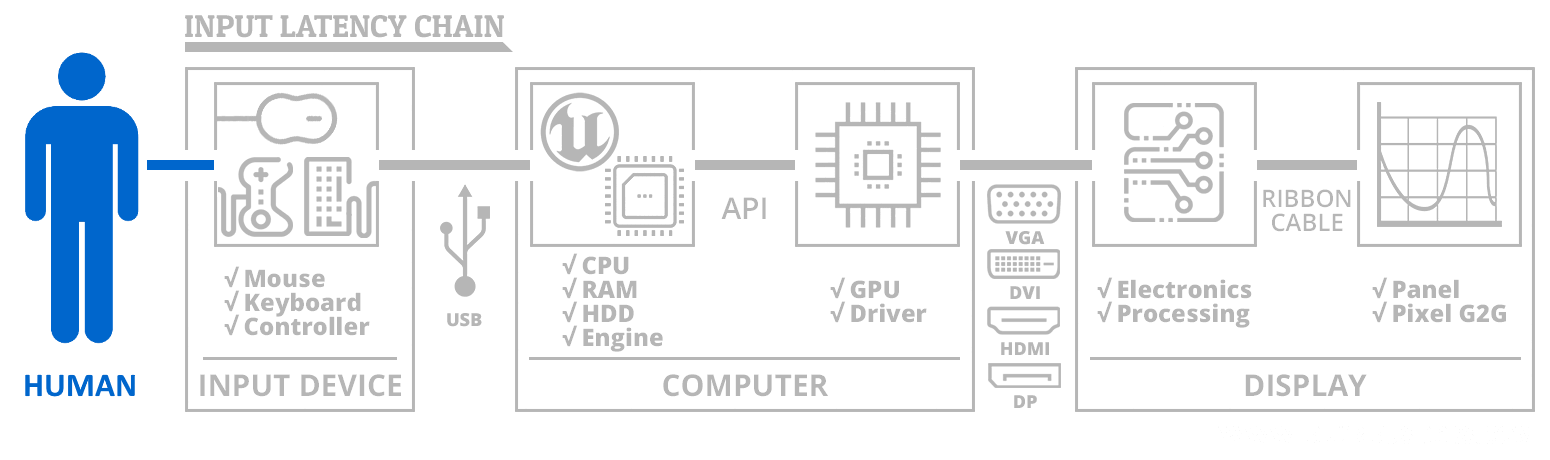

Firstly, the lag chain is complex.RedCloudFuneral wrote: ↑13 May 2020, 13:17My Viewsonic is the same, greyed out when using Freesync. It makes sense because VRR adds some input lag, if you're prioritizing input lag above all else you will likely want Freesync off.

I also lose dynamic contrast, blue-light filter, and other features using the low lag mode with my monitor. I'm thinking the low lag is accomplished by switching off parts of the circuitry trading features for a more direct processing route. Do you notice anything else disabled on yours?

But that's a grossly simplified diagram. It doesn't dive into even subtler nuances of lag (scanout lag versus absolute lag). One needs to understand the purpose of the "Low Lag" setting, which users often misunderstand.

RedCloudFuneral wrote (13 May 2020, 13:17):

> My ViewSonic is the same: greyed out when using FreeSync. It makes sense, because VRR adds some input lag; if you're prioritizing input lag above all else, you will likely want FreeSync off.

The answer to this is more nuanced.

In reality, FreeSync/G-SYNC forces the "low-lag" setting permanently ON (not OFF, as most people think).

Many monitors go into their low-lag mode when FreeSync/G-SYNC is enabled: basically, realtime sync between cable scanout and panel scanout.

The only claimed "lag" of FreeSync is simply the mandatory scanout latency, but FreeSync has lower lag than all other sync technologies (VSYNC ON, Fast Sync, Enhanced Sync), with the sole exception of sync technologies that show tearlines, such as VSYNC OFF (thanks to realtime splicing of frameslices mid-scanout).

On most panels, FreeSync automatically turns "low lag" on. That's why RTINGS sometimes measures equal lag for FreeSync ON and OFF.

But the "mandatory scanout latency" (of ALL tear-free sync technologies) is a different issue from "absolute latency from processing latency" (which VSYNC OFF is still prone to).

Apples vs oranges.

For those who do not understand "scanout latency", see this high-speed video of a screen flashing 4 frames. Not all pixels on an LCD refresh at the same time:

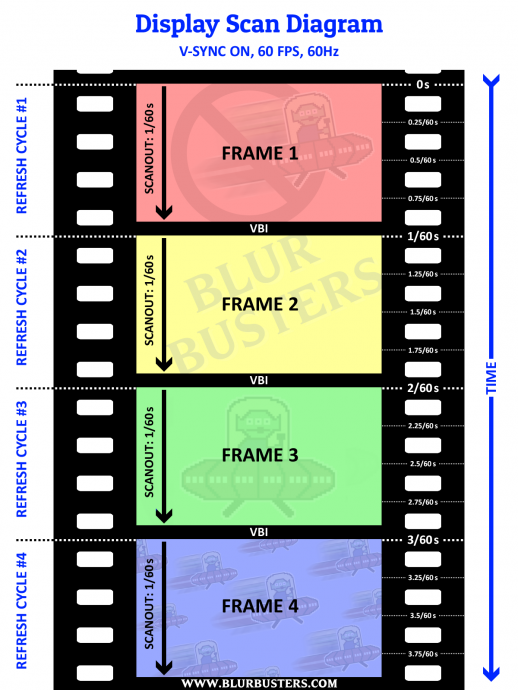

For VSYNC ON 60Hz, this can be graphed out as:

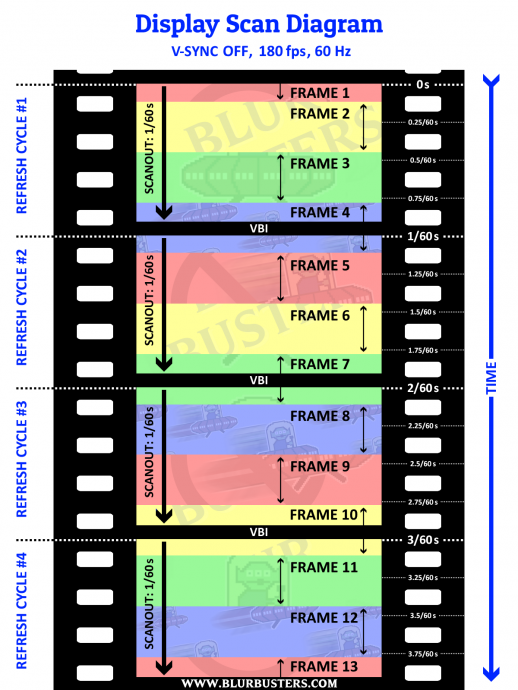

All sync technologies have to wait for the VBI and then wait for scanout. The only way to bypass this is VSYNC OFF.

In VSYNC OFF, frameslices can interrupt the scanout, being real-time spliced mid-scanout into the frame. You have tearlines, but you have lower lag because you've eliminated the "wait for VBI" (wait for VSYNC).

Now, enabling a monitor's "Low Lag Mode" is often an altogether separate issue from the sync technology on the PC side (VSYNC ON versus VSYNC OFF, or FreeSync). Although VRR means co-operation between the computer and the monitor (waiting for the PC to deliver refresh cycles, rather than following a fixed refresh cycle schedule), it's all equally subject to scanout latency.

What this means is that FreeSync/G-SYNC already automatically turns on low-lag mode on most monitors (and LOCKS it ON)

The "FreeSync lag" you hear about is simply the scanout latency, which is unrelated to the "Low Lag" feature of the monitor.

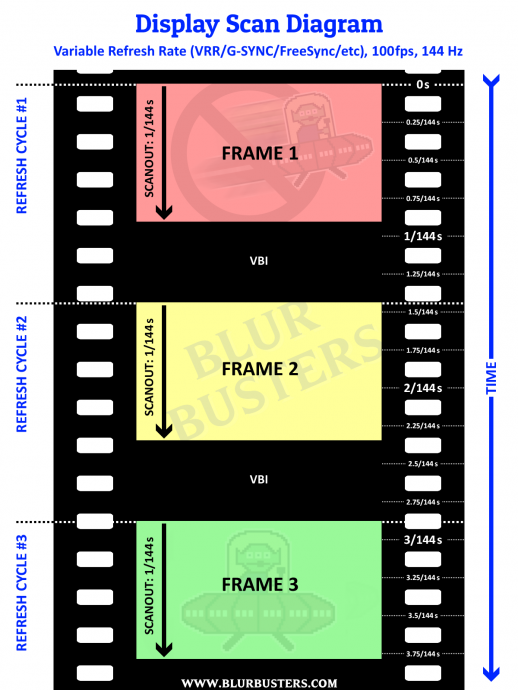

Now, this is what the scanout graph looks like for 100fps at 144Hz during FreeSync or G-SYNC:

The screen only refreshes when the game presents the frame.
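To make the "refreshes only when the game presents" point concrete, here is a minimal Python sketch comparing when scanout can start under fixed-Hz VSYNC ON versus VRR. It assumes perfectly even 100fps pacing, no frame queue, and ignores the blanking interval; `vsync_on_refresh_start` and `vrr_refresh_start` are hypothetical helpers, not any driver's real logic.

```python
import math


def vsync_on_refresh_start(present_ms: float, refresh_hz: float) -> float:
    """Fixed-Hz VSYNC ON (simplified): the frame waits for the next
    refresh-cycle boundary before scanout can begin."""
    period_ms = 1000.0 / refresh_hz
    return math.ceil(present_ms / period_ms) * period_ms


def vrr_refresh_start(present_ms: float, last_refresh_ms: float, max_hz: float) -> float:
    """VRR (simplified): scanout begins as soon as the frame is presented,
    unless the previous refresh began less than 1/max_hz ago."""
    min_interval_ms = 1000.0 / max_hz
    return max(present_ms, last_refresh_ms + min_interval_ms)


# Hypothetical 100 fps with perfectly even frame pacing on a 144 Hz panel.
last_refresh = -math.inf
for present in [10.0, 20.0, 30.0, 40.0, 50.0]:
    fixed_start = vsync_on_refresh_start(present, 144)
    last_refresh = vrr_refresh_start(present, last_refresh, 144)
    print(f"present at {present:4.1f} ms | VSYNC ON waits {fixed_start - present:4.2f} ms"
          f" | VRR waits {last_refresh - present:4.2f} ms")
```

Under these assumptions the VSYNC ON wait wanders between roughly 0.8ms and 5.6ms per frame, while the VRR wait stays at zero; scanout latency itself still applies in both cases.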

Thanks to this, VRR is the world's lowest-lag "tear-free" sync technology.

All sync technologies without tearing have a mandatory scanout lag (since not all pixels refresh at the same time on the screen surface), and if you want the world's lowest-lag zero-tearing sync technology, it's indeed VRR.

The "force low lag on" behaviour of VRR is simply a side effect of the VRR technology, but it doesn't bypass scanout lag (bypassing that requires tearing/splicing).

If you feel any lag with VRR, it is only because of mandatory scanout latency. That can be massively reduced by using a higher refresh rate for faster scanout (240Hz = 1/240sec ≈ 4.2ms scanout lag, and 360Hz = 1/360sec ≈ 2.8ms scanout lag).
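The arithmetic behind these scanout numbers can be sketched as below, assuming a constant-rate top-to-bottom sweep that fills the whole refresh period (real signals also have a blanking interval, so the visible scanout is slightly faster than 1/Hz). `scanout_delay_ms` is a hypothetical helper.

```python
def scanout_delay_ms(refresh_hz: float, row: int, visible_rows: int) -> float:
    """Delay from the top edge starting to refresh until `row` refreshes.

    Simplifying assumption: the scanout sweeps top-to-bottom at a constant
    rate over one full refresh period (the blanking interval is ignored).
    """
    if not 0 <= row < visible_rows:
        raise ValueError("row must be within the visible area")
    return (1000.0 / refresh_hz) * row / visible_rows


for hz in (60, 144, 240, 360):
    centre = scanout_delay_ms(hz, 540, 1080)
    bottom = scanout_delay_ms(hz, 1079, 1080)
    print(f"{hz:>3} Hz: centre row {centre:5.2f} ms, bottom row {bottom:5.2f} ms")
```

The top edge always gets zero scanout delay; only the delay further down the screen shrinks as the refresh rate rises.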

Scanout lag is different from absolute lag / panel processing lag.

Again, apples vs oranges.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations. If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: Low-Input-Lag mode Monitor?

Chief Blur Buster wrote (13 May 2020, 13:53):

> The only claimed "lag" of FreeSync is simply the mandatory scanout latency, but FreeSync has lower lag than all other sync technologies (VSYNC ON, Fast Sync, Enhanced Sync), with the sole exception of sync technologies that show tearlines, such as VSYNC OFF (thanks to realtime splicing of frameslices mid-scanout).
>
> On most panels, FreeSync automatically turns "low lag" on. That's why RTINGS sometimes measures equal lag for FreeSync ON and OFF.

With this in mind, is it correct that (if you're running around 230 fps) low-lag mode and FreeSync-on mode have very similar input lag (within a few milliseconds)?

- RedCloudFuneral

- Posts: 40

- Joined: 09 May 2020, 00:23

Re: Low-Input-Lag mode Monitor?

Chief Blur Buster wrote (13 May 2020, 13:53):

> Firstly, the lag chain is complex.
>
> ...
>
> Again, apples vs oranges.

To check my comprehension: are the implications of this that the perceived lag is highly variable with VRR/VSYNC OFF? The most up-to-date information will be displayed wherever the screen is currently drawing?

The comparison is apples vs. oranges because one method shows consistent lag (VRR/VSYNC) and the other tears into other frames as they render, varying perception by gaze and timing?

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

Re: Low-Input-Lag mode Monitor?

RedCloudFuneral wrote (14 May 2020, 00:17):

> To check my comprehension: are the implications of this that the perceived lag is highly variable with VRR/VSYNC OFF? The most up-to-date information will be displayed wherever the screen is currently drawing?

Yes.

RedCloudFuneral wrote (14 May 2020, 00:17):

> The comparison is apples vs. oranges because one method shows consistent lag (VRR/VSYNC) and the other tears into other frames as they render, varying perception by gaze and timing?

Not exactly. Explaining this better requires a complex reply:

A roundabout way to explain this is: VSYNC OFF is more "gametime:photontime" consistent because it's essentially real-time streaming of fresh frameslices being spliced mid-scanout. However, it is still subject to latency gradients within the VSYNC OFF frameslice.

For tearing technologies (VSYNC OFF)

- The frameslice is a vertical linear latency gradient from [0...frametime]

- The lowest lag is the first pixel row immediately below a tearline.

- It is possible for frameslices to be shorter in height than the screen. In this case, it is possible for the worst-case latency to be less than the bottom-edge scanout latency.

For non-tearing technologies (VSYNC ON, VRR)

- The whole screen is a vertical linear latency gradient [0...refreshtime]

- The lowest lag is the first pixel row at the top edge of the screen (non-strobed).

- Mandatory full-screen-height scanout latency.
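A rough model of the two latency gradients listed above, in Python. It assumes constant-rate scanout with no blanking interval, perfectly even frametimes, a present aligned with the start of the refresh cycle, and it ignores render time; both function names are hypothetical. Under these assumptions, at 200fps on 144Hz the worst VSYNC OFF row comes in under the bottom-edge non-tearing value, and at 1000fps every row stays below 1ms.

```python
def vsync_off_row_age_ms(y: float, refresh_hz: float, fps: float) -> float:
    """VSYNC OFF (tearing): time between the latest present and the moment
    the scanout reaches vertical position y (0.0 = top, 1.0 = bottom).

    Assumptions: constant-rate scanout over the whole refresh period (no
    blanking interval), perfectly even frametimes, a present exactly at the
    start of the refresh cycle, and render time ignored.
    """
    scan_time_ms = y * 1000.0 / refresh_hz
    frametime_ms = 1000.0 / fps
    return scan_time_ms % frametime_ms  # resets to 0 just below each tearline


def non_tearing_row_age_ms(y: float, refresh_hz: float) -> float:
    """VSYNC ON / VRR: the whole frame starts scanning at the top edge, so the
    age grows linearly down the screen, from 0 up to one refresh period."""
    return y * 1000.0 / refresh_hz


rows = [i / 100 for i in range(100)]
for fps in (200, 1000):
    worst = max(vsync_off_row_age_ms(y, 144, fps) for y in rows)
    print(f"VSYNC OFF at {fps:>4} fps, 144 Hz: worst row {worst:.2f} ms")
print(f"VSYNC ON / VRR at 144 Hz: bottom row {non_tearing_row_age_ms(0.99, 144):.2f} ms")
```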

For framerates far in excess of the refresh rate, VSYNC OFF frameslice latency gradients become darn nearly negligible, and latency becomes TOP = CENTER = BOTTOM. Among non-tearing sync technologies, VRR is the world's lowest-lag.

For framerates below the refresh rate (Hz), well-optimized, well-tuned, framerate-capped VRR can feel more consistent, especially when scanout lag is tiny (e.g. 1/240sec = 4.2ms).

But for framerates above Hz, VSYNC OFF advantages can exceed VRR advantages in certain games such as CS:GO. The moral of the story is that if you're playing at low and fluctuating frame rates, VRR can give a competitive advantage. That said, CS:GO wants to spew frame rates far beyond Hz, so VRR isn't as beneficial in CS:GO until 360Hz or 480Hz monitors.

The advantage of 1000fps VSYNC OFF is that TOP = CENTER = BOTTOM, with the whole screen surface equal and lag jittering only 1ms relative to other pixels, bypassing scanout lag: latency differences between pixels are only [0...frametime], and 1000fps means 1ms frametimes. Thus, ultra-high-framerate VSYNC OFF can feel more consistent due to the sheer overkill and the scanout-lag-bypassing behavior.

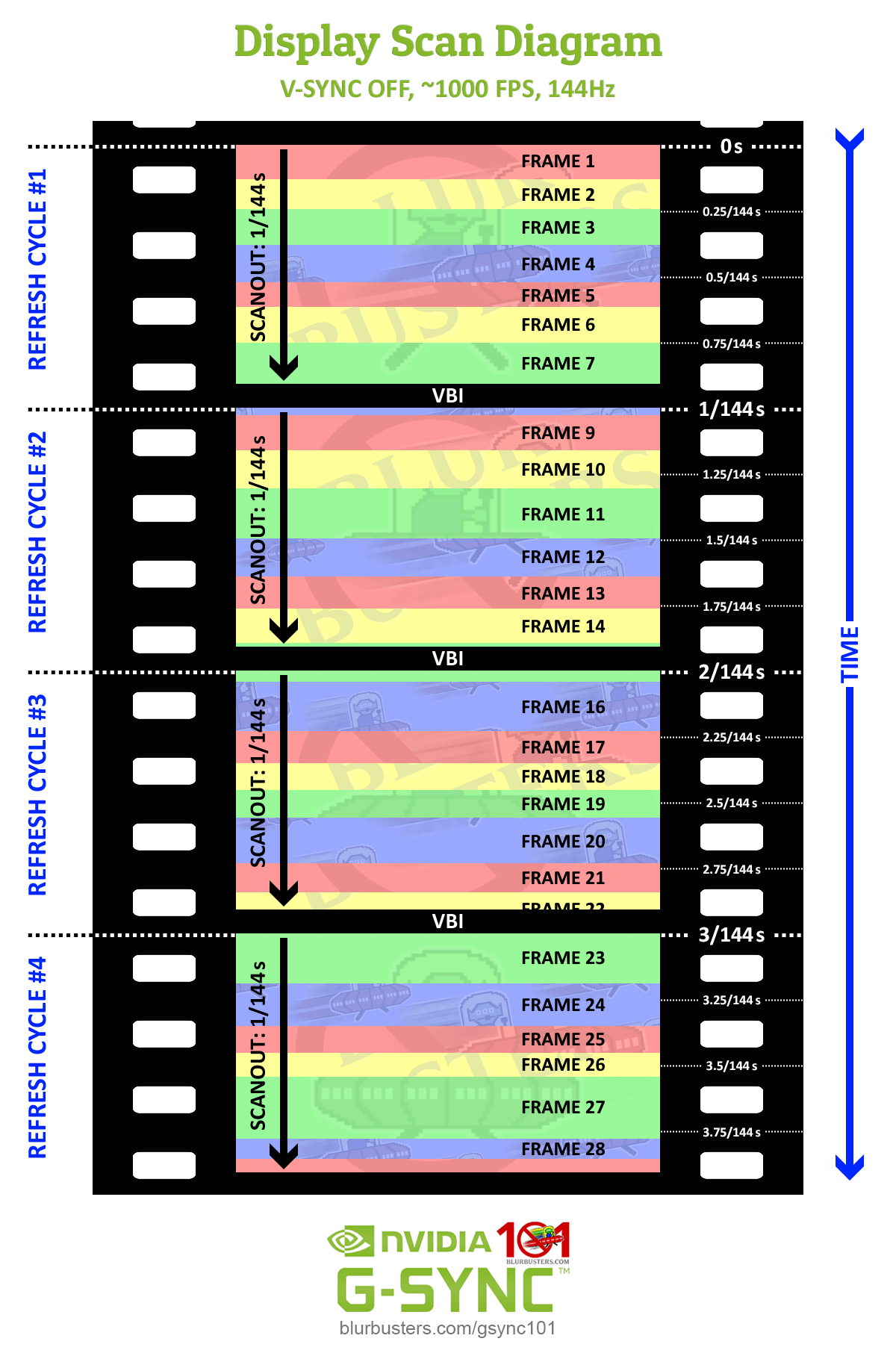

In this example, the frameslices vary a bit in height because frametimes vary (e.g. [0.8ms through 1.2ms] ...), as would really occur in real life. Few games can achieve 1000fps today, apart from older engines such as Quake 2 or Quake Live, but at that framerate the whole screen surface feels identical in lag (TOP = CENTER = BOTTOM) within roughly a 1ms margin, because gametime:photontime stays in sync, with ever-fresher frameslices compensating for scanout lag.

Think of it as real-time streaming of frameslices splicing into mid-scanout. Sure, you get roughly 7 tearlines per refresh cycle for 1000fps at 144Hz (the average number of tearlines per refresh cycle is the framerate divided by Hz, here 1000 / 144 ≈ 6.9, as explained at Are There Advantages to Frame Rates Higher Than the Refresh Rate?...)
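A small simulation of the framerate-divided-by-Hz rule, assuming frametimes drawn uniformly between 0.8ms and 1.2ms, no blanking interval, and every present splicing into the scanout. `simulate_tearlines` is a hypothetical helper. The count per refresh lands close to 1000 / 144 ≈ 6.9, and the tearline positions scatter across the screen rather than staying put.

```python
import random


def simulate_tearlines(frametime_range_ms, refresh_hz, duration_ms, seed=1):
    """Simulate VSYNC OFF presents with jittery frametimes and return the
    vertical position (0.0 = top, 1.0 = bottom) of each resulting tearline.

    Assumptions: every present splices into the current scanout, the scanout
    covers the whole refresh period (no blanking interval), and frametimes are
    drawn uniformly from frametime_range_ms.
    """
    rng = random.Random(seed)
    period_ms = 1000.0 / refresh_hz
    t_ms, positions = 0.0, []
    while True:
        t_ms += rng.uniform(*frametime_range_ms)
        if t_ms >= duration_ms:
            return positions
        positions.append((t_ms % period_ms) / period_ms)


positions = simulate_tearlines((0.8, 1.2), 144, 1000.0)
refresh_cycles = 144  # refresh cycles in one second at 144 Hz
print(f"{len(positions)} tearlines / {refresh_cycles} refreshes = "
      f"{len(positions) / refresh_cycles:.2f} per refresh (1000 / 144 = {1000 / 144:.2f})")
```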

However, if you use any sync technology that doesn't have tearlines (doesn't bypass scanout latency), you always have the mandatory scanout latency. That is the sole source of VRR lag.

But the monitor is already in low-scanout-lag mode (realtime cable:panel scanout sync). This matters because scanout graphs can look different on the cable versus the panel: e.g. a different pixel delivery sequence, or a different horizontal scan rate, such as faster delivery of pixel rows (Quick Frame Transport) or scan conversion (e.g. a 60Hz signal being buffered in a monitor whose panel can only scan out at full velocity, in 1/240sec top-to-bottom).
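The scan-conversion case can be quantified with a little arithmetic: if the panel sweeps faster than the cable delivers rows, it has to hold back so its bottom row is never painted before that row arrives. The sketch assumes both sweeps run at constant rates and ignores blanking; `scan_conversion_start_delay_ms` is a hypothetical helper, and real monitors may buffer more than this minimum.

```python
def scan_conversion_start_delay_ms(cable_hz: float, panel_sweep_hz: float) -> float:
    """Minimum wait, after the cable starts delivering a refresh cycle, before
    a faster panel sweep can start without painting rows that have not arrived.

    Assumptions: both cable delivery and panel sweep run top-to-bottom at
    constant rates; blanking intervals are ignored.
    """
    cable_ms = 1000.0 / cable_hz          # time to receive the whole frame
    panel_ms = 1000.0 / panel_sweep_hz    # time to paint the whole frame
    # Row y (0..1) arrives at y * cable_ms and is painted at start + y * panel_ms.
    # The constraint is tightest at the bottom edge (y = 1):
    #   start + panel_ms >= cable_ms
    return max(0.0, cable_ms - panel_ms)


print(f"{scan_conversion_start_delay_ms(60, 240):.2f} ms")   # 60Hz signal, 1/240s panel sweep
print(f"{scan_conversion_start_delay_ms(240, 240):.2f} ms")  # realtime cable:panel sync
```

For the 60Hz-into-1/240sec example this gives 12.50ms of waiting before the panel sweep can begin, versus zero when cable and panel scanout run in realtime sync.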

When a typical TN/IPS gaming monitor is configured to "Low Latency", it usually turns on its realtime synchronous scanout mode, where the cable scanout goes almost straight to the panel scanout, in sync, with no framebuffering in the monitor. There is just a tiny rolling-window line buffer for scanout (usually about 6 pixel rows or thereabouts), sufficient for DisplayPort and HDMI micropacket de-jittering / de-multiplexing (e.g. embedded audio, embedded data, DSC decompression, and whatnot). That's how gaming monitors can achieve sub-refresh latency for the screen's bottom edge during VSYNC OFF, or only one refresh cycle of latency during the world's most optimized VSYNC ON / VRR / non-tearing sync technology.
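To put the ~6-row line buffer in perspective, here is a rough comparison against buffering a full frame. The vertical total of 1125 lines per refresh is an assumed timing (it is the common CEA-861 total for 1080p; real 240Hz monitors use varying timings), and `line_buffer_latency_us` is a hypothetical helper.

```python
def line_buffer_latency_us(buffered_rows: int, refresh_hz: float, vertical_total: int) -> float:
    """Latency added by a rolling buffer of `buffered_rows` pixel rows.

    vertical_total is the number of lines per refresh cycle, including the
    blanking interval (an assumed timing parameter).
    """
    row_time_us = 1e6 / (refresh_hz * vertical_total)  # duration of one scanline
    return buffered_rows * row_time_us


six_rows_us = line_buffer_latency_us(6, 240, 1125)
full_frame_us = 1e6 / 240  # buffering one whole 240Hz refresh cycle instead
print(f"6-row line buffer: {six_rows_us:.1f} us vs full framebuffer: {full_frame_us:.0f} us")
```

Under these assumptions the line buffer costs tens of microseconds, roughly two orders of magnitude less than holding a whole frame.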

The "Low Latency" mode in a monitor globally applies to all sync technologies, unrelated to the "mandatory-scanout lag requirement for bottom edge lag" disadvantage of non-tearing sync technologies. That's the apples vs oranges argument.

This is a typical Blur Busters Advanced Answer: lag is a complex chain.

Re: Low-Input-Lag mode Monitor?

I have never understood why VRR is held up as the absolute best technology without tearing. Yes, it is the best to date. But when using VRR there is smoothness plus microstuttering, at both low and high refresh rates. It is not very noticeable, but it is still there.

I often do not state my thoughts clearly. Google Translate is far from perfect, and apart from the translator, I make mistakes myself. Do not take me seriously.

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

Re: Low-Input-Lag mode Monitor?

1000WATT wrote (14 May 2020, 13:43):

> I have never understood why VRR is held up as the absolute best technology without tearing. Yes, it is the best to date. But when using VRR there is smoothness plus microstuttering, at both low and high refresh rates. It is not very noticeable, but it is still there.

VRR does a good job of massively improving gametime:photontime relativity. Divergences are the cause of erratic stutter (judder / irregular stutter / etc). You have to keep gametime relative to photontime to reduce your stutter error margins.

That said, VRR doesn't 100% solve gametime:photontime relativity divergences that create stutter.
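One way to quantify gametime:photontime divergence is to look at how much the offset between the two timestamps changes from frame to frame: a constant offset is just latency, while changes in it show up as stutter. The Python sketch below uses made-up timestamps and a hypothetical `divergence_ms` helper; here "gametime" is the time the rendered scene represents, and "photontime" the time its light reaches the eyes.

```python
from statistics import mean


def divergence_ms(gametimes_ms, photontimes_ms):
    """Per-frame gametime:photontime divergence in ms.

    A constant offset between the two clocks is just steady latency, so the
    mean offset is subtracted; whatever remains varies frame to frame and is
    what shows up as erratic stutter.
    """
    offsets = [p - g for g, p in zip(gametimes_ms, photontimes_ms)]
    baseline = mean(offsets)
    return [o - baseline for o in offsets]


# Made-up example: gametime advances 10 ms per frame, but the fourth frame
# reaches the eyes 4 ms later than the others (e.g. an uncompensated spike).
game = [0, 10, 20, 30, 40]
photon = [25, 35, 45, 59, 65]
print([round(d, 1) for d in divergence_ms(game, photon)])
```

Subtracting the mean offset is a design choice for this sketch; comparing consecutive offsets would flag the same frame.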

Glossary:

"gametime" (also software-developer parlance): the microsecond-precise time position that all the 3D graphics are rendered at (positions, pans, turns, objects, whatever).

"photontime" (Blur Busters term): the time light hits the human eyeballs.

Erratic stutters despite VRR:

There are other sources of gametime:photontime variances despite VRR

- Mouse microstutters (Hz limitations, DPI limitations)

- Disk loads (with no gametime compensation; poor programming)

- Poor game programming (gametime isn't relative-accurate to Present() / glxxSwapBuffers() time)

- Rendertime variances (frametime variances) that shift gametime relative to photontime

- Power management between frames (low GPU utilization can intentionally slow down GPU rendering erratically, creating erratic frametimes for identical rendering workloads, creating amplified gametime:photontime-divergence microstutters on laptops with VRR)

- VRR granularity (some VRR tech doesn't bother to go down to microsecond timescale, and millisecond timescale produces visible microstutter during ultrahigh Hz and/or motion blur reduction). For most VRR, the VRR granularity is one scanline at the horizontal scan rate, and 1080p 240Hz is in the neighborhood of 270KHz (under 4 microseconds per pixel row), so that is not a human-visible VRR granularity error margin; however, some drivers inject coarser VRR granularity.
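The granularity point in the last bullet can be sketched by rounding each refresh start up to the next granularity step and measuring the timing error. The present timestamps are made up, the 1125-line total for 1080p 240Hz is an assumed timing, and `quantize_up` is a hypothetical helper; it only models the rounding, not any particular driver.

```python
import math


def quantize_up(t_us: float, step_us: float) -> float:
    """Delay a refresh start to the next multiple of the VRR granularity."""
    return math.ceil(t_us / step_us) * step_us


# Made-up present timestamps (microseconds) from a game near 200 fps.
presents_us = [0.0, 5012.3, 9987.6, 15040.9, 20003.2]
# Assumed timing: 1080p 240 Hz with 1125 total lines per refresh (~3.7 us/line).
scanline_us = 1e6 / (240 * 1125)

for label, step_us in (("1 ms", 1000.0), ("1 scanline", scanline_us)):
    errors_us = [quantize_up(t, step_us) - t for t in presents_us]
    print(f"{label:>10} granularity: worst timing error {max(errors_us):7.1f} us")
```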

Non-erratic gametime:photontime divergences (not fixable by VRR)

- Simple lowness of framerates (regular stutter): each frame is static, while theoretically perfect gametime is continuously analog (like real time). You get regular stutter from low frame rates, like www.testufo.com/framerates-versus ... but the stutter is not erratic. VRR cannot fix low-framerate regular stutter.

- Sudden framerate drops (freezes) are simply another case of low frame rate, as explained above.

- Scanout (top edge versus bottom edge), but that only produces a smooth scanskew effect (www.testufo.com/scanskew, best seen on an iPad or a DELL/HP 60Hz panel) and is not usually a cause of erratic stutter. Not all pixels refresh at the same time; you can see high-speed videos of a display refreshing at www.blurbusters.com/scanout ... Fortunately, higher Hz means faster scanout, which means less scanout-related behavior.
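The regular stutter from low frame rates can be illustrated by the size of each per-frame jump: motion is continuous in gametime, but each frame freezes it, so an object advances in steps of speed / framerate. The 960 px/s speed and the `step_per_frame_px` helper are arbitrary choices for illustration.

```python
def step_per_frame_px(speed_px_per_s: float, fps: float) -> float:
    """Distance an object jumps between consecutive frames.

    Gametime motion is continuous, but each frame is a static snapshot, so the
    object advances in equal steps of speed / fps: regular (non-erratic)
    stutter, which VRR cannot remove.
    """
    return speed_px_per_s / fps


for fps in (30, 60, 120, 240):
    print(f"{fps:>3} fps: {step_per_frame_px(960, fps):4.0f} px per frame at 960 px/s")
```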

Erratic stutter (for a given frame rate) can become easier to see at higher Hz or with motion blur reduction (e.g. ELMB-SYNC), thanks to the Vicious Cycle Effect, where higher Hz, higher resolutions, wider FOV, clearer motion, and bigger displays all interact with each other. It's easier to see microstutter during low-persistence 4K 120fps than during blurry-LCD 480p 120fps, because everything is so much clearer and higher resolution, which makes tiny stutters more visible. It's easier to see microstutter during ULMB than without ULMB. Now imagine 8K 1000Hz strobeless ULMB: all the microstutter weak links become even more amplified.
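A rough way to see why higher resolution amplifies microstutter: the same timing error moves an object by more pixels when the display has more pixels across for the same on-screen speed. This sketch assumes motion that crosses the screen once per second and a 1ms gametime:photontime error; `stutter_error_px` is a hypothetical helper and the widths are standard 16:9 horizontal resolutions.

```python
def stutter_error_px(timing_error_ms: float, screen_widths_per_s: float, width_px: int) -> float:
    """Position error (pixels) produced by a gametime:photontime timing error
    for motion that crosses the screen at `screen_widths_per_s`."""
    speed_px_per_s = screen_widths_per_s * width_px
    return speed_px_per_s * timing_error_ms / 1000.0


for label, width in (("1080p", 1920), ("4K", 3840), ("8K", 7680)):
    print(f"{label:>5}: 1 ms error = {stutter_error_px(1.0, 1.0, width):.2f} px")
```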

A reply explaining how software developers can reduce gametime:photontime divergences during VRR (minimizing stutters that break through VRR) can be found at:

Software Developers: Making Your Game Engine GSYNC/FreeSync Compatible

That's why I am happy that lead developer Billy Kahn said the Doom Eternal engine supports 1000fps. I like future-proofed game engines that will gracefully scale through the Vicious Cycle Effect. I'm going to write some articles solely about the Vicious Cycle Effect, since it's kind of the raison d'etre of Blur Busters' existence.

Re: Low-Input-Lag mode Monitor?

Chief Blur Buster wrote (13 May 2020, 13:53):

> For VSYNC ON 60Hz, this can be graphed out as:
>
> All sync technologies have to wait for the VBI and then wait for scanout. The only way to bypass this is VSYNC OFF.
>
> In VSYNC OFF, frameslices can interrupt the scanout, being real-time spliced mid-scanout into the frame. You have tearlines, but you have lower lag because you've eliminated the "wait for VBI" (wait for VSYNC).
>
> Now, enabling a monitor's "Low Lag Mode" is often an altogether separate issue from the sync technology on the PC side (VSYNC ON versus VSYNC OFF, or FreeSync). Although VRR means co-operation between the computer and the monitor (waiting for the PC to deliver refresh cycles, rather than following a fixed refresh cycle schedule), it's all equally subject to scanout latency.
>
> What this means is that FreeSync/G-SYNC already automatically turns on low-lag mode on most monitors (and LOCKS it ON)
>
> The "FreeSync lag" you hear about is simply the scanout latency, which is unrelated to the "Low Lag" feature of the monitor.
>
> Now, this is what the scanout graph looks like for 100fps at 144Hz during FreeSync or G-SYNC:
>
> The screen only refreshes when the game presents the frame.
>
> Thanks to this, VRR is the world's lowest-lag "tear-free" sync technology.
>
> All sync technologies without tearing have a mandatory scanout lag (since not all pixels refresh at the same time on the screen surface), and if you want the world's lowest-lag zero-tearing sync technology, it's indeed VRR.
>
> The "force low lag on" behaviour of VRR is simply a side effect of the VRR technology, but it doesn't bypass scanout lag (bypassing that requires tearing/splicing).
>
> If you feel any lag with VRR, it is only because of mandatory scanout latency. That can be massively reduced by using a higher refresh rate for faster scanout (240Hz = 1/240sec ≈ 4.2ms scanout lag, and 360Hz = 1/360sec ≈ 2.8ms scanout lag).
>
> Scanout lag is different from absolute lag / panel processing lag.
>
> Again, apples vs oranges.

These diagrams are really helpful for visualizing the trade-offs between VRR and VSYNC OFF, thanks. I'm kind of curious: what would Scanline Sync look like in one of these diagrams?

Chief Blur Buster wrote (14 May 2020, 11:32):

> Not exactly. Explaining this better requires a complex reply:
>
> A roundabout way to explain this is: VSYNC OFF is more "gametime:photontime" consistent because it's essentially real-time streaming of fresh frameslices being spliced mid-scanout. However, it is still subject to latency gradients within the VSYNC OFF frameslice.
>
> For tearing technologies (VSYNC OFF)
>
> - The frameslice is a vertical linear latency gradient from [0...frametime]
>
> - The lowest lag is the first pixel row immediately below a tearline.
>
> - It is possible for frameslices to be shorter in height than the screen. In this case, it is possible for the worst-case latency to be less than the bottom-edge scanout latency.
>
> For non-tearing technologies (VSYNC ON, VRR)
>
> - The whole screen is a vertical linear latency gradient [0...refreshtime]
>
> - The lowest lag is the first pixel row at the top edge of the screen (non-strobed).
>
> - Mandatory full-screen-height scanout latency.

Where does Scanline Sync fit here?

Naively, I was hoping to use SSYNC to get VSYNC OFF performance, but with a consistent tearline some [comfortable] distance above my crosshair, to ensure that area has the lowest lag. Am I thinking about this right? Am I using the right tool for the job?
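A rough model of the idea in this post, assuming one present per refresh cycle at a fixed tearline row, constant-rate scanout with no blanking interval, and zero render time (in practice the frame must be finished before the present point). `age_at_row_ms` is a hypothetical helper, not part of any Scanline Sync tool. Rows just below the tearline get the freshest pixels, while rows just above it still show the previous frame, nearly one refresh period old.

```python
def age_at_row_ms(row: int, tear_row: int, refresh_hz: float, visible_rows: int) -> float:
    """Time between the most recent present and the scanout reaching `row`,
    when every frame is presented as the scanout reaches `tear_row`.

    Assumptions: one present per refresh cycle, constant-rate scanout over
    the whole refresh period (blanking ignored), render time ignored.
    """
    period_ms = 1000.0 / refresh_hz
    row_ms = period_ms / visible_rows
    if row >= tear_row:
        # Below the tearline: the new frame, spliced in at tear_row.
        return (row - tear_row) * row_ms
    # Above the tearline: still the previous frame, presented one period earlier.
    return period_ms - (tear_row - row) * row_ms


crosshair, tearline = 540, 440  # hypothetical rows on a 1080-row screen
print(f"crosshair: {age_at_row_ms(crosshair, tearline, 144, 1080):.2f} ms")
print(f"row just above tearline: {age_at_row_ms(tearline - 1, tearline, 144, 1080):.2f} ms")
```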

Re: Low-Input-Lag mode Monitor?

Thinking a little more critically, and after a little more caffeine, I think this would just be like VRR but worse: a consistent tearline location, but visible on screen instead of hidden in the VBI, with no real benefit.