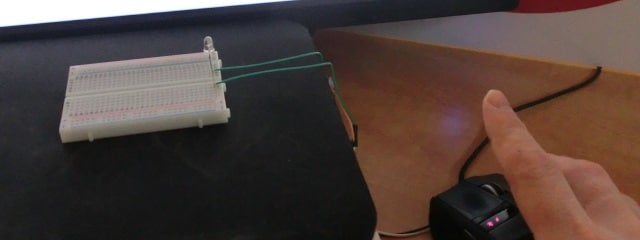

For anybody who wants to measure input lag but doesn't want to shell out for a Leo Bodnar or Time Sleuth, or mod their OSSC, there's another option that just requires a Raspberry Pi (Zero). They can be had for $5 if you don't already have one. The other requirement is a camera, 60fps or higher (high speed is easier but not more accurate). No soldering or other hardware mods are required, and the software is free.

https://alantechreview.blogspot.com/202 ... berry.html

Use a regular raspberry pi to measure input lag with millisecond accuracy

Measure display input lag the cheap way or the best way (IMHO, but I'm biased).

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

- Contact:

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

Very nice!

However, it won't be millisecond accuracy with a 60fps camera. You need 1000fps for millisecond accuracy.
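To put rough numbers on that: a single camera frame can only pin an event down to one frame period. A quick sketch of the arithmetic in Python (the function name is just for illustration, and this ignores any averaging over multiple readings):

```python
def frame_period_ms(fps: float) -> float:
    """Time between consecutive camera frames, in milliseconds."""
    return 1000.0 / fps


# Single-frame timing resolution for a few common capture rates.
for fps in (60, 240, 960, 1000):
    print(f"{fps:>5} fps -> one frame every {frame_period_ms(fps):.2f} ms")
```

So a lone 60fps frame only resolves timing to within about 16.7 ms, while 1000fps resolves it to 1 ms.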

Also, I haven't seen the Pi program, so I don't know if it syncs the illumination of the green LED to the blanking interval (VSYNC). If it's VSYNC-timing unaware, then the Pi software adds a 1-refresh-cycle error margin. There are Linux techniques to monitor for the tick-tock of refresh cycles the GPU is generating, then illuminate the green LED exactly between refresh cycles (at the Pi's output). That will eliminate the error margin. Once done, combined with a 1000fps camera, it should be millisecond accurate to the top edge of scanout, though not all pixels refresh at the same time, as seen at www.blurbusters.com/scanout
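A minimal sketch of what a VSYNC-aware trigger loop could look like in Python. This is not the Pi program from the blog post, just one possible approach. It assumes the framebuffer driver honours the `FBIO_WAITFORVSYNC` ioctl on `/dev/fb0`, and that the on-board LED is exposed at `/sys/class/leds/led0/brightness` with its trigger set to `none`. Both vary between Pi models and OS releases, so treat those paths as assumptions. `flash_on_vsync` and `run_on_pi` are hypothetical names.

```python
import fcntl
import struct

# FBIO_WAITFORVSYNC from <linux/fb.h>, i.e. _IOW('F', 0x20, __u32).
FBIO_WAITFORVSYNC = 0x40044620


def wait_for_vsync(fb_fd):
    """Block until the framebuffer driver signals the next vertical blank."""
    fcntl.ioctl(fb_fd, FBIO_WAITFORVSYNC, struct.pack("I", 0))


def flash_on_vsync(wait, set_led, flashes, frames_between=60):
    """Turn the LED on right after a vblank, and off again one refresh later."""
    for _ in range(flashes):
        for _ in range(frames_between):
            wait()          # idle for a number of refresh cycles
        set_led(True)       # the last wait() just returned at a vblank
        wait()
        set_led(False)


def run_on_pi(fb_path="/dev/fb0", led_path="/sys/class/leds/led0/brightness"):
    """Hardware wiring. Paths are assumptions; typically needs root."""
    with open(fb_path, "rb") as fb, open(led_path, "w") as led:
        def set_led(on):
            led.write("1" if on else "0")
            led.flush()     # push the write out to sysfs immediately
        flash_on_vsync(lambda: wait_for_vsync(fb.fileno()), set_led, flashes=10)
```

Calling `run_on_pi()` on the Pi would flash the LED ten times, each flash starting just after a vertical blank. Going through sysfs and Python adds some delay and jitter of its own, so this only illustrates the idea of aligning the LED to refresh cycles rather than a calibrated implementation.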

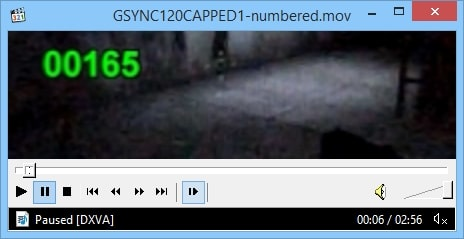

Different equipment, but a similar "a light appears, then screen changes" lag test approach is used by Jorim's GSYNC 101 articles on Blur Busters. It's not too different from the G-SYNC lag testing technique I came up with six years ago at www.blurbusters.com/gsync/preview2 ... Basically the "LED illuminates, then display changes" approach.

And instead of 60fps, I used 1000fps, for millisecond accuracy. It was a mouse modification, direct-wired to the switch.

So it wasn't a Raspberry Pi, but it allowed me to record the input lag of GSYNC.

There are various limitations with the Pi approach that make it difficult to get sub-refresh numbers. But the concept is quite similar -- "a light illuminates, then another light illuminates" approach.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

Yep, similar. Great minds think alike? Though, by generating an appropriate test signal (in this case a bar), the temporal resolution is much better. I like that your method can measure in actual games, but that does muddy up the contributions of mouse polling, game engine, and display pipeline vs. purely monitor-caused lag.

The main motivation of my approach is to take out all the soldering and keep the price low (ideally free, since Raspberry Pis are pretty common among the target market).

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

Chief Blur Buster wrote: ↑02 Jun 2020, 16:02

However, it won't be millisecond accuracy with a 60fps camera. You need 1000fps for millisecond accuracy.

That's actually the part I'm pretty pleased about: yes, you can get millisecond accuracy with a 60Hz camera. At least 1-2 milliseconds, anyway, with averaging.

https://alantechreview.blogspot.com/202 ... -high.html

I've validated my numbers with an oscilloscope and also the OSSC DIY lag test mod.

- Chief Blur Buster

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

Indeed, yes, averaging. That does the job.

How does the device turn on the green LED, though? Random times, or synchronized to refresh cycles?

Either can give statistically useful (but different) lag numbers: random times emulate a button press, while green-to-VSYNC timing better isolates one segment of the latency chain.
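A toy model of the difference, assuming the source only starts sending a new frame on VSYNC: a randomly timed trigger waits anywhere from zero to one full refresh period before scanout can even begin, while a VSYNC-aligned trigger waits roughly nothing. The function name, trial count, and seed are made up for illustration.

```python
import random


def mean_wait_for_refresh_ms(refresh_hz, trials=100_000, seed=1):
    """Average wait from a randomly timed trigger to the next refresh start."""
    rng = random.Random(seed)
    period = 1000.0 / refresh_hz
    # The trigger lands at a random phase within the refresh cycle and has to
    # wait for the remainder of that cycle.
    waits = [period - rng.uniform(0.0, period) for _ in range(trials)]
    return sum(waits) / trials


print(f"60 Hz, random trigger: ~{mean_wait_for_refresh_ms(60):.1f} ms average wait")
```

In this model the random trigger picks up about half a refresh period of extra lag on average, plus a spread of one full period between readings. That is the part a button-press style test includes and a VSYNC-aligned test leaves out.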

Latency is a hugely complex chain with different people (for different reasons) needing to measure different parts...

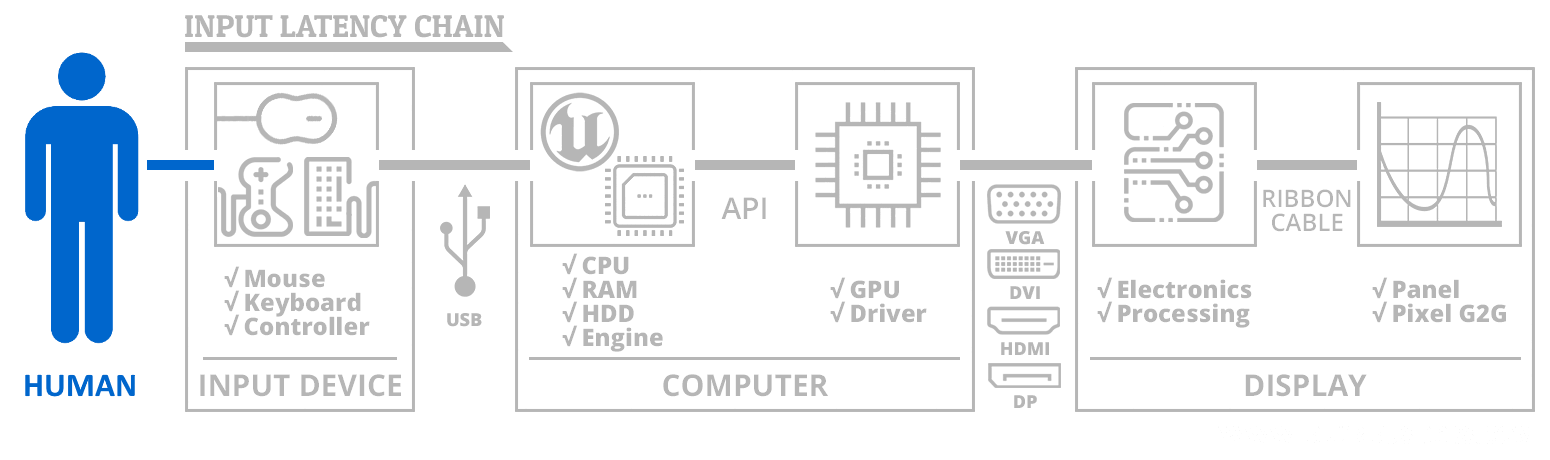

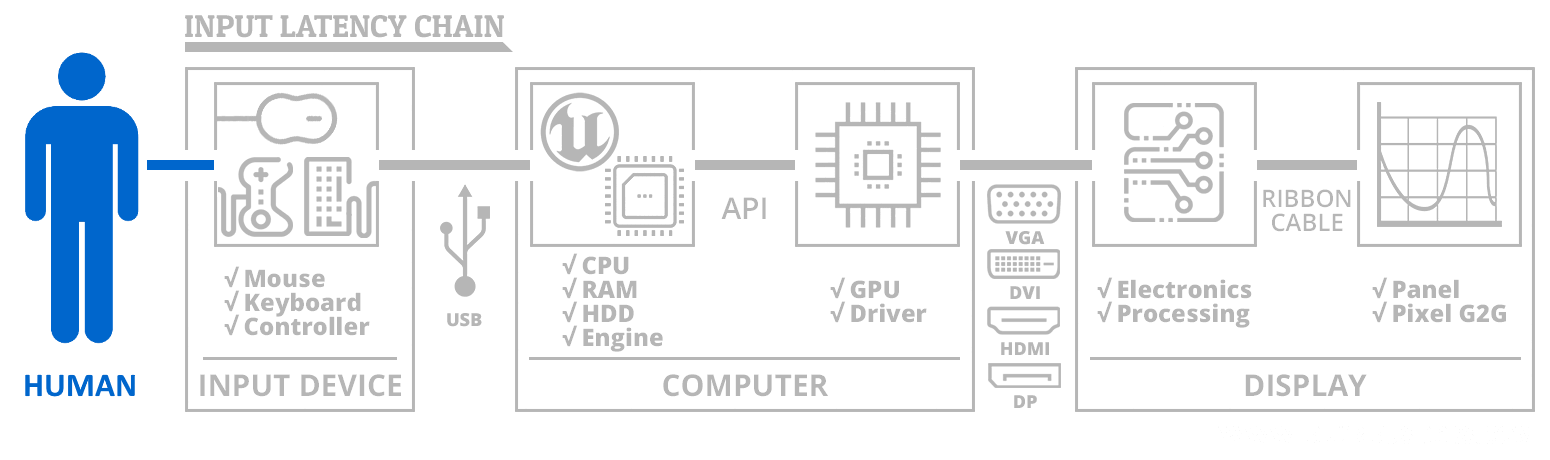

Even this diagram is grossly simplified, but for laypeople, it gives a hint of the complexity. Sometimes you only want to measure the lag of one section of the chain, and sometimes the whole chain.

Also, even GPU and display lag are blurred together by driver-cooperative behaviours (e.g. GSYNC and FreeSync), so sometimes they can't be reliably siloed.

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

It's actually a bit more than that - you'd have to average a *LOT* to get decent data without using my technique. If you wanted to use a 60Hz camera, anyway. But by measuring the extent of the vertical bar, 5-8 samples seem to be enough, empirically. I'd still want to take at least 5 samples even when using a higher speed camera, such as the 960fps S10e I've also used in this project. As you guys have pointed out, fast cameras are not that hard to get these days. But I'd like to keep this approach as affordable as possible, hence the extra work to support 60Hz cameras.
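For a rough sense of what "a lot" means with plain frame counting, here is a back-of-the-envelope sketch. It assumes the timing error of a single reading is uniformly spread over one camera frame period (so its standard deviation is the period divided by √12) and that readings are independent. It does not model the bar-extent technique, and the function names are illustrative only.

```python
import math


def quantization_sigma_ms(fps):
    """Std. deviation of a timing error spread uniformly over one frame period."""
    return (1000.0 / fps) / math.sqrt(12)


def samples_needed(fps, target_ms):
    """Readings to average so the standard error of the mean is <= target_ms."""
    return math.ceil((quantization_sigma_ms(fps) / target_ms) ** 2)


for fps in (60, 960):
    print(f"{fps} fps: sigma {quantization_sigma_ms(fps):.2f} ms, "
          f"{samples_needed(fps, 1.0)} reading(s) for a 1 ms standard error")
```

Under those assumptions a 60fps camera needs a couple of dozen readings just to reach a 1 ms standard error, and the count grows with the square of the precision you want, so halving the error takes four times as many readings.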

Chief Blur Buster wrote: ↑02 Jun 2020, 16:53

How does the device turn on the green LED, though? Random times, or synchronized to refresh cycles?

Synchronized to the refresh cycle. Random times would be a mess, IMHO. There's almost certainly some fixed error in the chain - the GPU could be lying about when it sends the scanout over HDMI, for instance. And while I write directly to the control registers for the on-board LED, there could be some delay in the LED's response time. Using a 960fps camera you can actually see the LED light up over a period of about 1 ms. BUT: since I have an OSSC to cross-validate, it appears that the constant error is probably 1-2 ms. And of course that's assuming the OSSC should be ground truth. Given that it uses a fixed brightness threshold, I'm not sure it should be. The OSSC is a very nice device overall, but it wasn't built to be a lag tester. If there were ground truth, it would be possible to advance or retard the LED and eliminate the source of constant error in my method.

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

xeos wrote: ↑02 Jun 2020, 14:07

For anybody who wants to measure input lag but doesn't want to shell out for a Leo Bodnar or Time Sleuth, or mod their OSSC, there's another option that just requires a Raspberry Pi (Zero). They can be had for $5 if you don't already have one. The other requirement is a camera, 60fps or higher (high speed is easier but not more accurate). No soldering or other hardware mods are required, and the software is free.

https://alantechreview.blogspot.com/202 ... berry.html

Are you measuring display lag or input lag? Those are different things: the latter requires using your Raspberry Pi as an input device on a running Windows machine.

If you're measuring display lag, then yeah, a standalone Pi could do it, either with a cam or a photodiode.

Re: Use a regular raspberry pi to measure input lag with millisecond accuracy

On the monitor/display review sites and such, input lag is the term for it - but perhaps in the gamer community it's called display lag? I'm not a hardcore gamer. In any case it doesn't pay to get hung up on terms. To be clear: this is a device/method that measures how long it takes the display to respond to video signals over HDMI.
