Has anyone tested and published results for CRT vs 240+ Hz?

Something like this:

But for monitors, that is. Or automated tests using an Arduino and a photoresistor/photodiode/phototransistor; it doesn't really matter which.

Just wondering if CRTs are still faster.

Are there input lag tests CRT vs 240 Hz?

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

- Contact:

Re: Are there input lag tests CRT vs 240 Hz?

Short answer:

Yes, they are.

But by only 1-2 milliseconds max versus the best LCDs.

Test a 60Hz CRT and an ultra-low-lag 1ms 60Hz LCD, and the lag is darn near identical at TOP/CENTER/BOTTOM with a Leo Bodnar Lag Tester and other lag testing techniques. This already scales to higher Hz and beyond (refresh rates at which no CRTs exist), with just a smidge more difference.

Long answer:

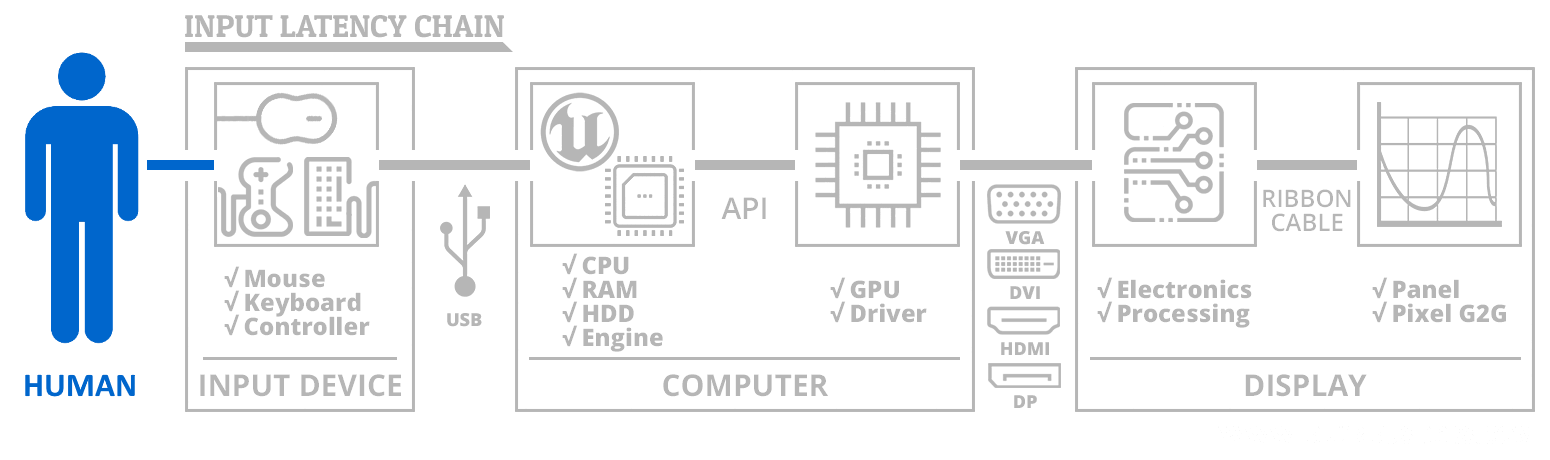

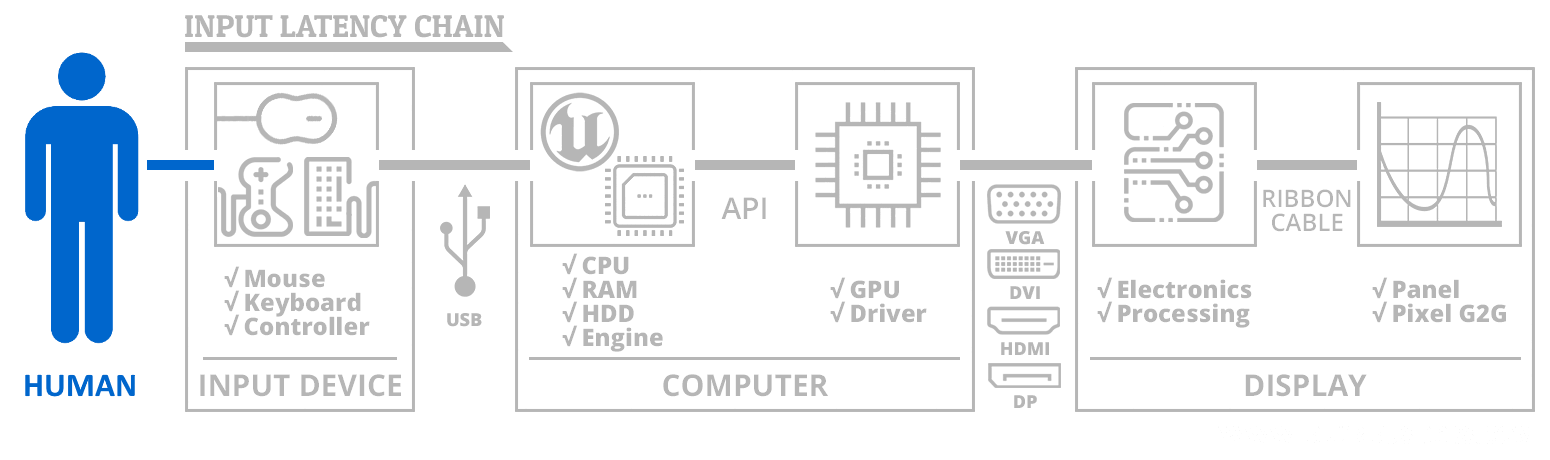

This is for a pixel-versus-pixel VSYNC OFF scanout-aware latency test for Present()-to-photons. (LCDs also scan raster, see high speed videos at www.blurbusters.com/scanout ...)

Since no 240Hz 1080p CRTs exist, we break down the latency problem by knowing where the raster is (the scan line number being output by the GPU) versus the scan line being illuminated as photons hitting eyeballs.

The differential is mainly in the digital domain:

- DisplayPort/HDMI micropacket system

- Small rolling-window processing (overdrive processing, color processing, micropacket jitter, demux of streams like audio away from video, multiple video streams on DisplayPort, etc). Desktop gaming monitors usually no longer process a full framebuffer at max Hz; most use a realtime rolling window (a few pixel rows) that syncs cable scanout to panel scanout.

- LCD GtG, which can now be sub-1ms for some colors. That said, GtG10% is human visible, so we don't need to wait till GtG100% for lag-measurements relevant to human reaction time.

What if I use an adaptor and use a CRT?

Now if you used an adaptor for DisplayPort-to-VGA for a CRT, you will be adding back most of the same lags too (except LCD GtG), since it has its own digital/micropacket processing too.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: Are there input lag tests CRT vs 240 Hz?

Chief Blur Buster wrote: ↑05 Jun 2020, 11:39
Short answer:

Yes, they are.

But by only 1-2 milliseconds max versus the best LCDs.

Ok. Well, are there tests with concrete numbers?

I would like to see something like this:

CSGO Flood map 1000 measurements each test

LCD 60 Hz 500 FPS: 13.26ms average

LCD 120Hz 500 FPS: 11.74ms average

LCD 240 Hz 500 FPS: 9.53ms average

CRT 85Hz 500 FPS: 8.56ms average

I did just that today myself using Arduino with 5 photoresistors put close to the left side of displays and got this:

CSGO Flood map 1000 measurements each test

LCD 60 Hz 500 FPS: 12.93ms average

CRT 85Hz 500 FPS: 8.06ms average

And I'd like to know where the 240+ Hz panels lie compared to CRT.

- Chief Blur Buster

Re: Are there input lag tests CRT vs 240 Hz?

Before we go further, we have to correctly disclose the parameters of the latency tests.

Lag stopwatching methods are a cesspool of multiple different opposing latency standards covering multiple subsets of the latency chain.

Latency is multiple numbers:

- Absolute latency

- Latency gradient (differences in lag between different pixels)

- Latency volatility

- Etc.
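To make "latency is multiple numbers" concrete, here is a minimal sketch of how the three figures above could be pulled out of one set of measurements. The definitions are assumptions for illustration (absolute latency as the overall mean, gradient as the spread between per-position means, volatility as the average per-position standard deviation), and the readings are hypothetical, not measured data.

```python
from statistics import mean, pstdev


def summarize(samples_by_position):
    """Summarize lag samples (ms) grouped by sensor position on the screen.

    The metric definitions below are illustrative assumptions, not a standard.
    """
    per_position_mean = {pos: mean(vals) for pos, vals in samples_by_position.items()}
    all_samples = [v for vals in samples_by_position.values() for v in vals]
    return {
        # Absolute latency: mean over every sample.
        "absolute_ms": mean(all_samples),
        # Latency gradient: slowest position mean minus fastest position mean.
        "gradient_ms": max(per_position_mean.values()) - min(per_position_mean.values()),
        # Latency volatility: average jitter (population std dev) within each position.
        "volatility_ms": mean(pstdev(vals) for vals in samples_by_position.values()),
    }


# Hypothetical readings in milliseconds, three samples per sensor position.
example = {
    "top": [4.0, 5.0, 6.0],
    "center": [8.0, 9.0, 10.0],
    "bottom": [12.0, 13.0, 14.0],
}
result = summarize(example)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Two displays can share the same absolute figure and still differ a lot in the other two, which is why a single "lag number" says little on its own.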

Stopwatch start varies between different websites and testers

- Frame completion stopwatching

- VBI stopwatching

- Sync technology variances (VSYNC ON, VSYNC OFF, G-SYNC)

- Etc.

Stopwatch stop varies between different websites and testers

- First faint photon visibility (e.g. GtG1% or GtG2%), often before human notices

- Full pixel completion (e.g. GtG 100%)

- GtG midpoint options (GtG 10% or GtG50%), more relevant to human reaction time

- One color test

- Average of multiple color tests (since some colors are laggier)

- First-anywhere test (e.g. camera-based lag tests), basically, first changed pixel visible

- Etc.
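As a rough illustration of why the stopwatch stop matters, the sketch below reads one brightness trace and reports when it first reaches different GtG percentages. The trace values are hypothetical (a made-up photodiode recording, normalized from 0 to 1), and `stopwatch_stop_ms` is an illustrative helper, not part of any lag tester.

```python
def stopwatch_stop_ms(trace, start_level, end_level, fraction):
    """Return the first time (ms) the trace reaches `fraction` of the transition.

    trace: list of (time_ms, brightness) pairs sorted by time.
    Returns None if the threshold is never reached.
    """
    target = start_level + fraction * (end_level - start_level)
    rising = end_level >= start_level
    for time_ms, level in trace:
        reached = level >= target if rising else level <= target
        if reached:
            return time_ms
    return None


# Hypothetical photodiode trace for one dark-to-bright transition.
trace = [
    (0.0, 0.00), (1.0, 0.00), (2.0, 0.05), (3.0, 0.30),
    (4.0, 0.60), (5.0, 0.85), (6.0, 1.00),
]

stops = {
    fraction: stopwatch_stop_ms(trace, 0.0, 1.0, fraction)
    for fraction in (0.02, 0.10, 0.50, 1.00)
}
for fraction, time_ms in stops.items():
    print(f"GtG {fraction:.0%}: stopwatch stops at {time_ms} ms")
```

The same transition yields a different "lag" for each threshold, so two testers using GtG2% and GtG100% would report different numbers for an identical panel.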

Different lag stopwatching rules generate completely different numbers.

CS:GO tests button-to-pixels, which adds a lot of latency noise. In this case, for a same rez-for-rez, same Hz-for-Hz, same sync tech test (1080p 60Hz vs 1080p 60Hz), there is practically no visible lag difference between a CRT and the lowest-lag 60Hz LCDs (not 240Hz monitors configured to 60Hz).

Now, since 1080p 240Hz CRTs do not exist, that type of lag test is impossible to achieve. However, when one breaks the latency chain down to only the "frame presentation to photons" portion, the lag differences do faintly become visible, but only within a 1-2ms time window.

You can see the Leo Bodnar Lag Tester results at displaylag.com, but the Leo Bodnar Lag Tester is a very limited "VSYNC ON 60Hz 1080p" lag test with a known VBI stopwatch start but an unknown lag stopwatch end (GtG%). However, when it is used on the fastest 1080p 60Hz LCDs and compared to a 1080p 60Hz capable CRT, it emits the same lag numbers. Also, no GPUs with VGA outputs are sold anymore, so that's a testing complication too: when Leo Bodnar lag testing a CRT, you use an adaptor, and the digitalness adds a bit of lag (albeit <1-2ms). So it is also extremely hard to do apples-vs-apples.

However, for apples-vs-apples (and using midpoint GtG, the first point where the change is human-visible), the difference becomes negligible.

Mind you, VSYNC OFF lag numbers are totally different, since that's a scanout-following latency test, where TOP = CENTER = BOTTOM rather than TOP < CENTER < BOTTOM because VSYNC OFF bypasses scanout latency.

TL;DR: Understand latency stopwatching disclosure. Most website lag tests do not disclose lag stopwatch sequence adequately. Be aware of lack-of-disclosure caveats when quoting lag numbers.

Re: Are there input lag tests CRT vs 240 Hz?

Chief Blur Buster wrote: ↑05 Jun 2020, 12:19
Stopwatch start varies between different websites and testers

- Frame completion stopwatching

- VBI stopwatching

- Sync technology variances (VSYNC ON, VSYNC OFF, G-SYNC)

- Etc.

Stopwatch stop varies between different websites and testers

- First faint photon visibility (e.g. GtG1% or GtG2%), often before human notices

- Full pixel completion (e.g. GtG 100%)

- GtG midpoint options (GtG 10% or GtG50%), more relevant to human reaction time

- One color test

- Averages of multiple color test (Since some colors are laggier)

- First-anywhere test (e.g. camera-base lag tests), basically, first changed pixel visible

- Etc.

Different lag stopwatching rules generates completely different numbers.

I see. Well, I'm interested from the gamer's perspective and I don't think we as gamers (who else cares about latency on monitors?) care how fast first line from the new frame will be shown on the screen. I think we care how fast we'll get whole new frame on the screen no matter where it starts drawing it: top, middle or bottom. And that's why 120 Hz LCD is so much better than 60 Hz LCD: new frame is fully shown on the screen about twice as fast.

Chief Blur Buster wrote: ↑05 Jun 2020, 12:19
CS:GO tests button-to-pixels, which adds a lot of latency noise, so in this case, for a same rez-for-rez, same hz-for-hz, same sync tech test, so 1080p 60Hz vs 1080p 60Hz has practically no visible lag difference CRT vs lowest-lag 60Hz LCDs (not 240Hz monitors configured to 60Hz).

Now, since 1080p 240Hz CRTs do not exist, that type of lag test is impossible to achieve. However, when one breaks down the latency chain only the "frame presentation to photons" portion, the lag differences do faintly become visible, but is only a 1-2ms time window.

Ok. So like I said, I'm interested in the total latency of presenting a full frame. So let's imagine that the video card has just finished rendering a new frame and swapped the front and back frame buffers, and we have 2 monitors: an 85 Hz CRT and a 360 Hz LCD. And the 2 monitors are synced in a way that they present this frame from line number 1 down to the last line.

By my calculation, the latency of presenting a full frame on the CRT should be 1000 / 85 ≈ 11.76ms total. And the total latency of presenting a full frame on a 360 Hz LCD should be 1000 / 360 + 1ms latency on the LCD matrix + 1ms latency on DisplayPort = 2.78 + 1 + 1 = 4.78ms.

So if we take CSGO and cap it at 360 FPS, by the time the 85 Hz CRT fully refreshes once from top to bottom (and shows chunks of 5 different frames), the 360 Hz LCD will have fully shown 4 different frames and will have started showing the fifth. Or, better said the other way around: by the time one-fifth of the frame is shown on the 85 Hz CRT, the whole frame will be shown on the 360 Hz LCD.

And even if we take the average latency at any point of the screen with syncs off, on the 85 Hz CRT it should be 1000 / 85 / 2 = 5.88ms, and on the 360 Hz LCD it should be 1000 / 360 / 2 + 1 + 1 = 3.39ms.

Am I wrong?

- Chief Blur Buster

Re: Are there input lag tests CRT vs 240 Hz?

Kulagin wrote: ↑12 Jun 2020, 05:07
Ok. So like I said, I'm interested in the total latency of presenting a full frame. So if we imagine that video card just finished rendering new frame and swapped front and back frame buffers, and we have 2 monitors: 85 Hz CRT and 360 Hz LCD. And 2 monitors are synced in a way that they present this frame from line number 1 and down to the last line.

Sync technology enabled would mean it's not "VSYNC OFF".

For a full scanout, last-pixel-latency, yes.

Mind you, as you later said in your post, average lag also tends to match the screen centre. The screen centre (the crosshairs, the important location for many gamers) is reached at the halfway time, so it will have about half the scanout latency, aka (1000 / 85 / 2) versus (1000 / 360 / 2). Screen centre also happens to be a good proxy for the average latency of all pixels.

There can be other latency distortion effects, but for a non-strobed LCD running at max refresh rate, with a sync technology enabled (aka scanout latency), these are reasonable estimates.

The extra latencies (LCD matrix, DisplayPort) are somewhat black box, but those are reasonable guesstimates (they can be under 1ms each, by the way, but 1ms is a good approximate rule of thumb for a modern gaming monitor). Some panels are much worse than others (full framebuffer latency for processing) while others are much better, with truly sub-millisecond latencies (a rolling buffer of only a few scanlines).
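For anyone who wants to redo the ballpark arithmetic discussed here, a minimal sketch follows. It assumes the blanking interval is negligible and treats the 1ms LCD matrix + 1ms DisplayPort figures as the rule-of-thumb guesses from this thread, not measured values; `estimated_lag_ms` is just an illustrative helper name.

```python
def scanout_ms(refresh_hz):
    """Time for one full top-to-bottom scanout, ignoring the blanking interval."""
    return 1000.0 / refresh_hz


def estimated_lag_ms(refresh_hz, screen_fraction, overhead_ms=0.0):
    """Estimated lag for a pixel at `screen_fraction` down the screen.

    screen_fraction: 0.0 = top, 0.5 = centre, 1.0 = last pixel row.
    overhead_ms: black-box extras (assumed ~1ms panel + ~1ms DisplayPort for the LCD).
    """
    return scanout_ms(refresh_hz) * screen_fraction + overhead_ms


LCD_OVERHEAD_MS = 1.0 + 1.0  # rule-of-thumb guess, can be well under this

crt_last = estimated_lag_ms(85, 1.0)
lcd_last = estimated_lag_ms(360, 1.0, LCD_OVERHEAD_MS)
crt_centre = estimated_lag_ms(85, 0.5)
lcd_centre = estimated_lag_ms(360, 0.5, LCD_OVERHEAD_MS)

print(f"Last pixel:  85Hz CRT {crt_last:.2f} ms, 360Hz LCD {lcd_last:.2f} ms")
print(f"Centre/avg:  85Hz CRT {crt_centre:.2f} ms, 360Hz LCD {lcd_centre:.2f} ms")
```

With these assumptions the figures come out at roughly 11.76 vs 4.78 ms for the last pixel and 5.88 vs 3.39 ms for screen centre, keeping in mind that the decimals are well inside the error margin of the black-box overheads.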

Kulagin wrote: ↑12 Jun 2020, 05:07
So if we take CSGO and cap it at 360 FPS, by the time 85 Hz CRT will fully refresh once from top to bottom(and show chunks of 5 different frames), 360 Hz LCD will fully show 4 different frames and will start showing fifth. Or it's better said the other way around: by the time one-fifth of the frame will be shown on 85 Hz CRT, whole frame will be shown on a 360 Hz LCD.

Yes, that is correct. The frameslice height difference for a given framerate, on display X versus Y will be (X Hz)/(Y Hz). Your 85Hz monitor will only have 85/360ths frameslice height of the 360Hz monitor, for any given framerate.

For any monitor, VSYNC OFF frameslice height is mathematically approximately (refreshrate / framerate) of the screen height, assuming the blanking interval is a tiny fraction of a refresh cycle (usually ~5% or less; see Custom Resolution Utility Glossary).

Same VSYNC OFF framerate at twice the refresh rate means frameslice height doubles: the doubled horizontal scanrate means twice the number of pixel rows are displayed per game frame during VSYNC OFF at twice the Hz.
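The frameslice rule above can be sketched in a few lines. This assumes the blanking interval is ignored and caps the slice at one full screen when the frame rate is at or below the refresh rate; the 1080 visible rows are an assumed example resolution, not something specific to either display in this thread.

```python
def frameslice_fraction(refresh_hz, frame_rate):
    """Approximate fraction of the screen height covered by one VSYNC OFF frame."""
    # Capped at 1.0: a frame cannot cover more than one full refresh cycle's rows.
    return min(1.0, refresh_hz / frame_rate)


def frameslice_rows(refresh_hz, frame_rate, visible_rows):
    """Approximate frameslice height in pixel rows."""
    return min(visible_rows, visible_rows * refresh_hz / frame_rate)


ROWS = 1080  # assumed example resolution
FPS = 360    # frame rate cap used in the discussion

crt_fraction = frameslice_fraction(85, FPS)
lcd_fraction = frameslice_fraction(360, FPS)
print(f"85Hz:  {crt_fraction:.3f} of the screen, ~{frameslice_rows(85, FPS, ROWS):.0f} rows")
print(f"360Hz: {lcd_fraction:.3f} of the screen, ~{frameslice_rows(360, FPS, ROWS):.0f} rows")

# Doubling the refresh rate at the same frame rate doubles the frameslice height.
rows_120 = frameslice_rows(120, FPS, ROWS)
rows_240 = frameslice_rows(240, FPS, ROWS)
print(f"120Hz: {rows_120:.0f} rows, 240Hz: {rows_240:.0f} rows")
```

At 360fps the 85Hz display shows 85/360 (about 24%) of the screen per frame, while the 360Hz display shows the whole frame.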

So "First-anywhere" screen latency benchmarks will become lower, even if minimum latency is identical (pixel for pixel single-sensor photodiode). More of the frame is displayed onscreen per game frame at higher refresh rates for VSYNC OFF, increasing the likelihood that in-frame stimuli become visible to the human eyes sooner.

These are very reasonable average-lag ballparks, correct.

Mind you, a caveat: the decimals may not be meaningful here, given that the error margin of these kinds of guesstimates is often at least +/-1ms due to black box factors. But these are the correct latency ballparks for 360fps VSYNC OFF.

You got your latency concepts correct as far as I can tell!

Re: Are there input lag tests CRT vs 240 Hz?

I've tested with my own D3D program running at thousands of fps (M01), using a mouse with an LED wired to the mouse button and a 1200 fps camera. The time in ms is the difference between the first change on the LED and the first change on the screen. My main concern was the LED lighting up slowly.

Mouse: Logitech G100S mouse with LED connected to right mouse button.

Camera: Nikon 1 J1 that can capture 5 seconds video with 320x120 at 1200 fps.
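For reference, converting a high-speed video frame count into milliseconds is simple arithmetic. The sketch below assumes the camera really captures at its nominal 1200 fps, and the frame indices are hypothetical, not taken from the recordings described here.

```python
CAMERA_FPS = 1200  # nominal capture rate of the high-speed mode


def frames_to_ms(frame_count, camera_fps=CAMERA_FPS):
    """Convert a number of video frames into milliseconds."""
    return frame_count * 1000.0 / camera_fps


def lag_from_video(led_frame, screen_frame, camera_fps=CAMERA_FPS):
    """Lag between the first LED change and the first screen change, in ms."""
    return frames_to_ms(screen_frame - led_frame, camera_fps)


# One video frame is the smallest step this method can resolve.
resolution_ms = frames_to_ms(1)
print(f"Measurement resolution: {resolution_ms:.2f} ms per frame")

# Hypothetical frame indices: LED first lights at frame 100, screen first changes at 112.
example_lag_ms = lag_from_video(100, 112)
print(f"Example lag: {example_lag_ms:.2f} ms")
```

At 1200 fps each reading is only good to about 0.83 ms, which is the same order as the 1-2ms CRT-vs-LCD differences discussed earlier in the thread.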

- bakedchicken

- Posts: 10

- Joined: 17 Mar 2019, 19:31

Re: Are there input lag tests CRT vs 240 Hz?

https://www.youtube.com/user/a5hun/videos check out a5hun's videos, he has crts and in fact also the new 280 ips. also his website has a shitload of information i don't think it gets much better than that. dunno why he isn't more well known

Re: Are there input lag tests CRT vs 240 Hz?

Thanks for the answers.