Rasterization = Scanout, And Yes, Both Are Real Time Nowadays

Kamen Rider Blade wrote: 19 Mar 2021, 02:04

Chief Blur Busters, do most monitors wait for 1 entire frame of data before starting the rasterization process across each line?

Nearly all of them have done subrefresh sync for the last 10 years. It's been a decade since most of them stopped adding a full frame of framebuffer latency.

First, At The Root Of Common Misconceptions: Lag Testing Methods Vary

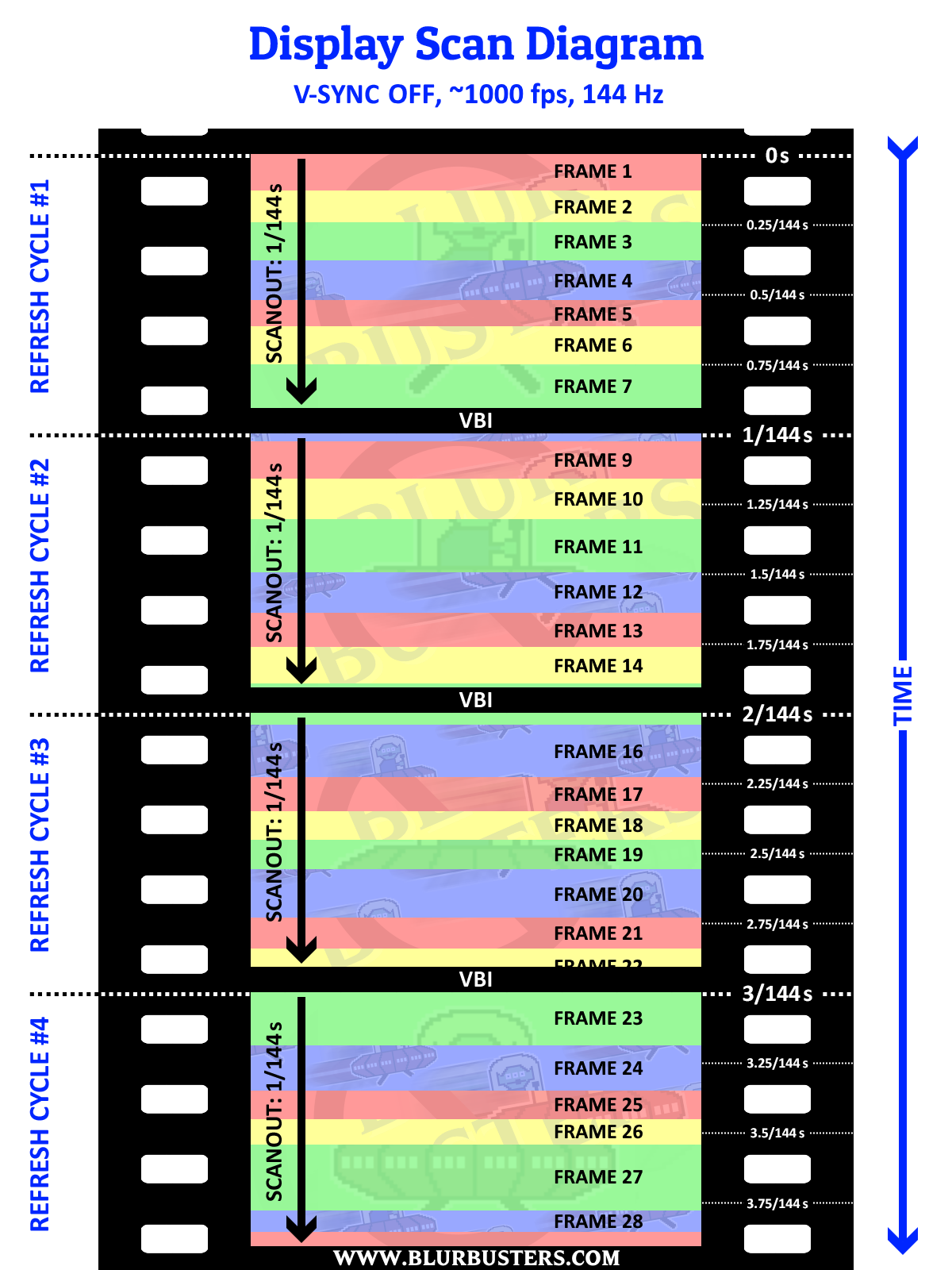

Latency numbers of more than a frame that you see on the Internet are often simply an artifact of measurement methodology. Some people measure with a VSYNC ON latency tester, which can never show subrefresh latency for the bottom edge of the screen, while others use VSYNC OFF latency testers. So one site may measure 20ms and a different site 3ms. The moral of the story is that latency benchmarks use different stopwatching methods. The stopwatch start can be Present() or the start of VBI (making the stopwatch start sensitive to sync technology), and the stopwatch end can vary too (at which GtG %? A pixel fades in gradually, since GtG is simply a slow fade). If you use 1000fps VSYNC OFF latency testers, all current gaming monitors measure subrefresh Present()-to-photons latency nowadays. Yet a different site may measure 20ms of lag because they used a 1080p 60Hz VSYNC ON lag tester device (e.g. Leo Bodnar) on a 240Hz monitor, creating 3 or 4 concurrent latency weak links that don't apply to your gaming, from the flawed lag testing method alone. That doesn't help educate people that subrefresh latency has been routine on desktop gaming LCDs for over ten years.
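To illustrate how the stopwatch start alone changes the number, here is a minimal sketch under a deliberately simplified, hypothetical model: latency = scanout travel from where the new frame begins to the sensor, plus a display processing delay, plus GtG. The 0.5ms processing and 1.0ms GtG values are illustrative assumptions, not measurements of any monitor.

```python
# Hypothetical latency model (assumption, not a real lag tester):
#   latency = scanout travel to the sensor + display processing + GtG
# The processing and GtG numbers below are illustrative only.

def lag_ms(rows_below_scan_start: int, total_rows: int, sweep_ms: float,
           processing_ms: float, gtg_ms: float) -> float:
    """Stopwatch start to photons for a sensor sitting rows_below_scan_start
    rows below the row where the new frame begins scanning out."""
    travel_ms = sweep_ms * rows_below_scan_start / total_rows
    return travel_ms + processing_ms + gtg_ms


# VSYNC ON tester: the new frame starts at the top edge, the sensor sits at the
# bottom edge, 60Hz signal, so the stopwatch includes a full 1/60sec scanout.
vsync_on = lag_ms(1080, 1080, 1000 / 60, processing_ms=0.5, gtg_ms=1.0)

# VSYNC OFF tester: the tearline lands just above the sensor, so almost no
# scanout travel is included (240Hz sweep).
vsync_off = lag_ms(1, 1080, 1000 / 240, processing_ms=0.5, gtg_ms=1.0)

print(f"VSYNC ON bottom edge: {vsync_on:.1f} ms")
print(f"VSYNC OFF below tearline: {vsync_off:.1f} ms")
```

Same display, same processing, same GtG: roughly 18.2ms versus 1.5ms, purely from where the stopwatch starts relative to the scanout.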

(Because different websites use different latency stopwatching methods, always compare lag numbers against numbers from the same website, and try to determine whether their latency testing method uses the same sync technology you plan to use. LagTester.com and TomsHardware.com use VSYNC ON lag test devices, and RTINGS.com / TFTCentral.co.uk use VSYNC OFF lag test devices.)

All the big names (ASUS, Acer, ViewSonic, BenQ, etc.) now reliably do this on their gaming monitors, as do most 144Hz-to-240Hz LCDs run at their native refresh rate.

Next, More About Modern Sub-Refresh Latency Capabilities

You might want to re-read my post above to understand better, as well as the Custom Resolution Utility Glossary and the Blur Busters Terminology Glossary.

"Rasterization" = "Scanout" (same thing)

So you can re-read our scanout-related articles as rasterization-related articles. On most panels, cable rasterization is identical to panel rasterization, so the two are usually sync'd (at least at max Hz). I talk a lot about "cable=panel scanout sync" and such.

So most gaming monitors (144Hz and up) now sync cable to panel scanout, since low lag is important. This is now common for modern IPS and TN panels. They don't need to framebuffer a full frame, they only need to linebuffer a few lines (just enough for HDMI/DP micropacket dejitter + color processing + overdrive processing + etc). You can also study the Area 51 forums to learn more about how modern LCDs operate currently.

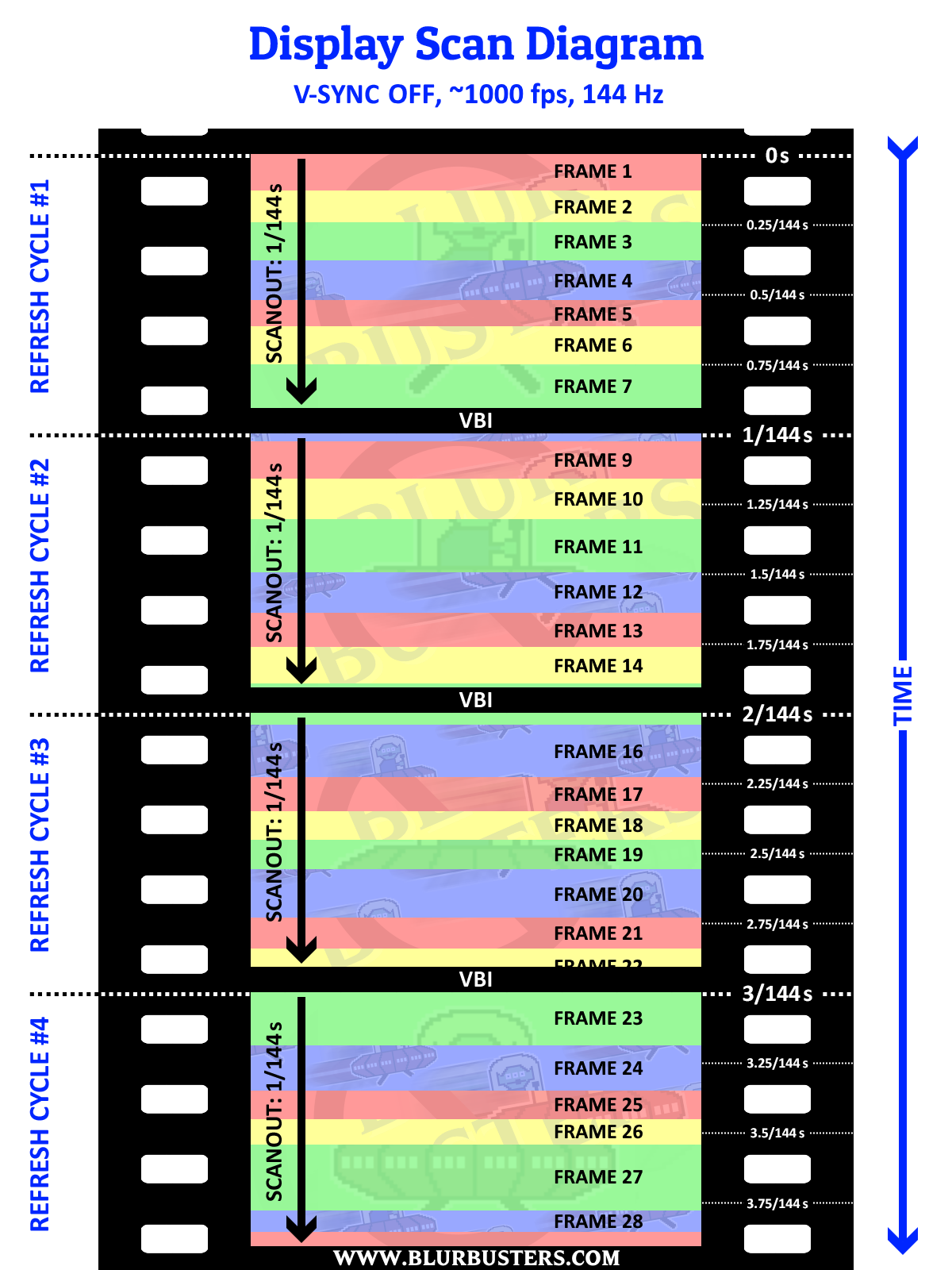

Now, each pixel in this diagram has subrefresh latency on most 240Hz LCDs. In this specific case, Present()-to-photons latency is mostly just cable transmission plus GtG latency; I've seen as little as ~1.5ms of latency between the software API Present() and the light glowing from the pixels.

The first scanline at the top edge of a frameslice has only microseconds of latency on analog VGA (like an old GTX 680 connected via VGA to a CRT), but it adds up to about 2-3ms of latency on, say, a 240Hz 1ms IPS panel or a 240Hz 1ms TN panel. This is because of codec and packet latency (modern digital outputs such as DisplayPort/HDMI are essentially a sort of modem with packetization), and the display motherboard has to buffer a few rows of pixels to dejitter the packets first. Pixel transitions (LCD GtG) take time too.

But the bottom line is that's very sub-refresh; the cable is streaming almost straight onto the panel "in a manner of speaking" with only a small rolling window of a few pixel rows.
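To put "a few pixel rows" in perspective, here is a small arithmetic sketch. It assumes a 1080p 240Hz mode with a Vertical Total of 1125 lines and a hypothetical 8-row linebuffer; real monitors may use different timings and buffer depths.

```python
# Linebuffer vs. framebuffer delay at 1080p 240Hz.
# Assumptions: Vertical Total of 1125 lines (1080 active + 45 blanking) and a
# hypothetical 8-row dejitter/processing linebuffer.

def line_time_us(refresh_hz: float, vertical_total: int) -> float:
    """Duration of one scanline, in microseconds."""
    return 1e6 / (refresh_hz * vertical_total)


def linebuffer_delay_us(rows_buffered: int, refresh_hz: float,
                        vertical_total: int) -> float:
    """Delay added by holding rows_buffered rows before forwarding to the panel."""
    return rows_buffered * line_time_us(refresh_hz, vertical_total)


def framebuffer_delay_us(refresh_hz: float) -> float:
    """Delay added by buffering one entire refresh cycle."""
    return 1e6 / refresh_hz


line_us = line_time_us(240, 1125)            # about 3.7 us per scanline
rows_us = linebuffer_delay_us(8, 240, 1125)  # about 30 us for 8 rows
frame_us = framebuffer_delay_us(240)         # about 4167 us for a full frame
print(f"{line_us:.2f} us/line, {rows_us:.1f} us linebuffer, {frame_us:.0f} us framebuffer")
```

Under these assumptions, buffering a handful of rows costs tens of microseconds, more than 100x less than buffering a whole 240Hz frame.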

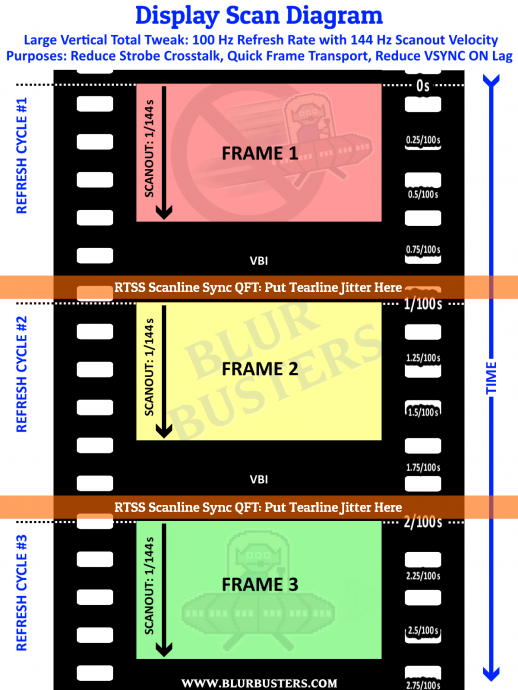

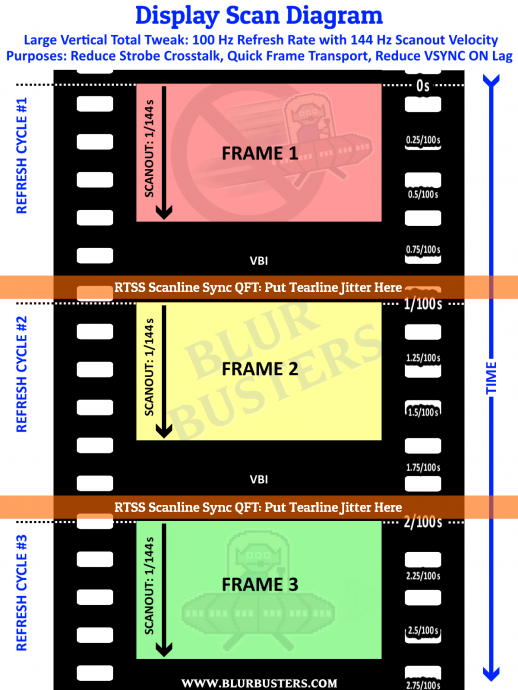

There are situations where lag can appear if your panel is rasterizing at fixed speed (e.g. fixed horizontal scan rate), which means some 240 Hz panels have high 60Hz lag (unless you use

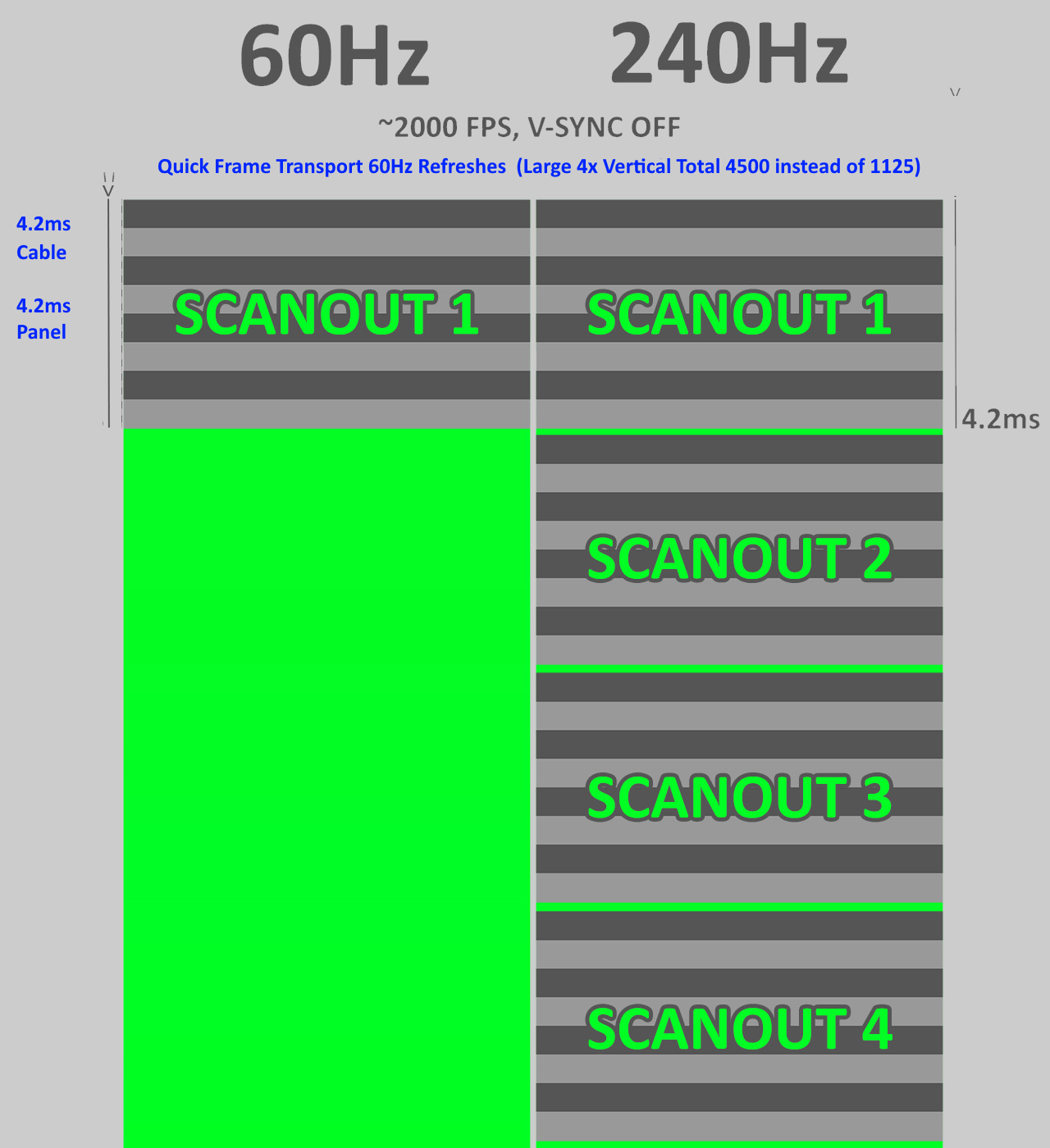

Quick Frame Transport tricks which is simply a high scanrate followed by a long blanking interval. So a 60Hz refresh cycle can be transmitted top-to-bottom over cable in 1/240sec, while simultaneously synchronously refreshed top-to-bottom onto the screen in 1/240sec, for lower VSYNC ON latency, if you need a low-lag low-Hz mode, on a high-Hz-capable panel, for example).
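The Quick Frame Transport arithmetic can be sketched as follows. It assumes a 240Hz mode with a 1125-line Vertical Total (1080 active lines); the helper names are illustrative, not part of any tool. It shows where the Vertical Total 4500 figure in this post's QFT example comes from: the horizontal scanrate stays at its max-Hz value, and every extra line goes into the blanking interval.

```python
# Quick Frame Transport arithmetic (sketch). Assumption: the 240Hz mode uses
# a Vertical Total of 1125 lines with 1080 active lines.

def horizontal_scanrate_hz(refresh_hz: float, vertical_total: int) -> float:
    """Scanlines transmitted per second."""
    return refresh_hz * vertical_total


def qft_vertical_total(base_vt: int, max_hz: float, target_hz: float) -> int:
    """Vertical Total that keeps the max-Hz horizontal scanrate at target_hz.
    The extra lines all go into the blanking interval."""
    return round(base_vt * max_hz / target_hz)


def active_sweep_ms(active_lines: int, hscan_hz: float) -> float:
    """Time to deliver the visible rows top-to-bottom."""
    return 1000 * active_lines / hscan_hz


native_hscan = horizontal_scanrate_hz(240, 1125)   # 270,000 lines/sec
qft_vt = qft_vertical_total(1125, 240, 60)         # 4500 lines
qft_hscan = horizontal_scanrate_hz(60, qft_vt)     # still 270,000 lines/sec
print(qft_vt, qft_hscan == native_hscan, active_sweep_ms(1080, qft_hscan))
```

The 60Hz QFT signal delivers its visible rows in 4.0ms, exactly as fast as the native 240Hz mode, so a fixed-scanrate panel can stay in sync with the cable.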

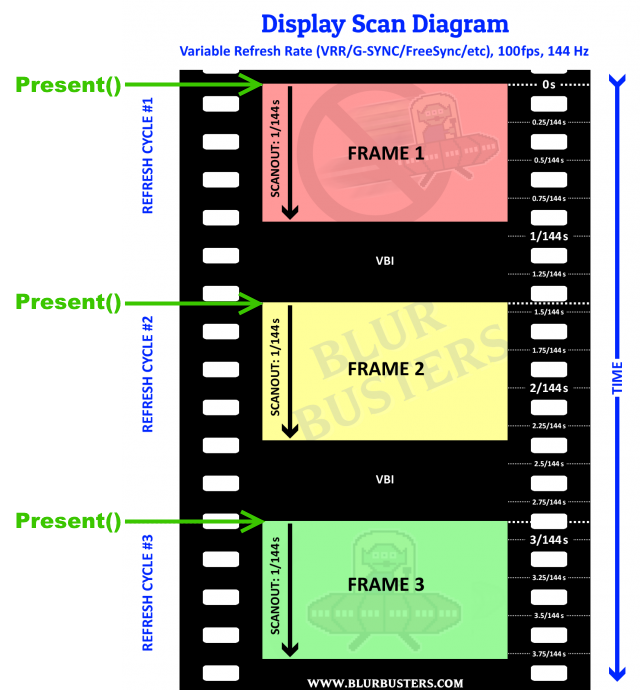

VRR is rasterized too (which is why I can get FreeSync working on certain CRTs via an HDMI-to-VGA adaptor trick); it's just a varying-size blanking interval between refresh cycles. We've been using the same raster method for 100 years, from the first 1920s TVs to current 2020s DisplayPort LCDs, which still use the same raster sequence (in digital form) regardless of refresh rate or VRR technology.
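Here is a minimal sketch of "VRR is just a varying-size blanking interval". It assumes scanlines always go out at a 1080p 240Hz horizontal scanrate of 270,000 lines/sec (1125-line Vertical Total) and a hypothetical 45-line minimum blanking; only the number of blanking lines changes with frametime.

```python
# VRR as a variable-size blanking interval (sketch).
# Assumptions: scanlines always go out at the max-Hz horizontal scanrate
# (270,000 lines/sec for 1080p 240Hz with a 1125-line Vertical Total), and the
# minimum blanking is 45 lines.

HSCAN_HZ = 270_000
ACTIVE_LINES = 1080
MIN_BLANKING = 45


def vrr_blanking_lines(frametime_ms: float) -> int:
    """Blanking lines sent so that the next refresh cycle starts one
    frametime after the previous one."""
    total_lines = round(frametime_ms / 1000 * HSCAN_HZ)
    blanking = total_lines - ACTIVE_LINES
    if blanking < MIN_BLANKING:
        raise ValueError("frametime is shorter than the max-Hz refresh period")
    return blanking


for fps in (240, 144, 60):
    print(fps, "fps ->", vrr_blanking_lines(1000 / fps), "blanking lines")
```

The raster sequence stays identical at every frame rate; a 60fps frame simply waits behind 3420 blanking lines instead of 45.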

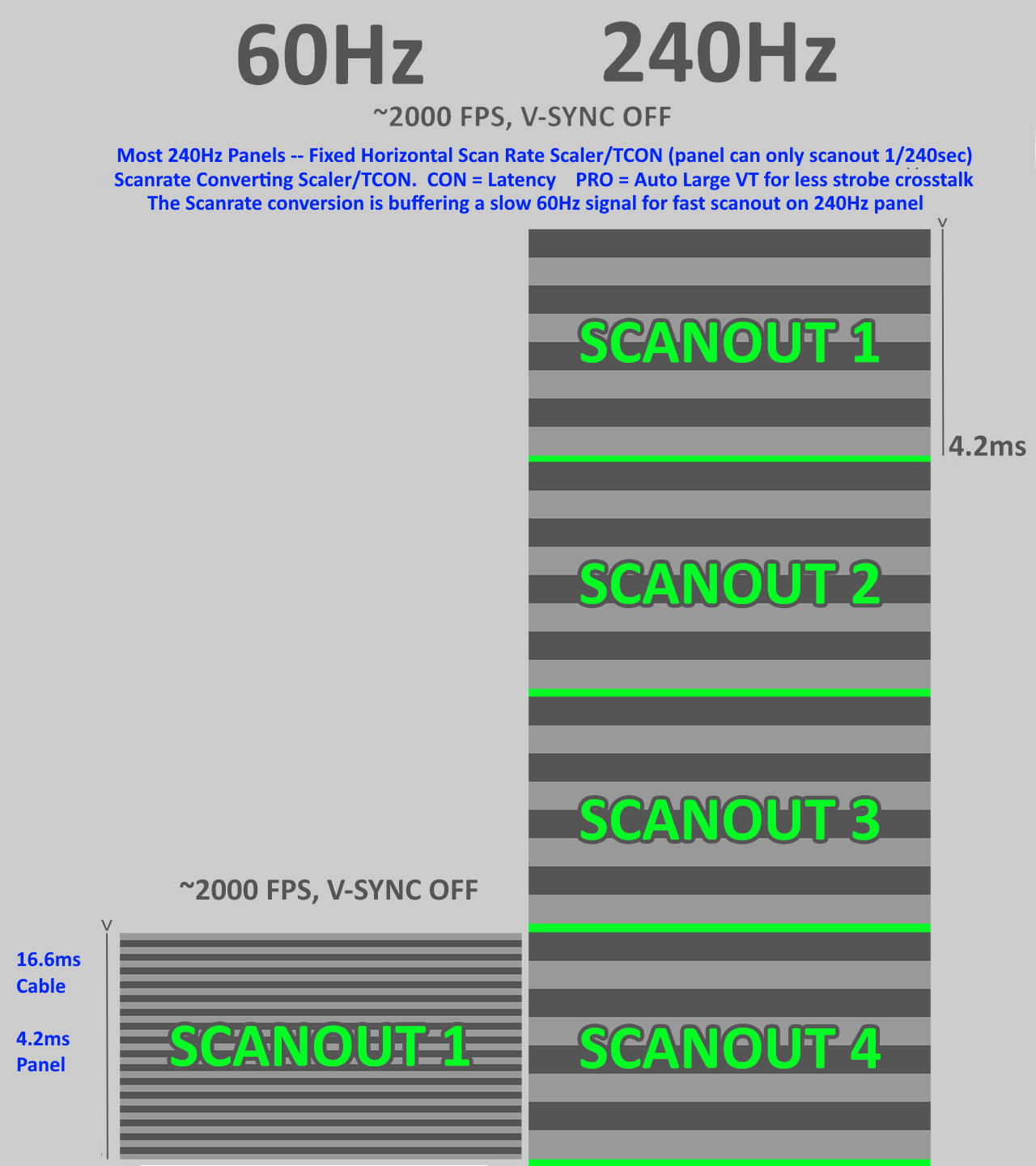

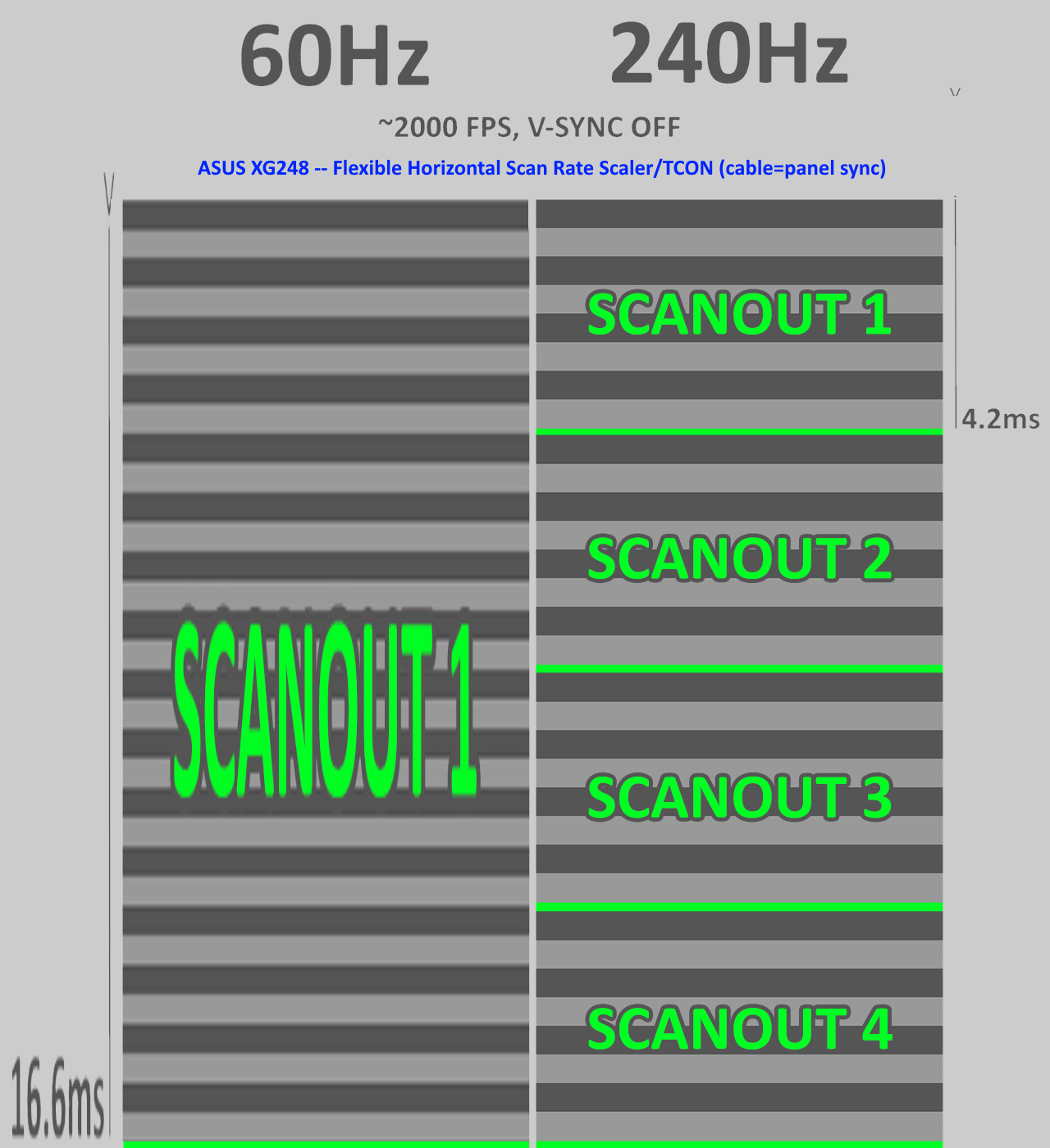

GOOD: Any 240Hz panel run with 240Hz source (usually sync'd rasterization. Cable=panel realtime scanout)

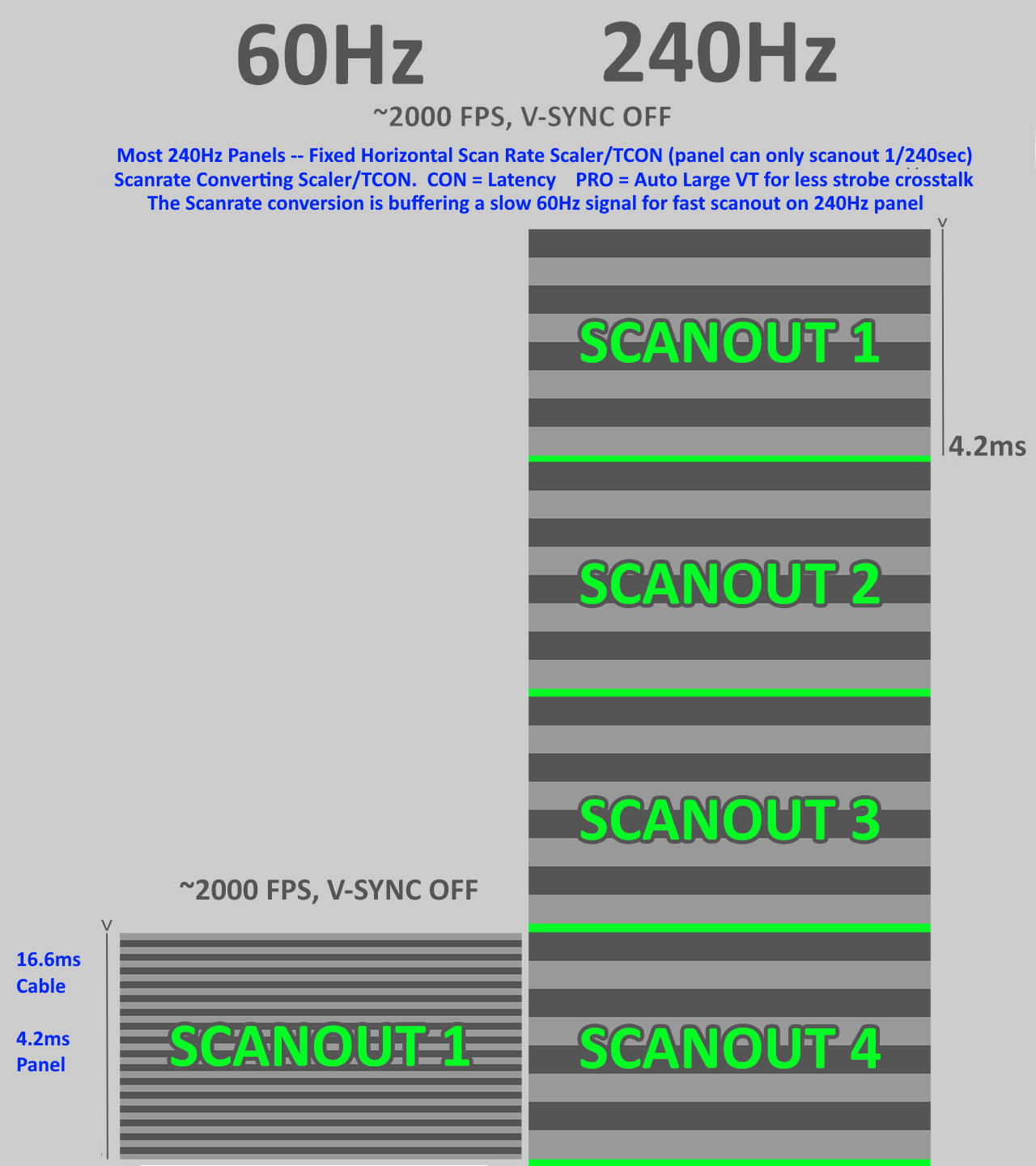

BAD: Fixed-horizontal-scanrate 240Hz panel run at ATSC HDTV 60Hz (out-of-sync rasterization)

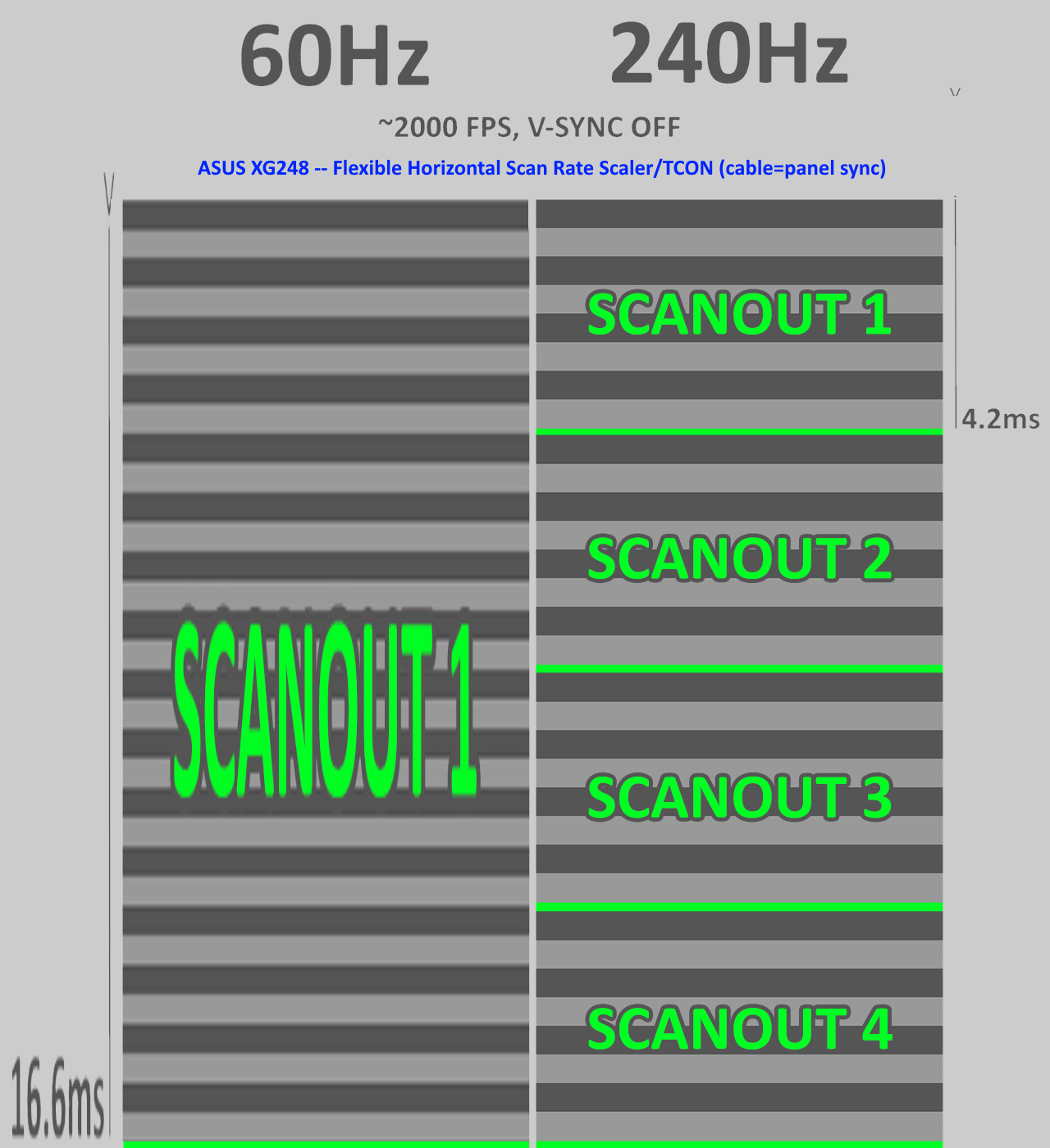

GOOD: Variable-horizontal-scanrate 240Hz panel run at ATSC HDTV 60 Hz (sync'd rasterization. Cable=panel realtime scanout)

BAD: Fixed-horizontal-scanrate 240Hz panel run with XBox/PS4 120Hz (out-of-sync rasterization)

GOOD: Variable-horizontal-scanrate 240Hz panel run with XBox/PS4 120Hz (sync'd rasterization. Cable=panel realtime scanout)

GOOD: Fixed-horizontal-scanrate 240Hz panel run with Quick Frame Transport 60Hz (Vertical Total 4500, which allows 60Hz refresh cycles to be transmitted over the cable in 1/240sec, for panels that can't scan slower than a 1/240sec sweep) (sync'd rasterization. Cable=panel realtime scanout)

Etc.
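The GOOD/BAD list above can be condensed into a rough rule, sketched below under a simplifying assumption: a fixed-scanrate panel can only sweep at its 1/240sec speed, while a variable-scanrate panel can follow any sweep at that speed or slower. The `synced()` helper and its 0.05ms tolerance are hypothetical.

```python
# Sketch: does the panel scanout stay in sync with the cable scanout?
# Assumed simplification: a fixed-scanrate panel can only sweep at its max-Hz
# speed; a variable-scanrate panel can follow any sweep at or slower than that.

PANEL_SWEEP_MS = 1000 / 240  # fastest (and, if fixed, only) panel sweep


def synced(fixed_scanrate: bool, signal_sweep_ms: float, tol_ms: float = 0.05) -> bool:
    if signal_sweep_ms < PANEL_SWEEP_MS - tol_ms:
        return False  # signal is faster than the panel can ever refresh
    if fixed_scanrate:
        return abs(signal_sweep_ms - PANEL_SWEEP_MS) <= tol_ms
    return True


cases = {
    "240Hz source, fixed scanrate": synced(True, 1000 / 240),
    "60Hz ATSC, fixed scanrate": synced(True, 1000 / 60),
    "60Hz ATSC, variable scanrate": synced(False, 1000 / 60),
    "120Hz console, fixed scanrate": synced(True, 1000 / 120),
    "120Hz console, variable scanrate": synced(False, 1000 / 120),
    # QFT: a 1125-line raster swept at 60Hz x 4500 lines/refresh = 270kHz.
    "QFT 60Hz (VT 4500), fixed scanrate": synced(True, 1000 * 1125 / (60 * 4500)),
}
for name, ok in cases.items():
    print("GOOD" if ok else "BAD ", name)
```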

Chief Blur Buster wrote: Crossposting an educational thread.

hmukos wrote: 22 May 2020, 19:02

I would understand this if the multiple frameslices to be scanned out in this cycle were somehow buffered in advance and scanned out only afterwards as a whole. But doesn't scanout happen in real time and show the frame as soon as it is rendered?

There's a cable scanout and a panel scanout, and either one can be faster or slower than the other.

Jorim nailed most of it at the panel scanout level, though there should be two separate scanout diagrams to give context (a scanout diagram for the cable and a scanout diagram for the panel) whenever the two scanouts run at different velocities.

However, some fundamental clarifications are needed.

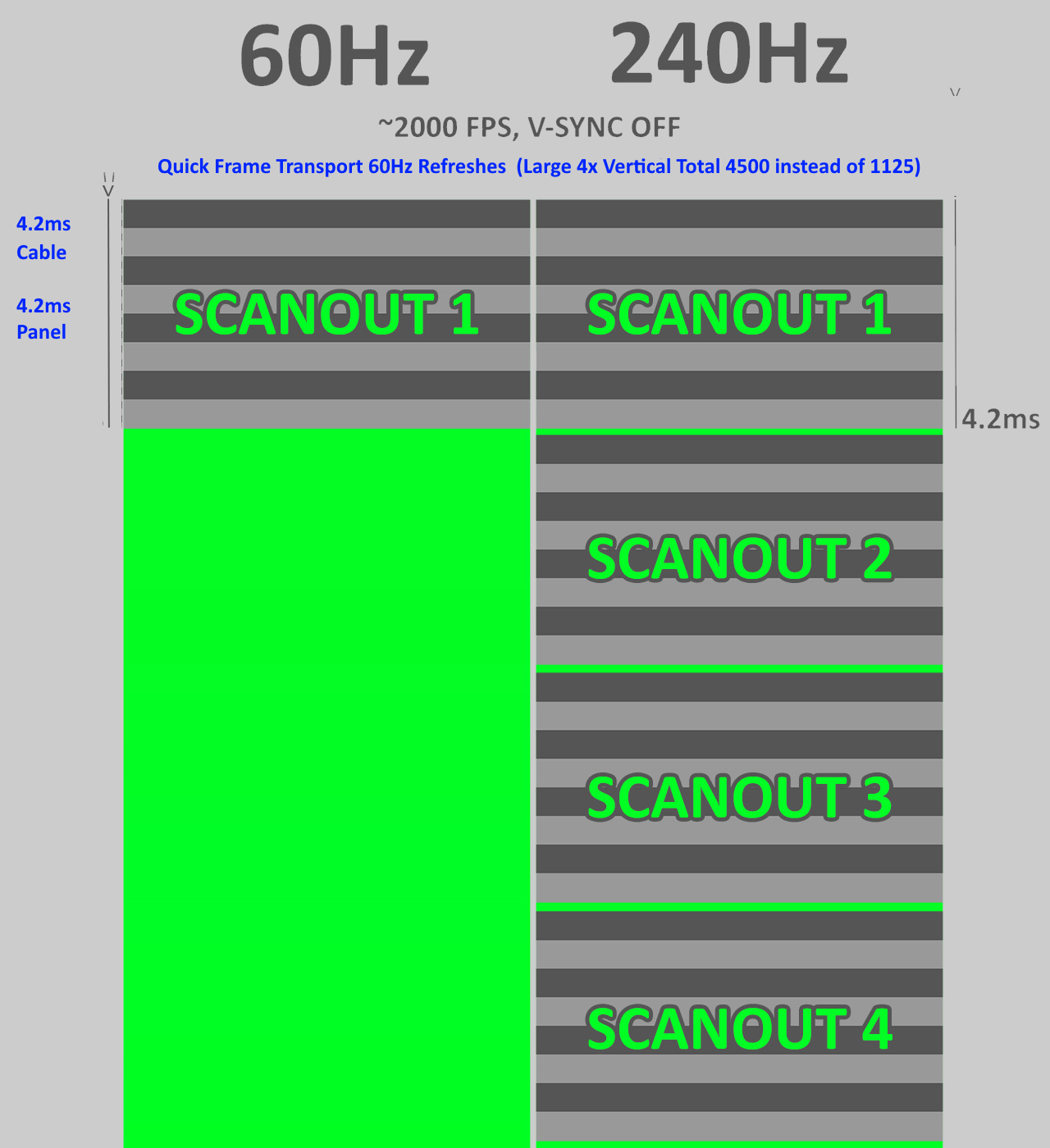

The frameslices are still compressed together, because the frameslices are injected at the cable level, but the monitor motherboard buffers the 60Hz refresh cycle in order to scan it out in 1/240sec.

Fixed-Scanrate Panels

Fixed-scanrate panels create input lag at refresh rates lower than max-Hz, unless Quick Frame Transport is used to compensate.

I would bottom-align the 60Hz like this, however:

Scaler/TCON scan conversion "compresses the scanout downwards" towards the delivery time of the final pixel row. So about 3/4ths of the 60Hz scanout is delivered before the panel begins refreshing at its full 1/240sec velocity.
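The "about 3/4ths" figure follows from bottom-alignment: the panel's fast sweep must finish when the final row arrives over the cable. A minimal sketch, under the simplifying assumption that each sweep takes one full refresh period (blanking ignored):

```python
# Bottom-aligned scan conversion on a fixed-scanrate panel (sketch).
# Simplifying assumption: each sweep takes one full refresh period
# (blanking time is ignored).

def panel_start_delay_ms(signal_hz: float, panel_hz: float) -> float:
    """How long after the cable starts delivering a refresh cycle the panel
    starts its fast sweep, so that both finish on the final pixel row."""
    return 1000 / signal_hz - 1000 / panel_hz


def fraction_delivered_before_panel_starts(signal_hz: float, panel_hz: float) -> float:
    return panel_start_delay_ms(signal_hz, panel_hz) / (1000 / signal_hz)


delay = panel_start_delay_ms(60, 240)                   # about 12.5 ms
frac = fraction_delivered_before_panel_starts(60, 240)  # about 0.75
print(f"panel waits {delay:.1f} ms; {frac:.0%} of the frame is already buffered")
```

The top row therefore lights up about 12.5ms later than it would with cable=panel synced scanout, while the bottom row is on time; that skew is the buffering lag of fixed-scanrate panels at 60Hz.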

Also, this is sometimes done intentionally by panels with strobed or scanning backlights, to artificially enlarge the VBI and reduce strobe crosstalk (double-image effects): the VBI becomes large enough to hide LCD GtG pixel response between refresh cycles (hiding GtG while the backlight is OFF).

Flexible Scanrate Panels

However, some panels are scanrate multisync, such as the ASUS XG248, which has excellent low 60Hz console lag:

Learn more about Quick Frame Transport


To compensate for this buffering lag, you can use Quick Frame Transport (Large Vertical Totals) to lower the latency of low refresh rates on 240Hz panels:

Custom Quick Frame Transport Signals.

The Quick Frame Transport creates this situation:

This can dramatically reduce strobe lag, but Microsoft and NVIDIA need to fix their graphics drivers to release frame Present() at the end of VBI. Look at the large green block: frame Present() needs to be at the END of the green block, to be closer to the NEXT refresh (less lag!).

Microsoft / NVIDIA Limitation Preventing QFT Lag Reductions

Unfortunately, Quick Frame Transport currently only reduces lag if you simultaneously use RTSS Scanline Sync (with negative tearline indexes) to move Present() from the beginning of VBI to the end of VBI. So hacks have often been needed.

This simulates VSYNC ON with an input-delayed Present(), placed as late as possible into the vertical blanking interval.

Present() is the software API, built into Windows and all graphics drivers, that presents a frame from software to the GPU. During VSYNC ON, Present() normally blocks (doesn't return to the calling software) until the blanking interval. However, it releases at the very beginning of VBI (right after the final visible scan line). Many video games do the next keyboard/mouse read right after Present() returns. So it's in our favour to delay returning from Present() until the very end of the VBI, which moves input reads closer to the next refresh cycle. Thus, delayed Present() return = lower input lag, because keyboard/mouse input is read closer to the next refresh cycle.
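A rough timing sketch of why a later Present() return helps. It assumes VSYNC ON with no extra queued frames, a game that reads input right after Present() returns, and a render time short enough to finish before the next VBI begins. The example signal is the QFT 60Hz mode (Vertical Total 4500, 1080 active lines, 270kHz scanrate); the helper names are hypothetical.

```python
# Why a later Present() return lowers VSYNC ON lag (sketch).
# Assumptions: VSYNC ON with no extra queued frames, input read right after
# Present() returns, render finishes before the next VBI begins.
# Example signal: QFT 60Hz, Vertical Total 4500, 1080 active lines, 270kHz.

def refresh_timing_ms(hscan_hz: float, vertical_total: int, active_lines: int):
    period_ms = 1000 * vertical_total / hscan_hz
    vbi_ms = 1000 * (vertical_total - active_lines) / hscan_hz
    return period_ms, vbi_ms


def input_to_scanout_ms(period_ms: float, vbi_ms: float, return_offset_ms: float) -> float:
    """Input read (at Present() return, return_offset_ms into the VBI) to the
    start of visible scanout of the frame rendered with that input.
    That frame is flipped at the next VBI and becomes visible when that VBI ends."""
    if not 0 <= return_offset_ms <= vbi_ms:
        raise ValueError("Present() must return somewhere inside the VBI")
    return period_ms + vbi_ms - return_offset_ms


period, vbi = refresh_timing_ms(270_000, 4500, 1080)
early = input_to_scanout_ms(period, vbi, 0.0)  # released at start of VBI
late = input_to_scanout_ms(period, vbi, vbi)   # released at end of VBI
print(f"VBI {vbi:.1f} ms: start-of-VBI return {early:.1f} ms, end-of-VBI return {late:.1f} ms")
```

In this model the saving equals the whole VBI (about 12.7ms for this QFT mode), because input is sampled that much closer to the refresh that displays it. The trade-off is that the game now has only the active scanout time (about 4ms here) to render before the next VBI.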

A third-party utility called RTSS has a new mode called "Scanline Sync" that can be used for Do-It-Yourself Quick Frame Transport.

That dramatically reduces VSYNC ON input lag (i.e. any sync technology that's not VSYNC OFF) on both fixed-scanrate and flexible-scanrate panels, because the 60Hz scanout velocity becomes the same as the native 240Hz scanout velocity.

Great for reducing strobe lag, too!

(Not everyone at Microsoft, AMD, and NVIDIA fully understand this.)

We successfully reduced the input lag of the ViewSonic XG270 in PureXP+ mode by 12 milliseconds, while ALSO reducing strobe crosstalk, with this technique.

ViewSonic XG270 120Hz PureXP+ Quick Frame Transport HOWTO.

Earlier, I tried large Front Porches, hoping that Microsoft input-delayed to the first scanline of VBI before unblocking the return from the Present() API call. Unfortunately, Microsoft/NVIDIA unblocks Present() during VSYNC ON at the END of the visible refresh (before the first line of the Front Porch). Arrrrrrgh. That turned Easy QFT into Complex QFT.

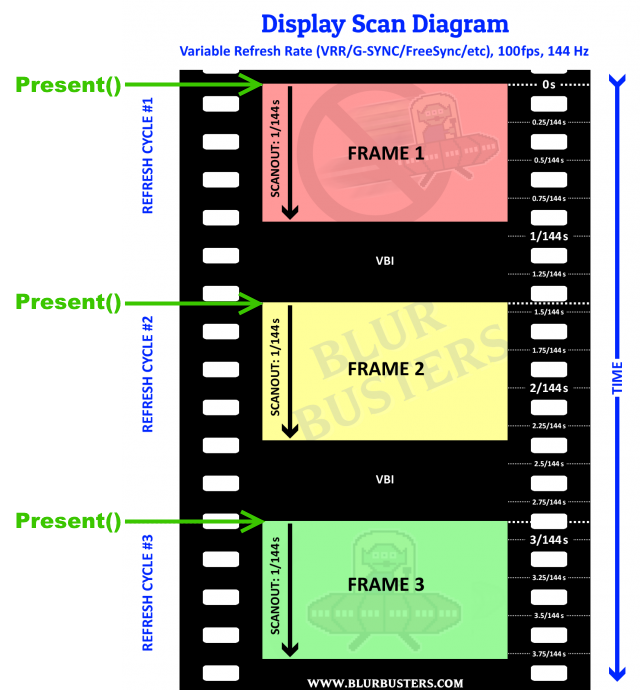

But Wait! G-SYNC and FreeSync are Natural Quick Frame Transports


Want an easier Quick Frame Transport? Just use a 60fps cap at 240Hz VRR. All VRR GPUs always transmit refresh cycles at maximum scanout velocity. Present() immediately starts delivering the first scanline at that instant (if the monitor is not currently busy refreshing or repeat-refreshing), since the monitor is slaved to the VRR signal.

Present() is already effectively connected to the end of VBI during VRR operation, unless the monitor is still busy refreshing (frametime faster than max Hz) or busy repeat-refreshing (frametime slower than min Hz). As long as frametimes stay within the panel's VRR range, software is 100% controlling the timing of the monitor's refresh cycles!
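The three cases above (within VRR range, faster than max Hz, slower than min Hz) can be sketched as a tiny simulation. It assumes a hypothetical 48-240Hz VRR range, a refresh that starts the instant it is allowed to, and a forced repeat refresh whenever the panel has waited one min-Hz period.

```python
# Sketch of how a VRR monitor turns Present() calls into refresh cycles.
# Assumptions: a 48-240Hz VRR range, transmission starts instantly when
# allowed, and a repeat refresh whenever the panel waits one min-Hz period.

def vrr_refreshes(present_ms, min_hz=48.0, max_hz=240.0):
    """Return (kind, start_ms) for each refresh cycle the monitor performs."""
    min_period = 1000 / max_hz  # a refresh cycle is busy for this long
    max_period = 1000 / min_hz  # longest wait before a forced repeat refresh
    refreshes = []
    last = None
    for t in present_ms:
        if last is not None:
            # Panel could not wait any longer: it repeat-refreshed the old frame.
            while t > last + max_period:
                last += max_period
                refreshes.append(("repeat", last))
            # Monitor still busy scanning out? The new frame has to wait.
            t = max(t, last + min_period)
        refreshes.append(("new", t))
        last = t
    return refreshes


print(vrr_refreshes([i * 1000 / 60 for i in range(4)]))  # starts equal Present() times
print(vrr_refreshes([0.0, 2.0]))   # too soon: waits until about 4.17 ms
print(vrr_refreshes([0.0, 22.0]))  # too late: repeat at about 20.83 ms, new frame at about 25 ms
```

Within the VRR range, each refresh starts exactly at the Present() time, which is the "software controls the refresh cycle" behaviour described above.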

This is why emulator users love high-Hz G-SYNC displays for lower emulator lag.

60fps at 240Hz has much lower latency than a 60Hz monitor, because the ultrafast 1/240sec scanout is automatically included with all 60fps material on all VRR monitors! The magic of delivering AND refreshing a "60Hz" refresh cycle in only 4.2 milliseconds (both cable and panel) means ultra-low latency for capped VRR.
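A quick way to quantify the scanout part of this: average, over all rows, the time from the start of a refresh cycle until that row is delivered. This sketch assumes 1080 rows and ignores processing and GtG, so it isolates only the scanout velocity difference.

```python
# Average scanout delay across the screen (sketch). Assumptions: 1080 rows,
# each row's delay measured from the start of the refresh cycle to the moment
# that row is delivered; processing and GtG are ignored.

def mean_scanout_delay_ms(sweep_ms: float, rows: int = 1080) -> float:
    """Average over all rows of the time until that row is scanned out."""
    return sum(sweep_ms * (r + 0.5) / rows for r in range(rows)) / rows


fixed_60hz = mean_scanout_delay_ms(1000 / 60)  # 60Hz monitor: 1/60sec sweep
vrr_60fps = mean_scanout_delay_ms(1000 / 240)  # 60fps on 240Hz VRR: 1/240sec sweep
print(f"60Hz monitor: {fixed_60hz:.2f} ms, 60fps at 240Hz VRR: {vrr_60fps:.2f} ms")
```

The average works out to half the sweep time: about 8.33ms for a 1/60sec sweep versus about 2.08ms for a 1/240sec sweep, a 4x reduction for the same 60fps content.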

This is why VRR is the world's lowest latency "Non-VSYNC-OFF" sync technology.

VRR doesn't help when you need to use fixed-Hz (consoles, strobing, non-VRR panels).

This Post Helps You:

- Understand Flexible-Scanrate LCD panels (most 1080p 144Hz panels, few 1080p 240Hz panels)

- Understand Fixed-Scanrate LCD panels (most 1080p 240Hz panels, most 144Hz 1440p panels)

- Understand Quick Frame Transport

- Understand Quick Frame Transport's ability to workaround low-Hz lag on Fixed-Scanrate Panels

- Understand VRR

- Understand How VRR is similar to Quick Frame Transport

- If you are a software developer, Understand that software triggers a variable refresh monitor's refresh cycles via Present()

Variables matter! You can get lag if you don't know how to choose the right gaming monitor to pair up with your device, as not all 240Hz panels can do low-Hz properly.

To keep cable=panel synchronous you want:

(A) Choose the right sync technology for your application. VSYNC OFF, VSYNC ON, RTSS Scanline Sync, GSYNC, FreeSync, etc. They all have pros/cons. CS:GO might work better with VSYNC OFF, while stuttery games might work better with GSYNC (if stutter is so bad it hurts your aiming).

(B) Understand absolute latency is not everything for every single game. You've got other latencies involved such as latency jitter (aka stutter), so you may need to control things a bit via frame rate caps or alternative sync technologies, etc.

(C) Choose the right refresh rate. If you don't want to research which high-Hz panels can do low lag at low-Hz, then try to always use max refresh rate. 60fps at 240Hz is lower lag than 60fps at 60Hz.

(D) If you need low lag at lower Hz, choose the right workaround (a variable-scanrate-capable panel OR Quick Frame Transport). Remember that consoles can't do Quick Frame Transport tricks with custom resolution utilities, but PCs can.

(E) Most latency comes from other parts of the latency chain rather than the display nowadays.

TL;DR: In fact, with proper user configuration of a typical 240Hz gaming monitor, 90%+ of the latency is no longer the display's fault (for recent 240Hz LCDs run at 240Hz).

Those who want to read more about the Present()-to-Photons black box can read www.blurbusters.com/area51 and our Area51 forums. We talk a lot about rasterization stuff there (search keyword "scanout").