usually knowledgeable, but struggling to understand


smoothnobody

- Posts: 10

- Joined: 13 Feb 2022, 17:04


i just got a QN90A tv + RTX 3080. i've spent the last few days reading about vsync, gsync, adaptive sync, fast sync, VRR. i believe i have a limited understanding, but not quite confident to say i know what i'm talking about. i believe i have been reading outdated information, or just plain wrong information. please correct / educate me.

vsync - limits FPS to refresh rate. adds input lag. if vsync limits FPS to refresh rate, how is this different from manually setting the "max frame rate" to your refresh rate? i tried this, and i got tearing. my understanding must be lacking. i also heard when vsync is on you are actually capping your FPS to half your refresh rate. i turned on vsync, my FPS was not half my refresh rate, so i'm not inclined to believe this, but this has come up enough times that makes me wonder if there is truth to this.

fast sync - drops frames in excess of your frame rate. very low input lag. makes your GPU run at 100%. only useful when you produce more FPS than your refresh rate. i tested this with a game that produced more FPS than my refresh rate. i still got tearing. but when i capped my FPS with fast sync, no tearing. confused again. i thought the use case for fast sync was when you have too many frames, which i did in the first test. when i use the FPS limiter, i did not have too many frames, which seems to go against the use case for fast sync, producing more FPS than your refresh rate.

adaptive sync - this is the opposite of vsync. instead of limiting the FPS to match the refresh rate, it limits/adjusts the refresh rate to match the FPS on demand.

gsync - same concept as adaptive sync. uses nvidia proprietary hardware. because it's hardware controlled vs software controlled there are tighter tolerances / higher standards / better performance. this is often seen as the end game / holy grail that solves both problems of tearing and input lag. a few things that confuse me about gsync, i thought this replaces vsync. but i've read several threads now that say it does not replace vsync, you use gsync and vsync together. i've also read other threads that say you use both plus a FPS limiter set a few FPS below your max refresh rate. even with the tech that is considered the best, there is still uncertainty on how to use it.

VRR - same concept as adaptive sync. but can't figure out why this exists. my best guess is this is basically marketing the same thing different ways, or this is geared towards consoles. nvidia has gsync, amd has freesync, consoles have VRR. they all have the same goal, but accomplish it differently or are aimed towards different platforms. one thing i can't figure out about VRR, my tv supports VRR, but not gsync. however, whether i'm in game mode, or PC mode, i do not see a way to turn VRR on in my TV or nvidia control panel. my TV always says VRR off. to make it even more confusing, with my tv game mode on, i can turn gsync on in nvidia control panel, even though it's not supported.

sorry for the long post. i know this has been discussed millions of times. i'm not just posting without doing any reading or making no effort to understand for myself. i've been reading almost the whole week. either i'm stupid or this is confusing or i'm reading bad information or missing important pieces of information cause i am really struggling to figure out the differences between these technologies and when it's appropriate to use one over the other or one in conjunction with another. hopefully you guys can straighten me out cause i just want to play my games the right way.


- Chief Blur Buster

- Site Admin

- Posts: 12056

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: usually knowledgeable, but struggling to understand

Welcome to Blur Busters!

smoothnobody wrote: ↑13 Feb 2022, 20:27

> i just got a QN90A tv + RTX 3080. i've spent the last few days reading about vsync, gsync, adaptive sync, fast sync, VRR. i believe i have a limited understanding, but not quite confident to say i know what i'm talking about. i believe i have been reading outdated information, or just plain wrong information. please correct / educate me.
>
> vsync - limits FPS to refresh rate. adds input lag. if vsync limits FPS to refresh rate, how is this different from manually setting the "max frame rate" to your refresh rate? i tried this, and i got tearing. my understanding must be lacking. i also heard when vsync is on you are actually capping your FPS to half your refresh rate. i turned on vsync, my FPS was not half my refresh rate, so i'm not inclined to believe this, but this has come up enough times that makes me wonder if there is truth to this.

We're the pros in explaining this bleep.

Q: Why do VSYNC OFF and VSYNC ON look different even for framerate=Hz?

Short Answer:

- Cables do not transmit all pixels at the same time.
  - Pixels are transmitted one at a time, in the same order you read a book: left to right, top to bottom.
- Not all pixels on a screen refresh at the same time.
  - Displays are refreshed one pixel row at a time, also in book order: left to right, top to bottom.
- VSYNC OFF means a new frame can interrupt/splice in the middle of the previous frame, rather than between frames. This is what causes tearing.

Long Answer:

Here's a high speed video of a screen refreshing. I'm displaying 60 different pictures a second, one rapidly after the other (via www.testufo.com/scanout). Observe how the screen refreshes top to bottom. It happens so fast that, even at 1080p 60Hz, the next pixel row is refreshed only about 1/67,000th of a second after the previous pixel row. So you need a very fast 1000fps+ high speed camera to really capture this scanout behavior.
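As a rough check of that 1/67,000th figure: rows per second is the refresh rate times the total number of rows per refresh cycle, hidden rows included. A minimal Python sketch, assuming the standard 1080p 60Hz timing with 1125 total rows (1080 visible plus 45 blanking rows); `row_time_us` is just an illustrative helper name:

```python
def row_time_us(refresh_hz: float, total_rows: int) -> float:
    """Time from the start of one pixel row to the start of the next, in microseconds.

    total_rows includes the hidden blanking rows, not just the visible ones.
    """
    rows_per_second = refresh_hz * total_rows
    return 1_000_000 / rows_per_second


# Assumed 1080p 60Hz timing: 1080 visible rows + 45 blanking rows = 1125 total.
rows_per_second = 60 * 1125
print(rows_per_second)                  # 67500 rows per second
print(round(row_time_us(60, 1125), 2))  # 14.81 microseconds per row
```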

This is also how video cables such as VGA, HDMI and DisplayPort transmit refresh cycles: as a serialization of a 2D image over a 1D wire, like laying mosaic tiles one tile at a time, or coloring graph paper one square at a time in color-by-numbers fashion. This is the very old-fashioned "raster" scanout, which has been used for about a century, ever since the first experimental TVs of the 1920s. Even today, digital displays still refresh in the same "book order" sequence (left to right, top to bottom), both when transmitting pixels over the cable and when refreshing pixels onto the screen.

A computer transmits a refresh cycle from computer to monitor one pixel row at a time.

VSYNC OFF -- a new frame can interrupt the current refresh cycle (splice mid-cable-transmission)

VSYNC ON -- a new frame can only splice between refresh cycles (splice in VBI)

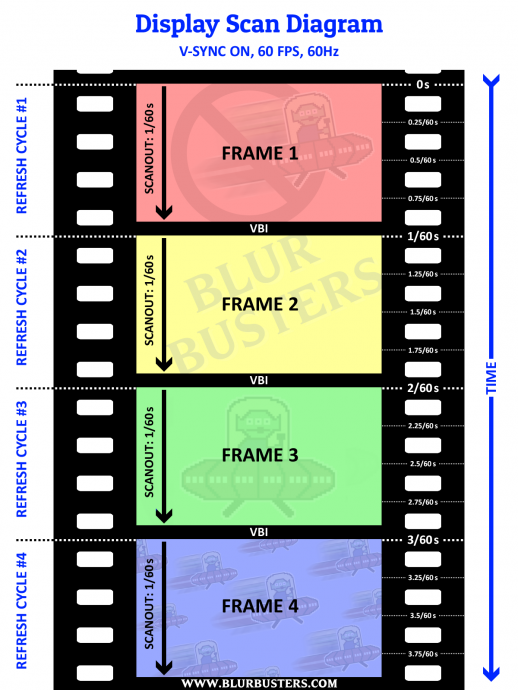

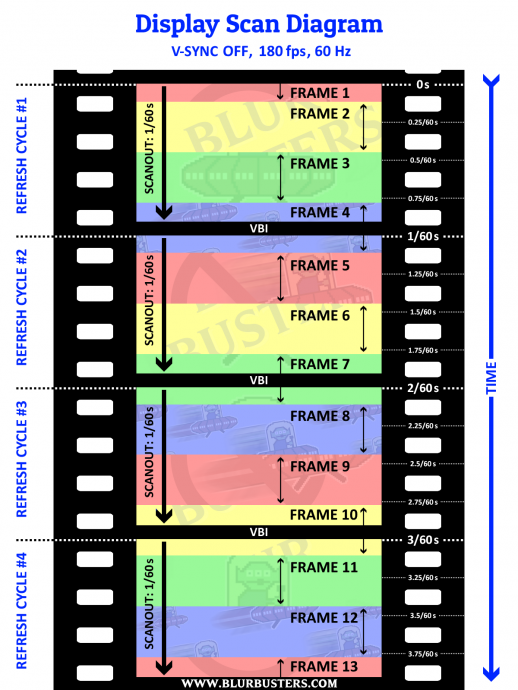

The diagrams above illustrate VSYNC ON and VSYNC OFF.

If you cap framerate=Hz (e.g. 60fps at 60Hz) with VSYNC OFF, each new frame may splice in at the same location in the middle of the screen every refresh (a stationary or jittery tearline), especially with microsecond-accurate frame rate capping.

Now if the frame rate cap is inaccurate and jittery (one frame is 1/59sec and the next frame is 1/61sec), the tearline will jump around vertically, depending on how far the frame rate differs from Hz. A tearline will move even if fps and Hz are only 0.001 apart.
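A toy Python model of why the tearline sits still or drifts with VSYNC OFF. It assumes perfectly even frame presentation starting exactly at the top of a refresh cycle (in real life the starting offset is arbitrary, so a matched cap usually parks the tearline somewhere mid-screen) and a constant scanout row rate; the function names are hypothetical:

```python
def tearline_rows(fps: float, hz: float, total_rows: int, n_frames: int) -> list[int]:
    """Row (counted from the start of the refresh cycle) where each new frame splices in.

    Sketch assumptions: VSYNC OFF, frames presented at perfectly even 1/fps
    intervals starting at the top of a refresh cycle, and scanout running at
    a constant row rate (total_rows includes blanking rows).
    """
    rows = []
    for k in range(n_frames):
        # Presentation time of frame k, measured in refresh cycles, is k * hz / fps.
        # The fractional part is how far into the current refresh cycle we are.
        phase = (k * hz / fps) % 1.0
        rows.append(int(phase * total_rows))
    return rows


def tearline_sweep_seconds(fps: float, hz: float) -> float:
    """Seconds for the tearline to drift through one whole refresh cycle."""
    if fps == hz:
        return float("inf")  # perfectly matched: the tearline stays put
    return 1.0 / abs(fps - hz)


print(tearline_rows(60, 60, 1125, 4))             # [0, 0, 0, 0]: stationary
print(tearline_rows(120, 60, 1125, 4))            # [0, 562, 0, 562]
print(round(tearline_sweep_seconds(60.001, 60)))  # 1000 seconds per sweep
```

Even a 0.001 fps mismatch still moves the tearline; it just takes about 1000 seconds to travel through one full refresh cycle.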

60Hz is not always exactly 60. Visit www.testufo.com/refreshrate#digits=6, which uses a microsecond clock, to see how far from exactly 60 it can be!

Moreover, VSYNC ON is needed to steer the tearline off the screen into the VBI (the signal spacer between refresh cycles). Other custom tearline-steering software, like RTSS Scanline Sync or Special-K Latent Sync, can also simulate VSYNC ON via VSYNC OFF using complex programming, but the explanation is beyond the scope of this thread and is covered in other, more advanced threads.

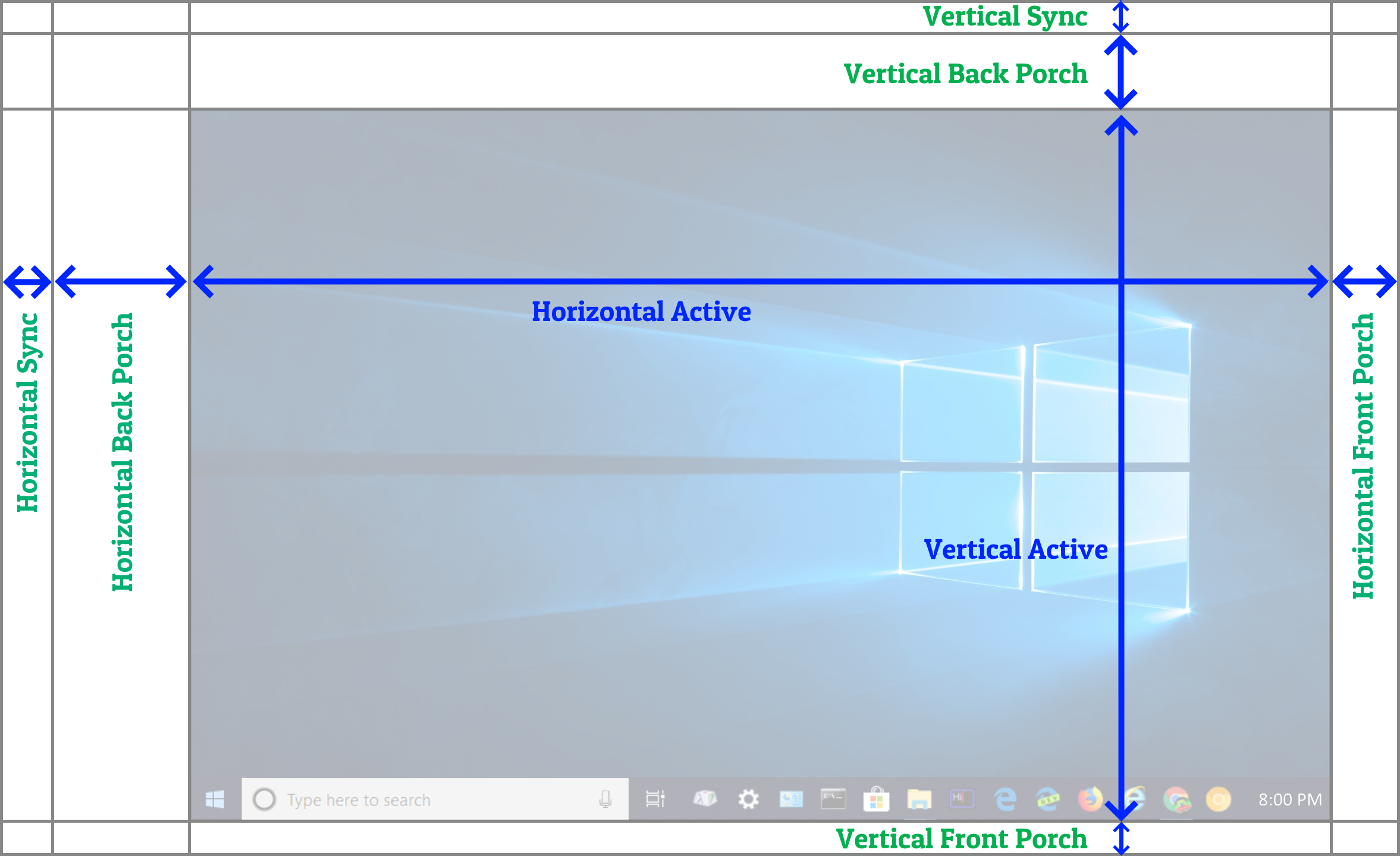

Now, if you ever use a custom resolution utility, you'll notice funky settings such as "Front Porch" or "Back Porch". Porches are simply overscan (hidden pixels beyond the edges of the screen). This was more common in the analog era, but it is still used by digital displays and digital cables too. It's like a larger virtual resolution with the real visible image embedded within. That's how refresh cycles are transmitted over a video cable to help the monitor know how to refresh the image (still a sequential 1D transmission of a 2D image, like a book or a text page: transmitted left to right, top to bottom).

Porch is the overscan, and sync intervals are simply signals (a glorified comma separator between pixel rows and between refresh cycles) that tell the display to begin a new pixel row or a new refresh cycle.

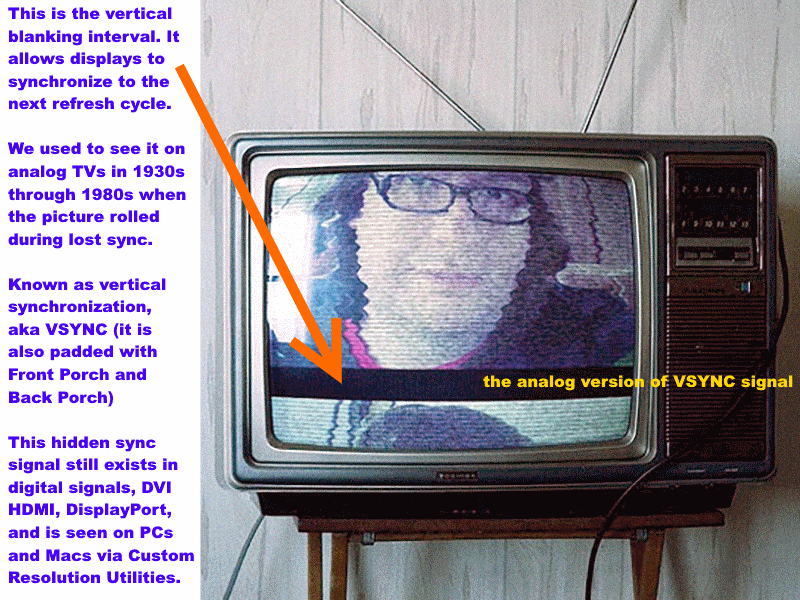

If you are 40+ years old, you probably spent a lot of time with a CRT tube in the past. Maybe you saw the picture roll (this is called a VHOLD loss, aka loss of "Vertical Hold") and saw the black bar separating refresh cycles! That's the VBI (VBI = Vertical Blanking Interval = all vertical porches/sync = offscreen stuff separating each refresh cycle).

This is true for both analog and digital signals. For analog, large sync intervals and large overscan were used to compensate for the non-square-shaped CRT tube screen, as well as for the slowness of the electron beam moving to a new pixel row (scanline) or a new refresh cycle.

VBI = Vertical Blanking Interval = (Vertical Back Porch + Vertical Sync + Vertical Front Porch)
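The same formula, applied to actual numbers. This Python sketch adds up porches and sync for the standard CTA-861 1920x1080 60Hz timing; other modes and custom resolutions use different values, and the `Timing` class is only an illustration:

```python
from dataclasses import dataclass


@dataclass
class Timing:
    """One axis of a video timing, in pixels (horizontal) or rows (vertical)."""
    active: int
    front_porch: int
    sync: int
    back_porch: int

    @property
    def blanking(self) -> int:
        # Vertical: VBI = back porch + sync + front porch (same idea horizontally)
        return self.front_porch + self.sync + self.back_porch

    @property
    def total(self) -> int:
        return self.active + self.blanking


# Standard CTA-861 1920x1080 @ 60Hz timing.
h = Timing(active=1920, front_porch=88, sync=44, back_porch=148)
v = Timing(active=1080, front_porch=4, sync=5, back_porch=36)
refresh_hz = 60

pixel_clock_hz = h.total * v.total * refresh_hz
vbi_ms = v.blanking / (v.total * refresh_hz) * 1000

print(h.total, v.total)               # 2200 1125
print(pixel_clock_hz)                 # 148500000 (148.5 MHz)
print(v.blanking, round(vbi_ms, 3))   # 45 rows, about 0.667 ms per VBI
```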

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on: BlueSky | Twitter | Facebook

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

- Chief Blur Buster


Re: usually knowledgeable, but struggling to understand

Part 2 (of probably 4 parts)


smoothnobody wrote: ↑13 Feb 2022, 20:27

> fast sync - drops frames in excess of your frame rate. very low input lag. makes your GPU run at 100%. only useful when you produce more FPS than your refresh rate. i tested this with a game that produced more FPS than my refresh rate. i still got tearing. but when i capped my FPS with fast sync, no tearing. confused again. i thought the use case for fast sync was when you have too many frames, which i did in the first test. when i use the FPS limiter, i did not have too many frames, which seems to go against the use case for fast sync, producing more FPS than your refresh rate.

Short Answer:

Sometimes these technologies are simply dynamically switching between VSYNC ON and VSYNC OFF depending on what frame rate is occurring on the screen.

Long Answer:

Fast Sync often uses an algorithm to try to keep the tearing invisible when framerates are close to Hz. It essentially simulates VSYNC ON whenever framerate is near Hz, but then either drops excess frames (if using tear-free Fast Sync) or splices the frames (behaving as VSYNC OFF when framerates are above Hz). There are many different variants of driver-based Fast Sync, which come under many different brand names like "Triple Buffering", "Enhanced Sync" or "Adaptive VSYNC", and they sometimes all behave slightly differently, dynamically switching in realtime between VSYNC ON, VSYNC OFF and Fast Triple Buffering. Even twiddling with frame rate caps or NVInspector settings can change the appearance of Fast Sync: you're essentially changing the variables (such as frame rate) that the sync technology uses to dynamically choose which behind-the-scenes sync technology to use.

Glossary

- VSYNC ON = see previous post. (Version where framerate is throttled to Hz).

- VSYNC OFF = see previous post

- Fast Triple Buffering = VSYNC ON with a special modification.

...where the latest undisplayed buffered frame is replaced with a fresher undisplayed frame if a new frame finishes rendering before the older one has been delivered to the display. For example, if the GPU is fast enough (200fps at 60Hz), it can render 3 or 4 frames before the next refresh cycle. The fast version of triple buffering ensures that only the newest frame gets displayed, in full (with no VSYNC OFF tearing/splicing effect).
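The drop-the-stale-frame behavior of fast triple buffering can be sketched in a few lines of Python. This is a simplified model, not any vendor's driver algorithm: it assumes frames finish at perfectly even intervals and that the newest finished frame is shown, whole, at each refresh cycle boundary:

```python
def fast_triple_buffering(render_fps: int, hz: int, seconds: int = 1):
    """Simulate which rendered frames reach the screen with fast triple buffering.

    Simplifying assumptions: frame n finishes at time n / render_fps, and at each
    refresh cycle boundary (time r / hz) the newest finished frame is displayed
    in full. Older, never-displayed frames are simply dropped.
    """
    shown = []
    for r in range(1, hz * seconds + 1):
        # Newest frame finished by refresh r (integer math avoids rounding issues).
        newest = (r * render_fps) // hz
        if newest >= 1 and (not shown or newest != shown[-1]):
            shown.append(newest)
        # If no new frame finished, the previous frame is simply shown again.
    dropped = render_fps * seconds - len(shown)
    return shown, dropped


shown, dropped = fast_triple_buffering(200, 60)
print(shown[:6])            # [3, 6, 10, 13, 16, 20]: 3 or 4 frames rendered per refresh
print(len(shown), dropped)  # 60 140
```

With render_fps below hz (say 30fps at 60Hz), the same function shows every frame and drops none, which is ordinary VSYNC ON behavior with repeated frames.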

smoothnobody wrote: ↑13 Feb 2022, 20:27

> adaptive sync - this is the opposite of vsync. instead of limiting the FPS to match the refresh rate, it limits/adjusts the refresh rate to match the FPS on demand.

Let's be careful not to confuse "VESA Adaptive Sync" (same as VRR) with "Adaptive VSYNC" (not VRR). Adaptive VSYNC is very similar to Fast Sync, but with a somewhat more dynamic graphics driver algorithm.

Glossary

VESA Adaptive Sync = Same as VRR

Adaptive VSYNC = More similar to Fast Sync


- Chief Blur Buster


Re: usually knowledgeable, but struggling to understand

Q: What's VRR? FreeSync? G-SYNC? Are They the Same?

smoothnobody wrote: ↑13 Feb 2022, 20:27

> gsync - same concept as adaptive sync. uses nvidia proprietary hardware. because it's hardware controlled vs software controlled there are tighter tolerances / higher standards / better performance. this is often seen as the end game / holy grail that solves both problems of tearing and input lag. a few things that confuse me about gsync, i thought this replaces vsync. but i've read several threads now that say it does not replace vsync, you use gsync and vsync together. i've also read other threads that say you use both plus a FPS limiter set a few FPS below your max refresh rate. even with the tech that is considered the best, there is still uncertainty on how to use it.
>
> VRR - same concept as adaptive sync. but can't figure out why this exists. my best guess is this is basically marketing the same thing different ways, or this is geared towards consoles. nvidia has gsync, amd has freesync, consoles have VRR. they all have the same goal, but accomplish it differently or are aimed towards different platforms. one thing i can't figure out about VRR, my tv supports VRR, but not gsync. however, whether i'm in game mode, or PC mode, i do not see a way to turn VRR on in my TV or nvidia control panel. my TV always says VRR off. to make it even more confusing, with my tv game mode on, i can turn gsync on in nvidia control panel, even though it's not supported.

Good news! Most newer G-SYNC is no longer fully proprietary. NVIDIA introduced "G-SYNC Compatible", which is fully generic-VRR compatible and works with AMD cards too. But there is massive confusion about VRR technologies. Also, even newer "G-SYNC Native" now has a generic VRR compatibility fallback.

These are now identical at the video signal layer:

VRR = FreeSync = VESA Adaptive Sync = G-SYNC Compatible

They simply vary the size of the blanking interval to space refresh cycles apart in time. On the cable, all generic VRR technologies look alike: a varying-size VBI. Each frame can have its own unique Hz, since varying framerate = varying refresh rate.
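To picture the varying-size VBI, here is a Python sketch that computes how many blanking rows one refresh cycle needs for a given frametime. It assumes (as a simplification) that the row rate stays fixed at the max-Hz timing and only the vertical blanking stretches; the 120Hz, 1125-row signal below is a hypothetical example:

```python
def vrr_vbi_rows(frametime_ms: float, max_hz: float,
                 active_rows: int, min_total_rows: int) -> int:
    """Blanking rows needed so that one refresh cycle lasts frametime_ms.

    Sketch assumptions: the row rate is fixed by the max-Hz timing, visible
    rows are always scanned out at that rate, and only the blanking interval
    stretches to fill the remaining time (never shorter than at max Hz).
    """
    row_time_ms = 1000 / (max_hz * min_total_rows)
    total_rows = max(min_total_rows, round(frametime_ms / row_time_ms))
    return total_rows - active_rows


# Hypothetical 1080p VRR signal with a 120Hz maximum and 1125 total rows.
for fps in (120, 100, 60):
    print(fps, vrr_vbi_rows(1000 / fps, 120, 1080, 1125))
# 120 -> 45 blanking rows, 100 -> 270, 60 -> 1170
```

At 60fps the blanking interval in this model is longer than the visible image itself, yet the visible rows are still scanned out at full 120Hz speed.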

Glossary

- VRR = Variable Refresh Rate = Generic Catchall Terminology of all below. It's not (originally) a marketing term.

- VESA Adaptive Sync = The base VRR technology by VESA the standards organization = works on NVIDIA & AMD

- FreeSync = AMD generic VRR = AMD Certification of VESA Adaptive Sync (now works on NVIDIA too)

- G-SYNC Compatible = NVIDIA generic VRR = NVIDIA Certification of VESA Adaptive Sync (now works on AMD too)

- G-SYNC Native = NVIDIA proprietary VRR = Proprietary NVIDIA G-SYNC Chip*

- HDMI VRR = The HDMI equivalent of VESA Adaptive Sync

*Newer G-SYNC Native in newer monitors is also compatible with generic VESA Adaptive Sync, so newer G-SYNC Native monitors now work with FreeSync-compatible behavior on AMD cards. Also, unlike older NVIDIA GPUs, newer NVIDIA GPUs are FreeSync compatible (and if you force G-SYNC Compatible mode, they even work on generic, uncertified VESA Adaptive Sync).

See www.testufo.com/vrr for a simulated, interpolated animation of how VRR can erase stutters from framerate changes (this applies to game stutters caused by framerate and refresh rate being out of sync, not to other stutters such as disk access).

Best looking VRR occurs with a framerate cap 3fps below Hz, and with VSYNC ON enabled as the fallback sync technology (for framerates trying to exceed Hz).

In the early days, there were differences in the EDID plug-and-play negotiation for VRR technologies (e.g. Generic VESA Adaptive Sync versus FreeSync versus G-SYNC) but they have all standardized to the same CEA-861 Extension Block (refresh rate range).


- Chief Blur Buster


Re: usually knowledgeable, but struggling to understand

smoothnobody wrote: ↑13 Feb 2022, 20:27

> sorry for the long post. i know this has been discussed millions of times. i'm not just posting without doing any reading or making no effort to understand for myself. i've been reading almost the whole week. either i'm stupid or this is confusing or i'm reading bad information or missing important pieces of information cause i am really struggling to figure out the differences between these technologies and when it's appropriate to use one over the other or one in conjunction with another. hopefully you guys can straighten me out cause i just want to play my games the right way.

If you're technically literate, you might want to study Blur Busters Area 51 to understand display technology better before trying to read confusing forum posts.

Blur Busters Area 51 Research Articles

If you love opening the hood of cars, or digging into computer innards, then you'll love to open this hood of Hertz!



smoothnobody


Re: usually knowledgeable, but struggling to understand

appreciate the in depth info. i read it twice. it helped a lot. it's so nice to read something and have confidence what i'm reading is correct.

Chief Blur Buster wrote: ↑14 Feb 2022, 02:01

> These are now identical at the video signal layer:
>
> VRR = FreeSync = VESA Adaptive Sync = G-SYNC Compatible
>
> Glossary
>
> - VRR = Variable Refresh Rate = Generic Catchall Terminology of all below. It's not (originally) a marketing term.
> - VESA Adaptive Sync = The base VRR technology by VESA the standards organization = works on NVIDIA & AMD
> - FreeSync = AMD generic VRR = AMD Certification of VESA Adaptive Sync (now works on NVIDIA too)
> - G-SYNC Compatible = NVIDIA generic VRR = NVIDIA Certification of VESA Adaptive Sync (now works on AMD too)
> - G-SYNC Native = NVIDIA proprietary VRR = Proprietary NVIDIA G-SYNC Chip*
> - HDMI VRR = The HDMI equivalent of VESA Adaptive Sync

this was awesome......

i definitely was getting twisted with Adaptive Sync and Adaptive VSYNC.

i was also getting messed up with G-SYNC vs G-SYNC Compatible.

this also helped a lot......

http://www.testufo.com/vrr

and this is exactly what i needed, what my settings should be. i like how you dropped this at the very end, after the education.

Chief Blur Buster wrote: ↑14 Feb 2022, 02:01

> Best looking VRR occurs with a framerate cap 3fps below Hz, and with VSYNC ON enabled as the fallback sync technology (for framerates trying to exceed Hz).

only have one more question. why do we limit the FPS to 3 below max refresh rate? i'm assuming so it doesn't go over and cause tearing. but why not limit it to the refresh rate, or 1-2 below refresh rate?

- Chief Blur Buster


Re: usually knowledgeable, but struggling to understand

smoothnobody wrote: ↑15 Feb 2022, 02:11

> Chief Blur Buster wrote: ↑14 Feb 2022, 02:01
>
>> Best looking VRR occurs with a framerate cap 3fps below Hz, and with VSYNC ON enabled as the fallback sync technology (for framerates trying to exceed Hz).
>
> only have one more question. why do we limit the FPS to 3 below max refresh rate? i'm assuming so it doesn't go over and cause tearing. but why not limit it to the refresh rate, or 1-2 below refresh rate?

We did some tests almost ten years ago in the G-SYNC preview #2 that discovered latency reductions when there was a little safety margin.

www.blurbusters.com/gsync/preview2

And then Jorim did our massive G-SYNC 101 tests, which remain a textbook study. Even NVIDIA learned a lot from them and made many improvements to G-SYNC behaviors because of the findings. The tests tried multiple fps-below margins and found 3fps below to be the sweet spot at the time:

www.blurbusters.com/gsync/gsync101

Short answer is this is because of two things:

1. VSYNC ON has more input lag than G-SYNC.

2. Frame rate caps are not perfect, and will jitter.

Frame rate caps are notoriously jittery/inaccurate. One frame may render in 1/141sec and another in 1/147sec, so frametimes jitter from frame to frame. The frames that render too fast get VSYNC ON treatment, buffer up and start to lag (at least a bit).

So when framerates try to go higher than Hz, they start to lag as the frames buffer up (VSYNC ON often takes several refresh cycles to play out all the yet-undisplayed frames that are buffered up).
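A toy Python model of why a small margin helps. It assumes capped frametimes spread uniformly within +/-jitter around the cap's nominal frametime; the 0.3 ms jitter figure and the function name are made up for illustration, not measurements:

```python
def fraction_too_fast(cap_fps: float, max_hz: float, jitter_ms: float) -> float:
    """Fraction of frames whose frametime is shorter than one max-Hz refresh cycle.

    Toy model (an assumption): each capped frametime is uniformly spread within
    +/- jitter_ms around the cap's nominal 1000 / cap_fps. Frames shorter than
    1000 / max_hz outrun the VRR range and fall back to VSYNC ON behavior.
    """
    nominal = 1000 / cap_fps
    refresh = 1000 / max_hz
    lo, hi = nominal - jitter_ms, nominal + jitter_ms
    if refresh <= lo:
        return 0.0
    if refresh >= hi:
        return 1.0
    return (refresh - lo) / (hi - lo)


# 144Hz monitor, assuming a cap that jitters by +/-0.3 ms.
for cap in (144, 141, 138):
    print(cap, round(fraction_too_fast(cap, 144, 0.3), 2))
# 144 -> 0.5, 141 -> 0.25, 138 -> 0.0
```

Under this toy model, capping at exactly 144 sends about half the frames into the VSYNC ON fallback, while a cap a few fps lower sends far fewer or none.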

With VRR, the monitor is syncing to the software. The frame presentation immediately refreshes the screen right on the spot, with no fixed refresh rate schedule. In an ideal world, gametime is in perfect sync with photontime, so framerate fluctuations look stutter-free to human eyes -- try "Random" in www.testufo.com/vrr such as Fast Random.

It's interesting to observe that randomly fluctuating framerates can be made visually stutter-free, thanks to gametime:photontime staying in sync despite random intervals between frames. For sample-and-hold VRR, only the motion blur varies, linearly with frametime (sample-and-hold MPRT persistence blurring), in virtually perfect accordance with Blur Busters Law. (Ignoring GtG for simplicity) 1ms of frametime = 1 pixel of motion blur per 1000 pixels/sec of motion.
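Blur Busters Law as stated above is a one-line formula. A Python sketch, assuming full sample-and-hold persistence (each frame held for its whole frametime) and ignoring GtG; the helper name is illustrative:

```python
def motion_blur_px(speed_px_per_s: float, frametime_ms: float) -> float:
    """Sample-and-hold motion blur (MPRT persistence), ignoring GtG.

    1 ms of frametime = 1 pixel of motion blur per 1000 pixels/sec of motion.
    """
    return speed_px_per_s * frametime_ms / 1000


print(motion_blur_px(1000, 1))                    # 1.0 pixel
# Halving frametime (doubling framerate) halves the blur:
print(round(motion_blur_px(960, 1000 / 60), 1))   # 16.0 px at 60fps
print(round(motion_blur_px(960, 1000 / 120), 1))  # 8.0 px at 120fps
```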

More information about Blur Busters Law (double Hz and framerate = half display motion blur) can be found at www.blurbusters.com/gtg-vs-mprt as well as www.blurbusters.com/1000hz-journey. The decrease in display motion blur at higher VRR frame rates is really easy to observe in framerate-ramping animations on VRR displays. That's the main side effect after all stutters are removed: you're more clearly seeing the remaining display artifacts, such as motion blur caused by frame rate limitations.

Frames outside VRR range will have some minor side effects (such as lag or possible stutter).

Framerate above max Hz of VRR range

Frames that are ready too soon don't get refreshed immediately (they are lagged by being forced to wait, because the monitor cannot refresh any sooner). You could use VSYNC OFF instead as the fallback sync technology, but that means tearing will appear when framerates are higher than the refresh rate. This is fine if you're latency-priority (VRR + VSYNC OFF) rather than smoothness-priority (VRR + VSYNC ON); everybody has different priorities.

Framerate below min Hz of VRR range

Frames that arrive too slowly (framerate below the minimum Hz) mean the monitor is in danger of timing out (screen fading, going black, flickering, or other side effects). For G-SYNC Native, the monitor automatically repeat-refreshes the previous frame from its own built-in RAM. For everything else (generic VRR and its related/certified variants), the graphics driver is responsible for beginning a repeat-refresh by sending a new refresh cycle out of the video output. Stutter can occur if the software finishes a frame while the screen is still busy repeat-refreshing. The repeat-refresh penalty is up to 1/(maxHz) second: a 240Hz VRR monitor doing 30fps via LFC (Low Framerate Compensation) will stall the new frame for up to 1/240sec. This is LFC stutter: the gametime:photontime relationship is violated, so stutter occurs. But if the stall is small, you might not see it.

LFC Stutter May be Invisible or Visible

This is why huge VRR ranges (30fps-360fps) usually have completely invisible LFC stutter, while tight VRR ranges (48Hz-120Hz) have much more visible LFC stutter. A 1/360sec stall on a 1/30sec frametime is far less visible than a 1/120sec stall on a 1/47sec frametime. That's why LFC stutter is much less visible on monitors with extremely large VRR ranges: the stutter error is much smaller.
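The LFC stall comparison is simple arithmetic: the worst-case stall is one max-Hz refresh cycle, compared against the frametime. A Python sketch under that rule of thumb (the helper name is hypothetical):

```python
def lfc_stall(frame_fps: float, max_hz: float) -> tuple[float, float]:
    """Worst-case LFC stall and its size relative to the frametime.

    Rule of thumb from the text: a new frame can wait up to one max-Hz
    refresh cycle (1/max_hz) for an in-progress repeat-refresh to finish.
    Returns (stall in ms, stall as a fraction of the frametime).
    """
    stall_ms = 1000 / max_hz
    frametime_ms = 1000 / frame_fps
    return stall_ms, stall_ms / frametime_ms


for fps, max_hz in ((30, 240), (30, 360), (47, 120)):
    stall_ms, share = lfc_stall(fps, max_hz)
    print(f"{fps}fps on {max_hz}Hz max: up to {stall_ms:.2f} ms stall = {share:.1%} of frametime")
```

For the examples in the text, the 360Hz monitor stalls a 30fps frame by roughly 8% of its frametime, while the 120Hz-max monitor stalls a 47fps frame by roughly 39%.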

Size of Framerate-Below Margin

A 3fps-below cap was discovered as a generic catchall cap-below-Hz, that worked very reliably for most users. However, it's not hard-and-fast. Even 0.5fps-below works well for 4K 60Hz FreeSync displays, because 60Hz has very slow refresh times (16.7ms). But for today's 360Hz G-SYNC monitors, you sometimes need 5-10fps below max Hz. So a 350fps cap for 360Hz often works better. 1ms on 1/360sec refresh cycle is a lot more jitter than 1ms on 1/60sec refresh cycle, so you need bigger margins for higher Hz and smaller margins for lower Hz.

3fps-below is a mere 0.2ms error margin at 120Hz

However, 3fps-below has pretty much universally lower latency than uncapped VRR (main sync tech) + VSYNC ON (fallback sync tech), no matter what Hz you are using. The 3fps-below figure is just a boilerplate recommendation; you're welcome to try 1fps below or 10fps below. A 117fps cap versus a 120fps cap is only a ((1/117)-(1/120)) = 0.2 millisecond difference. No wonder frame rate caps jitter: little software can frame-pace more accurately than 200 microseconds on a software timer. Even if the game software or the RTSS framerate cap did, the graphics drivers may not always be able to attain such precision (especially at near-100% GPU load).
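The ((1/117)-(1/120)) arithmetic generalizes to any cap and refresh rate. A Python sketch (the function name is illustrative) comparing the time margin left by a few of the caps mentioned in this thread:

```python
def cap_margin_ms(cap_fps: float, max_hz: float) -> float:
    """Extra time per frame a below-Hz cap leaves before a frame outruns max Hz."""
    return 1000 / cap_fps - 1000 / max_hz


for cap, hz in ((117, 120), (141, 144), (59.5, 60), (350, 360)):
    print(f"{cap}fps cap at {hz}Hz: {cap_margin_ms(cap, hz):.3f} ms margin")
# 0.214, 0.148, 0.140 and 0.079 ms respectively
```

Even the 350fps cap at 360Hz leaves less than 0.1 ms of slack per frame, which is why higher-Hz monitors tend to need more fps of headroom.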

Ideal VRR is a refresh rate range bigger than your frame rate ranges

Better yet, with a VRR display whose refresh rate range is bigger than your game's framerate range, you wouldn't have to care about the latency difference between the main sync technology (VRR) and the fallback sync technology (for frame rates outside the VRR range). But with an RTX 3000-series card pushing framerates that often peak beyond 120Hz in many older games, it's worth deciding what to do with framerates above Hz on a VRR display.

Smoothness purists and those who prioritize latency consistency will benefit from capping slightly below Hz. The cap may not be hit when you're playing raytraced Cyberpunk 2077 at 4K 120Hz, but will easily be hit when playing CS:GO at a low detail level.



smoothnobody


Re: usually knowledgeable, but struggling to understand

thanks for everything chief. you and jorimt are pretty awesome. this is the most helpful forum i have ever been on. respect.