So when I do input lag measurements on the same monitor at 120Hz vs. 240Hz, I get a very big difference, bigger than theory predicts.

In theory, the lowest input lag from the monitor alone is about 8ms at 120Hz and around 4ms at 240Hz.

When I measured my setup, I got about 38-42ms total input lag at 120Hz, but when I switched to 240Hz, I got about 20ms. That's a reduction of 18ms+, so why is the difference so big? Shouldn't it just be a reduction of 4ms?
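A quick sanity check of the arithmetic above, as a minimal Python sketch. It assumes the midpoint (40ms) of the reported 38-42ms range at 120Hz and ~20ms at 240Hz; the variable and function names are illustrative only.

```python
def refresh_period_ms(hz: float) -> float:
    """Duration of one refresh cycle in milliseconds."""
    return 1000.0 / hz

period_120 = refresh_period_ms(120)  # ~8.33 ms
period_240 = refresh_period_ms(240)  # ~4.17 ms

# Difference if only a single refresh period separated the two modes.
expected_delta = period_120 - period_240

# Assumed inputs: midpoint of 38-42 ms at 120 Hz, ~20 ms at 240 Hz.
observed_delta = 40.0 - 20.0

# How many refresh-period-sized chunks the observed gap corresponds to.
periods_explained = observed_delta / expected_delta

print(f"expected delta: {expected_delta:.2f} ms")
print(f"observed delta: {observed_delta:.2f} ms")
print(f"ratio: {periods_explained:.1f}x a single refresh-period difference")
```

With those inputs, one refresh period shrinks by about 4.17ms, while the measured total shrinks by 20ms, i.e. roughly 4.8 refresh periods' worth.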

I took the measurements with a high speed camera, and yes, I repeated them about 10 times for each refresh rate.
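For reference, a sketch of how high speed camera readings can be turned into milliseconds and averaged. The camera frame rate and the frame counts in the usage line are hypothetical placeholders, not the measurements from this thread.

```python
from statistics import mean, stdev

def frames_to_ms(frame_count: int, camera_fps: float) -> float:
    """Convert the number of camera frames between the click and the first
    visible pixel change into milliseconds."""
    return frame_count * 1000.0 / camera_fps

def summarize(frame_counts: list[int], camera_fps: float) -> dict[str, float]:
    """Mean, spread, and range of a set of click-to-photon readings."""
    samples = [frames_to_ms(n, camera_fps) for n in frame_counts]
    return {
        "mean_ms": mean(samples),
        "stdev_ms": stdev(samples) if len(samples) > 1 else 0.0,
        "min_ms": min(samples),
        "max_ms": max(samples),
        # Each reading is quantized to one camera frame.
        "resolution_ms": 1000.0 / camera_fps,
    }

# Hypothetical frame counts from ten clicks filmed at 1000 fps.
print(summarize([39, 41, 40, 38, 42, 40, 39, 41, 40, 40], camera_fps=1000))
```

Reporting the spread and the camera's one-frame resolution alongside the mean makes it easier to tell whether two setups really differ.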

The reason I'm asking is that I think there may be a bottleneck somewhere. I don't mean a "system speed" bottleneck, but rather a bottleneck in some pipeline that can't go any faster because it automatically matches the monitor's refresh rate, so it won't let you go below a certain threshold. There must be some way to improve this: in theory, you should be able to get it down to just 8ms total input lag, but let's be generous and add an extra 8ms for system latency, for a total of 16ms system lag. Yet you can't achieve this unless you crank it up to about 360Hz or so?

Basically, the theory is confusing, and I suspect there's a bottleneck that shouldn't be there.

Measuring input lag at 120hz vs 240hz gives bigger difference than expected

Re: Measuring input lag at 120hz vs 240hz gives bigger difference than expected

In reply to deama (03 Nov 2023, 12:30):

Not necessarily. Scanout conversion latency on your particular monitor model could be a contributing factor as well.

(jorimt: /jor-uhm-tee/)

Author: Blur Busters "G-SYNC 101" Series

Displays: ASUS PG27AQN, LG 48CX VR: Beyond, Quest 3, Reverb G2, Index OS: Windows 11 Pro Case: Fractal Design Torrent PSU: Seasonic PRIME TX-1000 MB: ASUS Z790 Hero CPU: Intel i9-13900k w/Noctua NH-U12A GPU: GIGABYTE RTX 4090 GAMING OC RAM: 32GB G.SKILL Trident Z5 DDR5 6400MHz CL32 SSDs: 2TB WD_BLACK SN850 (OS), 4TB WD_BLACK SN850X (Games) Keyboards: Wooting 60HE, Logitech G915 TKL Mice: Razer Viper Mini SE, Razer Viper 8kHz Sound: Creative Sound Blaster Katana V2 (speakers/amp/DAC), AFUL Performer 8 (IEMs)

Re: Measuring input lag at 120hz vs 240hz gives bigger difference than expected

In reply to jorimt (03 Nov 2023, 12:37):

It's OLED-based, so I think that's as fast as it can be?

Also, I believe RTINGS did the standard measurement with a white box flickering on a black background, and that reported the usual 8ms/4ms at 120Hz/240Hz.

Re: Measuring input lag at 120hz vs 240hz gives bigger difference than expected

In reply to deama (03 Nov 2023, 12:48):

Scanout conversion latency can apply to any panel type.

The 4.2ms vs. 8.3ms number is per scanout cycle, and there are, for instance, 120 individual ~8.3ms scanout cycles within a 1 second period at 120Hz (and so on), so the averaged difference in latency between the two refresh rates over any test duration longer than a single scanout cycle will obviously be higher than 4.1ms.

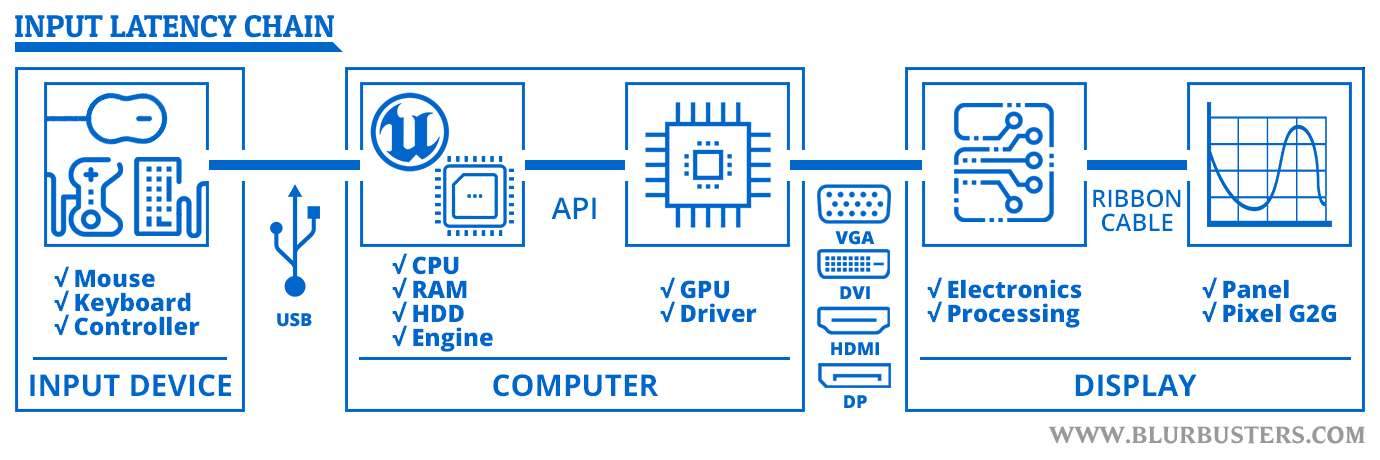

When you're performing high speed camera tests, you're capturing the entire input-to-display chain, which includes any and all input device, system, and display latency, so your latency "bottleneck" isn't just max refresh/framerate:
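To make the "entire chain" point concrete, here is a hypothetical latency budget in Python. Each stage is split into a fixed part and a part measured in refresh periods; the stage names and every number are assumptions chosen for illustration, not measurements of any particular system.

```python
from dataclasses import dataclass

@dataclass
class Stage:
    name: str
    fixed_ms: float         # part that does not depend on refresh rate
    refresh_periods: float  # part that scales with the refresh period

def chain_latency_ms(stages: list[Stage], hz: float) -> float:
    """Total click-to-photon latency of the chain at a given refresh rate."""
    period = 1000.0 / hz
    return sum(s.fixed_ms + s.refresh_periods * period for s in stages)

# Hypothetical chain: all values are illustrative assumptions.
chain = [
    Stage("mouse + USB polling", fixed_ms=1.0, refresh_periods=0.0),
    Stage("game + CPU/GPU render", fixed_ms=5.0, refresh_periods=0.0),
    Stage("frame buffering (assumed 2 refreshes)", fixed_ms=0.0, refresh_periods=2.0),
    Stage("wait for next refresh (average)", fixed_ms=0.0, refresh_periods=0.5),
    Stage("scanout to measured screen region", fixed_ms=0.0, refresh_periods=0.5),
    Stage("panel processing + pixel response", fixed_ms=1.0, refresh_periods=0.0),
]

for hz in (120, 240):
    print(f"{hz} Hz: {chain_latency_ms(chain, hz):.1f} ms")
```

In this made-up chain only the refresh-scaled stages shrink when going from 120Hz to 240Hz, so the size of the improvement depends on how many refresh periods the chain contains, not just on the single ~4ms scanout difference.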

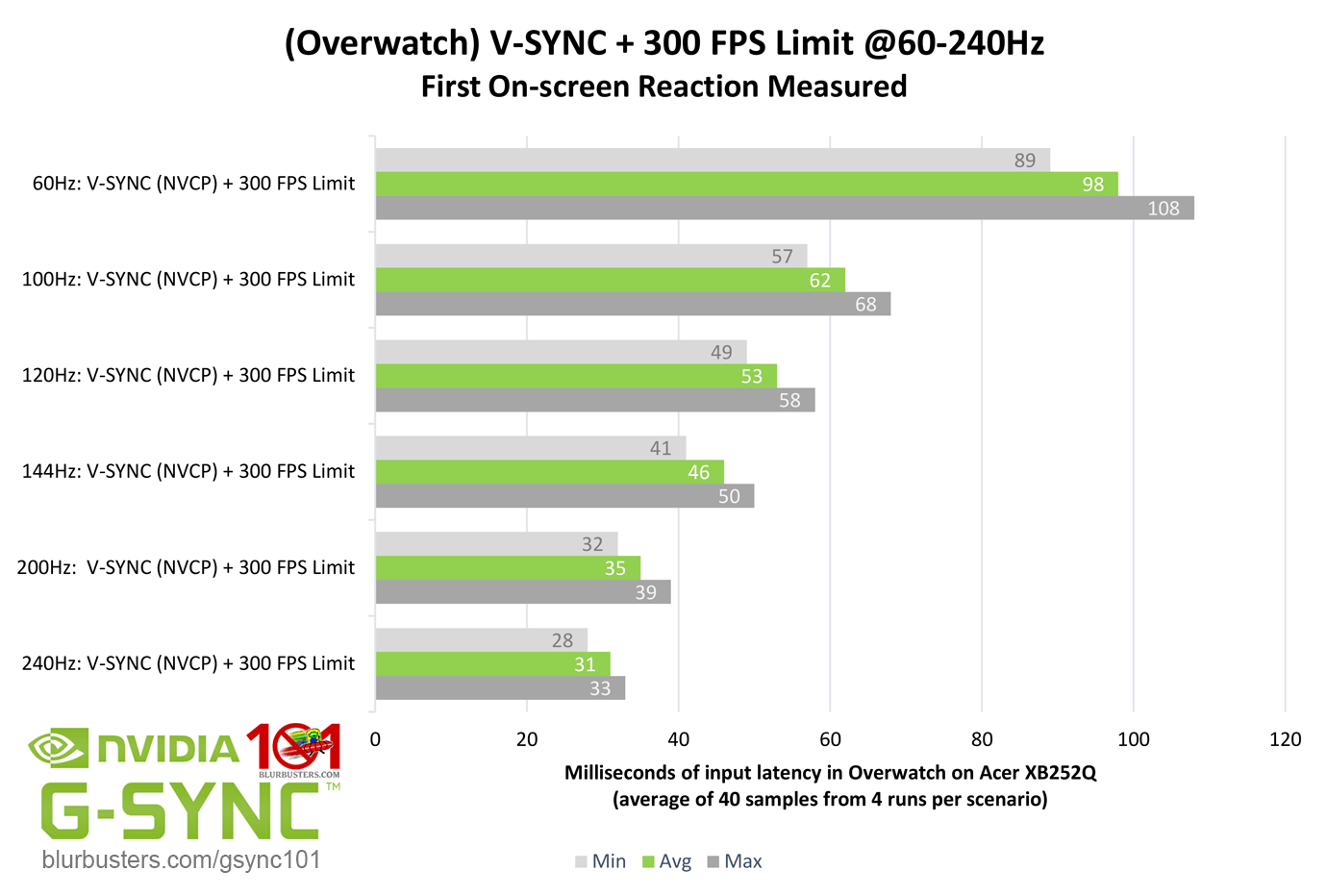

The minimum average latency depends entirely on the test scenario, system, and game. For instance, I was getting an average button-to-pixel latency of 16ms in Overwatch on a 240Hz monitor:

viewtopic.php?f=5&t=9151&p=86590

Re: Measuring input lag at 120hz vs 240hz gives bigger difference than expected

In reply to jorimt (03 Nov 2023, 14:24):

I'm not quite understanding the "scanout cycle" thing you're talking about. I was always under the impression that on a 120Hz monitor, for example, it takes roughly 8ms for one refresh cycle (a whole-screen refresh) to be performed. In that case, the lowest latency you can obtain is 8ms, capped by your 120Hz monitor.

As for high speed camera tests capturing the entire input-to-display chain: yes, that's right, but switching between 120Hz and 240Hz produces a large latency disparity. So if you're measuring the whole click-to-photon latency, then the bottleneck is obviously the monitor, right? The only thing you changed was the monitor's refresh rate, and that yielded a large difference compared to something like clocking the CPU or GPU higher, or changing some motherboard setting.

But what I don't understand is this: at 120Hz, in theory, you should get 8ms if we measure only the monitor and exclude the rest of the system, and that seems roughly true: if you test just the monitor, you get roughly 8ms on a good panel at 120Hz. But when you add in the rest of the system, it adds a whopping 30ms on top of the 8ms, for 38ms total. Yet if you crank it up to 240Hz, it drops to about 20ms instead of just 34ms. So the bottleneck is something that works in lockstep with the monitor's refresh rate, right? It can't be the monitor itself, otherwise switching from 120Hz to 240Hz would give you only a 4ms improvement, not 18ms+. And it can't be the rest of the system either, because then switching from 120Hz to 240Hz wouldn't yield such a massive improvement: in that scenario the system supposedly can't deliver lower latency, because, e.g., the motherboard/CPU/GPU just can't push frames out fast enough. But it does! It gives you an 18ms+ improvement!

To me, it seems there is some artificial limit in place, whether software or hardware, that ties latency to your monitor's refresh rate, regardless of the panel type or the monitor itself.
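One way to test the "something tied to the refresh rate" idea is to fit a simple two-parameter model, latency = fixed + k × refresh period, to the two measurements. This is a hypothetical sketch: it assumes the 40ms midpoint at 120Hz and 20ms at 240Hz, and that latency really is linear in the refresh period. Python's `fractions` module keeps the two-point solve exact.

```python
from fractions import Fraction

def fit_fixed_plus_periods(hz_a, ms_a, hz_b, ms_b):
    """Solve latency = fixed + k * period (period = 1000 / hz) from two points.

    Returns (fixed_ms, k), where k is the number of refresh periods in the chain.
    """
    pa = Fraction(1000) / Fraction(hz_a)
    pb = Fraction(1000) / Fraction(hz_b)
    k = (Fraction(ms_a) - Fraction(ms_b)) / (pa - pb)
    fixed = Fraction(ms_a) - k * pa
    return fixed, k

def predict_ms(fixed, k, hz):
    """Extrapolate the fitted model to another refresh rate."""
    return fixed + k * Fraction(1000) / Fraction(hz)

# Assumed inputs: midpoint of 38-42 ms at 120 Hz, ~20 ms at 240 Hz.
fixed, k = fit_fixed_plus_periods(120, 40.0, 240, 20.0)
print(f"fixed part: {float(fixed):.1f} ms, refresh-scaled part: {float(k):.1f} periods")
for hz in (120, 240, 360):
    print(f"{hz} Hz -> {float(predict_ms(fixed, k, hz)):.1f} ms")
```

With those inputs the fit gives a fixed part of 0ms and k = 4.8 refresh periods, and extrapolates to about 13.3ms at 360Hz. Two points cannot confirm the model, and the fit is sensitive to the spread: using 38ms or 42ms instead of 40ms moves the fixed part to +2ms or -2ms.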

So I was wondering whether we could identify this piece of hardware or software and then maybe force it to change (or at least try).

I don't think it's software-related per se, at least not on the surface level, because I also ran these tests on Linux and the figures were roughly the same. Either this is hardware-based, deep inside the fundamentals of how everything has been built, or it's something I'm not understanding at all.

Re: Measuring input lag at 120hz vs 240hz gives bigger difference than expected

In reply to deama (05 Nov 2023, 07:35):

As I explained in my previous reply, ~8.3ms is a single refresh cycle out of the 120 that occur every second at 120Hz, so if you're testing even a single click using a high speed camera, just one sample will contain more than one of those cycles at that refresh rate.

Thus, the averaged latency at 240Hz over a series of scanout cycles is going to be much lower than at 120Hz, even if all the other factors in the test besides the max refresh rate are equal, because each scanout cycle scans in each rendered frame faster (regardless of framerate), and there are 120 more of those scanout cycles occurring each second at 240Hz.
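The averaging effect can be illustrated with a small Monte Carlo sketch: clicks land at a random point in the refresh cycle, wait for the next refresh, then pass through a number of further refresh-period-long stages. The model, the stage count, and the fixed 5ms are assumptions for illustration, not how any specific game or driver behaves.

```python
import random

def simulated_mean_latency_ms(hz, stages_in_periods, fixed_ms, trials=100_000, seed=0):
    """Average click-to-photon latency when clicks land at a random point
    in the refresh cycle.

    Assumed model: the click waits for the next refresh boundary, then passes
    through `stages_in_periods` further refresh-period-long stages, plus a
    constant `fixed_ms` that does not depend on the refresh rate.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    period = 1000.0 / hz
    total = 0.0
    for _ in range(trials):
        phase = rng.random() * period  # time since the current refresh began
        wait = period - phase          # time until the next refresh starts
        total += fixed_ms + wait + stages_in_periods * period
    return total / trials

for hz in (120, 240):
    avg = simulated_mean_latency_ms(hz, stages_in_periods=2, fixed_ms=5.0)
    print(f"{hz} Hz: {avg:.2f} ms")
```

Because the click's phase is uniformly random, the wait alone averages half a refresh period (about 4.17ms at 120Hz vs. 2.08ms at 240Hz); the gap between the two refresh rates grows with every additional refresh-period-long stage in the chain.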

I.e., the higher the refresh rate, the lower the average latency, regardless of scenario.

Anyway, it seems you don't understand the fundamentals enough at this stage for my answers to make sense, so to avoid going in circles, I'll stop here.
