Hi guys, I've recently bought this amazing monitor, but I need your help because I've got two problems.

The first one is that Windows 10 doesn't recognize the monitor: it shows up as a generic Plug and Play monitor, while the NVIDIA Control Panel detects it correctly. Because of this, some games use the Windows 10 details and won't let me go above the standard 75Hz. I can't find the monitor driver anywhere, not even on the MSI site. Have you had this problem? Does anyone have the drivers?

The second one: are you using the MSI Display Kit or the OSD App 2.0 for the gaming config?

Thanks in advance

The Official *MSI Optix MAG251RX* Owners Thread

- DukeDice929

- Posts: 81

- Joined: 02 Nov 2019, 08:33

Re: The Official *MSI Optix MAG251RX* Owners Thread

Vash_84 wrote: 20 Sep 2020, 03:56

"...some games use the Windows 10 details and won't let me go above the standard 75Hz..."

So the problem is that you can't go over 75Hz, right?

Search for "Advanced display settings" -> "Display adapter properties", then switch to the "Monitor" tab and apply those sweet Hz.

My bad English :0


RLCSContender*

- Posts: 541

- Joined: 13 Jan 2021, 22:49


Re: The Official *MSI Optix MAG251RX* Owners Thread

To answer the questions in Vash_84's post (20 Sep 2020, 03:56):

For anyone who just got this monitor, here are the steps to set up the MSI MAG251RX:

1. Download CRU and watch this video. By default, the image is not scaled by the display but by the GPU, which adds slight input lag and slight blur due to scaling. The native resolution isn't listed as "native" under the PC resolutions, only under the TV resolutions. It should be native under PC.

https://custom-resolution-utility.en.lo4d.com/windows

2. Once you've done that, try to get a 6500K white balance. You can use your smartphone's camera app. (On my Samsung Galaxy, it's under "Pro mode" in the camera app and it shows you the white balance; almost every camera app has it.) Once you find that, point your phone at the MAG251RX's white background (I use google.com's white background) and adjust your monitor's red/green/blue to get to 6500K. If it's not 6500K, just keep adjusting the red/green/blue (most of the time, the red is too high).

I've owned four MSI MAG251RXs, and every one of them had a different color calibration.

3. With backlight strobing (AMB) enabled, the monitor locks you out of the brightness setting. In my opinion it's too dim, so I use the "ControlMyMonitor" app, or you can use NVIDIA Control Panel (in NVIDIA Control Panel, I never go higher than 65-70). The crosstalk is average, and since the black frame insertion is dominant, it's extremely difficult to see the crosstalk.

https://www.portablefreeware.com/index.php?id=2911

Open the app, go to the "Brightness" entry and choose your brightness level (from 0 to 100). Unlike with NVIDIA Control Panel, you won't lose color accuracy with this app.

4. Go to your OSD and set the overdrive setting to "Fast". That is the only playable overdrive setting, and it's one of the best-tuned overdrive settings, with good motion clarity especially from 60 to 240Hz. "Faster" has inverse ghosting coronas.

5. Go to NVIDIA Control Panel and change the output color depth from 8 bit to 10 bit. There will be less banding. You can get 12 bit color depth if you use an HDMI cable at a 120Hz refresh rate.

6. (Optional) There's an optional Gaming OSD app where you can adjust everything (including RGB), set up macros, or use aiming crosshairs for first-person shooters. I use it for macros to get an edge over my opponents. There's a lag switch tactic I like to use in this app.

https://us.msi.com/Monitor/support/Opti ... wn-utility

7. (Optional) This is the only monitor I've owned that has an alarm clock. You can set a timer or alarm during long gaming sessions.

The best part of this monitor is the alarm clock. It's better than the Windows 10 version. Yup, the alarm clock is legit.
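On step 3's point about color accuracy: ControlMyMonitor changes the monitor's own brightness over DDC/CI, so the GPU keeps sending all of its output levels. If a GPU-side brightness slider instead works by scaling the output values before they leave the GPU (an assumption for this sketch, not a documented description of NVIDIA's implementation), the 256 codes of an 8-bit channel get squeezed into fewer distinct codes. A toy count, with a hypothetical helper name:

```python
def distinct_levels(gain: float, bits: int = 8) -> int:
    """Count the distinct output codes left after every input code of a
    `bits`-deep channel is multiplied by `gain` and rounded back to an integer.

    Toy model of dimming done on the GPU side; brightness changed inside the
    monitor (e.g. via DDC/CI) would leave all input codes untouched.
    """
    max_code = (1 << bits) - 1
    return len({round(code * gain) for code in range(max_code + 1)})


if __name__ == "__main__":
    for gain in (1.00, 0.70, 0.65):
        print(f"GPU-side gain {gain:.2f}: {distinct_levels(gain)} of 256 levels left")
```

With the gain at 0.65, only 167 of the 256 codes survive in this model, which is one plausible source of extra banding when dimming on the GPU side.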


RLCSContender*

- Posts: 541

- Joined: 13 Jan 2021, 22:49


Re: The Official *MSI Optix MAG251RX* Owners Thread

All the 240Hz fast IPS AU Optronics monitors have this. There's really nothing to worry about since it's not permanent. Just go on YouTube and watch a few videos, and it will go away. You can also try CRU and set the refresh rate to 240.000 or 239.772; one of those mitigates image retention quite well.
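For context on typing exact values like 240.000 or 239.772 into CRU: a detailed timing stores a pixel clock (in 10 kHz steps in the EDID detailed timing format) plus horizontal and vertical totals, and the refresh rate falls out of those. A minimal sketch; the helper names are hypothetical and the 2000 x 1125 totals are round illustrative numbers, not the MAG251RX's real timing:

```python
def refresh_hz(pixel_clock_hz: int, h_total: int, v_total: int) -> float:
    """Refresh rate = pixel clock / (pixels per line incl. blanking * lines incl. blanking)."""
    return pixel_clock_hz / (h_total * v_total)


def pixel_clock_for(target_hz: float, h_total: int, v_total: int, step_hz: int = 10_000) -> int:
    """Pixel clock for target_hz, rounded to the 10 kHz steps an EDID detailed timing stores."""
    exact_hz = target_hz * h_total * v_total
    return round(exact_hz / step_hz) * step_hz


if __name__ == "__main__":
    H_TOTAL, V_TOTAL = 2000, 1125  # hypothetical totals, not the MAG251RX's real timing
    for target in (240.000, 239.772):
        clock = pixel_clock_for(target, H_TOTAL, V_TOTAL)
        actual = refresh_hz(clock, H_TOTAL, V_TOTAL)
        print(f"asked for {target:.3f} Hz -> pixel clock {clock / 1e6:.2f} MHz -> {actual:.4f} Hz")
```

Because the clock is quantized, a requested rate is generally only approximated: with these totals, 240 Hz is exact, while 239.772 Hz comes out at about 239.773 Hz.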

My Alienware AW2521HFL has "LCD conditioning", which removes it.

I wouldn't RMA if I were you, since all of them have this (I timed it: it shows up after around 5-10 minutes). It's really no big deal; just use a screen saver, or don't leave the UFO ghosting test on your monitor for too long (exit the page if you're not using it).


- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: The Official *MSI Optix MAG251RX* Owners Thread

Joel D wrote: 19 Sep 2020, 13:26

"Dang. That's the weirdest thing ever. I've had the same static image on the screen for hours at a time and never had an issue. Wow, I'm amazed this happened in such a short time. Furthermore, omg, that retention in general is loud and bold! Most bold IR I've ever seen. How high do you have your brightness? IMO 99% of people use their monitors way too bright, which could be the culprit for image retention appearing quicker. It is also why they see outrageous screen bloom and backlight bleed."

Image Retention ("Temporary Burn-In")

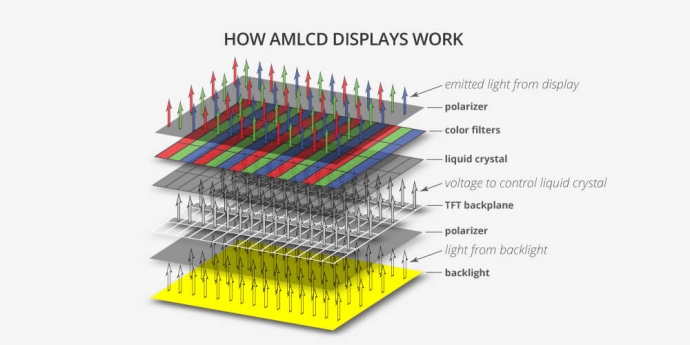

From Temporary Static Electricity Buildup In Pixels

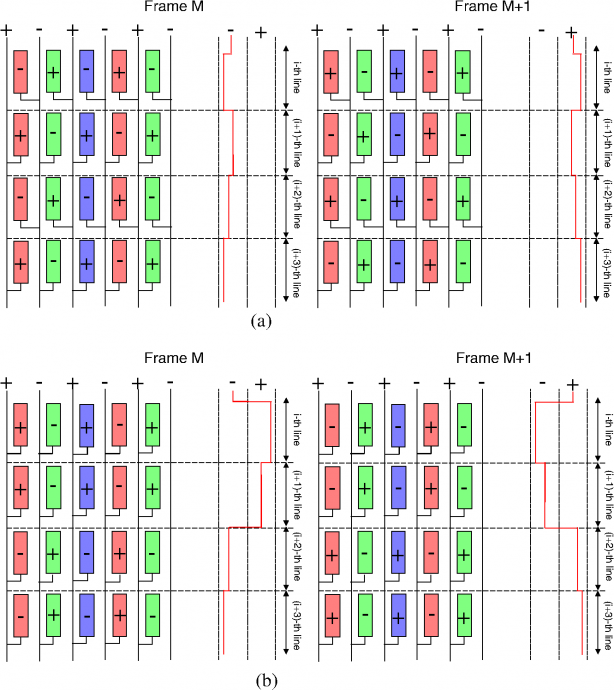

Flicker patterns such as:

- Emulator black-frame insertion

- 3D-glasses software

- Certain motion tests (including, but not limited to, TestUFO's Sync Track)

- Anything that does exactly a half-Hz or quarter-Hz flicker with no dropped frames for a sustained period

can create temporary LCD image retention. It's a static-electricity behavior that occurs when a flicker pattern goes in sync with the positive/negative voltage inversion algorithm, creating inversion-related image retention.

This is temporary: just display full-screen video, or something else that really exercises the whole screen.

This gets rid of the per-pixel static electricity buildup.

You also get the same problem in anything that flickers pixels in-phase with the positive-negative voltages of the LCD inversion algorithm.

https://www.google.com/search?q=techmind+LCD+inversion

The voltage inverts to try to balance the electricity buildup in the panel, but flicker patterns that go in sync with this can cause a voltage imbalance = static electricity buildup, as LCD pixels can accidentally behave as capacitors.

The layered nature of an LCD unfortunately creates unavoidable capacitance effects that interfere with operation.

Modern LCDs try to avoid this by using spatial and temporal alternating voltage (positive voltage, then negative voltage, then positive voltage, then negative voltage, and so on).

It's often in a chessboard pattern spatially, which sometimes produces an inversion artifact. Normally this is invisible when the positive voltages are perfectly balanced with the negative voltages. But the voltage balancing is not always perfect, so the chessboard artifact can become visible.

However, this is also done temporally: the voltages swap (like an inverted chessboard) at the next refresh cycle.

Now, if you flicker perfectly (at half Hz), then the pixels that get the "higher voltage" (different brightnesses = different voltages) are always getting negative voltages or always getting positive voltages = static electricity buildup = image retention.

- The chessboard artifact is the quirk from spatial component of the inversion algorithm.

- The image retention is the quirk from temporal component of the inversion algorithm.
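The temporal part can be illustrated with a toy model. It assumes pure frame inversion (polarity flips every refresh, ignoring the spatial chessboard) and treats the drive magnitude as proportional to the pixel's level in each frame, which is a simplification of real panel drive; the function name is hypothetical. A steady image and a 3-cycle flicker stay balanced, while a half-Hz flicker accumulates a net bias every cycle:

```python
def dc_bias(pattern: list[float], frames: int) -> float:
    """Toy model of temporal (frame) inversion.

    Drive polarity flips every refresh: +1, -1, +1, ...  The drive magnitude is
    taken to be the pixel's level in `pattern`, which repeats for `frames`
    refreshes. Returns the summed signed drive: staying near 0 means balanced,
    growing with `frames` means a net DC bias builds up.
    """
    total = 0.0
    for frame in range(frames):
        polarity = 1 if frame % 2 == 0 else -1
        total += polarity * pattern[frame % len(pattern)]
    return total


if __name__ == "__main__":
    refreshes = 1200
    print("steady image:   ", dc_bias([1.0], refreshes))
    print("half-Hz flicker:", dc_bias([1.0, 0.0], refreshes))
    print("3-cycle flicker:", dc_bias([1.0, 0.0, 0.0], refreshes))
```

Over 1,200 refreshes the half-Hz pattern builds up a bias of 600 units in this model, while the steady and 3-cycle patterns end at 0, which lines up with the 3-cycle BFI advice in this post.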

Thus, I am not surprised that the world's first panels of a specific refresh rate have some "inversion-related quirks". Every single 240Hz 1ms IPS panel currently has this pixel-as-capacitors quirk, which only shows up with sustained exact-Hz flicker patterns. As time passes, I'm sure this will improve with better inversion algorithms.

Because the layered nature of an LCD works against proper pixel operation, the pixels have inadvertently behaved like capacitors. Now you have to drain the charge: the built-up static electricity stored in the pixel.

Draining the pixel static electricity charge is best done by playing highly active video material. If you want to erase image retention faster, use full screen random-color flashing (fully randomized colors).

FIX: Play highly active fullscreen video or animation.

Such as pixel fixer software: https://www.youtube.com/watch?v=39HUG7QrQi8 (play it at 2X speed; it's too slow otherwise)

Or simulated analog TV noise: https://www.shadertoy.com/view/tdXXRM (this usually erases image retention faster)

Make sure to click the full screen button

While it's a technology quirk, it is not an RMA defect at all.

Also, if you want to keep using emulator BFI, use the new 180Hz BFI feature now found in some emulators: a 3-cycle flicker pattern doesn't produce image retention on the majority of monitors. RetroArch is building this feature in now.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: The Official *MSI Optix MAG251RX* Owners Thread

Wow, super interesting, Chief! Never knew this, and extremely good to know. I bookmarked those sites just in case.

Hey, perfect timing: while both of you guys are here, can you clear this up? See below.


RLCScontender has come back to say the same thing as the original discovery on this, which means he is saying it still stands. Yet purplew says it's not true, that there are actually negative effects to using CRU, and furthermore claims Chief "said so too".

Please clarify, guys: what's the real word?


RLCSContender*

- Posts: 541

- Joined: 13 Jan 2021, 22:49


Re: The Official *MSI Optix MAG251RX* Owners Thread

There are just too many disadvantages of NOT using CRU. One of them has to do with slightly lowering the resolution (while keeping the same 16:9 aspect ratio) to get more FPS. Also, if the resolution isn't native, some monitors can't get "Full" range colors and are stuck on "Limited". PCM (the guy who does the most accurate monitor reviews, other than myself) covers this. This guy in this video also explains it well.
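To make the "Full" vs "Limited" point concrete: limited (video) range puts black at code 16 and white at 235 instead of 0 and 255. If the GPU sends limited range to a monitor expecting full range, black arrives as code 16 and looks dark grey (washed out); the opposite mismatch crushes shadows and highlights. A minimal sketch of the mapping, assuming 8-bit RGB and using hypothetical helper names:

```python
def full_to_limited(code: int) -> int:
    """Map an 8-bit full-range code (0-255) to limited/video range (16-235)."""
    return round(16 + code * 219 / 255)


def limited_to_full(code: int) -> int:
    """Expand a limited-range code back to 0-255; codes outside 16-235 are clipped."""
    clipped = min(max(code, 16), 235)
    return round((clipped - 16) * 255 / 219)


if __name__ == "__main__":
    print("black, white:", full_to_limited(0), full_to_limited(255))
    levels = {full_to_limited(code) for code in range(256)}
    print("distinct limited-range levels:", len(levels))
```

Even with both ends matched, limited range only has 220 levels per channel to work with.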

Generally speaking, as for GPU scaling: if you have an RTX 3070 or any modern GPU (RTX 2000 series or newer), there shouldn't be any noticeable input lag, if any at all.

In my opinion, it's literally a 2-minute fix. The differences may be negligible, but I would rather have the native resolution listed under PC, since then I'm able to reduce the resolution to get more FPS AND stay on display scaling (less latency).


- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: The Official *MSI Optix MAG251RX* Owners Thread

GPUs can scale so fast now that the difference between display scaling and GPU scaling is negligible enough to prefer GPU scaling. Even a lowly GTX 1080 Ti can do about 10,000 1440p framebuffer copies per second from C# in a test, while scaling at the same time (i.e. about 0.1ms to scale a framebuffer, less laggy than some monitor scalers), such as scaling 1440p to 1080p.
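The arithmetic behind the 0.1ms figure, compared against one refresh cycle at this monitor's 240Hz. The 10,000-per-second rate is the test result quoted in the post, not something this snippet measures, and the helper names are hypothetical:

```python
def ms_per_operation(ops_per_second: float) -> float:
    """Average time per operation, in milliseconds."""
    return 1000.0 / ops_per_second


def refresh_interval_ms(refresh_hz: float) -> float:
    """Length of one refresh cycle, in milliseconds."""
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    scale_ms = ms_per_operation(10_000)  # ~10,000 scaled 1440p copies per second (figure from the post)
    frame_ms = refresh_interval_ms(240)  # one refresh at 240Hz
    print(f"scale: {scale_ms:.3f} ms, refresh: {frame_ms:.3f} ms, share: {scale_ms / frame_ms:.1%}")
```

That works out to roughly 2.4% of a 4.17ms refresh cycle.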

There are pros and cons, but they can vary from monitor to monitor.

It's OK to just use GPU scaling and call it a day. You just use NVIDIA Control Panel to create a lower resolution and let NVIDIA scale it. This happens so invisibly that NVIDIA usually automatically uses GPU scaling instead of letting the monitor scale.

More than 15 years ago, GPU scaling was consistently laggier than display scaling, back when the GPU used its rendering to scale (rather than a dedicated scaler chip). On modern GPUs, the idea that display scaling is reliably faster is now a myth. In fact, on some monitor models with recent GPUs, GPU scaling has less lag than monitor scaling. It depends on the model: some monitors have laggier scalers and others have less laggy ones. Thus, as GPUs get faster and more lagless in their scaling behaviour, the GPU is increasingly likely to be equal or less laggy than any given random monitor. YMMV.

Scaling setting adjustments may win or lose the day: what the monitor menus let you adjust (much like HDTV stretch/zoom/aspect ratio options, but on steroids). Some displays are VERY good at letting you adjust display scaling: configuring 1:1 scaling, 1:2 scaling, pillarboxing, stretching, etc. Other monitors are not. In that case, NVIDIA Control Panel gives you MUCH more flexible scaling options than some brands of monitors; some monitors don't even have a 1:1 option like the one NVIDIA Control Panel offers! This can be important if you love using a large monitor but wish to emulate a smaller monitor during competitive play, to keep the whole screen in your peripheral vision. Not all monitors let you do that, so you sometimes end up having to use GPU scaling to get this perk.

From time to time, these details matter way more than the latency differences when it's almost a coinflip whether GPU scaler or monitor scaler is less laggy.

Also, to reliably force hardware (display) scaling, you need to use ToastyX CRU instead of NVIDIA Custom Resolution. You know your GPU is doing GPU scaling if you click "Adjust desktop size and position" -> "Perform scaling on" and it's locked to GPU instead of Display. (This can sometimes happen when you create certain custom resolutions in NVIDIA Control Panel.)
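CRU works by writing an EDID override, and the first detailed timing descriptor in the 128-byte EDID base block normally describes the preferred (native) mode. A minimal decoding sketch of the fields that determine resolution and refresh rate; the class and function names are hypothetical, and the example bytes are the common 1920x1080 @ 60Hz timing, used only as an illustration and not read from an MAG251RX:

```python
from dataclasses import dataclass


@dataclass
class DetailedTiming:
    pixel_clock_hz: int
    h_active: int
    h_blank: int
    v_active: int
    v_blank: int

    @property
    def refresh_hz(self) -> float:
        h_total = self.h_active + self.h_blank
        v_total = self.v_active + self.v_blank
        return self.pixel_clock_hz / (h_total * v_total)


def parse_dtd(desc: bytes) -> DetailedTiming:
    """Decode resolution and blanking from an 18-byte EDID detailed timing descriptor."""
    if len(desc) != 18:
        raise ValueError("a detailed timing descriptor is 18 bytes long")
    clock_10khz = desc[0] | (desc[1] << 8)  # little-endian, units of 10 kHz
    if clock_10khz == 0:
        raise ValueError("pixel clock of 0 marks a display descriptor, not a timing")
    # The upper 4 bits of each 12-bit value live in a shared "high nibbles" byte.
    h_active = desc[2] | ((desc[4] & 0xF0) << 4)
    h_blank = desc[3] | ((desc[4] & 0x0F) << 8)
    v_active = desc[5] | ((desc[7] & 0xF0) << 4)
    v_blank = desc[6] | ((desc[7] & 0x0F) << 8)
    return DetailedTiming(clock_10khz * 10_000, h_active, h_blank, v_active, v_blank)


if __name__ == "__main__":
    # Common 1920x1080 @ 60Hz timing (148.5 MHz); the remaining 10 bytes are
    # sync/size/flag fields this sketch ignores, so they are zero-filled here.
    example = bytes.fromhex("02 3A 80 18 71 38 2D 40") + bytes(10)
    t = parse_dtd(example)
    print(f"{t.h_active}x{t.v_active} @ {t.refresh_hz:.3f} Hz")
```

In a full EDID dump, the first descriptor starts at byte offset 54 of the base block.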

Also, there have been many times a person said "I am using display scaling!", but when I showed them, their CRU setup had caused NVIDIA to automatically enable GPU scaling. Be careful to confirm which scaler you're actually using if you're latency-measuring the scalers, and use Present()-to-photons lag test methods to properly do a scaler-versus-scaler test. The frame presentation time is when the software says goodbye to the frame and the display subsystem (including GPU drivers) takes over. I consider drivers+display as one latency block, due to the cooperative latency behaviours of drivers+display (sync technologies, scaling, variable refresh, VSYNC OFF, VSYNC ON, etc.), which can't be reliably siloed as display-only lag or GPU-only lag.
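Once you have Present() timestamps and matching photon-detection timestamps (for example from a photodiode setup; how you capture them is outside this sketch), comparing two scaler settings comes down to comparing the distributions of the differences. The function names and the sample timestamps below are made up for illustration and are not measurements:

```python
from statistics import mean, median, pstdev


def present_to_photon_ms(present_s: list[float], photon_s: list[float]) -> list[float]:
    """Per-frame lag in milliseconds, from paired timestamps in seconds."""
    if len(present_s) != len(photon_s):
        raise ValueError("need exactly one photon timestamp per Present() timestamp")
    return [(photon - present) * 1000.0 for present, photon in zip(present_s, photon_s)]


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Median is less sensitive than the mean to the odd outlier frame."""
    return {"median": median(samples_ms), "mean": mean(samples_ms), "stdev": pstdev(samples_ms)}


if __name__ == "__main__":
    # Made-up timestamps for two runs of the same content with different scaler settings.
    present = [0.000, 0.100, 0.200, 0.300]
    run_a = present_to_photon_ms(present, [0.0052, 0.1049, 0.2051, 0.3050])
    run_b = present_to_photon_ms(present, [0.0055, 0.1053, 0.2056, 0.3054])
    for label, samples in (("run A", run_a), ("run B", run_b)):
        stats = summarize(samples)
        print(f"{label}: median {stats['median']:.2f} ms, mean {stats['mean']:.2f} ms, "
              f"stdev {stats['stdev']:.2f} ms")
```

Before trusting any difference, confirm in NVIDIA Control Panel which scaler each run actually used, as described above.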


Re: The Official *MSI Optix MAG251RX* Owners Thread

Thanks for clearing that up guys.


Besides the lag, though, I didn't hear any elaboration on this statement, which IMO is the biggest concern:

RLCScontender wrote: 21 Sep 2020, 03:44

"scaling via GPU which adds slight input lag and slight blur due to scaling"

Is this indeed true? Where is this coming from, technically?

I myself never downscale. But I do want the most efficient settings, for obvious reasons. I have an RTX 2080 Ti, so I also want to do what's best for my personal setup.

RLCScontender, I see you mentioned in Step 5 to "switch to 10 bit color". I've got my settings set like you said, but I can never change my color depth; it's grayed out. And when I make it so it's not grayed out, the only choice is 8 bit anyway. So what am I doing wrong, and how do I make that setting offer 10 bit?
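On the 10-bit question: which bit depths NVIDIA Control Panel offers depends in large part on what the monitor reports, and also on link bandwidth. The bandwidth half is simple arithmetic (pixel clock x bits per pixel versus the link's usable data rate). A rough sketch with hypothetical helper names; the 2080 x 1111 totals for 1080p 240Hz are an assumed reduced-blanking timing, while 2200 x 1125 is the standard 1080p timing:

```python
# Usable payload after 8b/10b line coding (raw rate x 0.8).
LINK_PAYLOAD_GBPS = {
    "DisplayPort 1.2 (HBR2, 4 lanes)": 21.6 * 0.8,
    "HDMI 2.0": 18.0 * 0.8,
}


def data_rate_gbps(h_total: int, v_total: int, refresh_hz: float,
                   bits_per_channel: int, channels: int = 3) -> float:
    """Uncompressed RGB video data rate in Gbit/s for a given timing."""
    pixel_clock_hz = h_total * v_total * refresh_hz
    return pixel_clock_hz * bits_per_channel * channels / 1e9


def fits(link: str, rate_gbps: float) -> bool:
    """True if the data rate fits within the link's usable payload."""
    return rate_gbps <= LINK_PAYLOAD_GBPS[link]


if __name__ == "__main__":
    dp = "DisplayPort 1.2 (HBR2, 4 lanes)"
    for bpc in (8, 10):
        rate = data_rate_gbps(2080, 1111, 240, bpc)  # assumed 1080p240 timing
        print(f"1080p 240Hz, {bpc} bpc: {rate:.2f} Gbps, fits {dp}: {fits(dp, rate)}")
    rate = data_rate_gbps(2200, 1125, 120, 12)  # standard 1080p totals at 120Hz
    print(f"1080p 120Hz, 12 bpc: {rate:.2f} Gbps, fits HDMI 2.0: {fits('HDMI 2.0', rate)}")
```

In this model 10 bpc at 240Hz squeezes into DisplayPort 1.2, so if the arithmetic fits and the option is still missing, the limit is more likely on the reporting side than on raw bandwidth.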

BTW, I have an iPhone. Which app does the white balance readings for iPhone? And what are you using to adjust the R/G/B? NVIDIA CP? Or something else?
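On what the "6500K" target means numerically: a common quick estimate of correlated color temperature (CCT) is McCamy's approximation from CIE 1931 xy chromaticity, usable roughly in the 2000K to 12500K range. The sketch below assumes the reading is an 8-bit sRGB value of the white screen (for example from a colorimeter or an app that reports RGB); a phone camera's white balance number is only a rough guide, and the function names are hypothetical. sRGB's own white point, D65, comes out at about 6504K:

```python
def srgb_to_linear(c8: int) -> float:
    """Undo the sRGB transfer curve for one 8-bit channel."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def estimate_cct(r8: int, g8: int, b8: int) -> float:
    """Estimate CCT in kelvin of an sRGB colour using McCamy's approximation."""
    r, g, b = (srgb_to_linear(v) for v in (r8, g8, b8))
    # Linear sRGB -> CIE XYZ (D65 white point).
    X = 0.4124 * r + 0.3576 * g + 0.1805 * b
    Y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    Z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    total = X + Y + Z
    if total == 0:
        raise ValueError("black has no chromaticity")
    x, y = X / total, Y / total
    n = (x - 0.3320) / (0.1858 - y)
    return 449 * n**3 + 3525 * n**2 + 6823.3 * n + 5520.33


if __name__ == "__main__":
    for rgb in ((255, 255, 255), (255, 230, 204), (204, 230, 255)):
        print(rgb, f"{estimate_cct(*rgb):.0f} K")
```

A reddish white like (255, 230, 204) lands well below 6500K and a bluish one well above it, which matches the advice in step 2 that the red is usually the channel to pull down.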

Thanks!

Re: The Official *MSI Optix MAG251RX* Owners Thread

Why do people trust the display's scaler more than the video card's scaler? Both do the same thing, mind you, so why the implicit trust in the display's scaler? Sure, we know that the video card's scaler uses bilinear in the case of NVIDIA cards, and some displays use different scaling algorithms, but when it comes to scaling latency, everyone just assumes the display's scaler is better, even though there's a huge variation in display scalers out there...
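For reference, bilinear scaling itself is cheap: each output pixel is a weighted blend of the 2x2 nearest source pixels. A textbook single-channel sketch (pixel-centre aligned, edges clamped) with a hypothetical function name; NVIDIA's actual scaler implementation isn't public, so treat this only as an illustration of the filter type:

```python
def bilinear_resize(src: list[list[float]], out_w: int, out_h: int) -> list[list[float]]:
    """Resize a single-channel image with bilinear interpolation."""
    in_h, in_w = len(src), len(src[0])
    sx, sy = in_w / out_w, in_h / out_h
    out = []
    for oy in range(out_h):
        # Map the output pixel centre back into source coordinates, clamped to the edges.
        fy = min(max((oy + 0.5) * sy - 0.5, 0.0), in_h - 1)
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        wy = fy - y0
        row = []
        for ox in range(out_w):
            fx = min(max((ox + 0.5) * sx - 0.5, 0.0), in_w - 1)
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            wx = fx - x0
            # Blend horizontally on the two source rows, then vertically.
            top = src[y0][x0] * (1 - wx) + src[y0][x1] * wx
            bottom = src[y1][x0] * (1 - wx) + src[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


if __name__ == "__main__":
    print(bilinear_resize([[0.0, 1.0]], 4, 1))
```

Upscaling the two-pixel ramp `[[0.0, 1.0]]` to four pixels returns `[[0.0, 0.25, 0.75, 1.0]]`; the in-between values are the "slight blur" that non-integer scaling introduces whichever side does it.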


The views and opinions expressed in my posts are my own and do not necessarily reflect the official policy or position of Blur Busters.
