I have never owned a monitor with any of these features, but after seeing [this](https://www.testufo.com/blackframes) demonstration I got really intrigued. I mainly play fast-paced competitive games, and using one of these modes sounds like a huge advantage! However, I read that these modes can add a bit of input lag, though I think it also said that good/newer implementations add only a very tiny amount. The other problem I saw is that you can't use adaptive refresh rate technologies alongside these modes, which means that if you want tear-free, super-smooth motion clarity you would have to use VSync, which adds another layer of input lag. Also, Chief Blur Buster mentioned that the best experience with these modes requires refresh rate headroom to avoid strobe crosstalk, which leads me to a question: when activating these motion blur reduction modes, can you set them to any refresh rate, or are you only given a select set of refresh rates?

Sorry if this is a bit messy; I am not used to writing posts this long, so I wasn't sure whether it would have been better to make each sentence a paragraph or just leave it as it is. Thanks for reading, and I would love to hear what you guys have to say about this.

Are these motion blur reduction modes useful for competitive fast paced games?

Re: Are these motion blur reduction modes useful for competitive fast paced games?

LCD strobing adds input lag, so it is not recommended for competitive play, where players want the lowest-input-lag settings. Professional gamers never play league matches with strobe mode On.

For instance, on the ViewSonic XG270 running non-strobed at 240FPS/Hz with VSync On, each pixel is refreshed every 4.17ms, and when it is refreshed you see its color immediately start changing to the next one. This does not increase the panel's default input lag. Unfortunately, no LCD can make this change near-instantly, so there is always some smearing due to slow response time: some GtG transitions take longer than 4.17ms, adding more smearing than the GtG transitions that finish within the refresh interval. On top of that, there is motion blur from the LCD's sample-and-hold behavior.
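As a rough illustration of GtG transitions that overrun the refresh interval, the sketch below compares a set of GtG times against the 240Hz refresh period (1/240 s, about 4.17ms). The GtG figures and the helper names (`refresh_period_ms`, `transitions_exceeding_refresh`) are hypothetical, chosen only for illustration; they are not XG270 measurements.

```python
def refresh_period_ms(hz: float) -> float:
    """Time between two refreshes of the same pixel, in milliseconds."""
    return 1000.0 / hz


def transitions_exceeding_refresh(gtg_ms: dict, hz: float) -> list:
    """Names of GtG transitions that take longer than one refresh period."""
    period = refresh_period_ms(hz)
    return [name for name, t in gtg_ms.items() if t > period]


# Hypothetical GtG times in ms, for illustration only (not measured data).
sample_gtg = {"black->white": 2.9, "dark gray->black": 5.6, "white->mid gray": 3.8}

print(f"{refresh_period_ms(240):.2f} ms")              # 4.17 ms
print(transitions_exceeding_refresh(sample_gtg, 240))  # ['dark gray->black']
```

Any transition in that list is still visibly in progress when the next refresh arrives, which is the extra smearing described above.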

If the XG270 runs at 120FPS/Hz strobed (PureXP+ On), for instance at the Ultra setting (10%, MPRT ~0.5ms), the input lag comes from the wait until you can see the LCD change color. Internally the XG270 keeps working at 240Hz, but now when a pixel is refreshed you can't see it, because it is hidden while the backlight is off. This is exactly the cause of strobing input lag. It is not slow-electronics lag; it is how strobing technology works.

The only way to fix this is to make a gaming monitor with a near-instant response time panel (OLED now; MicroLED and its cheaper alternative QDEL in the future) and use BFI (Black Frame Insertion) to reduce motion blur. For instance, in the LG CX 48" 4K 120Hz OLED TV with BFI, the panel internally works at 240Hz like the XG270, but thanks to the very fast OLED response time, its BFI (OLED Motion Pro) works differently from strobing.

When the CX refreshes a pixel, you see the color change immediately; there is no hidden time! This internal 240Hz OLED rolling scan with a 120FPS/Hz signal and BFI does not add input lag by the way it works: input lag is the same as playing at 120FPS/Hz without BFI. Unfortunately, this TV's electronics add too much input lag (22ms with BFI On), so it is not recommended for competitive play. That is far more than any LCD gaming monitor with strobing.

But a specifically designed OLED gaming monitor with BFI and under-1ms input lag electronics is the way to go. Unfortunately, no panel on the market has these specs. QDEL and MicroLED are faster than OLED, but will take longer to arrive. These panels fix OLED's burn-in issue.

The market is now focused on 240 to 360Hz LCDs, but again, input lag is there when you set 120FPS/Hz strobing; the improvement is lower crosstalk. There should be a line of panel manufacturing research working on OLED gaming BFI, with research to fix burn-in and increase brightness. OLED at 120FPS/Hz with 50% BFI gets close to the feel of old CRT monitors at the same FPS/Hz with VSync On, and improves on LCD strobing because it adds no input lag and no crosstalk. Motion blur could be reduced further with brighter OLEDs.
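To put numbers on the persistence comparison, here is a minimal sketch assuming persistence (MPRT) is simply the lit fraction of each refresh times the refresh period, with GtG ignored. Under that assumption, 50% BFI at 120Hz gives the same ~4.17ms persistence as 240Hz sample-and-hold. `persistence_ms` is a hypothetical helper name.

```python
def persistence_ms(hz: float, duty_cycle: float) -> float:
    """Approximate persistence (MPRT) of an impulsed display, ignoring GtG.

    duty_cycle is the fraction of each refresh during which the image is lit:
    1.0 means plain sample-and-hold, 0.5 means 50% black frame insertion.
    """
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in (0, 1]")
    return duty_cycle * 1000.0 / hz


print(f"{persistence_ms(120, 1.0):.2f}")  # 8.33  (120Hz sample-and-hold)
print(f"{persistence_ms(120, 0.5):.2f}")  # 4.17  (120Hz with 50% BFI)
print(f"{persistence_ms(240, 1.0):.2f}")  # 4.17  (240Hz sample-and-hold)
```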

In my opinion, this is the way to go, but panel manufacturers are not doing it. Again LCD, again backlight, again non-pure black, again low contrast, again backlight bleed, again slow response time. It is obvious that they are dosing out gaming monitor evolution from an economic point of view. That is understandable: users always want the best technology available, but businesses want to make as much money as possible from these technology transitions, so they will surely squeeze LCD to the limit before switching to the next panel technology.

But they take some risk, because if an OLED TV manufacturer like LG launches 120Hz BFI with 1ms input lag in an exclusive gaming mode, gamers who want a gaming screen without motion blur will buy a TV instead, to get the CRT/plasma feel on a PC desktop. A 48" 4K 120Hz TV can be used at native 24" FHD 120Hz with GPU integer scaling, so it is perfectly usable on a PC desktop if it is wall-mounted and the user has enough space for it, and money.

If LG launches an OLED panel with extremely low input lag, that will break the gaming market from a motion-blur-reduction point of view (no crosstalk, no input lag), and the rest of the gaming panel manufacturers will be late to this next generation. They should be thinking about doing something before this occurs, but only JOLED is in this race vs LG, and right now it only has a 22" 4K 60Hz OLED monitor, not a gaming one.

LG is doing a great job; it is the only one really trying to offer users a next-gen display, while all the others are LCD-based. The 2020 CX OLED TV is now VRR capable with Adaptive-Sync (G-SYNC Compatible and FreeSync), and its BFI at 120FPS/Hz looks fantastic. It mixes TV and gaming monitor features; it only needs lower input lag, a bit more brightness, and one DisplayPort 2.0 input, and it would be a great gaming monitor!

Image quality on OLED is far beyond what any LCD can do in black level, contrast, color gamut, viewing angles, weight, size, and energy use.

Racing to a 1000Hz display with LCD is nonsense [Mod EDIT: Nope -- not nonsense]: response time can't fit into the refresh period, so non-strobed operation has smearing, and 120FPS/Hz strobed operation can be crosstalk-free but increases input lag. Gamers don't want more input lag, so we need to go straight to OLED.

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

Re: Are these motion blur reduction modes useful for competitive fast paced games?

I am going to provide a very contrarian view.

First, while CRTs are excellent at eliminating motion blur, traditional CRTs are crappy for the Stroboscopic Effect of Finite Frame Rates. We want to improve beyond CRT. That's why we want a strobeless/impulseless/flickerless method of eliminating motion blur, one that also avoids the stroboscopic effect.

And, Blur Busters exists because "nonsense" is not in our vocabulary. The word "nonsense" is a four letter word.

In the last 10 years, the improvement in LCD capabilities has surprised many. The problem is that a lot of innovations have not yet converged into the same display: extreme brightness (LCD was the first to achieve 10,000 nits, but not in a low-persistence display), perfect blacks (multithousand-element FALD LCDs), and zero blur without compromises (good lagless scanning backlight systems or future 1000Hz systems).

I have visited conventions and seen LCDs that looked better than OLEDs, and vice versa. There are prototype panels that really look amazing. I have feasted my eyes on thousands of LCDs. Do not judge LCD by only looking at sub-$1000 retail displays.

Even LCD is a valid contender for 1000Hz; the valid contenders are LCD, OLED, MicroLED, etc. Some technology bottlenecks with OLED make it a little easier (in some ways) for LCD to reach 1000Hz by 2030, but full-color MicroLED may actually bypass it all and provide superior pixel response.

Perhaps a 1000Hz OLED will be better, but a 1000Hz LCD will have a pixel response fast enough to be clearer than a 750Hz OLED. The GtG may not necessarily HAVE to be perfect, but it is just about (barely) keeping up with the refresh rate race, and there are currently engineering paths to make LCDs get faster. There are some with microsecond pixel response (Blue Phase LCDs), more than ten times faster than today's LCDs.

We are inclusive and keep an open mind. A mandatory Blur Busters philosophy. We exist because we keep an open mind.

Over 40 years ago, when we wore the early LCD wristwatches of the 1970s, we never dreamed LCDs could generate the beautiful wall-hanging displays of today.

The word "nonsense" relating to refresh rates is considered at Blur Busters a non-scientific, luddite word when proper research & study needs to be put into this. After all, some LCD technologies have microsecond pixel responses; they may use a different panel format than TN, IPS, or VA, but they already exist in the laboratory. When one has seen thousands of LCDs (retail, laboratory, at conventions, samples, and more), one tends to gain a more open mind.

Sure, OLED and MicroLED may make it first, and I keep an open mind to that. But right now, it's 50-50 odds that LCD is the first one to reach 1000Hz in a panel-based desktop display.

1/240 sec ≈ 4ms. Half of that is 2ms.

The goal, if you use strobing in esports, is this: the improved human reaction time from strobing for some gaming tactics (if it helps you react more than 2ms faster) can sometimes outweigh the strobe latency penalty.

But it depends on your game, on the motion-dependent gaming tactics, and on other error margins such as microstutter interfering with the blur reduction benefits. You might not benefit from strobing when your gaze is stationary at the crosshairs, but you may benefit more when your eyes track moving objects.

That's why some of the low-lag strobe backlights such as DyAc are used more often by competitive gamers. Not all of them, but a bigger percentage view the strobe penalty as small enough to keep DyAc turned on. If you look at the players, a lot of them turn off DyAc, but not all of them.

Since some wording here may be misunderstood by new forum readers, here's a graph:

Humans will react to the first photons of the GtG long before GtG100%. GtG10% is already a dark gray in the transition from black to white; GtG50% is a middle gray. Sure, we prefer instant GtG, but the lag stopwatch (from a human reaction time perspective) doesn't end at GtG100%.
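One way to see why the reaction-time stopwatch does not wait for GtG100%: under an idealised first-order (exponential) transition model, a pixel reaches GtG10% and GtG50% early, while 100% is only approached asymptotically. Real LCD transitions (especially with overdrive) are not purely exponential, and the 1ms time constant and the function name below are made up for illustration.

```python
import math


def time_to_fraction_ms(fraction: float, tau_ms: float) -> float:
    """Time for an idealised exponential pixel transition to cover `fraction`
    of its swing, using f(t) = 1 - exp(-t / tau)."""
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in [0, 1); 100% is only approached asymptotically")
    return -tau_ms * math.log(1.0 - fraction)


tau = 1.0  # hypothetical time constant in ms, for illustration only
for pct in (10, 50, 90, 99):
    print(f"GtG{pct}%: {time_to_fraction_ms(pct / 100, tau):.2f} ms")
# GtG10%: 0.11 ms
# GtG50%: 0.69 ms
# GtG90%: 2.30 ms
# GtG99%: 4.61 ms
```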

The motion blur can be a bigger problem than GtG sometimes, though.

Did you know that BenQ's strobing implementation, DyAc, stands for Dynamic Accuracy, and that Gigabyte AORUS's strobing implementation is called Aim Stabilizer? The motion blur reduction benefits when trying to re-aim a shaky automatic gun can outweigh the strobe lag in many games.

Reduced blur creates reaction-time improvements that outweigh the tiny ~2ms strobe lag penalty.

It is only ~2ms at screen centre, given the half-time of scanout (see High Speed Videos of Display Scanout): that is the time differential between the pixel refresh in darkness and the strobe flash, as seen in High Speed Video of LightBoost.
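A minimal sketch of where the half-refresh figure comes from, assuming a top-to-bottom scanout spanning the whole 240Hz refresh and a single global flash fired at the end of the scanout (GtG settle time and any extra processing ignored). Each row sits refreshed but dark until the flash, so the top row waits a full scanout, the bottom row almost nothing, and the screen centre about half. The function name is hypothetical.

```python
def wait_until_flash_ms(row: int, rows: int, scanout_ms: float) -> float:
    """Time a row spends refreshed but dark before a single global strobe flash.

    Assumes rows are refreshed top to bottom at a constant rate over
    scanout_ms, and the flash fires at the end of the scanout.
    """
    refreshed_at = scanout_ms * row / rows  # when this row receives new data
    return scanout_ms - refreshed_at        # flash happens at end of scanout


rows = 1080
scanout = 1000.0 / 240  # scanout assumed to span one full 240Hz refresh
for label, row in (("top", 0), ("centre", rows // 2), ("bottom", rows - 1)):
    print(f"{label}: {wait_until_flash_ms(row, rows, scanout):.2f} ms")
# top: 4.17 ms
# centre: 2.08 ms
# bottom: 0.00 ms
```

If the flash is delayed further to let GtG settle, every row's wait grows by that same delay.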

Sure, some strobe modes (like LightBoost) have WAY more lag, because they buffer longer to do more processing (advanced strobe-optimized overdrive, etc), but that's not really necessary when you have a fast scanout and fast enough pixel response.

Some modes, such as old LightBoost, were much, much more laggy. LightBoost really does Y-axis-optimized overdrive, with a different overdrive setting per scanline (pixel row), because of the different time differentials between the panel scanout (in the dark) and the global strobe flash (seen). This was more important when GtG was slower and you strobed closer to max Hz. But it's simpler to reduce strobe crosstalk via a large VBI (or Large Vertical Totals), eliminating the need for such advanced algorithms.
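To illustrate why a large vertical total helps, here is a sketch assuming a constant line time within each refresh: raising the vertical total (active lines plus blanking lines) at a fixed refresh rate makes the active area scan out faster and leaves a longer blanking interval in which GtG can settle before the strobe flash. The 1080-line panel and the totals of 1125 and 2250 are illustrative values, not the timings of any particular monitor, and the function name is hypothetical.

```python
def scanout_and_blanking_ms(hz: float, active_lines: int, vertical_total: int):
    """Split one refresh period into active scanout time and vertical blanking.

    Assumes every line (active or blanking) takes the same time, so a larger
    vertical total at the same refresh rate means a faster scanout of the
    active area and a longer blanking interval.
    """
    if vertical_total < active_lines:
        raise ValueError("vertical_total must be at least active_lines")
    period = 1000.0 / hz
    line_time = period / vertical_total
    scanout = active_lines * line_time
    return scanout, period - scanout


for vt in (1125, 2250):
    scan, vbi = scanout_and_blanking_ms(120, 1080, vt)
    print(f"VT {vt}: scanout {scan:.2f} ms, blanking {vbi:.2f} ms")
# VT 1125: scanout 8.00 ms, blanking 0.33 ms
# VT 2250: scanout 4.00 ms, blanking 4.33 ms
```

Under these assumptions, a vertical total of 2250 at 120Hz uses the same line rate as 1125 lines at 240Hz, so the 120Hz frame is scanned out at roughly 240Hz speed and over 4ms of darkness is left for pixels to settle: one way of picturing refresh rate headroom.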

Also, 1000Hz can make strobing unnecessary.

Real life does not strobe to reduce motion blur.

Also, CRT impulses, LCD strobing, and OLED strobing all create stroboscopic artifacts.

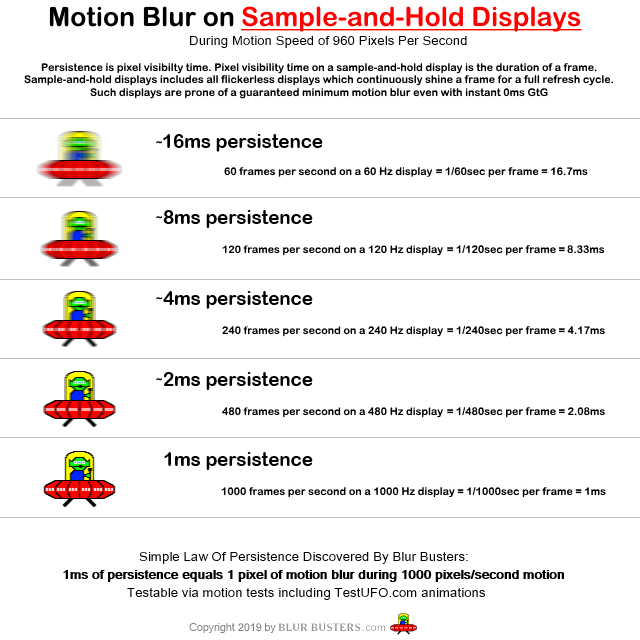

To emulate real life by reducing display motion blur without strobing, a full-persistence (sample-and-hold) display must simultaneously be low-persistence. 1ms persistence with no black periods in between requires filling the whole second with 1ms frames, i.e. 1000fps at 1000Hz, in order to have 1ms MPRT without strobing.
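The arithmetic behind "1000fps at 1000Hz for 1ms MPRT" can be sketched as follows, assuming instant GtG and frame rate equal to refresh rate. It also uses the Blur Busters rule of thumb that 1ms of persistence produces about 1 pixel of eye-tracking motion blur per 1000 pixels/second of motion. Function names are hypothetical.

```python
def sample_and_hold_mprt_ms(fps: float) -> float:
    """Persistence of a flicker-free display showing `fps` unique frames per
    second, assuming frame rate == refresh rate and instant GtG."""
    return 1000.0 / fps


def motion_blur_px(speed_px_per_s: float, persistence_ms: float) -> float:
    """Rule-of-thumb eye-tracking blur: 1ms of persistence smears about
    1 pixel for every 1000 pixels/second of motion."""
    return speed_px_per_s * persistence_ms / 1000.0


for fps in (60, 240, 1000):
    mprt = sample_and_hold_mprt_ms(fps)
    blur = motion_blur_px(1000, mprt)
    print(f"{fps} fps: {mprt:.2f} ms MPRT, {blur:.1f} px blur at 1000 px/s")
# 60 fps: 16.67 ms MPRT, 16.7 px blur at 1000 px/s
# 240 fps: 4.17 ms MPRT, 4.2 px blur at 1000 px/s
# 1000 fps: 1.00 ms MPRT, 1.0 px blur at 1000 px/s
```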

Proof that well-developed strobing adds little lag: BenQ ZOWIE DyAc.

Also, ViewSonic XG270 strobing at 224Hz and 240Hz adds less than 3ms of lag at screen centre, so it's well within the error margins where improved human reaction time outweighs strobe lag. Some gaming tactics (e.g. a low-altitude, high-speed helicopter flyby over camouflaged enemies, trying to find a camouflaged flying ball in Rocket League while turning your car around, or a very pan-heavy MOBA where you want to see things while panning) can produce measurable reaction time improvements, provided other weak links that kill the strobing benefits are fixed (e.g. by using a 1600dpi mouse).

We welcome LCD to 1000Hz too. All horses are fair game in this refresh rate race.

On some tubes, a CRT phosphor dot can emit over 100,000 nits for an instant. We can't strobe OLED that bright, but the world's first 10,000-nit HDR flat panel is an LCD: I saw Sony's 10,000-nit prototype HDR LCD. That would be delicious for strobing: a 90% persistence reduction while still having 1,000 nits of HDR.
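The trade-off in the 10,000-nit example follows from the Talbot-Plateau law: above the flicker-fusion threshold, a strobed light looks as bright as its time average. A minimal sketch, assuming perceived brightness is simply peak luminance times the fraction of time lit (real backlights and human vision add complications this ignores); the function names are hypothetical.

```python
def perceived_nits(peak_nits: float, duty_cycle: float) -> float:
    """Time-averaged luminance of a light strobed above the flicker-fusion
    threshold (Talbot-Plateau law): peak luminance times fraction of time lit."""
    return peak_nits * duty_cycle


def persistence_reduction(duty_cycle: float) -> float:
    """Fraction of sample-and-hold persistence removed by strobing at duty_cycle."""
    return 1.0 - duty_cycle


peak = 10_000  # nits, the prototype HDR LCD figure
duty = 0.10    # backlight lit for 10% of each refresh
print(f"{perceived_nits(peak, duty):.0f} nits")                   # 1000 nits
print(f"{persistence_reduction(duty):.0%} shorter persistence")  # 90% shorter persistence
```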

The plain fact is that LCD has a huge advantage in its ability to use outsourced light. LEDs can be stadium-bright, and you can focus all of that light (e.g. with water-cooled light sources, prisms, etc.) through an LCD.

Now, that said, the great news is that MicroLED can output a light cannon: there are MicroLEDs capable of momentarily matching CRT brightness in lumens per dot. This will produce some amazing persistence reductions when the time comes.

But ultimately, by the time this comes, BFI will just be garbage. BFI is not real life. Real life doesn't strobe. Real life doesn't flicker. Just eliminate motion blur strobelessly, using a superior artifactless lagless frame rate amplification technology that looks perfect (zero soap opera effect). Flicker is a humankind band-aid. Black frame insertion is a humankind band-aid. Phosphor impulsing is a humankind band-aid. We have to impulse a display only because it's not possible to emulate analog motion yet. But we're now getting closer (via ultrahigh refresh rates). Once you've feasted your eyes on a prototype 1000fps @ 1000Hz, it's even more amazing than CRT. Blurless sample-hold. Strobeless ULMB. Lagless ULMB. Goodbye BFI, goodbye phosphor, goodbye strobing, good riddance! But it will take a long time (a decade or two) before such technology becomes mainstream.

Blur Busters is a strobing fan, and we are born because of strobing (LightBoost FTW!), but strobing is not the Holy Grail of humankind.

I can now buy a machine-manufactured strip of 300 LEDs on a 5-meter ribbon for only $10 on Alibaba.

They already make machines that manufacture Jumbotron modules, such as 32x32 RGB discrete LED panels; the raw screen material of Jumbotrons now costs about $200 per square meter in China (thanks to assembly-line manufacture of 32x32 and 64x64 RGB LED modules).

They are about to make machines that produce ultra-cheap FALD MicroLED arrays for LCDs. I believe that by the year 2025, the average $400-$500 gaming monitor (in 2020 dollars, before inflation) will commonly include a cheap FALD MicroLED sheet. The technology won't be cheap enough to make 1080p MicroLED displays, but it will soon be cheap enough to make 2000-LED FALD sheets for only a few dollars apiece by the mid-2020s.

This finally, for the first time, brings perfect-looking LCD blacks (with no visible haloing from low FALD counts) to inexpensive $500 gaming monitors, unlike the extra-expensive home theater displays that used to be the sole holders of FALD technology. Sure, there are a few below-the-noise-floor photons leaking, but that's noise-floor stuff like OLED banding (a common complaint), so OLED vs LCD will have very well-balanced pros and cons for a long time to come.

This is only one breakthrough technology example. I have seen multiple other breakthrough technologies that guarantee LCD stays alive for about 20 or even 30 more years, so I'm mic-dropping the "LCD is dead" debate. Yes, LCD will die a slow death when cheap retina-resolution MicroLEDs arrive (superior to OLED and superior to LCD, and eventually superior to CRT), but it won't be the sudden death that many claim.

One huge problem that has hurt LCD is the "race to the bottom". Some aspects of LCD are worse than 20 years ago. People don't want to pay $5000 for an LCD that looks better than an OLED.

Back in 2001, the IBM T221 (the world's first 4K LCD) did not have much of an IPS glow issue. But today's cheaply made LCDs have more nonuniformities, and so on.

The bottom line is that you cannot judge a book by its cover if you have worked mainly with sub-$1000 LCDs. If you travel the world to display conventions, display prototypes, and laboratories, you will understand better what I am talking about.

I understand why many people make assumptions on LCD limitations because we judge by the $500 monitor. But the fact is CRT-quality blacks are already achieved in LCDs. Just not at the $500 level.

Blur Busters helped prove to the world that motion blur can be eliminated on LCDs, back in the day when people thought motion blur could never be fixed on an LCD; that is how Blur Busters was born. Back in the hobby days, the Blur Busters website used to be www.scanningbacklight.com, and I still have a very old scanning backlight FAQ from the original 2012 site.

However, a lot has changed since then, and it will become much easier/cheaper to do FALD LCDs in gaming monitors in the coming years, as a stopgap before full-RGB MicroLED slowly becomes cheap/commodity/retina (~10-20 years). We'll be able to enjoy great CRT-like blacks without blooming artifacts much sooner.

While approximately half of your post had good (albeit idealistic) points, the word "nonsense" is blasphemy to Blur Busters' existence.

MicroLED is pretty much the ultimate goal but it's going to be a long time before it's affordable/cheap.

The strobe penalty in a good high-Hz strobed monitor is only half a refresh cycle.

prosettings.net shows that a lot of players use DyAc because of its tiny strobe penalty. Not all of them, but a lot of them.

AddictFPS wrote (17 May 2020, 05:20):

> For instance, on the ViewSonic XG270 running non-strobed at 240FPS/Hz with VSync On, each pixel is refreshed every 4.17ms, and when it is refreshed you see its color immediately start changing to the next one. This does not increase the panel's default input lag. Unfortunately, no LCD can make this change near-instantly, so there is always some smearing due to slow response time: some GtG transitions take longer than 4.17ms, adding more smearing than the GtG transitions that finish within the refresh interval. On top of that, there is motion blur from the LCD's sample-and-hold behavior.

To other readers wanting more information about pixel response, see Pixel Response FAQ: GtG Versus MPRT to understand how GtG is measured.

AddictFPS wrote (17 May 2020, 05:20):

> If the XG270 runs at 120FPS/Hz strobed (PureXP+ On), for instance at the Ultra setting (10%, MPRT ~0.5ms), the input lag comes from the wait until you can see the LCD change color. Internally the XG270 keeps working at 240Hz, but now when a pixel is refreshed you can't see it, because it is hidden while the backlight is off. This is exactly the cause of strobing input lag. It is not slow-electronics lag; it is how strobing technology works.

It's true there is strobe lag, but the strobe lag penalty is only half a refresh time. If you want less strobe lag, use 224Hz. It's a bit more crosstalky, but it still produces useful esports-quality reaction-time improvements for some gaming tactics, for purposes such as aim stabilizing.

AddictFPS wrote (17 May 2020, 05:20):

> The only way to fix this is to make a gaming monitor with a near-instant response time panel (OLED now; MicroLED and its cheaper alternative QDEL in the future) and use BFI (Black Frame Insertion) to reduce motion blur. For instance, in the LG CX 48" 4K 120Hz OLED TV with BFI, the panel internally works at 240Hz like the XG270, but thanks to the very fast OLED response time, its BFI (OLED Motion Pro) works differently from strobing.

I agree, but the reality is that LCD will continue to be around for decades. LCDs will continue to improve, and LCD will remain a horse in the race for a long time to come, because there are amazing LCD improvements yet to come.

AddictFPS wrote (17 May 2020, 05:20):

> When the CX refreshes a pixel, you see the color change immediately; there is no hidden time! This internal 240Hz OLED rolling scan with a 120FPS/Hz signal and BFI does not add input lag by the way it works: input lag is the same as playing at 120FPS/Hz without BFI. Unfortunately, this TV's electronics add too much input lag (22ms with BFI On), so it is not recommended for competitive play. That is far more than any LCD gaming monitor with strobing.

The good news is that well-developed strobing implementations only add half a refresh cycle of lag.

AddictFPS wrote: ↑17 May 2020, 05:20
> But a specifically designed OLED BFI gaming monitor with under-1ms input lag electronics is the way to go. Unfortunately, there is no panel on the market with these specs. QDEL and MicroLED are faster than OLED but will take longer to arrive. These panels fix OLED's fast burn-in issue.

Agree. But that's idealism.

We welcome LCD to 1000Hz too. All horses are fair game in this refresh rate race.

There are some issues with OLED, including something called the Talbot-Plateau law: the eye perceives the time-averaged brightness of a flickering light, so cutting persistence means boosting peak brightness to keep the same average. Outsourced light (e.g. a backlight) is easier to make bright than a direct light source (e.g. OLED pixels). The problem is that tiny microwires can't deliver enough current to make the pixels output enough nits.

On some tubes, a CRT phosphor dot can emit over 100,000 nits for an instant. We can't strobe OLED that bright, but the world's first 10,000-nit HDR flat panel is an LCD: I saw the Sony 10,000-nit prototype HDR LCD. That would be delicious for strobing: a 90% persistence reduction while still delivering 1,000 nits of HDR.
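The brightness trade-off follows from the Talbot-Plateau law: above the flicker fusion threshold, the eye perceives the time-averaged luminance. A minimal sketch, assuming an ideal square-wave strobe (fully lit at peak brightness, otherwise fully dark) and treating "persistence reduction" as relative to full sample-and-hold at the same refresh rate; the helper names are illustrative.

```python
def perceived_nits(peak_nits: float, duty_cycle: float) -> float:
    """Talbot-Plateau law: above the flicker fusion threshold the eye sees the
    time-averaged luminance, i.e. peak brightness x fraction of time lit."""
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in (0, 1]")
    return peak_nits * duty_cycle


def required_peak_nits(target_nits: float, persistence_reduction: float) -> float:
    """Peak brightness needed to keep `target_nits` on average after cutting
    persistence by `persistence_reduction` (0.9 means 90% shorter)."""
    duty_cycle = 1.0 - persistence_reduction
    if duty_cycle <= 0.0:
        raise ValueError("persistence_reduction must be below 1.0")
    return target_nits / duty_cycle


if __name__ == "__main__":
    # 10,000-nit peak strobed with a 90% persistence reduction (10% duty cycle)
    print(perceived_nits(10_000, 0.10))
    print(required_peak_nits(1_000, 0.90))
```

With a 10,000-nit peak and a 10% duty cycle, about 1,000 nits remain on average, matching the figure in this post.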

The plain fact is that LCD has a huge advantage: it can use outsourced light. LEDs can be stadium-bright, and you can concentrate that light (e.g. water-cooled light sources, prisms, etc.) and focus it all through an LCD.

That said, the great news is that MicroLED can output a light cannon: there are MicroLEDs capable of momentarily matching CRT brightness in lumens per dot. This will produce some amazing persistence reductions when the time comes.

But ultimately, by the time this comes, BFI will just be garbage. BFI is not real life. Real life doesn't strobe. Real life doesn't flicker. Just eliminate motion blur strobelessly, using a superior artifactless lagless frame rate amplification technology that looks perfect (zero soap opera effect). Flicker is a humankind band-aid. Black frame insertion is a humankind band-aid. Phosphor impulsing is a humankind band-aid. We have to impulse a display only because it's not possible to emulate analog motion yet. But we're now getting closer (via ultrahigh refresh rates). Once you've feasted your eyes on a prototype 1000fps @ 1000Hz, it's even more amazing than CRT. Blurless sample-hold. Strobeless ULMB. Lagless ULMB. Goodbye BFI, goodbye phosphor, goodbye strobing, good riddance! But it will take a long time (a decade or two) before such technology becomes mainstream.

Blur Busters is a strobing fan, and we were born because of strobing (LightBoost FTW!), but strobing is not the Holy Grail of humankind.

AddictFPS wrote: ↑17 May 2020, 05:20
> Research is fixing burn-in and increasing brightness. OLED at 120FPS/Hz with 50% BFI gets close to the feel of old CRT monitors at the same FPS/Hz with VSync On, and it improves on LCD strobing because it adds no input lag and no crosstalk. Motion blur can be reduced further with brighter OLEDs.

Brightness-wise, we should bypass OLED and go straight to MicroLED.

AddictFPS wrote: ↑17 May 2020, 05:20
> In my opinion, it is the way to go, but panel manufacturers don't do it. Again LCD, again backlight, again non-pure blacks, again low contrast, again backlight bleed, again slow response time. It is obvious that they are rationing gaming monitor evolution from an economic point of view. That is understandable: users always want the best tech available, but businesses want to make as much money as possible from these tech transitions, so they will surely squeeze LCD to the limit before changing to the next panel tech.

LCD can achieve perfect blacks with multi-thousand-element FALD backlights. I've seen some LCDs that look better than OLEDs. Have you been travelling to dozens of conventions? Have you flown over the Atlantic Ocean to visit display manufacturers? Have you flown over the Pacific Ocean to visit display manufacturers? I have done all of the above. I've feasted my eyes on thousands of displays in the last 8 years of Blur Busters' existence. Take my word: LCD is a horse in the blacks race too.

I can now buy a machine-manufactured strip of 300 LEDs on a 5-meter ribbon for only $10 off Alibaba.

They already make machines that manufacture Jumbotron modules, like 32x32 RGB discrete LED panels. The raw screen material of Jumbotrons now costs about $200 per square meter in China (thanks to assembly-line manufacture of 32x32 and 64x64 RGB LEDs).

Soon, they will have machines that make ultra-cheap FALD MicroLED arrays for LCDs. I believe that by the year 2025, the average $400-$500 gaming monitor (in 2020 dollars, before inflation) will commonly include a cheap FALD MicroLED sheet. The technology won't be cheap enough to make 1080p MicroLED displays, but it will soon be cheap enough to make 2000-LED FALD sheets for only a few dollars apiece by the mid 2020s.

This finally brings, for the first time, perfect-looking LCD blacks (with none of the visible haloing of low FALD zone counts) to inexpensive $500 gaming monitors, unlike the extra-expensive home theater displays that used to be the sole holders of FALD technology. Sure, a few below-the-noise-floor photons leak, but that's noise-floor stuff comparable to OLED banding (a common complaint), so OLED vs. LCD will have very well-balanced pros and cons for a long time to come.
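Why higher zone counts reduce haloing can be sketched with a deliberately naive local-dimming model. This is an illustrative assumption, not any vendor's algorithm: a zone's LED turns fully on whenever any pixel inside it is lit, and every black pixel sharing a lit zone counts as halo (light spreading between zones and the LCD's own contrast are ignored). The 64x36 grid (2304 zones) stands in for the roughly 2000-LED FALD sheets mentioned in this post; the function names are hypothetical.

```python
from typing import Iterable, Set, Tuple

Pixel = Tuple[int, int]


def halo_pixels(width: int, height: int, zones_x: int, zones_y: int,
                lit: Iterable[Pixel]) -> int:
    """Count black pixels that end up backlit under naive max-per-zone dimming."""
    if width % zones_x or height % zones_y:
        raise ValueError("zone grid must divide the resolution evenly")
    zone_w, zone_h = width // zones_x, height // zones_y
    lit_set: Set[Pixel] = set(lit)
    # A zone's LED is on if any pixel inside it is lit.
    lit_zones = {(x // zone_w, y // zone_h) for x, y in lit_set}
    backlit_area = len(lit_zones) * zone_w * zone_h
    return backlit_area - len(lit_set)  # backlit pixels that should be black


def square(x0: int, y0: int, size: int) -> Set[Pixel]:
    """A small bright object (e.g. a star or cursor) on a black background."""
    return {(x, y) for x in range(x0, x0 + size) for y in range(y0, y0 + size)}


if __name__ == "__main__":
    star = square(100, 100, 10)
    for zx, zy in ((16, 9), (64, 36)):  # 144 zones vs 2304 zones
        print(f"{zx * zy} zones: {halo_pixels(1920, 1080, zx, zy, star)} halo pixels")
```

For a 10x10 bright object on a black 1920x1080 screen, going from 144 zones to 2304 zones shrinks the lit-but-should-be-black area from 14,300 pixels to 800.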

This is only 1 breakthrough technology example. I have seen multiple other breakthrough technologies that guarantee LCD stays alive for another 20 or even 30 years, so I'm mic-dropping the "LCD is dead" debate. LCD will die a slow death, yes, when cheap retina-resolution MicroLEDs arrive (superior to OLED and superior to LCD, and eventually able to be superior to CRT), but it won't be the sudden death that many claim.

AddictFPS wrote: ↑17 May 2020, 05:20
> But they are taking a risk, because if an OLED TV manufacturer like LG launches 120Hz BFI with 1ms input lag in an exclusive gaming mode, gamers who want a gaming screen without motion blur will buy the TV instead, to get the CRT/Plasma feel on the PC desktop. A 48" 4K 120Hz TV can be used as native 24" FHD 120Hz with GPU integer scaling, so it is perfectly usable for the PC desktop if it is wall-mounted and the user has enough space for it, and money.

On the LG, 120Hz OLED BFI gives 8ms of motion blur, much more motion blur than LCD strobing. I've not seen an OLED strobe as well as an LCD just yet, unfortunately. Even the best OLED strobing I've seen (the Oculus Rift VR headset) has 2ms persistence, which is about 4x more motion blur than PureXP Ultra on the ViewSonic XG270. There is a bottleneck partially caused by the Talbot-Plateau law, combined with the larger noise margin (noisy/grainy OLED blacks, dark shades, banding) that OLED strobing amplifies. Pushing the needle on LCD and OLED reveals different technology bottlenecks. (LCD GtG becomes an easier horse to solve in some ways, to a certain extent.)

AddictFPS wrote: ↑17 May 2020, 05:20
> If LG launches an OLED panel with extremely low input lag, that will break the gaming market from a motion blur reduction point of view (no crosstalk, no input lag), and the rest of the gaming panel manufacturers will be late to this next generation. They should be doing something about it before this happens, but only JOLED is in this race vs. LG, and right now it only has a 22" 4K 60Hz OLED monitor, not aimed at gaming.

At this point, we're in idealistic speculation. The matter of fact is that real life is sometimes disappointing, and we have to keep the door open to all technologies: LCD, OLED, MicroLED.

AddictFPS wrote: ↑17 May 2020, 05:20
> The race to a 1000Hz display with LCD is nonsense [Mod EDIT: Nope -- not nonsense]: response time can't fit into the refresh rate, so non-strobed has smearing, and 120FPS/Hz strobed can be crosstalk-free but increases input lag, and gamers don't want more input lag, so we need to go straight to OLED.

And "nonsense" is a verboten word on Blur Busters. Long-time readers know how we mythbust this kind of stuff.

One huge problem that has hurt LCD is the "race to the bottom". Some aspects of LCD are worse than 20 years ago. People don't want to pay $5000 for an LCD that looks better than an OLED.

Back in 2001, the IBM T221 (the world's first 4K LCD) did not have much of an IPS glow issue. But today's cheaply made LCDs have more nonuniformities, and so on.

The bottom line is that you cannot judge a book by its cover if you have worked mainly with sub-$1000 LCDs. If you travel the world to display conventions, display prototypes, and laboratories, you will better understand what I am talking about.

I understand why many people make assumptions about LCD limitations, because we judge by the $500 monitor. But the fact is that CRT-quality blacks have already been achieved in LCDs, just not at the $500 level.

Blur Busters helped prove to the world that motion blur can be eliminated on LCDs, back in the day when people thought motion blur could never be fixed on an LCD. That is how Blur Busters was born. Back in the hobby days, the Blur Busters website used to be www.scanningbacklight.com, and I still have a very old scanning backlight FAQ from the original 2012 site.

However, a lot has changed since then, and it will become much easier/cheaper to do FALD LCDs in gaming monitors in the coming years, as a stopgap before full-RGB MicroLED slowly goes cheap/commodity/retina (~10-20 years). We'll be able to enjoy great CRT blacks without blooming artifacts much sooner.

While approximately half of your post had good (albeit idealistic) points, the word "nonsense" is blasphemy to Blur Busters' existence.

MicroLED is pretty much the ultimate goal but it's going to be a long time before it's affordable/cheap.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: Are these motion blur reduction modes useful for competitive fast paced games?

I retract the word "nonsense". I was a bit frustrated at the time of posting; I shouldn't have said it.

Moreover, I did not know that microsecond LCDs are possible; this changes everything! Is this GtG time the minimum, maximum or average? Any chance of seeing this in shops soon?


About the CX at 120FPS/Hz with BFI: 8.33ms is the motion blur without BFI. There are 3 BFI levels (low, medium, high). High BFI keeps the screen black 60% of the time, which cuts the 8.33ms by 60% to 3.33ms. Not bad; it's like a not-so-dim strobed LCD. Flicker can't be seen, the motion blur reduction quality is really good, and people use it to get a CRT/Plasma TV motion feel. But the input lag is atrocious. Moreover, full-screen white only reaches 160cd/m², and losing 60% of that leaves 64cd/m², which is seriously dim. OLED BFI is only for dim rooms with soft light sources, because the surface is fully glossy. Maybe an anti-glare coating could be installed.
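The BFI arithmetic in this post, written out. This assumes both persistence and full-screen brightness scale with the fraction of each 120Hz refresh during which the image is lit (40% on the "high" setting, which is 60% black):

$$
\begin{aligned}
\text{persistence}_{\text{high BFI}} &= \frac{1000\ \text{ms}}{120} \times (1 - 0.60) \approx 8.33\ \text{ms} \times 0.40 \approx 3.33\ \text{ms} \\
L_{\text{high BFI}} &= 160\ \text{cd/m}^2 \times (1 - 0.60) = 64\ \text{cd/m}^2
\end{aligned}
$$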

I trade input lag on LCD to get strobing; for me it's worth it! When I said to go straight to OLED BFI, it was to fix input lag, if the input lag is implemented well. That doesn't take away from the value of current LCD strobing, which, when well tuned as Blur Busters does, is a work of art.

I had never heard that microwires are the cause of low OLED brightness. I read that LG limits power to combat burn-in and cap maximum consumption (Automatic Brightness Control): small screen areas can spike up to 750 nits, full screen 160. But about MicroLED, I read that Samsung's microwires and welds get too hot, which is a challenge: very high temperature spikes. Only high-quality materials can fix this; they have fixed it, but MicroLED is very expensive. Anyway, I hope Samsung has plans to launch an FHD MicroLED monitor, so we can compare it with LCD and see if it's worth it.

QNED and QDEL are interesting intermediate Samsung techs between current QLED and high-end MicroLED: cheaper than MicroLED but good quality, more mainstream, with pure blacks to fight LG OLED.

If I'm not wrong:

1) QLED uses QDs for the backlight and liquid crystal for the image: slow response time, no pure blacks.

2) QNED eliminates liquid crystal: a blue MicroLED FALD is the backlight, and QDs are used for color conversion. Sounds very good! Maybe a big jump in response time, and true blacks.

3) QDEL uses QDs directly to emit light (electroluminescent) and can be cheap to make (printed).

I hope Samsung doesn't take long to launch these "true" QD screens. They could be a serious competitor to LCD in terms of quality/cost.


Re: Are these motion blur reduction modes useful for competitive fast paced games?

To be honest, this has spiraled off topic, as OP didn't ask about a QLED vs. OLED contest. To answer OP a bit, as a highly competitive player in various games, this is my opinion:

Motion blur reduction helps a lot in games where you run fast and aim while moving. You benefit from surroundings not being too blurry, and if targets are rushing you and you are tracking them while moving, they will be clearer. For me this is a big benefit in many games and gives me the superior clarity needed for better hits. In some cases recoil control becomes sharper, as the motion of spraying hard is clearer and you can better see how to control the spray. This can be very subtle, though.

By contrast, in games where you're mostly staring at spots and camping corners, you won't see any benefit from these technologies. Also, if you're very used to watching only the crosshair, you probably won't notice the difference.

This is my opinion.


- thatoneguy

- Posts: 181

- Joined: 06 Aug 2015, 17:16

Re: Are these motion blur reduction modes useful for competitive fast paced games?

I'm really curious about this FRAT thing. What's its status?

I understand you might be tied to NDAs and might not be able to tell us everything, but it'd be cool to hear an update about it.

The concept of perfect lagless interpolation like that seems like a pipe dream.

One would think you'd need some really cutting-edge, powerful and efficient (both in terms of electricity and thermals) technology to achieve such a feat. This tech feels like it's at least 3 decades away.

Getting CRT-tier input lag (micro/nanosecond) with perfect interpolation seems extremely unbelievable to me.

To me, if we could get perfect interpolation up to 120fps, that would be amazing, since you can easily combine that with strobing and get great results (especially for fixed-framerate content like 30fps, 60fps, heck, even 24fps movies). Especially if you combine that with MicroLED technology.

60Hz flicker is intolerable at PC viewing distance, but most people don't mind 120Hz, plus you can also tweak the persistence.

Re: Are these motion blur reduction modes useful for competitive fast paced games?

I think lagless interpolation can't be done, because the interpolation processor needs to know the next frame to create the interpolated one, so there is always 1 frame plus the processing time of the interpolated frame of input lag. Besides that, random artifacts can occur in some non-linear motion scenes.
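The lower bound described here can be sketched as below. It models only classic black-box interpolation: to show an in-between frame after frame N, the interpolator must already have frame N+1, so each real frame is held back by at least one source frame time. The 2ms processing time in the example is a hypothetical figure, and the function name is illustrative.

```python
def interpolation_min_added_lag_ms(source_fps: float, processing_ms: float) -> float:
    """Lower bound on extra input lag for classic black-box interpolation.

    The in-between frame after frame N needs frame N+1, so frame N is held back
    at least one source frame time, plus the time to compute the in-between frame.
    """
    if source_fps <= 0 or processing_ms < 0:
        raise ValueError("source_fps must be > 0 and processing_ms >= 0")
    return 1000.0 / source_fps + processing_ms


if __name__ == "__main__":
    assumed_processing_ms = 2.0  # hypothetical interpolator cost, for illustration
    for fps in (45, 60, 100):
        lag = interpolation_min_added_lag_ms(fps, assumed_processing_ms)
        print(f"{fps} fps source: at least {lag:.1f} ms of added lag")
```

At a 45fps source that is already over 24ms before any display lag is counted.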

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

- Contact:

Re: Are these motion blur reduction modes useful for competitive fast paced games?

thatoneguy wrote: ↑19 May 2020, 20:24
> The concept of perfect lagless interpolation like that seems like a pipe dream.

Classic assumption.

Not all frame rate amplification technologies use "interpolation".

It doesn't have interpolation lag and it doesn't have interpolation artifacts.

NVIDIA is already working on it: Frame Rate Amplification Technologies.

AddictFPS wrote: ↑20 May 2020, 07:38
> I think lagless interpolation can't be done, because the interpolation processor needs to know the next frame to create the interpolated one, so there is always 1 frame plus the processing time of the interpolated frame of input lag. Besides that, random artifacts can occur in some non-linear motion scenes.

Classic assumption.

It is not called "interpolation".

There are other methods besides interpolation, such as extrapolation and reprojection.

For example, the mouse is 1000Hz, far beyond the frame rate. If the game knows what direction things are going in, it just has to tell the frame rate amplification technology. If the GPU knows about the mouse, it can shift things around faster with fewer GPU re-renders. In that sense, it no longer predicts the movement; it knows about the movement.

Oculus VR manages to do it: I am already playing Half Life Alyx at 45fps->90fps, converted in an essentially perceptually lagless way. But Oculus uses "reprojection" instead of "interpolation". There may be more frametime lag from having only 100fps at the GPU level instead of 1000fps, but there's no need to know what the next GPU frame is.
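A toy illustration of why reprojection does not need the next GPU frame: it takes the last rendered frame and shifts it using the newest mouse or head-tracker sample. This sketch is a simplified assumption (one row of pixels, camera rotation only, no parallax or moving objects), not Oculus ASW or any vendor's actual algorithm; the function and parameter names are hypothetical.

```python
from typing import List, Optional


def reproject_yaw(frame: List[str], rendered_yaw_deg: float, current_yaw_deg: float,
                  px_per_degree: float, fill: Optional[str] = None) -> List[Optional[str]]:
    """Rotation-only reprojection of one row of pixels (hypothetical helper).

    `frame` was rendered with the camera at `rendered_yaw_deg`; the newest
    mouse/head-tracker sample says it now points at `current_yaw_deg`.
    Turning right moves the scene left on screen, so screen column x now shows
    rendered column x + shift. Columns the render never covered get `fill`.
    """
    shift = round((current_yaw_deg - rendered_yaw_deg) * px_per_degree)
    width = len(frame)
    out: List[Optional[str]] = []
    for x in range(width):
        src = x + shift
        out.append(frame[src] if 0 <= src < width else fill)
    return out


if __name__ == "__main__":
    # Rendered at yaw 10.0 deg; by display time the mouse has turned 0.5 deg right.
    print(reproject_yaw(list("ABCDEFGH"), 10.0, 10.5, px_per_degree=4.0, fill="."))
    # ['C', 'D', 'E', 'F', 'G', 'H', '.', '.']
```

The columns filled with "." are regions the original render never covered; filling them, and correcting parallax with depth data such as the Z-buffer, is where real implementations need extra information from the game engine.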

Please read the 2nd half of the article, Frame Rate Amplification Technologies, carefully before you reply.

Interpolation is "classic" thinking, while other frame rate amplification technologies are less black-box and know what the 3D game engine is doing (e.g. real-time communications between the video game and the frame rate amplification technology). This bypasses the artifacts and latency penalties.

In fact, the Unreal 5 engine has some elements of frame rate amplification technology built into it.

Also, it can be done spatially to an extent instead of temporally -- such as NVIDIA DLSS 2.0 which is another frame rate amplification technology.

I will cross-post a portion of the article into this thread:

The misunderstanding of classic flawed interpolation (soap opera effect, artifacts, latency) reminds me of the old 30fps vs. 60fps debates. The future of frame rate amplification technology is much more amazing than that.

Frame Rate Amplification Technology wrote:

Are They “Fake Frames”?

Not necessarily anymore! In the past, classic interpolation added very fake-looking frames in between real frames.

However, some newer modern frame rate amplification technologies (such as Oculus ASW 2.0) are less black-box and more intelligent. New frame rate amplification technologies use high-frequency extra data (e.g. 1000 Hz head trackers, 1000Hz mouse movements) as well as additional information (texture memory, Z-Buffer memory) in order to create increasingly accurate intermediate 3D frames with less horsepower than a full GPU render from scratch. So don’t call them “Fake Frames” anymore, please!

Many film makers and content creators hate interpolation as they don’t have control over a TV’s default setting. However, game makers are able to intentionally integrate it and make it as perfect as possible (e.g. intentionally providing data such as a depth buffer and other information to eliminate artifacts).

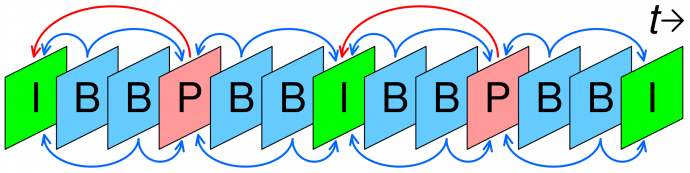

Video Compression Equivalent Of FRAT: Predicted Frames

When you are watching video and films, using Netflix or YouTube or at digital movie theaters, you’re already watching material that often contains approximately 1 or 2 non-predicted frames per second, with very accurate predictive frames inserted in between them.

Modern digital video and films use various video compression standards like MPEG2, MPEG4, H.264, H.265, etc. to generate the full video and movie frame rates (e.g. 24fps, 30fps, 60fps, etc) by using very few full frames and filling the rest with predicted frames! This is a feature of video compression standards to insert “fake frames” between real frames, and these “fake frames” are now almost perfectly accurate, that they might as well be real frames.

- Full Frames: Video including Netflix, YouTube, Blu-Ray and digital cinema is often only barely more than 1 full frame per second, via I-Frames.

- Predicted Frames: The remainder of video frames are “faked” by predictive techniques via P-Frames and B-Frames. Yes, that even includes the frames at the digital projector in your local movie theater!

Today, video compression has already achieved the equivalent of approximately 10:1 to 100:1 frame rate amplification ratios! (Image source: Wikipedia Article on Inter-Frames)
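The amplification ratio for compressed video is roughly the number of frames delivered per fully coded I-frame. A minimal sketch over a GOP (group of pictures) pattern string; the 24-frame GOP below is a hypothetical example, and real encoders choose GOP length and structure adaptively.

```python
def amplification_ratio(gop: str) -> float:
    """Frames delivered per fully coded I-frame in a GOP pattern string.

    'I' = intra-coded (full) frame; 'P'/'B' = predicted frames reconstructed
    from reference frames plus motion vectors and residuals.
    """
    if not gop or set(gop) - set("IPB"):
        raise ValueError("GOP must be a non-empty string of 'I', 'P' and 'B'")
    intra_frames = gop.count("I")
    if intra_frames == 0:
        raise ValueError("GOP needs at least one I-frame")
    return len(gop) / intra_frames


if __name__ == "__main__":
    one_second_at_24fps = "I" + "BBP" * 7 + "BB"  # hypothetical 24-frame GOP
    print(len(one_second_at_24fps), amplification_ratio(one_second_at_24fps))
    # 24 24.0
```

One I-frame per second at 24fps gives 24:1; longer GOPs push the ratio higher.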

3D Rendering Is Slowly Heading Towards Similar Metaphor!

Towards year 2030, we anticipate that the GPU rendering pipeline will slowly evolve to include multiple Frame Rate Amplification Technology (FRAT) solutions where some frames are fully rendered, and the intermediate frames are generated using a frame rate amplification technology. It is possible to make this perceptually lossless with less processing power than full frame rate. Some FRAT systems incorporate video memory information (e.g. Z-Buffers, textures, raytracing history) as well as eliminating position guessing (e.g. 1000Hz mouse, 1000Hz headtrackers) to make frame rate amplification visually lossless.

Instead of rendering at low-detail at 240fps, one can render at high-detail at 60fps and use frame rate amplification technology (FRAT) to convert 60fps to 240fps. With newer perceptually-lossless FRAT technologies, it is possible to instead get high-detail at 240fps, having cake and eating it too!

Large-Ratio Frame Rate Amplifications of 5:1 and 10:1

The higher the frame rate, the briefer individual frames are displayed for, and the less critical imperfections in some interframes become. We envision large-ratio 5:1 or 10:1 frame rate amplification to be practical for converting 100fps to 1000fps in a perceptually lagless and lossless way.
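A 10:1 amplification timeline can be sketched as below: one full GPU render every 10th output frame, with generated frames in between. This only models the schedule (which frames are rendered versus generated, and when each is shown), under the assumption of perfectly even frame pacing; it does not generate any images, and the names are illustrative.

```python
from typing import List, Tuple


def frat_schedule(render_fps: int, ratio: int, frames: int) -> List[Tuple[float, str]]:
    """Display timeline for `ratio`:1 frame rate amplification (hypothetical model).

    Every `ratio`-th output frame is a full GPU render; the frames in between are
    produced by the amplifier. Assumes perfectly even frame pacing.
    """
    if render_fps <= 0 or ratio < 1 or frames < 0:
        raise ValueError("render_fps must be > 0, ratio >= 1, frames >= 0")
    frame_time_ms = 1000.0 / (render_fps * ratio)
    return [
        (round(i * frame_time_ms, 3), "render" if i % ratio == 0 else "generated")
        for i in range(frames)
    ]


if __name__ == "__main__":
    for t, kind in frat_schedule(render_fps=100, ratio=10, frames=12):
        print(f"{t:6.3f} ms  {kind}")
```

At 100fps amplified 10:1, each frame is on screen for only 1ms, which is why imperfections in individual generated frames matter less.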

This is Key Basic Technology For Future 1000 Hz Displays

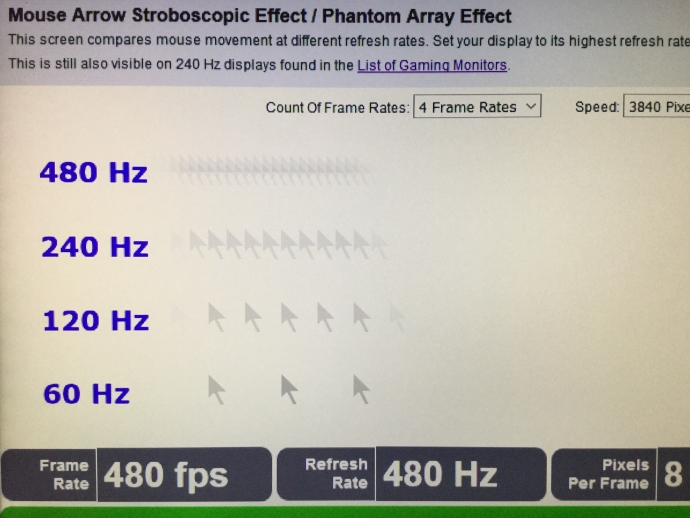

We wrote a now-famous article about the Journey to 1000Hz Displays. Recent research shows that 1000Hz displays have human-visible benefits, provided that source material (graphics, video) is properly designed and properly presented to such a display at ultra high resolutions. Many new benefits of ultra-high-Hz displays have been discovered through additional research now that such experimental displays exist. Here is a photograph of a real 480Hz display:

Ultra-high-Hz also behaves like a strobeless method of motion blur reduction (blurless sample-and-hold), as twice the frame rate and refresh rate halves display motion blur on a sample-and-hold display.

Frame Rate Amplification Can Make Strobe-Based Motion Blur Reduction Unnecessary

Our namesake, Blur Busters, was born because of display motion blur reduction technology that is found in many gaming monitors today (including LightBoost, ULMB, etc). These use impulsing techniques (backlight strobing, frame flashing, black frame insertion, phosphor decay, or other impulsing technique).

With current low-persistence VR headsets, you can see stroboscopic artifacts when moving your head very fast (or rolling your eyes around) in high-contrast scenes. Ultra-Hz fixes this.

Making Display Motion More Perfectly Identical To Real-Life

A small but not-insignificant percentage of humans cannot use VR headsets, and cannot use gaming-monitor blur-reduction modes (ULMB, LightBoost, DyAc, etc) due to an extreme flicker sensitivity.

Accommodating a five-sigma population can never be accomplished via strobed low persistence. Ultra-high-framerate sample-and-hold displays make blurless motion possible in a strobeless fashion, in a way practically indistinguishable from real-life analog motion.

The only way to achieve perfect motion clarity (equivalent to a CRT) on a completely flickerless display is to display sharp, low-persistence frames consecutively with no black periods in between. Achieving 1ms persistence on a sample-and-hold display requires 1000 frames per second on a 1000 Hertz display.

Tomorrow's motion blur reduction mode will not be strobing, but will be via frame rate amplification technologies (as the method of motion blur reduction).

There may still be the original frametime lag of the original frame rate (e.g. the lag of 100fps native-GPU-generated versus the lag of 200fps native-GPU-generated), but adding extra frames doesn't necessarily add lag or artifacts if they are generated more flawlessly using non-black-box sources, such as the gaming mouse (1000Hz) communicated directly to the GPU to eliminate lag (no prediction needed), and the Z-Buffer (3D data) to eliminate parallax artifacts, etc. In that sense, the frame rate amplification technology ends up having no lag penalty beyond the original frame rate.

(This is on topic, "motion blur reduction modes" is part of the original-post topic)


- thatoneguy

- Posts: 181

- Joined: 06 Aug 2015, 17:16

Re: Are these motion blur reduction modes useful for competitive fast paced games?

No offense, it just seems crazy to me. Like magic or something.

I seem to recall there being some issues with it that still weren't fixed like geometric awareness or something before.

Correct me if I'm wrong but those issues still haven't been fixed to my understanding.

And as I recall, the reprojection technique used on PlayStation VR does have some lag (1 or 2ms, IIRC), but I'm not sure if that's the same technique Nvidia uses.


- RedCloudFuneral

- Posts: 40

- Joined: 09 May 2020, 00:23

Re: Are these motion blur reduction modes useful for competitive fast paced games?

In my experience, frame rate amplification is still very much a BETA product. I use a Windows Mixed Reality headset and quite frequently see what looks like strobe crosstalk and stutter when FPS drops below 90. WMR uses SteamVR's implementation, which should be similar to any non-Rift headset. What's annoying is that it doesn't always display double images, but the stutter is a consistent feature, which can be tested by waving your hand across your FoV or moving horizontally in front of a virtual object (I like testing with the computer terminals in The Talos Principle; they're hard to read when you're not hitting a smooth 90FPS).