Alright, so what I'm calling "tweening" is actually called "strobing," and it begins at 40 fps and below, correct? If so, I can easily reflect that on the chart. I know the method (whatever it's called) is akin to older 60Hz TVs with so-called "120Hz" or "240Hz" modes that repeat the current refresh at certain intervals (some use black frame insertion, others interpolation, I believe; corrections welcome); I just didn't know the technical term, or at exactly what frame rate it kicks in with G-Sync.

I also found this post in the NeoGAF G-Sync thread. How accurate is it? (I want to get the chart's threshold and wording right.)

http://www.neogaf.com/forum/showpost.ph ... tcount=605

At 30-45 fps, the panel will refresh at 30-45 Hz, which is really low. You will probably observe flickering as the display updates at these low rates.

Below 30 fps, the G-Sync module uses a trick to avoid the refresh rate falling even further; it repeats frames. At 29 fps, the display is actually refreshing at 58 Hz, with doubled frames. This helps create a smoother appearance, but adds latency and will make controls feel laggy. Basically, you don't want to be beneath 40 fps at all, but if the drops are momentary, the experience is much better than with a standard fixed-rate display and VSync.
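To sanity-check the NeoGAF description for the chart, here is a rough Python sketch of frame repetition below the refresh floor. It assumes a 30 Hz floor (taken from the quoted post) and a 144 Hz ceiling; `panel_refresh` is a hypothetical helper, not anything NVIDIA documents, and the real module's thresholds may differ.

```python
import math

# Assumed G-Sync range for illustration only: 30 Hz floor (from the quoted
# NeoGAF post) and a 144 Hz ceiling. Real modules may behave differently.
MIN_HZ = 30.0
MAX_HZ = 144.0


def panel_refresh(fps: float, min_hz: float = MIN_HZ,
                  max_hz: float = MAX_HZ) -> tuple[int, float]:
    """Return (repeat_count, panel_refresh_hz) for a given frame rate.

    Hypothetical model: each frame is shown `repeat_count` times so the
    panel never refreshes below `min_hz`.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= max_hz:
        # Capped out: the panel cannot refresh faster than its maximum.
        return 1, max_hz
    # Smallest whole-number repeat that keeps the refresh at or above the floor.
    repeats = max(1, math.ceil(min_hz / fps))
    return repeats, fps * repeats


for fps in (29, 40, 200):
    print(fps, panel_refresh(fps))
```

With these assumptions, `panel_refresh(29)` returns `(2, 58)`, matching the quoted 29 fps / 58 Hz example, while 40 fps is shown once per refresh at 40 Hz.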

As for my word choice of "polling," I'm simply pulling it, yet again, from that article:

http://www.blurbusters.com/gsync/preview2/

We currently suspect that fps_max 143 is frequently colliding near the G-SYNC frame rate cap, possibly having something to do with NVIDIA’s technique of polling the monitor to check whether it is ready for the next refresh. I did hear they are working on eliminating polling behavior, so that eventually G-SYNC frames can begin delivering immediately upon monitor readiness, even if it means simply waiting a fraction of a millisecond in situations where the monitor is nearly finished with its previous refresh.

I did not test other fps_max settings such as fps_max 130, fps_max 140, which might get closer to the G-SYNC cap without triggering the G-SYNC capped-out slow down behavior. Normally, G-SYNC eliminates waiting for the monitor’s next refresh interval:

G-SYNC Not Capped Out:

Input Read -> Render Frame -> Display Refresh Immediately

When G-SYNC is capped out at maximum refresh rate, the behavior is identical to VSYNC ON, where the game ends up waiting for the refresh.

G-SYNC Capped Out:

Input Read -> Render Frame -> Wait For Monitor Refresh Cycle -> Display Refresh
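The two pipelines above can be modeled with a simple wait calculation. This is a hedged sketch, not NVIDIA's actual driver logic: it assumes the previous refresh began when the previous frame was delivered and takes a full 1/144 sec to scan out, and it ignores polling overhead. Both helper names are hypothetical.

```python
def refresh_wait_ms(frame_interval_ms: float, max_hz: float = 144.0) -> float:
    """How long a finished frame waits before its refresh can begin.

    Simplified model (assumption): the previous refresh began when the
    previous frame was delivered and lasts a full 1/max_hz; no polling delay.
    """
    period_ms = 1000.0 / max_hz
    # Not capped out: the previous scanout is already done, so no wait.
    # Capped out: wait for the rest of the previous scanout (VSYNC-like).
    return max(0.0, period_ms - frame_interval_ms)


def describe_pipeline(fps: float, max_hz: float = 144.0) -> str:
    """Render the article's pipeline text for a steady frame rate."""
    wait = refresh_wait_ms(1000.0 / fps, max_hz)
    if wait == 0.0:
        return "Input Read -> Render Frame -> Display Refresh Immediately"
    return ("Input Read -> Render Frame -> Wait For Monitor Refresh Cycle "
            f"({wait:.2f} ms) -> Display Refresh")


for fps in (120, 143, 300):
    print(f"fps_max {fps}: {describe_pipeline(fps)}")
```

Under this model fps_max 143 never waits at all, which is why the latency measured at fps_max 143 reads as a quirk (possibly polling-related) rather than simple capping out.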

And here in a later comment:

http://www.blurbusters.com/gsync/preview2/#comment-2591

You want to use the highest possible frame rate cap, that’s at least several frames per second below the G-SYNC maximum rate, in order to prevent G-SYNC from being fully capped out. Testing each run took a lot of time, so I didn’t end up having time to test in-between frame caps (other than fps_max 120, 143 and 300).
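To put "several frames per second below" in frametime terms, this sketch computes the margin between each tested cap and one 144Hz refresh period (about 6.94 ms). The helper name is hypothetical, and the margin is only a rough indicator; it says nothing about how much frametime jitter a given game actually has.

```python
MAX_HZ = 144.0


def headroom_ms(fps_cap: float, max_hz: float = MAX_HZ) -> float:
    """Frametime margin between an fps cap and one refresh period.

    Positive: each frame arrives later than one refresh period (not capped out).
    Zero or negative: frames arrive as fast as, or faster than, the panel refreshes.
    """
    return 1000.0 / fps_cap - 1000.0 / max_hz


for cap in (120, 143, 300):
    print(f"fps_max {cap}: {headroom_ms(cap):+.3f} ms")
# fps_max 120: +1.389 ms
# fps_max 143: +0.049 ms
# fps_max 300: -3.611 ms
```

fps_max 143 leaves under 0.05 ms of margin, so even tiny frametime variation can push individual frames into the capped-out case.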

Technically, input latency should “fade in” when G-SYNC caps out, so hopefully future drivers can solve this behavior by allowing fps_max 144 to also have low latency. Even fps_max 150 should still have lower input lag than fps_max 300 using G-SYNC, since the scanout of the previous refresh cycle would be more finished 1/150sec later, rather than 1/300sec later. Theoretically, the driver only needs to wait a fraction of a millisecond at that time and begin transmitting the next refresh cycle immediately after the previous refresh finishes. I believe the latency that occurred at fps_max 143 is a strange quirk, possibly caused by the G-SYNC polling algorithm used. I’m hoping future drivers will solve this, so that I can use fps_max 144 without lag. It should be technically possible, in my opinion. It might even be theoretically possible to begin transmitting the next frame to the monitor before the display refresh is finished, by possibly utilizing some spare room in the 768MB of memory found on the G-SYNC board (to my knowledge, this isn’t currently being done, and isn’t the purpose of the 768MB memory). Either way, I think this is hopefully an easily solvable issue, as there should only be a latency fade-in effect when G-SYNC caps out at fps_max 143, fps_max 144, or fps_max 150, rather than an abrupt additional latency. I’ll likely contact NVIDIA directly and see what their comments are about this.
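The fps_max 150 vs. fps_max 300 comparison can be worked out directly. Assuming (as a simplification, ignoring the poll) that the previous refresh started when the previous frame was sent and takes a full 1/144 sec to scan out, a capped-out frame waits for the remainder of that scanout:

$$
t_{\text{wait}} = \max\!\left(0,\ \frac{1}{144} - \frac{1}{f_{\text{cap}}}\right)
$$

$$
f_{\text{cap}} = 150:\quad \frac{1}{144} - \frac{1}{150} = \frac{6}{21600} = \frac{1}{3600}\,\text{s} \approx 0.28\,\text{ms}
$$

$$
f_{\text{cap}} = 300:\quad \frac{1}{144} - \frac{1}{300} = \frac{156}{43200} = \frac{13}{3600}\,\text{s} \approx 3.61\,\text{ms}
$$

That roughly 0.28 ms is the "fraction of a millisecond" wait described in the quote; under this model, fps_max 300 waits 13 times longer.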

Finally, he states in a post in this thread that the polling time is "1ms":

http://forums.blurbusters.com/viewtopic ... lling#p221

I talked to people at NVIDIA, and they confirmed key details.

The framebuffer starts getting transmitted out of the cable about 1ms (the GSYNC poll) after the render-finish of the Direct3D Present() call. That means, if your framebuffer is simple, the first pixels are on the cable only 1ms after Direct3D Present(), provided the previous call to Present() returned at least 1/144sec ago. Also, the monitor does real-time scanout off the wire (as all BENQ and ASUS 120Hz monitors do). Right now, they are polling the monitor (1ms) to ask whether it's currently refreshing. This poll cycle is the main source of G-SYNC latency at the moment, but they are working on eliminating this remaining major source of latency (if you call 1ms major!). One way to picture this: "on the cable," the only difference between VSYNC OFF and G-SYNC is that the scanout begins at the top edge immediately, rather than splicing in mid-scan (tearing).
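Taking the quoted 1ms poll and real-time scanout at face value, here is a rough sketch of when a given row reaches the cable after Present(). The 1440-row panel height is a hypothetical example, the blanking interval is ignored, and the function name is made up for illustration.

```python
POLL_MS = 1.0   # poll delay quoted in the post
MAX_HZ = 144.0
ROWS = 1440     # hypothetical panel height; vertical blanking ignored


def row_on_cable_ms(row: int, poll_ms: float = POLL_MS,
                    max_hz: float = MAX_HZ, rows: int = ROWS) -> float:
    """Approximate time after Present() at which a given row is transmitted.

    Assumes the previous Present() was at least 1/max_hz ago (so nothing
    waits beyond the poll) and that rows go out top to bottom at a constant
    rate over one refresh period.
    """
    if not 0 <= row < rows:
        raise ValueError("row out of range")
    scanout_ms = 1000.0 / max_hz
    return poll_ms + scanout_ms * row / rows


for row in (0, 720, 1439):
    print(row, round(row_on_cable_ms(row), 2))
```

With these numbers the top row goes out at 1 ms and the middle row (720) at roughly 4.47 ms; with VSYNC OFF, the new frame would instead be spliced in wherever the current scanout happens to be.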

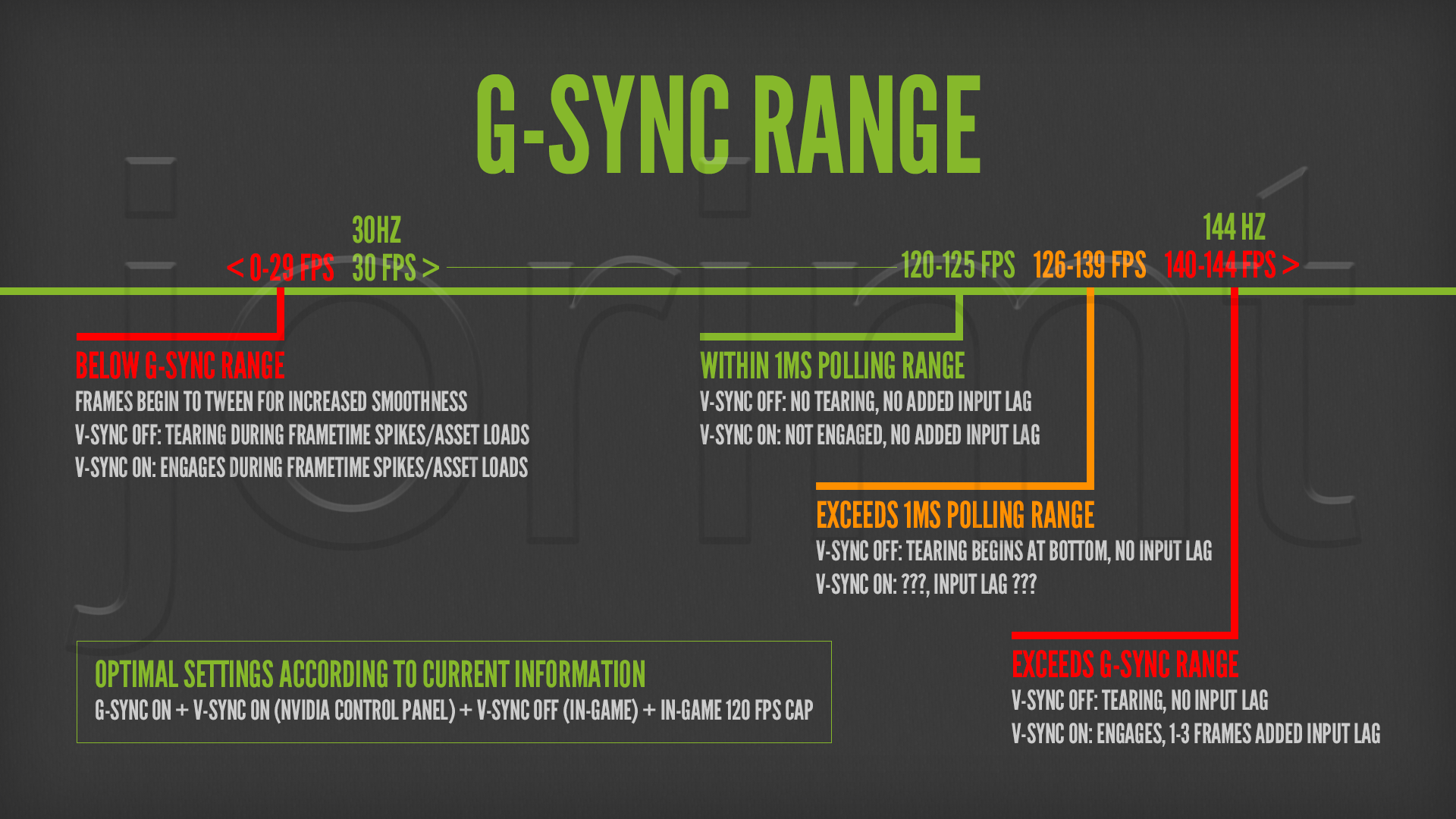

After reading all that, and doing my own simple tests, I found that between roughly 120 fps and the 144Hz ceiling, some odd behavior occurs, especially with G-Sync on + V-Sync off.

Here's the bottom line. Put yourself in the average G-Sync user's shoes. You buy a G-Sync monitor, bring it home, plug it in, pull up Google, and type in "best G-Sync settings." A variety of results pop up, mostly from Reddit, which, on average, have two recommendations in common:

1. Set a global 135 fps cap with RTSS to avoid the G-Sync ceiling and its additional input lag.

2. Disable V-Sync in the control panel and in-game, since it adds tons of input lag, no exceptions.

You then set your display up according to the above "instructions" and launch a game. You begin to see tearing at the bottom of the screen, and worse yet, sometimes the whole screen tears (unbeknownst to you, due to the frametime spikes that happen below G-Sync's range). You angrily pull up the GeForce forum and either start a "G-Sync is Broken!!!" thread or comment in the latest driver thread, exclaiming that G-Sync support is broken and that Nvidia is legally liable.

Obviously, most of this is untrue. As we already know, a 135 fps cap may not always be enough to stop the tearing seen on a 144Hz display with G-Sync on + V-Sync off. On top of that, external fps caps add more input lag than in-game limiters do (I still don't know how much :\). Secondly, G-Sync on + V-Sync on isn't broken; with a proper fps cap, it is actually preferred, and it is the only way to achieve a 100% tear-free experience when those frametime spikes crop up.
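To illustrate why a 135 fps cap isn't always enough, this sketch flags frames whose frametime is shorter than one 144Hz refresh period. The frametimes are invented for illustration (not measurements), the helper name is hypothetical, and the model simply treats every such frame as hitting the G-Sync ceiling.

```python
MAX_HZ = 144.0


def frames_over_ceiling(frametimes_ms: list[float],
                        max_hz: float = MAX_HZ) -> list[int]:
    """Indices of frames delivered faster than one refresh period.

    In this simplified model, such frames hit the G-Sync ceiling: with
    V-Sync off they can tear, and with V-Sync on they wait for the refresh.
    """
    period_ms = 1000.0 / max_hz  # about 6.94 ms at 144 Hz
    return [i for i, ft in enumerate(frametimes_ms) if ft < period_ms]


# Hypothetical frametimes under a 135 fps cap (target about 7.41 ms) with
# some jitter; these values are made up, not measured.
samples = [7.41, 7.40, 6.80, 8.02, 7.41, 6.90, 7.42]
print(frames_over_ceiling(samples))  # [2, 5]
```

Here frames 2 and 5 arrive faster than about 6.94 ms despite a cap targeting about 7.41 ms; with V-Sync off those are the frames that can tear, while V-Sync on would make them wait for the refresh instead.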

I simply want to clear up this misinformation once and for all.