Blur Busters' complete 4-part "G-SYNC 101" series has been released on blurbusters.com:

http://www.blurbusters.com/gsync/gsync101/

This topic has been closed, but will remain visible for archival purposes.

Please continue the discussion here:

http://forums.blurbusters.com/viewtopic.php?f=5&t=3441

G-SYNC 101

G-Sync Module:

The G-Sync module is a small chip that replaces the display's standard internal scaler, and contains enough onboard memory to hold and process a single frame at a time. The module exploits the vertical blank period (the span between the previous and next frame scan) to manipulate the display’s internal timings, perform G2G (gray to gray) overdrive calculations to prevent ghosting, and synchronize the display's refresh rate to the GPU’s render rate.

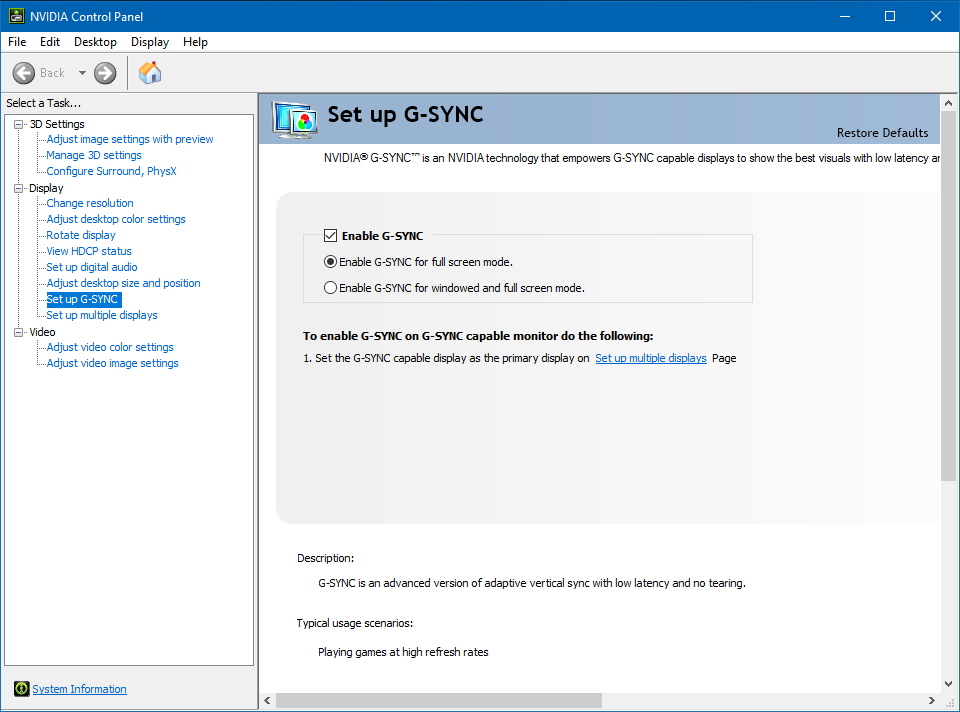

G-Sync Activation:

"Enable G-SYNC for full screen mode" (exclusive fullscreen functionality only) will automatically engage when a supported display is connected to the GPU. If G-Sync behavior is suspect or non-functioning, untick the "Enable G-SYNC" box, apply, re-tick, and apply.

G-Sync Windowed Mode:

"Enable G-SYNC for windowed and full screen mode" allows G-Sync support for windowed and borderless windowed games. This option was introduced in a 2015 driver update; by manipulating the DWM (Desktop Window Manager) framebuffer, it enables G-Sync's VRR (variable refresh rate) to synchronize to the focused window's render rate, while unfocused windows remain at the desktop's fixed refresh rate until focused.

G-Sync only functions on one window at a time, so any unfocused window that contains moving content will appear to stutter or slow down, which is why a variety of non-gaming applications include predefined Nvidia profiles that disable G-Sync support.

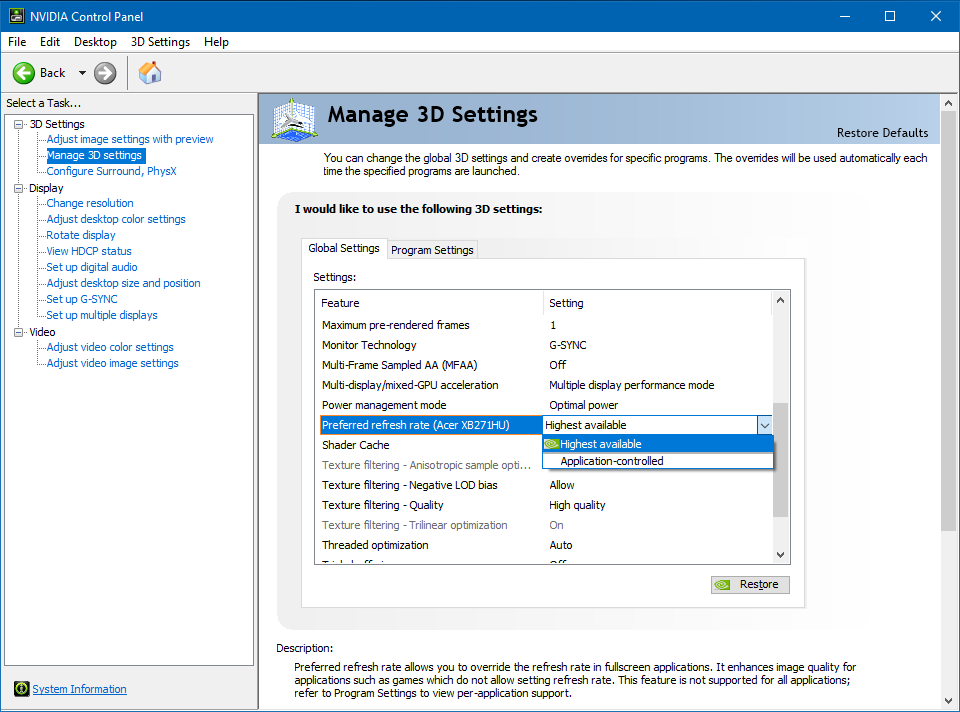

G-Sync Preferred Refresh Rate:

"Highest available" automatically engages when G-Sync is enabled, and overrides the in-game refresh rate option (if present), defaulting to the highest supported refresh rate of the display. This is useful for games that don't include a selector, and ensures the display’s native refresh rate is utilized. "Application-controlled" defers refresh rate control to the game.

G-Sync & V-Sync:

G-Sync (GPU Synchronization) works on the same principle as double buffer v-sync: buffer A begins to render frame A and, upon completion, scans it to the display. Meanwhile, as buffer A finishes scanning its frame, buffer B begins to render frame B and, upon completion, scans it to the display, and the cycle repeats.

The primary difference between G-Sync and v-sync is the method in which rendered frames are synchronized. With v-sync, the GPU’s render rate is synchronized to the fixed refresh rate of the display. With G-Sync, the display’s VRR (variable refresh rate) is synchronized to the GPU’s render rate.
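To make that difference concrete, here is a minimal sketch under simplifying assumptions: it compares when finished frames would begin scanning out under fixed refresh rate v-sync versus G-Sync's variable refresh. The function names and frame completion times are hypothetical, and the model ignores the back-pressure a full double buffer puts on the GPU, as well as the v-sync fallback above the G-Sync ceiling.

```python
import math
from typing import List


def vsync_scan_starts(finish_ms: List[float], refresh_hz: float) -> List[float]:
    """Fixed refresh: a finished frame waits for the next refresh boundary,
    and at most one new frame can be shown per refresh."""
    period = 1000.0 / refresh_hz
    starts: List[float] = []
    last = -math.inf
    for done in finish_ms:
        slot = math.ceil(done / period) * period  # next refresh boundary
        if slot <= last:  # that refresh is already taken by the previous frame
            slot = last + period
        starts.append(slot)
        last = slot
    return starts


def gsync_scan_starts(finish_ms: List[float], max_hz: float) -> List[float]:
    """Variable refresh: scanout begins as soon as a frame finishes, but no
    sooner than one max-refresh period after the previous scan began."""
    period = 1000.0 / max_hz
    starts: List[float] = []
    last = -math.inf
    for done in finish_ms:
        start = max(done, last + period)
        starts.append(start)
        last = start
    return starts


if __name__ == "__main__":
    frames = [5.0, 12.0, 30.0]  # hypothetical render completion times (ms)
    print(vsync_scan_starts(frames, 60))   # frames wait for 16.7 ms boundaries
    print(gsync_scan_starts(frames, 144))  # frames are shown as soon as ready
```

With v-sync at 60 Hz, the three frames land on the 16.7, 33.3 and 50 ms refreshes (the third frame, finished at 30 ms, waits 20 ms); with G-Sync they scan out at 5, 12 and 30 ms, i.e. the display follows the GPU.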

On release, G-Sync’s ability to fall back on fixed refresh rate v-sync behavior when exceeding the maximum refresh rate of the display was built-in and non-optional. A 2015 driver update later exposed the option.

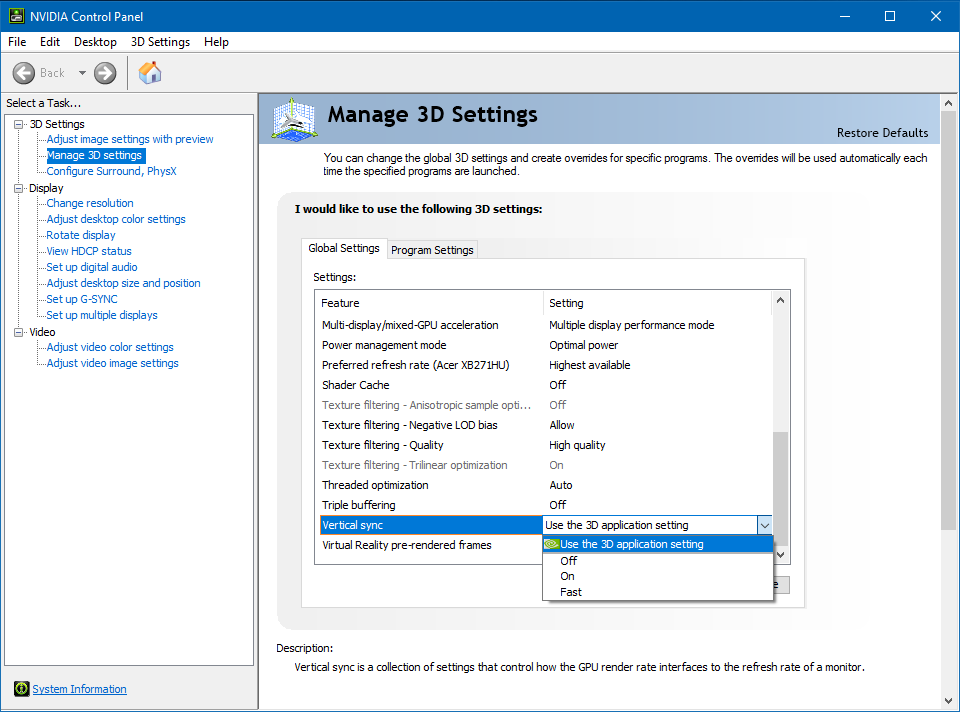

This update led to recurring confusion, creating a misconception that G-Sync and v-sync are entirely separate options. However, the "Vertical sync" option in the control panel actually dictates two things: first, whether the G-Sync module compensates for frametime variances (see "Upper Frametime Variances" in the "G-Sync Range" section), and second, whether G-Sync falls back on fixed refresh rate v-sync behavior. If v-sync is "On," G-Sync will revert to v-sync behavior above its range; if v-sync is "Off," G-Sync will disengage above its range. Within its supported range, G-Sync is the only synchronization method active, regardless of the v-sync setting.

Currently, when G-Sync is enabled, the control panel’s "Vertical sync" entry is automatically set to “Use the 3D application setting,” which defers v-sync fallback behavior control to the in-game v-sync option. This can be manually overridden by changing the “Vertical sync” entry in the control panel to “Off,” “On,” or “Fast” (see “G-Sync Input Latency & Optimal Settings” for suggested scenarios).

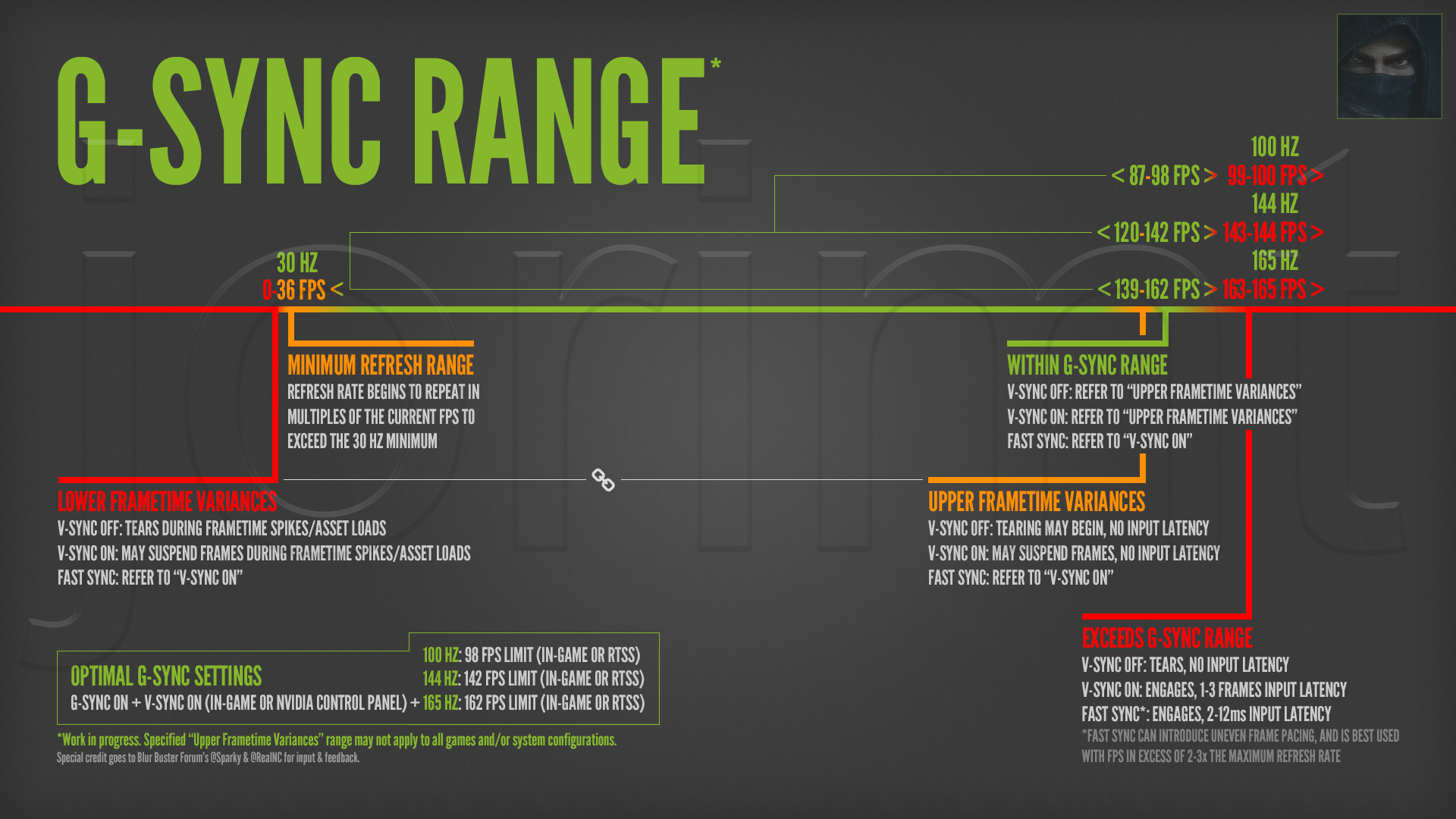

G-SYNC RANGE*

Chart Updated: 12/25/2016

View/download full-size chart: https://i.gyazo.com/35523f43ff4fd7566c3 ... 24e221.png

EXCEEDS G-SYNC RANGE

V-Sync Off: G-Sync disengages, tearing begins display wide, no additional input latency is introduced.

V-Sync On: G-Sync reverts to its fixed refresh rate v-sync behavior (see “G-Sync & V-Sync”), and 1-3 frames of additional input latency are introduced as frames begin to over-queue in both buffers, ultimately delaying their appearance on-screen.

Fast Sync*: G-Sync disengages, Fast Sync engages, 2-12ms of additional input latency is introduced.

(*Fast Sync is best used with a framerate in excess of two to three times that of the display's maximum refresh rate, as its third buffer selects from the best frames to display as the final render; the higher the sample rate, the better it functions. Do note that even at its most optimal, Fast Sync introduces uneven frame pacing, which can manifest as recurring micro stutter).
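The sustained-framerate cases above can be summarized as a small decision function. This is a sketch of the chart's logic only, not an Nvidia API: the names are hypothetical, "app" stands in for "Use the 3D application setting," and treating a framerate at or above the refresh ceiling as out of range is my assumption (consistent with the advice to cap a few fps below it). Frametime variances within range, where v-sync "On" vs. "Off" still matters, are not modeled.

```python
from enum import Enum


class Behavior(Enum):
    GSYNC = "G-Sync (VRR)"
    VSYNC_FALLBACK = "fixed refresh rate v-sync"
    FAST_SYNC = "Fast Sync"
    TEARING = "G-Sync disengaged, v-sync off (tearing)"


def effective_vsync(nvcp_setting: str, in_game_vsync: bool) -> str:
    """Resolve the control panel "Vertical sync" entry. "app" (the default
    "Use the 3D application setting") defers to the in-game toggle."""
    if nvcp_setting == "app":
        return "on" if in_game_vsync else "off"
    if nvcp_setting not in ("on", "off", "fast"):
        raise ValueError(f"unknown setting: {nvcp_setting!r}")
    return nvcp_setting


def behavior(fps: float, max_hz: float, vsync: str) -> Behavior:
    """Which synchronization method is active at a sustained framerate."""
    if fps < max_hz:
        return Behavior.GSYNC  # within range, G-Sync is always the active method
    # At or above the ceiling, the v-sync setting decides what takes over.
    return {
        "on": Behavior.VSYNC_FALLBACK,
        "off": Behavior.TEARING,
        "fast": Behavior.FAST_SYNC,
    }[vsync]
```

For example, `behavior(200, 144, effective_vsync("app", True))` resolves to the v-sync fallback, while the same framerate with the control panel set to "Off" resolves to tearing.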

WITHIN G-SYNC RANGE

Refer to “Upper Frametime Variances" below.

UPPER FRAMETIME VARIANCES

V-Sync Off: G-Sync remains engaged, tearing may begin at the bottom of the display, no additional input latency is introduced.

The tearing seen at the bottom of the display (example: https://youtu.be/XfFG1r7Uf00) in this relatively narrow range is due to frametime variances output by the system, which vary from setup to setup and from game to game. Setting v-sync to "Off" disables the G-Sync module's ability to compensate for frametime variances: when an affected frame is unable to complete its scan before the next frame arrives, instead of holding off the next frame until the affected frame has been displayed completely, the module displays the next frame immediately, resulting in a partial tear. Not only does v-sync "Off" offer no input latency reduction over v-sync "On" (see “G-Sync Input Latency & Optimal Settings”), but it disables a core G-Sync function and should be avoided.

V-Sync On: G-Sync remains engaged, module may suspend frames, no additional input latency is introduced.

This is how G-Sync was originally intended to function (see “G-Sync & V-Sync”). With v-sync "On," the G-Sync module compensates for frametime variances by suspending the affected frame long enough to complete its scan before the next, preventing the tearing seen at the bottom of the display in the “V-Sync Off” scenario above. Since this operation is performed during the vertical blank period (the span between the previous and next frame scan), it does not introduce additional input latency (see “G-Sync Input Latency & Optimal Settings”).
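A simplified model of the two cases, assuming each scan takes one maximum-refresh period (as described under "Minimum Refresh Range") and that a tear line's height is proportional to how far the previous scan had progressed. The function and field names are hypothetical; this illustrates the behavior described here, not how the module is implemented.

```python
from typing import NamedTuple, Optional


class Outcome(NamedTuple):
    action: str                     # "scan", "hold", or "tear"
    scan_start_ms: float            # when the new frame starts appearing
    tear_fraction: Optional[float]  # 0.0 = top of screen, 1.0 = bottom


def next_frame_outcome(prev_scan_start_ms: float, arrival_ms: float,
                       max_hz: float, vsync_on: bool) -> Outcome:
    """Classify a frame that arrives while (or after) the previous frame scans out."""
    scan_ms = 1000.0 / max_hz  # a scan always runs at the maximum refresh rate
    prev_scan_end = prev_scan_start_ms + scan_ms
    if arrival_ms >= prev_scan_end:
        return Outcome("scan", arrival_ms, None)  # previous scan done: no conflict
    if vsync_on:
        # Hold the new frame until the previous scan has completed.
        return Outcome("hold", prev_scan_end, None)
    # V-sync off: show it immediately; the tear sits where the scan had reached.
    return Outcome("tear", arrival_ms, (arrival_ms - prev_scan_start_ms) / scan_ms)
```

A frame arriving 6.5 ms after the previous scan began, against a 6.9 ms scan at 144 Hz, tears about 94% of the way down the screen with v-sync "Off" (the bottom-of-display tearing described above), while with v-sync "On" it is held for only about 0.44 ms.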

Fast Sync: Refer to “V-Sync On” above.

MINIMUM REFRESH RANGE

Once the framerate drops to 36 fps or below, the G-Sync module begins inserting duplicate frames to maintain the display’s minimum physical refresh rate and smooth motion perception. If the framerate is at 36 fps, the refresh rate will double to 72 Hz; at 18 fps, it will triple to 54 Hz, and so on. This behavior will continue down to 1 frame per second.
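The two examples above are consistent with one simple rule: repeat each frame the smallest whole number of times that lifts the refresh rate above 36 Hz. The sketch below encodes that rule; the rule itself and the function name are my assumptions, only the 36 fps threshold comes from this section, and actual displays may use a different threshold or multiplier choice.

```python
import math
from typing import Tuple


def duplicated_refresh(fps: float, threshold_fps: float = 36.0) -> Tuple[int, float]:
    """Return (multiplier, refresh_hz): the smallest whole-number repeat count
    that lifts the refresh rate above the threshold (hypothetical rule)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    multiplier = math.floor(threshold_fps / fps) + 1
    return multiplier, fps * multiplier
```

Under this rule, 36 fps maps to (2, 72 Hz) and 18 fps to (3, 54 Hz), matching the text, while anything above 36 fps keeps a multiplier of 1.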

Do note that regardless of the currently reported framerate and variable refresh rate of the display, each frame scan will still physically complete (from top to bottom) at the display's maximum supported refresh rate: 16.6 ms @60 Hz, 10 ms @100 Hz, 6.9 ms @144 Hz, and so on.

LOWER FRAMETIME VARIANCES

V-Sync Off: G-Sync remains engaged, tearing begins display wide during frametime spikes/asset loads.

As explained in the "V-Sync Off" section of "Upper Frametime Variances," this scenario disables the G-Sync module's ability to suspend frames, and will cause tearing during frametime spikes (see “What are frametime spikes?” further below). As such, “V-Sync On” (below) is recommended for a 100% tear-free G-Sync experience.

V-Sync On: G-Sync remains engaged, module may suspend frames during frametime spikes/asset loads.

Paired with an appropriate framerate limit (see “G-Sync Input Latency & Optimal Settings”), this scenario is recommended for a 100% tear-free G-Sync experience. The G-Sync module may suspend frames during frametime spikes (see “What are frametime spikes?” further below), but said instances last mere microseconds, and thus have no appreciable impact on input response.

Fast Sync: Refer to “V-Sync On” above.

What are frametime spikes?

Frametime spikes occur due to asset loads when transitioning from one area to the next, and/or when a script or physics system is triggered. Not to be confused with other performance issues, like framerate slowdown or v-sync-induced stutter, the spikes manifest as the occasional hitch or pause, and usually last only a moment, plummeting the framerate into the single digits and concurrently raising the frametime upwards of 1000ms before re-normalizing. The better the game, and the stronger the system, the fewer there are (and the shorter they last), but no game or system can fully avoid their occurrence.
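If you have a per-frame frametime log (e.g. exported from a capture tool), spikes like these can be flagged by comparing each frame against the recent median. This is a sketch only: the function name, the 3x factor and the 30-frame window are arbitrary assumptions, not an established definition of a spike. Note that a 1000 ms frametime corresponds to an instantaneous 1 fps.

```python
from statistics import median
from typing import List, Tuple


def find_frametime_spikes(frametimes_ms: List[float], factor: float = 3.0,
                          window: int = 30) -> List[Tuple[int, float, float]]:
    """Flag frames whose frametime exceeds `factor` times the median of the
    preceding `window` frames. Returns (index, frametime_ms, instantaneous_fps)."""
    spikes = []
    for i, ft in enumerate(frametimes_ms):
        history = frametimes_ms[max(0, i - window):i]
        if len(history) < 5:  # not enough history to judge yet
            continue
        if ft > factor * median(history):
            spikes.append((i, ft, 1000.0 / ft))
    return spikes
```

Using a median rather than a mean keeps a single 1000 ms hitch from inflating the baseline for the frames that follow it.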

G-Sync Input Latency & Optimal Settings

Test Methodology:

The methodology described in Chief Blur Buster's article (http://www.blurbusters.com/gsync/preview2/) was used for the below tests. I employed a Casio Exilim EX-ZR200 camera capable of 1000 fps video capture, and created an app to light up the onboard LED on my Deathadder Chroma's scrollwheel when clicked. All results were sampled from the middle (crosshair level) of the screen.

While I saved myself the trouble of wiring my mouse to accept an external LED, it did come at the cost of additional delay. Due to inherent mouse click latency, driver overhead, and USB polling, there is an alternating 9 to 10ms delay from mouse click to LED light-up. To calculate my error margin, I recorded my index finger depressing the mouse's scrollwheel a total of 20 times, giving an average of 9.45ms, which was added to each sample's final number.
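The arithmetic behind each sample can be sketched as follows: at 1000 fps, each camera frame is 1 ms, so the frame count between the LED lighting up and the on-screen change is the latency after the LED, and the average click-to-LED delay (9.45ms here) is added back. The function names, frame indices and sample values below are hypothetical illustrations, not data from the spreadsheet.

```python
from statistics import mean
from typing import List


def mean_click_offset_ms(click_to_led_ms: List[float]) -> float:
    """Average click-to-LED delay, used as a fixed correction."""
    return mean(click_to_led_ms)


def click_to_photon_ms(led_frame: int, screen_frame: int, offset_ms: float,
                       camera_fps: float = 1000.0) -> float:
    """Convert the camera frames between LED light-up and the on-screen
    change into milliseconds, then add back the click-to-LED offset."""
    frame_ms = 1000.0 / camera_fps  # 1 ms per frame at 1000 fps
    return (screen_frame - led_frame) * frame_ms + offset_ms
```

For instance, an on-screen change 25 camera frames after the LED lights up would be recorded as 25 + 9.45 = 34.45 ms.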

An excel document containing all of the original samples, runs and scenarios is available for download here:

https://drive.google.com/uc?export=down ... FpGVUxZMkk

Test Setup:

Camera: Casio Exilim EX-ZR200, 224 x 64 px video @1000 fps

Display: Acer Predator XB271HU 144 Hz (165 Hz overclockable) G-Sync @1440p, reported 3.25 ms response time

Mouse: Razer Deathadder Chroma, reported 1ms response time (800 dpi/1000 Hz polling rate)

OS: Windows 10 64-bit (Anniversary Update)

Nvidia Driver: 376.09

Nvidia Control Panel: Default settings

Motherboard: ASRock Z87 Extreme4

Power Supply: EVGA SuperNOVA 750 W G2

CPU: i7-4770K @4.2 GHz (4 cores/8 threads, Hyper-Threaded)

Heatsink: Hyper 212 Evo w/2x Noctua NF-F12 Fans

GPU: GIGABYTE GTX 980 Ti G1 Gaming 6 GB (1404 MHz Boost Clock)

RAM: 16 GB G.SKILL Sniper DDR3 @1866 MHz (Dual Channel: 9-10-9-28, 1T)

SSD (OS): 256 GB Samsung 850 Pro

HDD (Games): 5 TB Western Digital Black 7200 RPM/128 MB Cache

Test Scenario:

Game: CS:GO

Settings: Lowest, "Multicore Rendering" disabled

Mode: Offline With Bots (No Bots)

Map: Overpass

Weapon: Glock-18

Samples: 20 shots per run

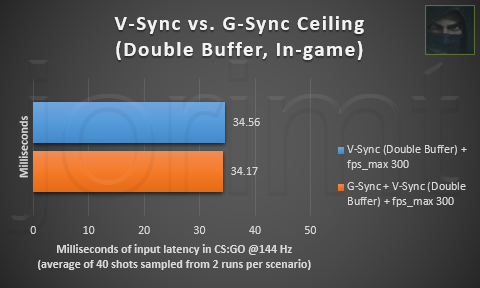

V-Sync vs. G-Sync Ceiling:

Is there an input latency difference between double buffer v-sync and the G-Sync Ceiling?

Conclusion: No input latency difference.

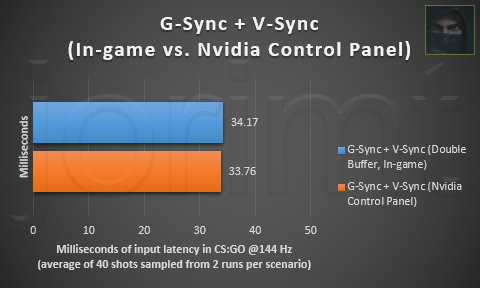

In-game V-Sync vs. Nvidia Control Panel V-Sync:

Is there an input latency difference between G-Sync + In-game v-sync and Nvidia Control Panel v-sync?

Conclusion: No input latency difference.

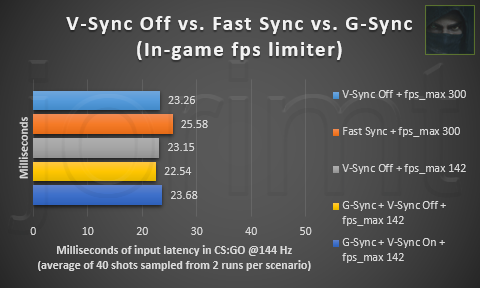

V-Sync Off vs. Fast Sync vs. G-Sync (In-game fps limiter):

Is there an input latency difference between v-sync off and G-Sync + v-sync on/off at the same framerate limit?

Conclusion: No input latency difference (<1ms differences within margin of error). At 144 Hz, limiting 2 fps below the G-Sync ceiling is sufficient to prevent double buffer-level input latency. Fast Sync appears to add an average of 2ms of input latency over the other methods at 144 Hz (research ongoing).

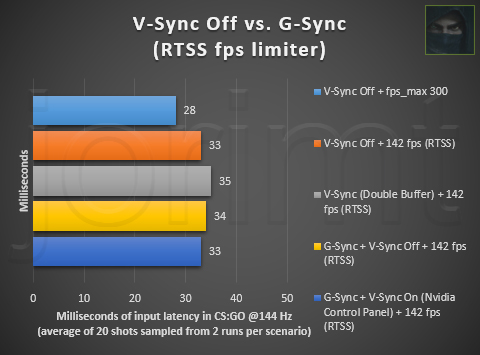

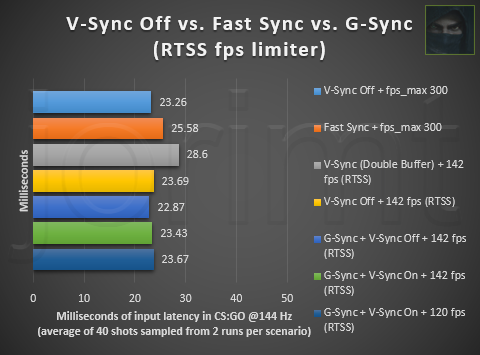

V-Sync Off vs. Fast Sync vs. G-Sync (RTSS):

Is there an input latency difference between v-sync off and G-Sync + v-sync on/off at the same framerate limit with RTSS?

Conclusion: No input latency difference (<1ms differences within margin of error). As evident from the above chart, RTSS adds up to 1 frame of input latency with standalone v-sync engaged, but with v-sync off and with G-Sync + v-sync on/off, there is no input latency increase.

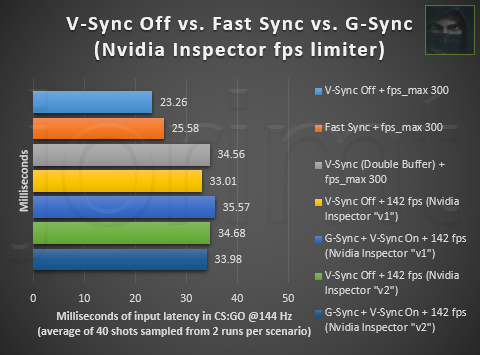

RTSS Update (01/24/2017): A recent video by Battle(non)sense (https://youtu.be/rs0PYCpBJjc?t=2m32s) has raised the possibility that RTSS adds 1 frame of input latency with G-Sync. I'm currently investigating the cause of this discrepancy, and will update here when I learn more.

RTSS Update (03/26/2017): RTSS does indeed appear to introduce up to 1 additional frame of latency, even with G-Sync:

The above test results were captured with the identical scenario, rig, and mouse used in previous tests, but the mouse has now been modified with an external LED for more consistent, accurate results.

So what was causing the discrepancy? CS:GO's quirky "Multicore Rendering" option. I had it disabled for my previous tests, as it is known to allow the lowest input latency in this specific game. Disabling it makes CS:GO run on a single CPU core, and since RTSS limits frames on the CPU side, the interaction between this setting and RTSS likely allows RTSS (for whatever reason) to deliver frames without its usual delay, at least when running CS:GO in single-core mode on a multi-core CPU.

V-Sync Off vs. Fast Sync vs. G-Sync (Nvidia Inspector):

Is there an input latency difference between v-sync off and G-Sync + v-sync on at the same framerate limit with Nvidia Inspector?

Conclusion: No input latency difference. However, as evident from the above chart, both Nvidia Inspector's "v1" and "v2" framerate limiting methods add up to 2 additional frames of input latency, even with v-sync disabled. As such, this method should only be used to limit frames when paired with standalone v-sync.
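Latency quoted "in frames" depends on the framerate: one frame is one frametime. A small helper makes the conversion explicit; the function name is hypothetical, and evaluating it at the 142 fps cap from the settings below is an assumption about where the limiter is running.

```python
def frames_to_ms(frames: float, fps: float) -> float:
    """Latency expressed in frames, converted to milliseconds at a given framerate."""
    return frames * 1000.0 / fps


for limiter, frames in (("RTSS (up to)", 1), ("Nvidia Inspector (up to)", 2)):
    print(f"{limiter}: {frames_to_ms(frames, 142):.1f} ms at 142 fps")
```

At 142 fps, one frame works out to about 7.0 ms and two frames to about 14.1 ms; at lower framerates the same frame count costs proportionally more.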

Optimal G-Sync Settings:

G-Sync + V-Sync On (Nvidia Control Panel) + fps limit:

100 Hz: 98 fps limit (In-game)

144 Hz: 142 fps limit (In-game)

165 Hz: 162 fps limit (In-game)

Nvidia Control Panel V-Sync vs. In-game V-Sync:

Nvidia v-sync has no input latency advantage over in-game v-sync, and when used with G-Sync + an fps limit, its fixed refresh rate fallback should never engage. However, some in-game v-sync solutions may introduce strange frame pacing behaviors, enable triple buffer v-sync automatically (not optimal for the native double buffer of G-Sync), or simply not function at all. And as described in the "G-Sync Range" section, the G-Sync module relies on v-sync "On" to compensate for frametime variances and avoid tearing at all times. On rare occasions, however, v-sync will only function with the in-game solution enabled, so if tearing or other anomalous behavior is observed with Nvidia v-sync (or vice versa), user experimentation may be required.

In-game vs. External Framerate Limiters:

In-game framerate limiters are the superior method of capping, as they do not introduce additional input latency and (with exceptions) provide more consistent frame pacing than external methods. External framerate limiters sit too far down the rendering chain and, similar to v-sync, effectively throttle the framerate; as long as the external cap is the framerate’s limiting factor, additional input latency will be introduced. Nvidia Inspector limits frames on the driver side and adds up to 2 frames of input latency (as much as double buffer v-sync), while RTSS limits frames on the CPU side and adds up to 1 frame of input latency.
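For illustration, a generic deadline-based frame cap looks roughly like this. It is a minimal sketch, not RTSS's or Nvidia Inspector's actual implementation; the class name and the injectable `clock`/`sleep` parameters are my additions for testability, and real limiters typically combine sleeping with busy-waiting because `time.sleep` is not precise enough for sub-millisecond pacing. One way to picture the "too far down the rendering chain" point: a limiter sitting outside the game can only wait after the game has already built the frame.

```python
import time
from typing import Callable, Optional


class FrameLimiter:
    """Minimal frame cap: blocks until the next frame deadline."""

    def __init__(self, fps: float,
                 clock: Callable[[], float] = time.perf_counter,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.interval = 1.0 / fps
        self.clock = clock
        self.sleep = sleep
        self.deadline: Optional[float] = None

    def wait(self) -> float:
        """Call once per frame before presenting; returns seconds spent waiting."""
        now = self.clock()
        if self.deadline is None:  # first frame: just start the schedule
            self.deadline = now + self.interval
            return 0.0
        delay = self.deadline - now
        if delay > 0:
            self.sleep(delay)  # this wait is what throttles the framerate
        # Advance from the previous deadline when on time (no cumulative drift);
        # restart from "now" when late, so missed frames are not made up in a burst.
        self.deadline = max(self.deadline, now) + self.interval
        return max(delay, 0.0)
```

Scheduling from the previous deadline rather than from the current time keeps the average rate locked to the cap, which is one reason a CPU-side limiter can show very little drift.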

Finally, in-game framerate limiters are known to drift by as much as +/- 3 frames, while RTSS has very little frame drift in comparison. As such, while an RTSS fps limit of 142 may suffice in keeping the framerate below the G-Sync ceiling on a 144 Hz display, certain in-game limiters may (or may not) need a slightly lower limit to achieve the same result.
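The worst-case arithmetic behind that advice can be sketched as below. The function names are hypothetical, the +/- 3 fps drift comes from this paragraph, and the 0.5 fps figure used for RTSS in the example is an assumed stand-in for "very little." This is a headroom check, not a measured recommendation; actual drift varies by game.

```python
def cap_has_headroom(cap_fps: float, max_hz: float, drift_fps: float) -> bool:
    """True if the cap stays below the refresh ceiling even at its worst upward drift."""
    return cap_fps + drift_fps < max_hz


def highest_safe_integer_cap(max_hz: int, drift_fps: int) -> int:
    """Largest whole-number cap whose worst-case drift stays below the ceiling."""
    return max_hz - drift_fps - 1
```

On a 144 Hz display, a 142 fps cap with an assumed 0.5 fps drift stays below the ceiling, but with a worst-case 3 fps drift it can reach 145 fps; under that worst case, 140 fps would be the highest whole-number cap that keeps clear.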

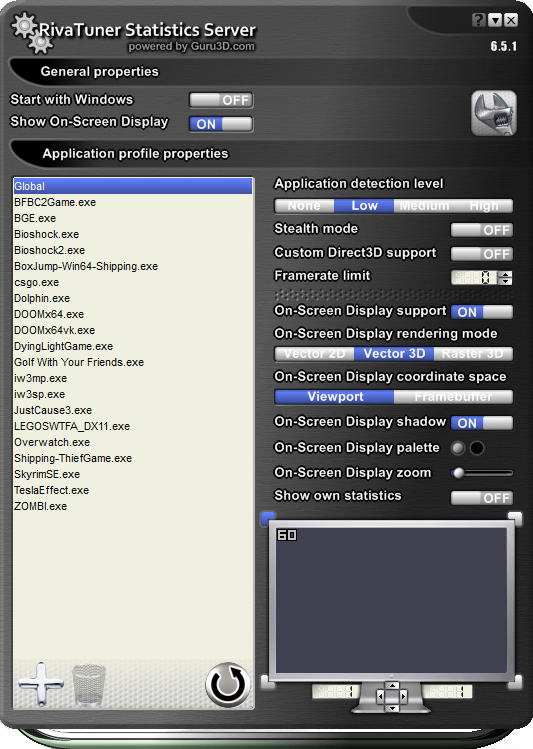

External Framerate Limiters: How-to

RivaTuner Statistics Server (preferred):

RTSS is available standalone (http://www.guru3d.com/files-details/rts ... nload.html), or bundled with MSI Afterburner (http://www.guru3d.com/files-details/msi ... nload.html).

If only a framerate limiter is required, the standalone download will suffice. MSI Afterburner itself is an excellent overclocking tool that can be used in conjunction with RTSS to inject an in-game overlay with multiple customizable performance readouts.

RTSS can limit the framerate either globally or per profile. To add a profile, click the cross button in the lower left corner of the RTSS window and navigate to the game's exe. To set a frame limit, click the "Framerate limit" box and input a number.

Do note that RTSS is currently not supported in most Windows Store games utilizing the UWP (Universal Windows Platform).

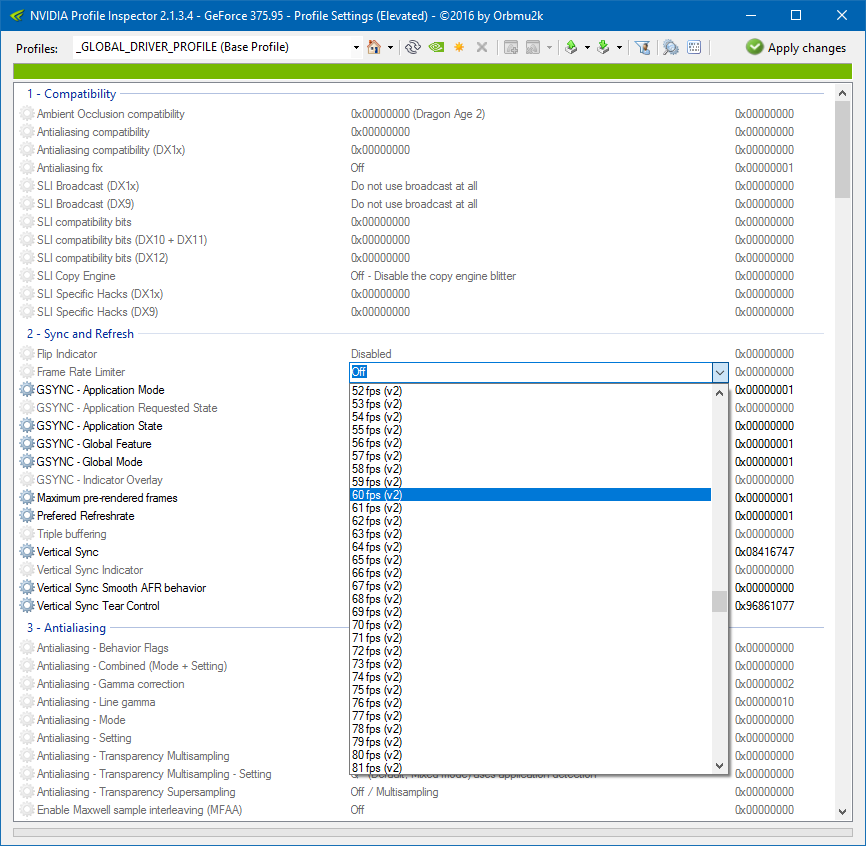

Nvidia Inspector (alternate):

An unofficial extension of the official Nvidia control panel, Nvidia Inspector (https://ci.appveyor.com/project/Orbmu2k ... /artifacts) exposes many useful options the official control panel does not, including a driver-level framerate limiter.

Nvidia Inspector can limit the framerate either globally or per profile (more details on profile creation can be found here: http://forums.guru3d.com/showthread.php?t=403676).

To set a frame limit, locate the "Frame Rate Limiter" dropdown in the "2 - Sync and Refresh" section, select a "(v1)" or "(v2)" limit (there are no official details on the differences between the two available versions), and then click the "Apply Changes" button in the upper right corner of the Nvidia Inspector window.

References & Sources

Official:

Nvidia's Tom Petersen explains G-Sync in-depth (10/21/2013):

https://youtu.be/KhLYYYvFp9A

Nvidia announces G-Sync windowed mode and Vertical sync toggle (05/31/2015):

http://www.geforce.com/whats-new/articl ... ven-better

Nvidia's Tom Petersen explains G-Sync's "Minimum Refresh Range" (06/10/2015):

https://youtu.be/2Fi1QHhdqV4?t=41m21s

Nvidia's Tom Petersen clarifies G-Sync's performance impact over other syncing methods (06/10/2015):

https://youtu.be/2Fi1QHhdqV4?t=46m2s

Nvidia's Tom Petersen explains G-Sync's windowed mode (06/10/2015):

https://youtu.be/2Fi1QHhdqV4?t=47m22s

Nvidia's Tom Petersen explains Fast Sync (05/17/2016):

https://youtu.be/WpUX8ZNkn2U

Nvidia's Tom Petersen specifies Fast Sync's input latency (05/17/2016):

https://youtu.be/xtely2GDxhU?t=1h34m21s

Third party:

AnandTech explains basic G-Sync module functionality (12/12/2013):

http://www.anandtech.com/show/7582/nvidia-gsync-review

Blur Busters investigates G-Sync and framerate limiter impact on input latency (01/13/2014):

http://www.blurbusters.com/gsync/preview2/

http://www.blurbusters.com/gsync/preview2/#comment-2762

PC Perspective analyzes G-Sync's "Minimum Refresh Range" (03/19/2015 - 03/27/2015):

https://www.pcper.com/reviews/Displays/ ... e-VRR-Wind

https://www.pcper.com/reviews/Graphics- ... ies-Differ

Battle(non)sense in-depth Fast Sync analysis (10/12/2016):

https://youtu.be/L07t_mY2LEU

Battle(non)sense tests G-Sync input latency (11/08/2016):

https://youtu.be/F8bFWk61KWA

Blur Busters forum member @RealNC explains why Nvidia Inspector's framerate limiter adds up to 2 frames of input latency (11/11/2016):

http://forums.blurbusters.com/viewtopic ... =30#p22798

Disclaimer & Credits

*G-Sync Range is a work in progress. The specified "Upper Frametime Variances" range may not apply to all games and/or system configurations.

Special credit goes to @Sparky & @RealNC for input & feedback.

Edits

Edit #01-04: Corrected "Minimum Refresh Range," and added two more links to its "References & sources" section.

Edit #05: Corrected "G-Sync's 1ms Polling Rate," and added a link to the "References & sources" section.

Edit #06: Added "G-Sync's V-Sync Fallback Behavior" and "G-Sync Windowed Mode" to "G-Sync 101" section, clarified "Minimum Refresh Range" further, and added a link to the "References & sources" section.

Edit #07: Added "Enabling G-Sync," "G-Sync's 'Preferred refresh rate' option," and instructive screenshots to "G-Sync 101" section.

Edit #08: Further clarified "G-Sync's V-Sync Fallback Behavior" section.

Edit #09: Re-merged "G-Sync IS V-Sync" and "G-Sync's V-Sync Fallback Behavior" sections/miscellaneous corrections.

Edit #10-11: Added "How-to: External Framerate Limiters" section/miscellaneous corrections.

Edit #12: Reorganized "References & Sources" section.

Edit #13-17: Added download link below chart image to "G-Sync Range Chart" section/miscellaneous corrections.

Edit #18-19: Corrected Fast Sync input latency amount in "G-Sync Range Chart" and "G-Sync Range" sections, and added a link to "References & Sources" section/miscellaneous corrections.

Edit #20-23: Miscellaneous corrections to "G-Sync 101" and "G-Sync Range" sections.

Edit #24-25: Added "G-Sync Functionality" section (to be expanded in the future) and renamed "G-Sync Is V-Sync" section to "G-Sync & V-Sync"/miscellaneous corrections.

Edit #26-30: Added "G-Sync Input Latency" section with preliminary input latency tests (more to come). Updated "G-Sync Range Chart" image and modified "G-Sync Range Chart Legend" to reflect image changes. More edits with wording corrections to come in near future...

Edit #31-38: Wording corrections to "G-Sync Range Chart Legend" section/miscellaneous corrections.

Edit #39-45: Merged "G-Sync Input Latency" and "Optimal G-Sync Settings" sections, added "Why Nvidia Control Panel V-Sync Over In-game V-Sync?" paragraph. Miscellaneous corrections.

Edit #46-52: Merged "G-Sync 101" with "G-Sync Functionality" section, and "G-Sync Range Chart" with "G-Sync Range" section. Miscellaneous corrections.

Edit #53: Miscellaneous corrections.

Edit #54-56: Updated "G-Sync Range" chart and "G-Sync Input Latency & Optimal Settings" section. Miscellaneous corrections.

Edit #57-59: Removed "Original thread" link at the top of post, and clarified margin of error in "G-Sync Input Latency & Optimal Settings" section.

Edit #60-62: Added RTSS input latency disclaimer in "G-Sync Input Latency & Optimal Settings" section. Miscellaneous corrections.

Edit #63-64: Updated RTSS input latency disclaimer with new test results in "G-Sync Input Latency & Optimal Settings" section, and corrected both "Optimal G-Sync Settings" and "In-game vs. External Framerate Limiters" paragraphs to reflect this.