jorimt wrote: ↑20 Aug 2020, 08:33

Your only "reactive" advantage over G-SYNC with G-SYNC off in that range is the visible tearing; when it tears, there's your one and only "advantage." Otherwise, there's no difference between the two performance/input lag-wise during normal operation.

pellson wrote: ↑14 Dec 2020, 20:31

I have the Vg27aq and it 100% has an extremely small increase in input lag just by enabling the VRR in the OSD. No, I don't want to hear "that's because you have to cap fps beneath max bla bla" I know all this stuff already how vsync works.

So depending on interpretation/language barrier, both YOU *and* Jorim are right.

Terminologically, there isn't much difference between your two positions. It is a matter of interpretation, given the English language (and its strange nuances), and confusion can arise when the writer is not a native English speaker.

I think there's a misunderstanding. The "advantage" in "When it tears, there's your one and only advantage" is the lower latency, which jorim is agreeing with you on, as a tradeoff for the tearing.

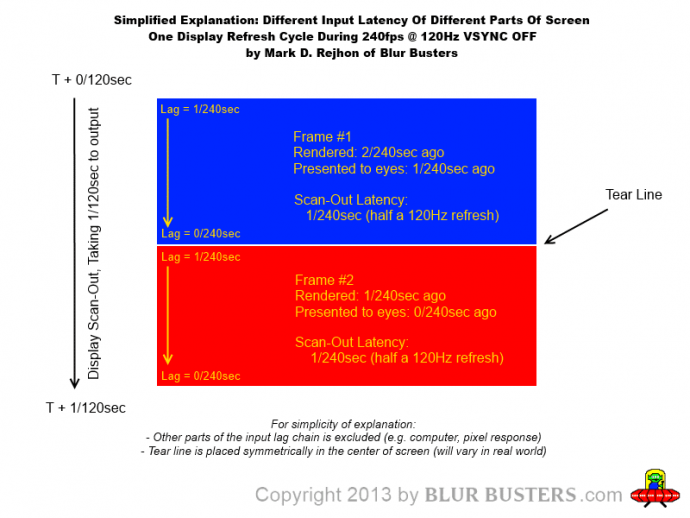

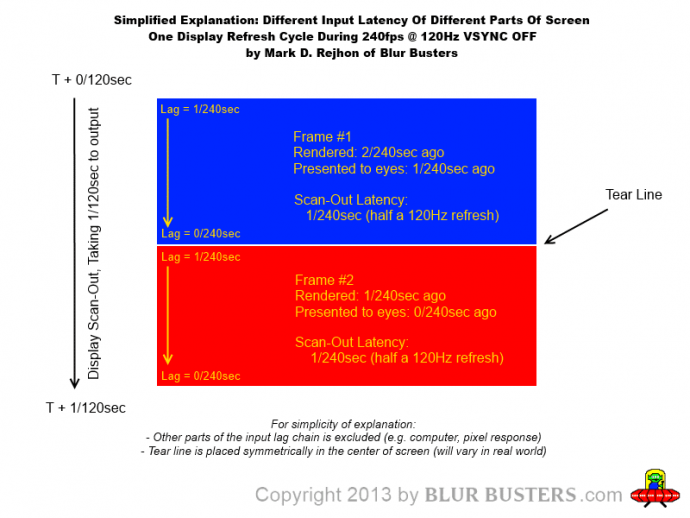

There is a slight sync-technology-related average absolute latency penalty for VRR versus VSYNC OFF, corresponding to one scanout halftime (e.g. a 0.5/155 sec latency penalty), given randomized tearline placement along the scanout.

The whole screen is a vertical latency gradient that is typically [0...1/maxHz] on most gaming monitors, at least the ones that have realtime synchronization between cable scanout and panel scanout.

For esports-friendly VRR, I highly recommend higher Hz such as 240Hz or 360Hz, since the scanout halftime (0.5/360) begins to create an almost-negligible VRR-induced scanout latency penalty.
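To put numbers on the scanout halftime, here is a minimal Python sketch. The function name `scanout_halftime_ms` is only for illustration, and it assumes a full refresh is scanned out in exactly 1/maxHz seconds (blanking intervals ignored).

```python
def scanout_halftime_ms(max_hz: float) -> float:
    """Half of one full scanout at the panel's max refresh rate, in milliseconds.

    Assumption: scanout takes exactly 1/max_hz seconds, blanking ignored.
    """
    return 0.5 / max_hz * 1000.0


# 155 is the example figure used above; the others are common gaming refresh rates.
for hz in (144, 155, 240, 360):
    print(f"{hz} Hz: scanout halftime = {scanout_halftime_ms(hz):.2f} ms")
```

At 360Hz this works out to about 1.39 ms, versus about 3.47 ms at 144Hz, which is why the penalty becomes close to negligible at higher Hz.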

That said, VRR is the world's lowest-lag "non-VSYNC-OFF" sync technology, generally lower lag than everything other than VSYNC OFF.

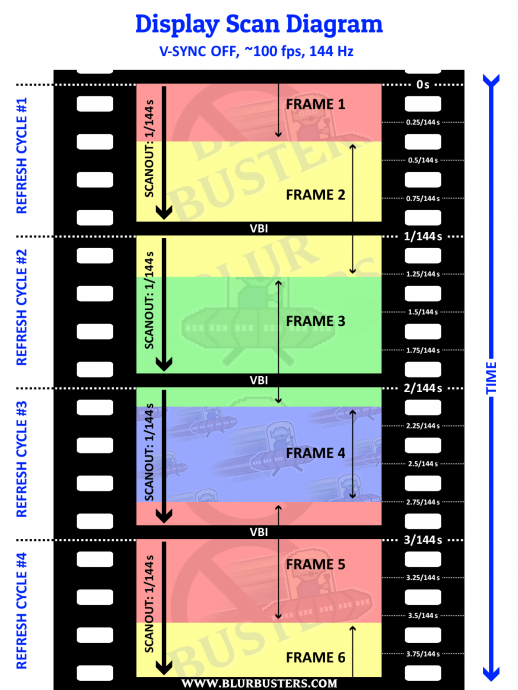

Not all pixels on a display panel refresh at the same time. Both VSYNC OFF and VRR have the same latency for scanline number #001 (top edge for VRR, and first scanline of a frameslice for VSYNC OFF). During VSYNC OFF, each frameslice is a latency gradient of [0...frametime] milliseconds, while for any other sync technology, the whole screen surface is a [0...1/maxHz] latency gradient. So the beginning of the latency gradient is identical (+0 adder), but with VSYNC OFF the beginning of the latency gradient (+0) can be closer to the center of the screen, thanks to a tearline occurring right above the crosshairs, for example.

That's the advantage that tearing gives you.
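As a rough illustration of the crosshair example, here is a Python sketch of the scanout latency adder at a given scanline. It is a simplified model under stated assumptions: constant scanout velocity at max Hz, no vertical blanking, and a new frame starting exactly at the tearline. The function names and the 1440-line / 144Hz / tearline-at-700 numbers are hypothetical, chosen only for the example.

```python
def vrr_adder_ms(scanline: int, total_lines: int, max_hz: float) -> float:
    """Scanout latency adder for VRR: the gradient starts at the top edge."""
    return scanline / total_lines * 1000.0 / max_hz


def vsync_off_adder_ms(scanline: int, tearline: int,
                       total_lines: int, max_hz: float) -> float:
    """Scanout latency adder for VSYNC OFF: the gradient starts at the tearline.

    Only valid for scanlines at or below the tearline, i.e. inside the
    frameslice that begins there (simplifying assumption).
    """
    if scanline < tearline:
        raise ValueError("scanline is above the tearline (previous frameslice)")
    return (scanline - tearline) / total_lines * 1000.0 / max_hz


# Hypothetical example: 1440 scanlines, 144Hz, crosshairs at mid-screen,
# and a tearline that happens to land 20 scanlines above the crosshairs.
LINES, HZ, CROSSHAIR, TEARLINE = 1440, 144, 720, 700

print(f"VRR adder at crosshairs:       {vrr_adder_ms(CROSSHAIR, LINES, HZ):.3f} ms")
print(f"VSYNC OFF adder at crosshairs: "
      f"{vsync_off_adder_ms(CROSSHAIR, TEARLINE, LINES, HZ):.3f} ms")
```

In this model both functions return +0 at the first scanline of their gradient; the only difference is where on the screen that first scanline sits.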

However, given that people in over 180 countries read Blur Busters, that many readers' first languages differ, and that not everyone realizes latency is not a single number, it's true we sometimes need to give more detailed answers nowadays. Devices such as LDAT and other testers often don't account for the latency gradient effect, as single-pixel photodiode tests aren't good at measuring the latency gradient of a random VSYNC OFF frameslice. The gradient is, however, very visible in things like high speed videos (of Slo Mo Guys fame) or when putting multiple photodiodes simultaneously all over the screen.

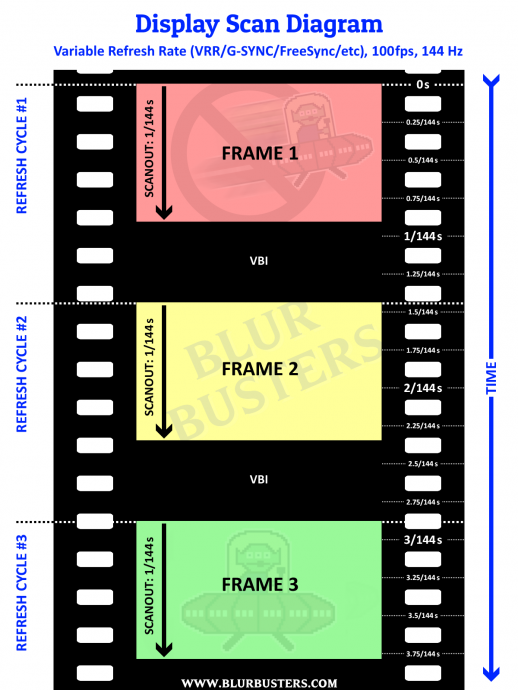

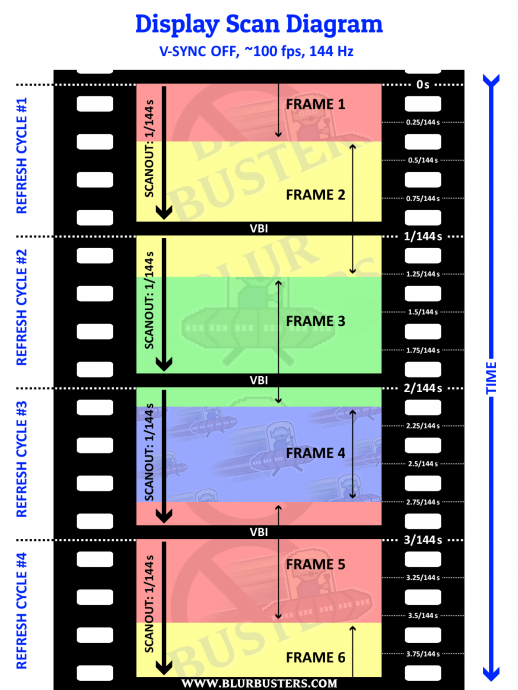

First, screens refresh from top to bottom, as seen in high speed videos, which creates interesting behaviours for GSYNC versus VSYNC OFF.

Example of VSYNC OFF sub-divided latency gradients

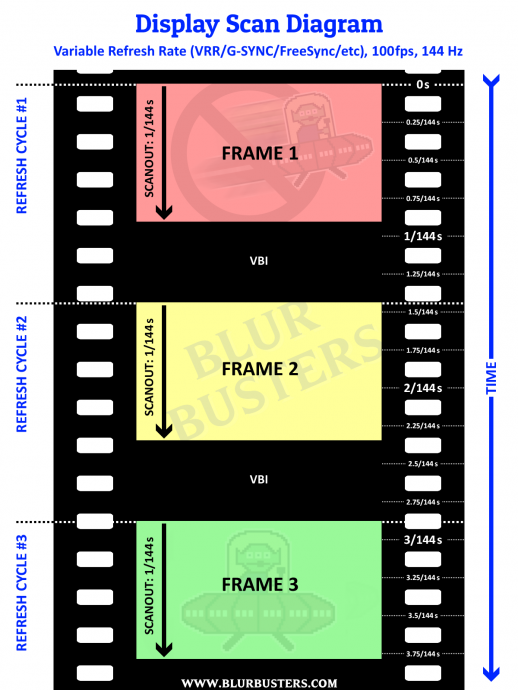

Scanout: GSYNC 100fps at 144Hz

Scanout: VSYNC OFF 100fps at 144Hz

As you can see, the latency gradients are different: [0...1/maxHz] for VRR versus [0...frametime] for VSYNC OFF. So the minimum latency adder (+0) is identical for both (Jorim is correct), but the maximum is different (pellson is correct).
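The two gradient ranges can be computed directly. This is a small Python sketch, not a measurement tool: `gradient_ranges_ms` is a hypothetical helper, 100fps at 144Hz matches the scanout captions above, and the 300fps row is an extra illustrative value that is not from the diagrams.

```python
def gradient_ranges_ms(fps: float, max_hz: float):
    """Return ((min, max) for VRR, (min, max) for VSYNC OFF) in milliseconds.

    VRR:       whole screen is one gradient of [0 ... 1/maxHz].
    VSYNC OFF: each frameslice is a gradient of [0 ... frametime].
    """
    vrr = (0.0, 1000.0 / max_hz)
    vsync_off = (0.0, 1000.0 / fps)
    return vrr, vsync_off


for fps in (100, 300):  # 300 is an assumed extra example
    vrr, vsync_off = gradient_ranges_ms(fps, max_hz=144)
    print(f"{fps}fps at 144Hz: "
          f"VRR [{vrr[0]:.2f} ... {vrr[1]:.2f}] ms, "
          f"VSYNC OFF [{vsync_off[0]:.2f} ... {vsync_off[1]:.2f}] ms")
```

The minimum is +0 in both cases. The VRR maximum is tied to max Hz, while the VSYNC OFF maximum shrinks as the frame rate goes up.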

Remember: Not All Pixels Refresh At The Same Time!!

Remember: Not All Pixels Are The Same Latency!!

pellson wrote: ↑14 Dec 2020, 20:31

Sorry for bad English, and also writing on mobile.

Many forum members here do not speak English as a first language.

I'm kind of a stickler about terminology around here to minimize disagreements, given the international nature of our audience, since latency is not a single number, and it is not the same for every pixel on the screen surface.

VSYNC OFF is more responsive for esports use because of its lower average latency (the midpoint of MIN...MAX), at the cost of motion fluidity and tearing. For average absolute latency, VSYNC OFF is hard to beat (especially great in games such as CS:GO), while G-SYNC (at extreme refresh rates) can still give an advantage in games that are stutter-prone enough to interfere with aiming.