KKNDT wrote:From your post, I can read:

1. Why G-SYNC+V-SYNC tends to have more lag than V-SYNC OFF/(G-SYNC+V-SYNC OFF)

If the frame cap software is perfectly accurate, there is absolutely no difference.

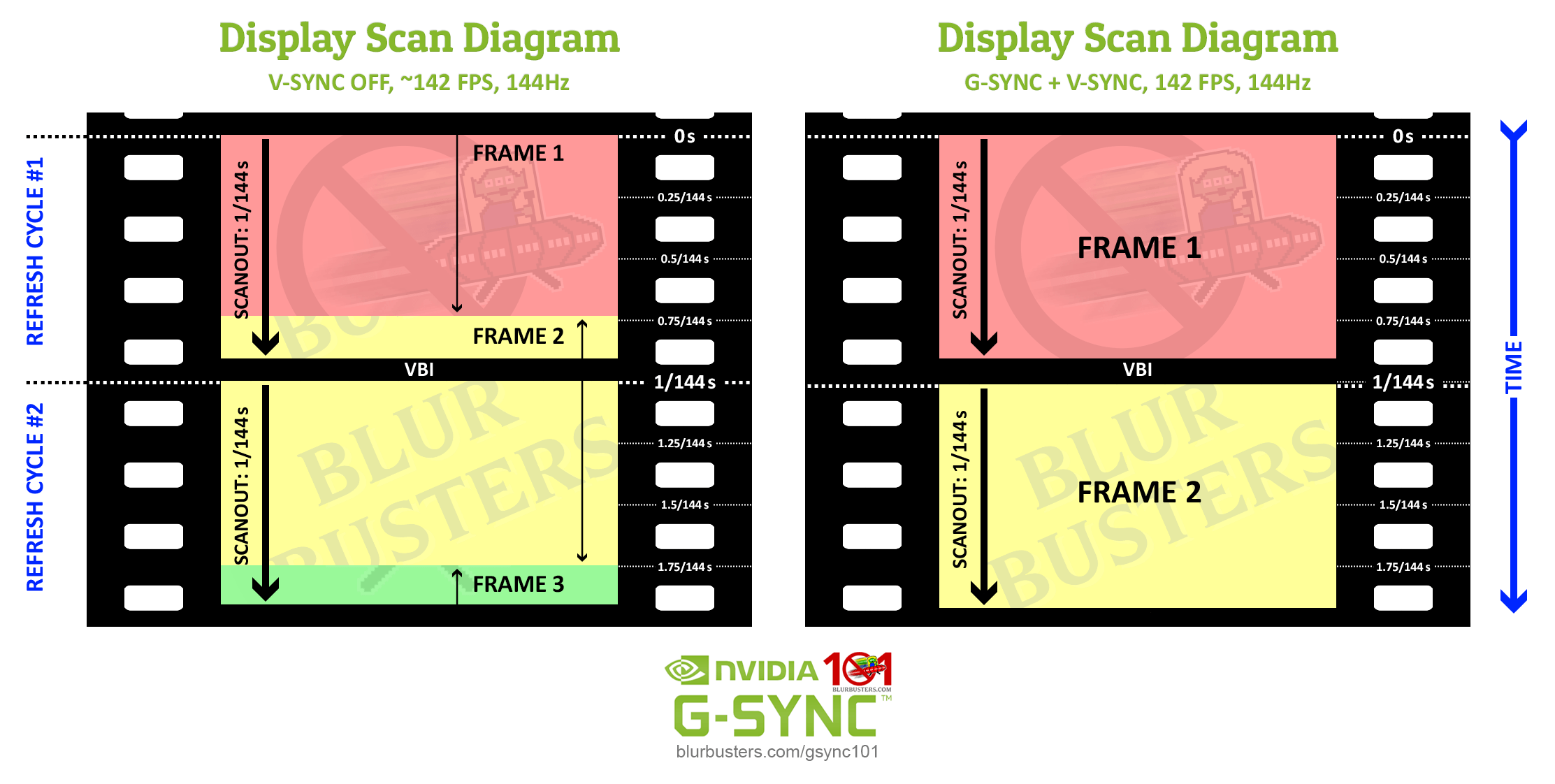

As long as no frametime is ever shorter than a refresh cycle, max Hz is never reached, so neither VSYNC ON behavior nor VSYNC OFF behavior ever kicks in. Either one only happens when a frame tries to begin delivering before the monitor is finished with its previous refresh cycle.

As long as the cap is perfect, always below max Hz, big whoop. VSYNC ON never occurs. VSYNC OFF never occurs. So the lag differences never occur.

But in the real world, caps are imperfect. Game framerates are imperfect. Caps and framerates fluctuate a bit.

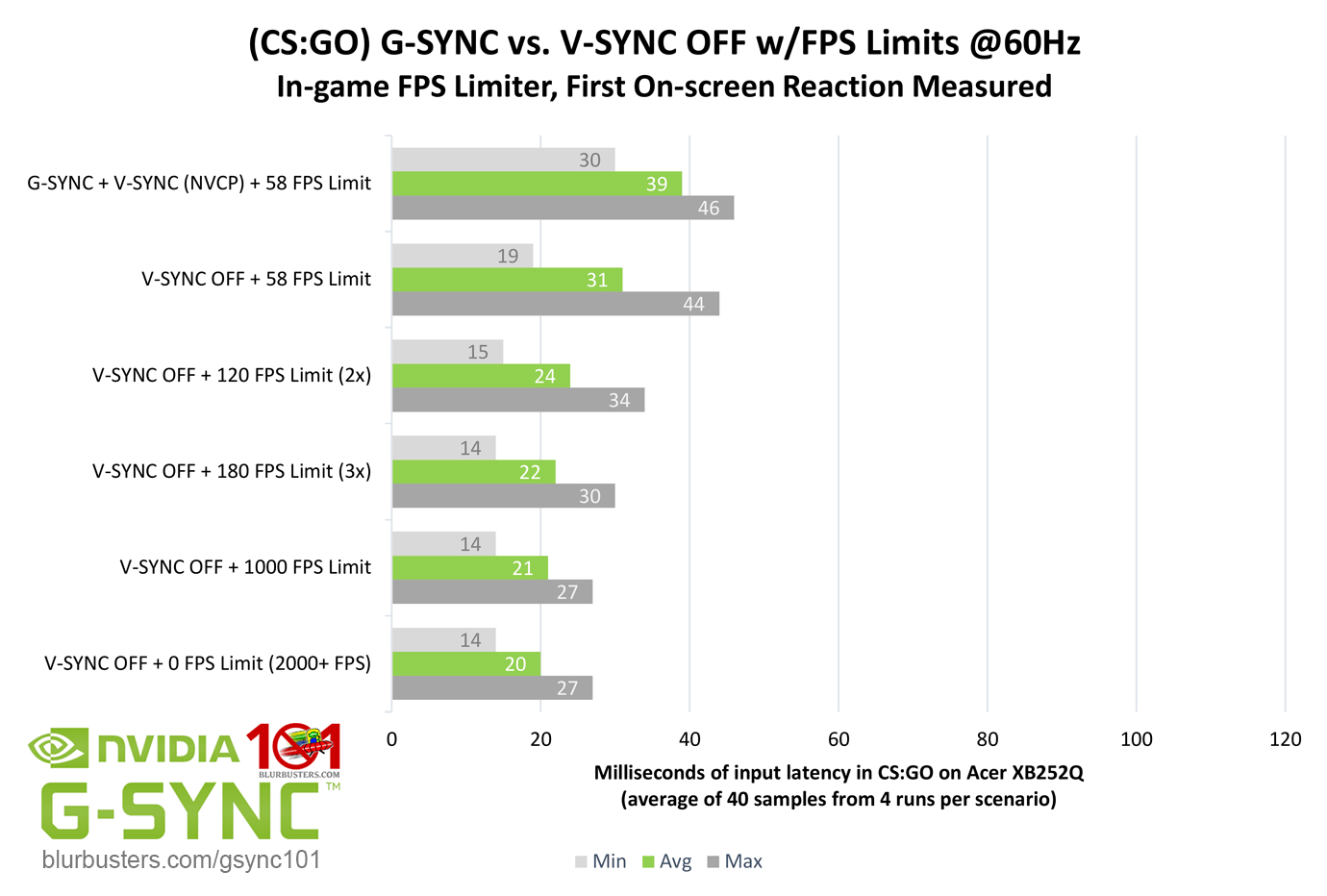

That's why we need to cap a few frames per second below max Hz because the frametimes will vary a bit. The more accurate the cap is, the tighter the limit can be. RTSS can do extremely accurate frame rate capping, while in-game frame rate capping (usually) is less accurate. However, in-game capping has less lag.

You have to trade off the extra lag of more accurate capping against the extra lag of very erratic capping (which creates transient sub-refresh-cycle frametimes, which can create lag against max Hz during GSYNC + VSYNC ON).

KKNDT wrote:2. How fast/slow frames lead to extra latency

Only transiently, for those specific 'fast' frames.

KKNDT wrote:3. The bigger lag number by capped G-SYNC+V-SYNC usually does not matter at all

There's no difference if you're using an accurate cap that never causes a single frametime to be faster than a monitor refresh cycle. The difference only shows up for those specific "frame-faster-than-refresh-cycle" frames.

A frame may take 1/143sec then the next frame may take 1/145sec. One of those two is faster than a 144Hz refresh cycle. It'll be delayed by roughly ((1/144) - (1/145)) sec = 48 microseconds, because that "too-fast" frame is now forced to wait for a refresh cycle.
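The arithmetic above can be sketched in a few lines of Python. The function name `forced_wait_us` is made up for illustration, and the sketch assumes an ideal VRR implementation where a too-fast frame waits exactly until the current refresh cycle ends (no polling granularity):

```python
def forced_wait_us(frametime_s: float, max_hz: float) -> float:
    """Microseconds a frame must wait under G-SYNC + V-SYNC ON.

    Assumption: a frame that arrives sooner than one refresh cycle at
    max Hz is held until that refresh cycle finishes; any slower frame
    is delivered immediately (zero wait).
    """
    refresh_cycle_s = 1.0 / max_hz
    return max(0.0, refresh_cycle_s - frametime_s) * 1e6


print(round(forced_wait_us(1 / 145, 144), 1))  # too-fast frame: about 48 us
print(forced_wait_us(1 / 143, 144))            # slower than a refresh: 0.0
```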

Frame cap inaccuracy encroaching VRR max Hz

Now if your framerate cap inaccuracy is about 5%, your cap of 140fps may cause frametimes to vary in time from 0.95/140sec through 1.05/140sec .... As frametimes fluctuate, the fastest frame is 0.95/140sec = 6.78 milliseconds, which is faster than a 144Hz refresh cycle of 1/144sec = 6.94 milliseconds. So you've got a forced wait of (6.94 - 6.78) = 0.16 milliseconds = 160 microseconds for that too-fast frame during VSYNC ON + GSYNC. Those are really tiny numbers!

Frame cap so accurate it never hits VRR max Hz

Now, if your framerate cap inaccuracy was only 1% for a 140fps cap, your frametimes will fluctuate between 0.99/140sec and 1.01/140sec. The fastest frame, 0.99/140sec = 7.07ms ... That's still slightly slower than a 144Hz refresh cycle of 1/144sec (6.94ms), so no frame is ever forced to wait.
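To compare the 5% and 1% cases side by side, here is a rough Python sketch. The helper names are hypothetical, a 144Hz VRR max is assumed (matching the earlier example), and frametimes are assumed to vary symmetrically by plus or minus the inaccuracy around 1/cap:

```python
def fastest_frametime_ms(cap_fps: float, inaccuracy: float) -> float:
    """Shortest frametime (ms) if frametimes vary by +/- inaccuracy around 1/cap."""
    return (1.0 - inaccuracy) / cap_fps * 1000.0


def worst_case_wait_ms(cap_fps: float, inaccuracy: float, max_hz: float) -> float:
    """Longest forced wait (ms) for the fastest frame under G-SYNC + V-SYNC ON."""
    refresh_cycle_ms = 1000.0 / max_hz
    return max(0.0, refresh_cycle_ms - fastest_frametime_ms(cap_fps, inaccuracy))


for inaccuracy in (0.05, 0.01):
    fastest = fastest_frametime_ms(140, inaccuracy)
    wait = worst_case_wait_ms(140, inaccuracy, 144)
    print(f"{inaccuracy:.0%} cap error: fastest {fastest:.2f} ms, wait {wait:.2f} ms")
# 5% cap error: fastest 6.79 ms, wait 0.16 ms
# 1% cap error: fastest 7.07 ms, wait 0.00 ms
```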

Mathematics theory of determining perfect frame rate caps for a VRR display

In order to do this, you need to experimentally figure out how inaccurate your frame cap is (this is extremely hard to do, but you can output frametime values to an Excel spreadsheet and run math formulas to figure out how much they vary). Now, assume your inaccuracy is 1% (it is very, very hard to get frame capping that accurate). With a frametime variance range of 1%, you want 144Hz * 0.99 = a frame cap of 142.56fps. But if your frame capping is inaccurate to 5%, your perfect cap might be 144Hz * 0.95 = a frame cap of 136.8fps. So you see, the more accurate your frame capper is, the closer you can get to VRR max without those "fast frames" creating lag. On the other hand, "fast frames" tend to create only microseconds of input lag for properly-implemented VRR algorithms (preferably without a polling granularity).
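As a minimal sketch of that procedure in Python instead of a spreadsheet: the function names and the sample frametime log are hypothetical, and "inaccuracy" is assumed here to mean the worst-case deviation from the target frametime (a standard deviation or percentile would be a different, equally defensible choice):

```python
def estimate_inaccuracy(frametimes_ms: list[float], cap_fps: float) -> float:
    """Worst-case relative deviation of logged frametimes from the cap's target."""
    target_ms = 1000.0 / cap_fps
    return max(abs(ft - target_ms) for ft in frametimes_ms) / target_ms


def tightest_cap(max_hz: float, inaccuracy: float) -> float:
    """Highest cap whose fastest frame still stays below max Hz."""
    return max_hz * (1.0 - inaccuracy)


print(round(tightest_cap(144, 0.01), 2))  # 142.56
print(round(tightest_cap(144, 0.05), 2))  # 136.8

# Hypothetical frametime log (ms) captured while running a 140fps cap.
log_ms = [7.10, 7.18, 7.14, 7.20, 7.09]
measured = estimate_inaccuracy(log_ms, cap_fps=140)
print(f"measured inaccuracy {measured:.2%}, cap at {tightest_cap(144, measured):.2f}fps")
```

A real log would need far more than five samples before the worst-case figure means anything.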

Lower refresh rates have a smaller frame rate capping error percentage -- and can theoretically accept tighter frame rate caps

Some software such as well-written 8-bit emulators manage to pull off a 60fps frame cap with that level of frame-pacing accuracy. Also, lower refresh rates have larger refresh cycle times, and frame capping inaccuracy is much less at lower refresh rates. This can work in your favour. Many emulator users have noticed that VRR monitors reduce the input lag of emulators (instead of using 60Hz, you simply play 60fps at 144Hz or 240Hz -- getting the VSYNC ON experience without the input lag of VSYNC ON). But some FreeSync monitors only go up to 60Hz. There are also 4K G-SYNC monitors that only go up to 60Hz. For these displays, you could theoretically slightly overclock your 60Hz VRR monitor to approximately 60.5Hz -- and use a 60fps cap to reduce emulator lag without needing a 75Hz+ VRR monitor. As an alternative, one can slow down the emulator by about 1% -- e.g. make the emulator run at 59.5fps (sounds will lower in pitch by about 1%, game motion slows down by about 1%) and use a 59.5fps frame cap with a 60Hz-limited VRR display -- to reduce emulator lag when you are not using a high-Hz VRR display. The low Hz and the high capping accuracy make this doable, if one has a VRR display limited to 60Hz.
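To see how much capping error each of these setups can tolerate, the cap formula can be turned around: the fastest frame stays below max Hz as long as the inaccuracy is no more than 1 - cap/maxHz. A quick Python check (the function name is hypothetical, and symmetric frametime variation is assumed):

```python
def tolerated_inaccuracy_pct(cap_fps: float, max_hz: float) -> float:
    """Largest cap inaccuracy (%) that never produces a sub-refresh-cycle frametime."""
    return (1.0 - cap_fps / max_hz) * 100.0


print(round(tolerated_inaccuracy_pct(60.0, 60.5), 2))  # overclocked 60Hz VRR: 0.83
print(round(tolerated_inaccuracy_pct(59.5, 60.0), 2))  # slowed-down emulator: 0.83
print(round(tolerated_inaccuracy_pct(140, 144), 2))    # 140fps cap at 144Hz: 2.78
```

So both 60Hz tricks only work if the emulator's frame pacing stays within roughly 0.8% of target.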

Factors beyond control: Granularity effects

Depending on the VRR drivers and VRR monitor technology, there may be some granularity that forces additional delay above-and-beyond. In the best possible implementations of VRR, the frame begins to be delivered immediately upon the new refresh cycle. However, if a polling mechanism is used for a specific VRR tech (e.g. 1ms polling), there might be some added lag granularity. It is the graphics card's responsibility to begin delivering the new refresh cycle as quickly as possible after the last refresh cycle -- preferably within microseconds rather than a millisecond.

Microstutter not completely filtered by VRR

1ms errors in VRR refresh-cycle delivery (relative to frametime/gametime) can create visible microstutter, so monitor manufacturers should not induce any random latency between game-time and refresh-cycle-visibility-time. A 1ms refresh-cycle delivery timing error in an 8000 pixels/sec flick turn is an 8-pixel-amplitude microstutter (8000pix/s * 0.001s = 8pix), so milliseconds matter in gametime-vs-visibilitytime inaccuracies. Measuring this requires a high speed camera of sufficient precision (e.g. Phantom Flex) synchronized to gametime stamps for verification. Also, because of varying GPU rendertime, gametimes are not always perfectly in sync with photons hitting the human eyes. So not all capping techniques may 100% eliminate stutter, depending on how the cap affects the sync of gametime-vs-displayrefresh visibility.
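The microstutter amplitude is simply motion speed multiplied by timing error. A tiny Python illustration (the function name is hypothetical; the 48-microsecond figure is the "too-fast" frame delay from the 144Hz example earlier in this post):

```python
def microstutter_px(speed_px_per_s: float, timing_error_s: float) -> float:
    """Positional error (pixels) caused by a refresh-cycle delivery timing error."""
    return speed_px_per_s * timing_error_s


print(round(microstutter_px(8000, 0.001), 3))  # 1 ms error: 8.0 pixels
print(round(microstutter_px(8000, 48e-6), 3))  # 48 us error: 0.384 pixels
```

That is why millisecond-scale errors can be visible while microsecond-scale forced waits generally are not.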

KKNDT wrote:But I'm not complaining that capped G-SYNC brings more latency and influences gaming experience. I just wonder technically what exact causes the number of (G-SYNC+V-SYNC OFF) to be bigger than (V-SYNC OFF) in Jorimt's G-SYNC 101 review, since you agree that G-SYNC+V-SYNC OFF allows the tearing to appear, which means the frame can be scanned out as soon as it finishes rendering.

Depends on the capping algorithm: In theory, if the frame rate capping is sufficiently accurate and you have enough capping headroom, tearing never appears. In the real world, not everything is perfect, alas. But as you can see, delays on early frames tend to be extremely minor -- often the lesser evil compared to a lower cap or using VSYNC OFF.

Once you add a few frames per second of headroom to the cap, the occasionally delayed early frames become a nonissue. If you see only 1 tearline per second during GSYNC+VSYNC OFF (only one early frame per second) -- then using GSYNC+VSYNC ON only delays that 1 specific refresh cycle (often by far less than 1ms).

With the 40-run averages in Jorimt's tests, this really shows the non-issueness of rare (occasional) tearlines that often appear briefly near the bottom edge of the screen.