Colonel_Gerdauf wrote: 14 Jun 2022, 02:35

First, your entire response is a case of missing the forest for the trees. Second, the tone that you have used here is not really helping your case, to put it nicely.

I didn't intend to insult you or be rude to you. I am no politician who has to use only PR-speak. Therefore, if some opinions are stupid, I will call them stupid. Opinions, not people, mind you. Just as you did earlier. Point out where I was not clear enough, and if what I wrote seemed rude towards you, I'll try to do better in the future. To be honest, I am a bit surprised to see this coming from you, since you didn't care much about the potential of being rude towards people who value motion clarity, to put it mildly:

And a side note: this CRT fanboyism over BFI has another issue that I am struggling to cope with

Remember saying that? Well, that's really rude, derogatory and insulting.

I'm sure we are capable of respectful conversation even if we disagree on the matter of discussion.

But I do apologize for writing more than it takes to convey the point. I'm guilty of that.

Let's begin with my point about pixel density. Perfect motion clarity is completely worthless if a person can tell the pixels apart at the expected viewing distance. You have fallen right into the trap of so many others when it comes to the pursuit of motion clarity; you have become tunnel-visioned to the point of being abrasively dismissive of things such as latency, pixel density, or the variable nature of electronics. Focusing on motion clarity is not by itself a bad thing, but you need to remember that it is only one part, one component, of the viewing/playing experience. There are other factors at play, and to say that one makes the rest insignificant is silly and is doomed to invite "toxic" feedback.
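One common way to put a number on "can tell the pixels apart at the expected viewing distance" is pixels per degree (PPD) of visual angle. Here is a minimal sketch under simplifying assumptions (flat screen, viewer centred in front of it, PPD averaged across the width); the function names are made up for illustration, and the often-quoted ~60 PPD for 20/20 acuity (one arcminute per pixel) is a rule of thumb, not a hard limit.

```python
import math

INCH_M = 0.0254  # metres per inch


def screen_width_m(diagonal_in: float, aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Physical width of a flat screen from its diagonal size and aspect ratio."""
    return diagonal_in * INCH_M * aspect_w / math.hypot(aspect_w, aspect_h)


def pixels_per_degree(h_pixels: int, width_m: float, distance_m: float) -> float:
    """Average horizontal pixels per degree of visual angle, for a viewer
    centred in front of the screen at `distance_m`."""
    # Horizontal angle subtended by the whole screen, in degrees.
    fov_deg = math.degrees(2 * math.atan((width_m / 2) / distance_m))
    return h_pixels / fov_deg


if __name__ == "__main__":
    width = screen_width_m(27)  # a 27-inch 16:9 monitor
    for name, h_px in (("1080p", 1920), ("1440p", 2560), ("4K", 3840)):
        print(name, round(pixels_per_degree(h_px, width, 0.6), 1))
```

Under this model a 27-inch 1080p monitor at 60 cm comes out at roughly 36 PPD. Sitting further back or raising the resolution both increase it, which is why the same panel can look pixelated on a desk and fine from a couch.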

I'll be honest. I don't see the logic in a lot of your reasoning. You say that any flicker defeats the purpose of doing anything for motion, and in the same post you mention that people's sensitivity to flicker differs a lot. You dismiss what you don't like, for example when you mention eye strain. From my practical tests and experiments, I can tell you that blur in motion causes eye strain too. Even dizziness and headaches. If you want to track a fast-moving object and you cannot see a clear, undistorted image, that is unnatural for your brain, which will try everything it can to fix the issue. Just like wearing the wrong glasses can quickly give you a headache, although the two situations are not exactly the same and don't stem from the same issues.

You mentioned the resolution.

I don't really agree this is relevant to the discussion we're having here. Also, you say that having a different opinion is bound to invite toxic feedback. I think that's not a problem with the opinion but more to do with your character. You get angry if someone doesn't care about things as much as you do. Flicker, resolution. Just because something angers you doesn't mean it will anger other people. There's also the problem of you rushing to project. For example, my not caring about resolution doesn't mean I dismiss resolution in general to the point of stupidity, which I get the feeling you rushed to imagine.

I don't see why motion clarity would compromise resolution or latency. Motion clarity can go together with these things. Of course only to a point, and here's probably another moment where we'll be talking about different things. For me, 1080p is acceptable. 1440p + MSAAx4 is high resolution. For you, 8K is probably needed, as you probably focus on modern games where MSAA cannot be used, and you probably "flip the table" if you spot a single pixel on the screen (that's hyperbole, please don't get angry about it). For me, a person who played games at 160x120, then advanced to 320x240, and who read gaming magazines whose reviewers commonly called 400x300 "increased resolution" and 640x480 or even 512x384 "high resolution", I couldn't disagree more that pixelation is a deal breaker. Well, OK, in some extreme cases it can be. For example, with the new rendering techniques where the developer uses something like 20% of the pixels for shadows or hair detail, intending to smear it all together later with post-processing and TAA. Such games can look hideous without it: Red Dead Redemption 2 or Assassin's Creed: Origins with temporal and post-process AA disabled look like a 240p game even at native 8K. If there is lots of sub-pixel detail in high-contrast areas, like specular highlights, I can agree that 8K for a TV and 16K or 32K for wide-FOV VR may be very useful. So, since I have hopefully established that I am not ignorant of, or a layman regarding, the benefits of higher resolution, I hold my stance: even disregarding the fact that resolution doesn't need to be sacrificed for motion clarity, I would happily choose a 1080p game over an 8K game if 8K meant the motion clarity was ruined one way or another.

In general, it's complicated to discuss game resolutions nowadays, as 8K may look blurrier than 4K just because it uses a different rendering method or a different post-process pipeline.

If you prepare your game well, from the ground up, on a forward renderer with MSAAx4, then aside from a small portion of the scene (complex transparencies, specular shaders, etc.) you should be able to achieve good resolution and a comfortable level of aliasing (or lack of it) even on a 1080p screen if it's a smaller display, or at native 1440p on a bigger one.

Also, even at 4K, the processing power required could be equal to 1080p or 1440p if the rendering method were switched from the now almost dominant deferred renderers, with their complex shading and lighting effects, to something less "photorealistic". So, if you value resolution so much, you can compromise on many things, not just motion clarity. There's the visual style the dev aims for, the lighting precision, and the latency (as Chief explained, latency doesn't come just from BFI; I mean picking a more heavily buffered pipeline so you can push better graphics at the expense of latency, again: RDR2). I really don't see why defending motion clarity would force lowering the resolution below any acceptable level, especially since a higher framerate with perfect motion clarity tends to help with aliasing. It simply doesn't bother the gamer as much. Unless you've developed an allergy to it and will angrily flip the table as soon as you notice any pixel crawling on screen, of course.
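The pixel arithmetic behind that point, as a rough sketch: it assumes shading cost scales with pixel count, which ignores per-frame costs (geometry, CPU work) that don't scale this way. The resolution table and function names are just for illustration.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name: str) -> int:
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(name: str, base: str = "1080p") -> float:
    """How many times more pixels `name` has than `base`."""
    return pixel_count(name) / pixel_count(base)


def per_pixel_budget(name: str, base: str = "1080p") -> float:
    """Fraction of the base resolution's per-pixel shading cost that `name`
    can afford while keeping the same total shading cost per frame."""
    return 1.0 / pixel_ratio(name, base)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_ratio(name):.2f}x the pixels of 1080p, "
              f"{per_pixel_budget(name):.3f}x the per-pixel budget")
```

So 4K at the cost of 1080p needs shading that is about four times cheaper per pixel, and 2.25 times cheaper to match 1440p. That is the kind of saving the paragraph above attributes to moving away from heavy deferred pipelines.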

As I said, the topic of HDR being painfully bright is only relevant in the scope of activating HDR mode when SDR content is on display.

I strongly disagree. If a sleep-deprived gamer is playing late at night, walking through a dim environment in the virtual world, and there's a sudden change to high brightness (fire, a flashback to a sunny beach scene, etc.), it can be a health hazard. Even non-HDR TVs can be harmful if the gamer plays in a dimmed room and the TV has substantial brightness while not covering the gamer's full FOV. Shining some light behind the TV, "Philips Ambilight style", or having a desk light nearby will surely help, but most people have no idea they should watch out for such things. Just like with hearing, it's hard to notice you're harming yourself, as it usually happens without pain and too slowly for you to realize it quickly. If a gamer uses an HDR TV in harmful settings or a harmful environment, it may take a year to notice the eye damage, but at that point it will be too late. This is a serious issue nobody talks about. You are angry at BFI being pushed all around you, which I disagree with, but you are fine with pushing a technology that is dangerous (for millions of people, even if not for you or any educated and health-aware person) just to boost profits. You'd be surprised how little the average gamer cares about lighting being super realistic, or about losing some of the detail in the clouds. Just like I grew allergic to motion issues. Just like you grew allergic to flicker and visible pixels. The same way artists grow allergic to unrealistic lighting, the same way fans of drawing, photography and cinematography grow allergic to the flaws of SDR, unrealistic lighting and so on. The majority of gamers wouldn't even notice. Most gamers have never heard terms like "display calibration" or "RGB color space coverage". Many gamers are even color blind to some degree. Some people buy 8-bit LCD panels for their displays and don't care about the flaws they bring.
You should really think more broadly than just your own judgement about display-related issues. There are cases where HDR is a great step forward, where it's very useful, even transformative. But there are also cases where it is basically pointless, and definitely nonsensical once you factor in the vastly increased expense and the health hazards. It's important to narrow the discussion to a specific user, a specific use case, a specific environment, etc.

If you want to knock HDR, you could have talked about the worsening lack of proper standardization in the PC space, in sharp contrast to TVs. But this line about brightness does not make any sense. Let me repeat: people have had very little issue with looking at things in the real world, so let's entertain the idea with the sun and glare out of the way. Things on a rainy, lampless day can still go well beyond 400 nits. An HDR monitor in SDR mode can reach 350 nits if you max everything out. A regular display can output 180 to 250 nits at its brightest, depending on how restrictive the firmware is.

Actually, the standardization situation isn't as rosy for TVs as you paint it. There are still serious issues, and that's the opinion of Digital Foundry, not mine. Their opinion of HDR is the opposite of mine; they praise it. And still, only days ago they talked about the annoying lack of standards for gaming on TVs, in relation to the consoles.

You once again bring in the real-life argument. I already explained in my previous post why that argument is invalid: real life covers the whole FOV, is usually not as sudden, etc. I won't repeat myself. I will add one more, albeit small, argument this time: you blink less when you are excited or focused. So there's another difference between sunbathing on a beach and playing a competitive match in an HDR game after two nights of bad sleep, tons of energy drinks, and 2 AM on the clock.

You can dismiss VRR all you wish, but the matter of fact is, just about everything on the computer works on variables. From the GPU having throttling and auto-overclock parameters, to APIs being very complex nowadays and no longer linear, to games each having their own way of doing the same thing, to the CPU being a finite resource that all software needs to manage, with wildly varying results. You can point the finger at "broken" things all you want, but this is simply the unavoidable reality that comes with using any electronic device. Everything has a non-rigid frame rate, and those that appear rigid are using some kind of controlling element, such as an (often very poorly designed) frame rate limiter.

Sure, but variables within the hardware are not the same as variable output. The fact that the GPU or the CPU adjusts its clocks doesn't mean you cannot output a stable framerate. You just won't get 100% of the maximum; you will sadly notice that the fancy techniques in modern hardware are not as useful, as you would have to settle for guaranteed performance instead of spikes up and down. But that doesn't mean you cannot make a great game. If it did, there wouldn't be any locked 60fps games on the Switch, and no perfectly locked 120fps on the PS5 or Xbox Series X. And those exist, although they are very rare. As I mentioned earlier: you cannot just jump onto the newest tech idea and scream "look at this, let's destroy everything that was built before!". Just like you cannot say that motion clarity doesn't matter because new games will use TAA, motion blur, variable rate shading and DLSS, FSR2 or other temporal reconstruction techniques (AI-accelerated or not). Just like you don't say that no Switch game should have anything more than stereo sound because many people play using the handheld's speakers. OK, so the boost clock technique won't be as useful. The CPU's prediction blocks won't be as helpful. But if the PS1 could have locked 60fps, your argument is invalid, I'm sorry.
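A locked frame rate on variable hardware comes from pacing frames against a fixed schedule, not from the hardware being constant. Below is a minimal sketch of such a limiter, assuming the target rate is one the hardware can sustain in its worst case; `run_locked` and its parameters are hypothetical names, and real engines usually pace against the display's refresh rather than a sleep timer, whose accuracy depends on the OS.

```python
import time
from typing import Callable, List


def run_locked(frame_work: Callable[[int], None], fps: float, frames: int,
               clock: Callable[[], float] = time.perf_counter,
               sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Start each frame on a fixed 1/fps schedule and return the start time
    of every frame, in seconds, relative to the first frame."""
    period = 1.0 / fps
    t0 = clock()
    next_start = t0
    starts = []
    for i in range(frames):
        now = clock()
        if now < next_start:
            # Finished early: idle until the scheduled start of this frame.
            sleep(next_start - now)
        elif now - next_start > period:
            # Fell more than a whole frame behind: re-anchor the schedule
            # instead of rushing several frames out back to back.
            next_start = now
        starts.append(clock() - t0)
        frame_work(i)
        # Absolute schedule: sleep inaccuracy does not accumulate as drift.
        next_start += period
    return starts
```

Because the schedule is absolute, a frame that finishes early simply waits. Boost clocks and throttling only change how much headroom is left, which is why the target has to be picked for the worst case.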

In short, to enforce non-variability in frame rate, is to tell people to do things a specific one way. This is where once again the social factor comes in; that idea is pure nonsense and very draconian in nature. Most people cannot be arsed to keep their frame-pacing steady let alone constant, and as much as you want to chastise other people for their incompetence, that is simply the complex reality of the world we are living in. Hell, many indie devs TODAY make PC games under the assumption that you are playing on a phone-like device or the ye olde 800x600. Whine all you want, nothing can be done about this; people are people.

While I agree with the idea behind your stance here, I disagree that it applies to this specific matter as much as you say it does.

Doing something properly instead of badly is not draconian. As a veteran dev once said, "if your game runs at different gameplay/physics speeds depending on the framerate, you're doing it wrong". It's just a matter of basic knowledge that devs should acquire. It's not complicated. I suggest you try digging up some interviews with John Carmack, or the interview with the devs behind the Trials motorcycle game series (Trials HD, Trials Evolution, etc.) where they talk about it. For example, they mention that what becomes a cluster... and a huge problem in the later stages of development can easily be avoided if you start properly. The Trials dev said that you simply need to target a stable desired framerate from the start. Then you can optimize to add more detail, etc. If your game starts at a stable 60fps, framerate is not a difficult problem. But if you make a game that barely hits 30fps, turning it into a stable 60fps will be a huge pain in the ...
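The rule quoted above is usually implemented with a fixed simulation step decoupled from the render rate (the "accumulator" pattern popularized by Glenn Fiedler's "Fix Your Timestep!" article). A minimal sketch, with a hypothetical 120 Hz physics rate and a 1-D object standing in for the game state; the naive function shows the bug the quote warns about.

```python
from typing import Iterable, Tuple

PHYSICS_DT = 1.0 / 120.0  # hypothetical fixed simulation step, in seconds


def naive_position(frame_times: Iterable[float], units_per_frame: float) -> float:
    """The bug: movement per rendered frame, so speed scales with frame rate."""
    return sum(units_per_frame for _ in frame_times)


def fixed_step_position(frame_times: Iterable[float], speed: float) -> Tuple[float, int]:
    """Advance the simulation in fixed PHYSICS_DT steps, however long each
    rendered frame took. Returns (position, number of physics steps)."""
    position = 0.0
    accumulator = 0.0
    steps = 0
    for frame_dt in frame_times:
        accumulator += frame_dt
        # Consume the elapsed time in whole fixed steps; any remainder
        # carries over to the next frame.
        while accumulator >= PHYSICS_DT:
            position += speed * PHYSICS_DT
            accumulator -= PHYSICS_DT
            steps += 1
    return position, steps


if __name__ == "__main__":
    one_second_at_30 = [1 / 30] * 30
    one_second_at_60 = [1 / 60] * 60
    print(naive_position(one_second_at_30, 1.0), naive_position(one_second_at_60, 1.0))
    print(fixed_step_position(one_second_at_30, 10.0), fixed_step_position(one_second_at_60, 10.0))
```

After one simulated second, both fixed-step runs end up at the same place (give or take one physics step of floating-point rounding), while the naive version moves twice as far at 60 fps. A production loop would also interpolate rendering between the last two physics states.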

Similarly with resolution. John Carmack shared many ideas and "best practices" for VR game development, where he covered framerate and aliasing. There was also a superb interview with a code expert from Epic who specializes in analyzing code and helping game programmers learn what happens deep "under the hood" to improve their code's efficiency and stability. Sadly, this one is gone; it was about 7 years ago on a now-dead website. The audio was broken and hard to understand, so I guess not many people cared to save it. Charlie "I lie sometimes" D. from SemiAccurate was the interviewer. If you happen to find it, you'll find it very, very interesting, I assure you.

Anyway, going from zero knowledge on this to expert would only take about 6 hours of listening to the keynotes and reading the wisdom in the interviews. Add a few hours if they're hard to find, or shrink it to about 20 minutes if someone extracts only the important parts. It's not draconian. The fact that so many devs do things wrong nowadays doesn't mean we should accept it. Imagine if locking PC games to 30fps became the norm. Would you say demanding 60fps is draconian and unrealistic because of the social nature of the issue?

More to the point, having a system that accommodates the dynamic nature of refreshes instead of trying to force a fish to climb a tree, carries universally symbiotic benefits. Everything gets a boost, all boiling down to display clarity. Because of the nature of how display latency functions (screen tearing and stuttering) it is a key component of motion clarity. You cannot just say that latency is not important; there is only so much frame rate you can brute force before running into integral limits of some parts of the situation. Tearing and stuttering are both quite disruptive in the ability to properly see actions as they would ideally happen. So VRR is a lot more helpful to the goal of smooth screen motion than you are willing to concede.
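The stutter part of that argument can be illustrated with a simplified model: frames finish at a steady cadence (for example with triple buffering, so rendering never waits on the display); with v-sync each frame appears at the next fixed refresh, while with VRR the display refreshes as soon as a frame is ready, within its supported range. The function names are illustrative, and the model ignores scan-out time and latency.

```python
import math
from typing import List


def vsync_present_ms(render_fps: float, refresh_hz: float, frames: int) -> List[float]:
    """When each frame appears on a fixed-refresh display with v-sync."""
    render_ms = 1000.0 / render_fps
    refresh_ms = 1000.0 / refresh_hz
    # A finished frame waits for the next refresh boundary (the small epsilon
    # keeps a frame that finishes exactly on a boundary from slipping a refresh).
    return [math.ceil(k * render_ms / refresh_ms - 1e-9) * refresh_ms
            for k in range(1, frames + 1)]


def vrr_present_ms(render_fps: float, frames: int) -> List[float]:
    """With VRR (inside the display's range), a frame appears when it is done."""
    render_ms = 1000.0 / render_fps
    return [k * render_ms for k in range(1, frames + 1)]


def on_screen_ms(present_times: List[float]) -> List[float]:
    """How long each frame stays on screen before the next one replaces it."""
    return [round(b - a, 2) for a, b in zip(present_times, present_times[1:])]


if __name__ == "__main__":
    print("v-sync 60 Hz:", on_screen_ms(vsync_present_ms(50, 60, 6)))
    print("VRR:         ", on_screen_ms(vrr_present_ms(50, 6)))
```

At a steady 50 fps on a 60 Hz panel, the v-sync model holds four frames for about 16.7 ms and then one for about 33.3 ms, a repeating judder, whereas the VRR model shows every frame for 20 ms.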

I would reverse what you say and apply "forcing a fish to climb a tree" to VRR tech.

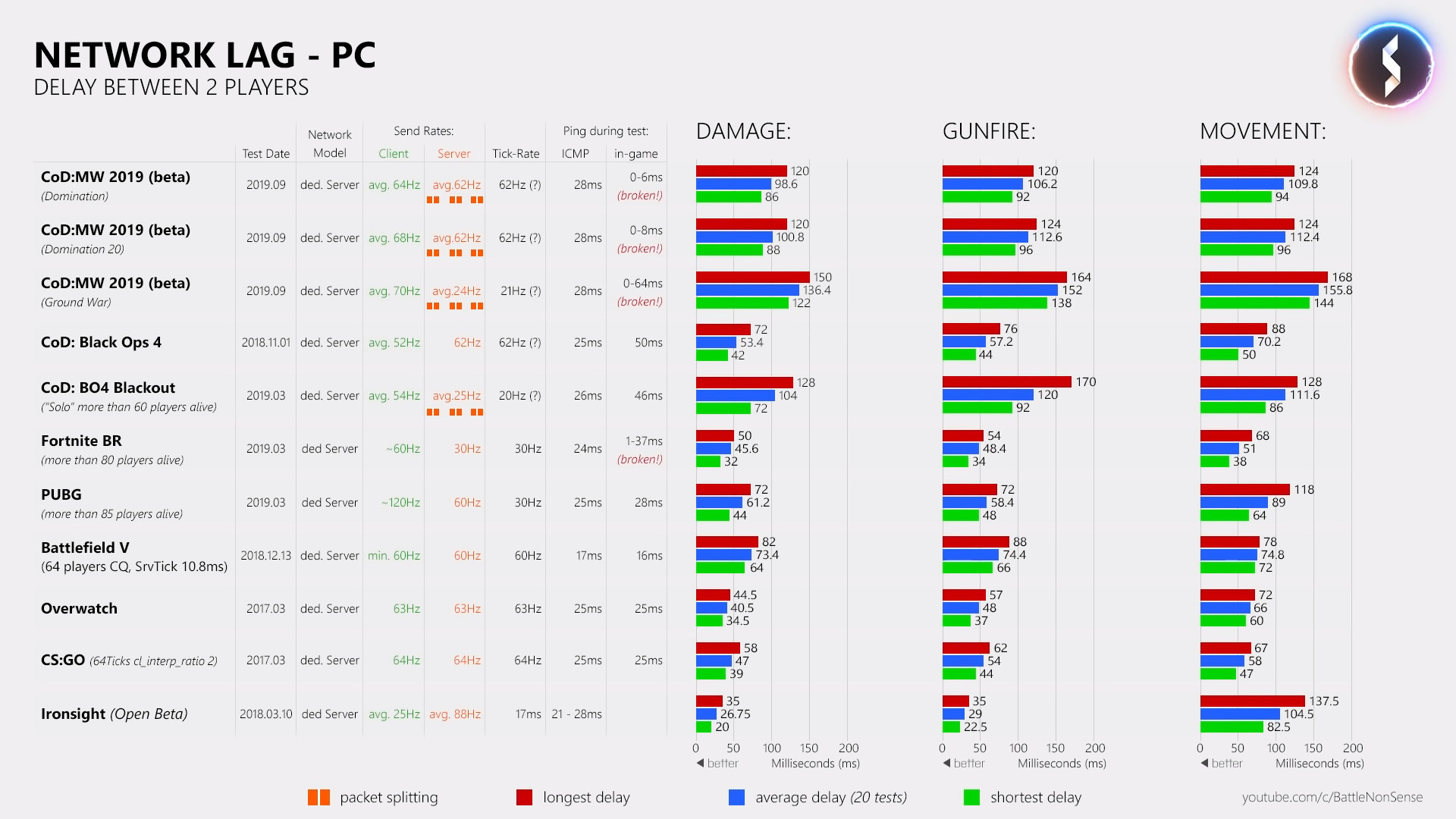

As I mentioned: in the same game genre, on the same platform, you can observe vastly different latency results, both in local motion-to-photon latency and in the also very important player-to-player latency.

I can't quickly find the nice graphs covering the huge differences on PC, broken down by v-sync on, v-sync off, VRR/G-Sync on, and 60, 120 and 240Hz.

I'll paste what I found in a quick Google search:

Console games input lag:

| Input lag (ms) | Game |
| --- | --- |
| 77.7 | Call of Duty: Modern Warfare 2 |
| 97.6 | Battlefield 4 |
| 157.7 | Battlefield 3 |

PS4:

| Input lag (ms) | Game |
| --- | --- |
| 39.3 | Call of Duty: Infinite Warfare |
| 40.3 | Call of Duty: Modern Warfare Remastered |
| 56.1 | Battlefield 1 |
| 63.7 | Battlefield 4 |
| 71.8 | Titanfall 2 |
| 76.8 | Overwatch |
| 86.8 | DOOM |
| 89.8 | Killzone Shadow Fall (multiplayer) |
| 110.0 | Killzone Shadow Fall (single player, FPS lock enabled) |

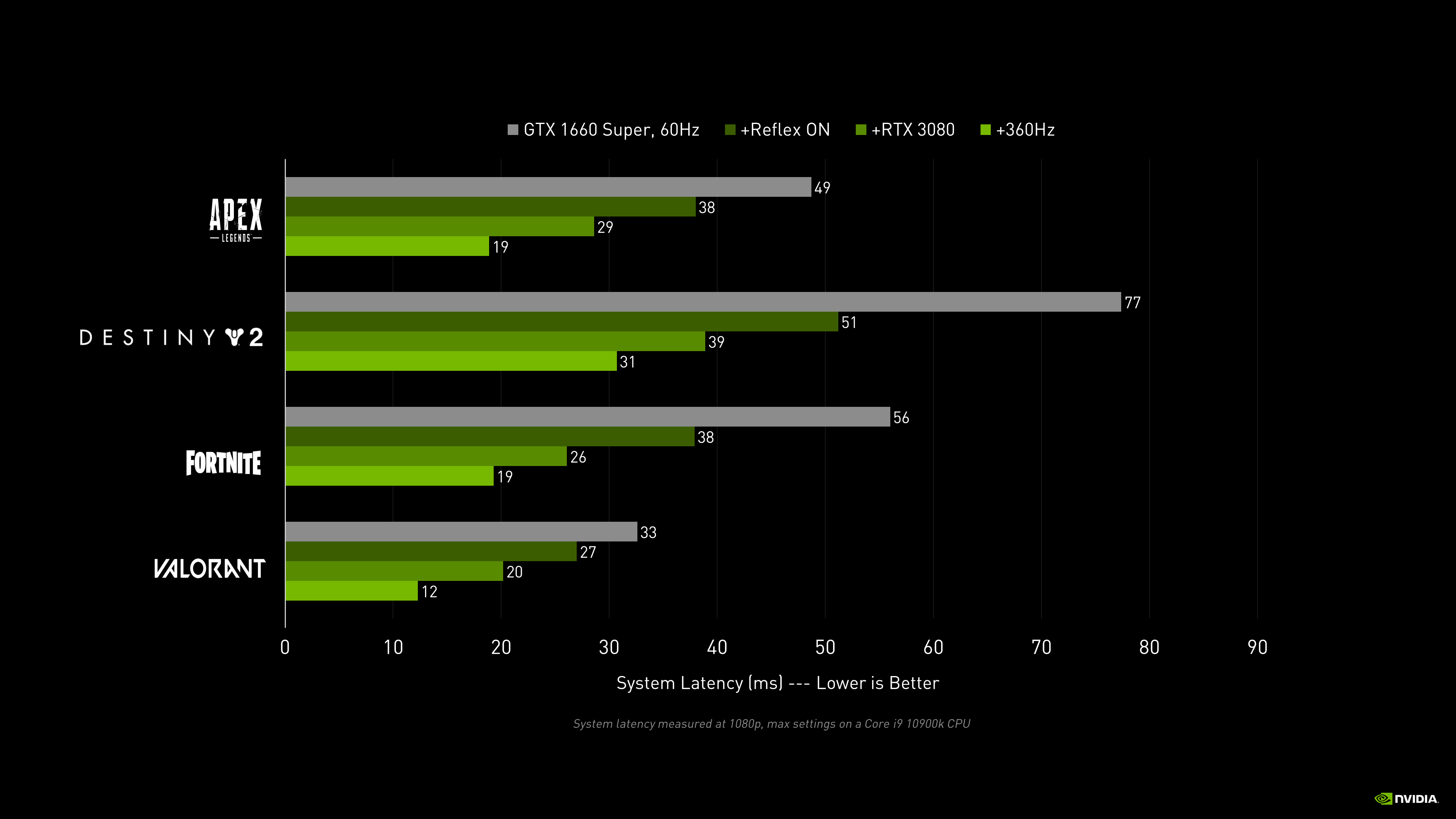

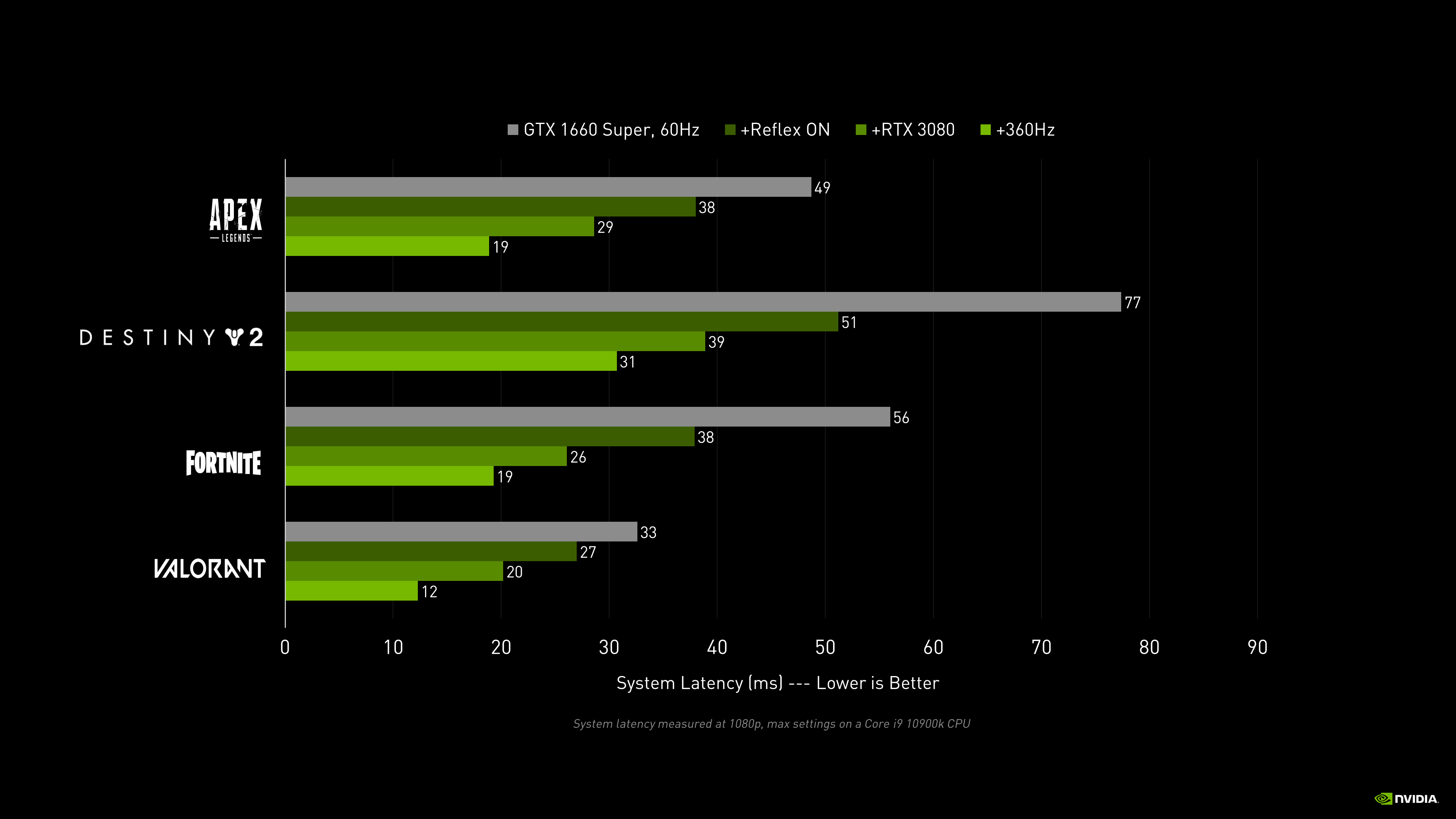

And here is one example of the differences on PC, albeit not a great one:

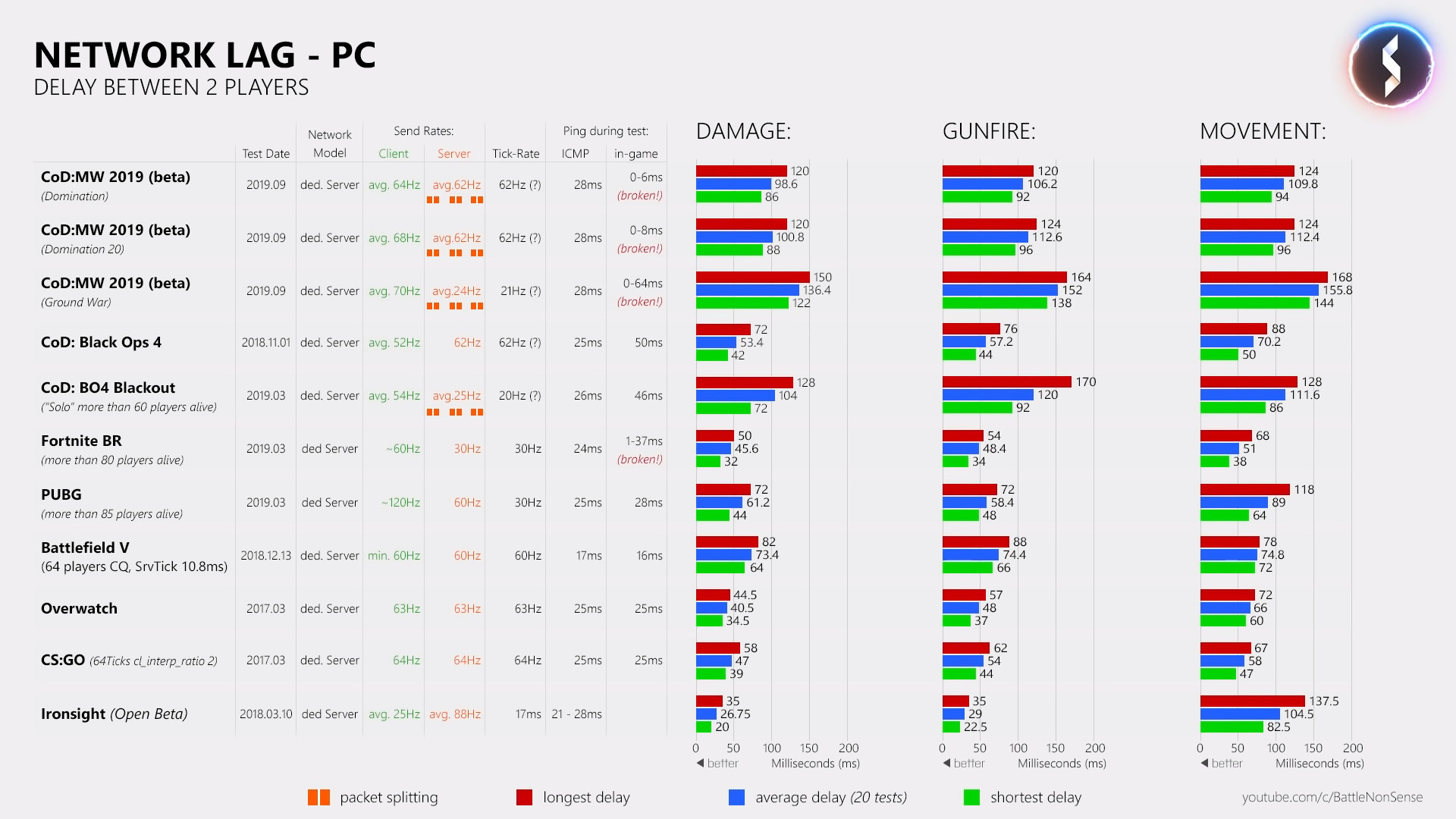

The P2P lag:

As you can see, input lag (latency) is greatly influenced by other factors. Depending on the game design, the OS, API and driver used, and their configurations, these can have a bigger impact than VRR, which is praised everywhere as if it were the only remedy for these issues.

As I said, input latency is horrible in competitive first-person games at 60Hz with v-sync ON.

In many games it's still a problem even with v-sync OFF if the game design is bad and the hardware cannot overcome it by brute force.

At 120Hz, latency with v-sync starts to become user-dependent. Some will find it too bad, some will find it acceptable.

I think we can assume 240Hz is no longer that expensive and is reasonable for both content and display tech.

I think at 240Hz, even v-sync ON can handle latency just fine. And at higher framerates, BFI implementations in VRR modes can be viable. If someone doesn't want the 120fps flicker, they could compromise on latency or accept some tearing. There should simply be a choice offered:

120fps v-synced

120fps v-sync OFF

120-240fps VRR (with BFI)

240fps v-sync OFF

120fps v-synced, interpolated to 240fps, for less latency-sensitive game types.
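The refresh-rate arithmetic behind those options, as a rough sketch: it assumes the v-sync penalty is about one refresh interval per frame waiting in the queue (two queued frames is a hypothetical default here), and it ignores game, driver, OS and panel latency, which the charts above show can matter more.

```python
def frame_time_ms(hz: float) -> float:
    """Duration of one refresh (or frame) at a given rate, in milliseconds."""
    return 1000.0 / hz


def vsync_queue_delay_ms(hz: float, queued_frames: int = 2) -> float:
    """Rough worst-case delay added by v-sync if each frame waiting in the
    queue costs one full refresh interval before it reaches the screen."""
    return queued_frames * frame_time_ms(hz)


if __name__ == "__main__":
    for hz in (60, 120, 240):
        print(f"{hz} Hz: {frame_time_ms(hz):.2f} ms per frame, "
              f"~{vsync_queue_delay_ms(hz):.2f} ms from a 2-frame v-sync queue")
```

In this model, a two-frame v-sync queue at 240 Hz costs about 8.3 ms, the same as a single frame of waiting at 120 Hz and a quarter of the 60 Hz figure, which is the intuition behind 240 Hz making v-sync tolerable.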

Personally, I'd rather play at 60fps with BFI and take more breaks than agree to ruin the experience with blurry motion. If you say one has to choose between flicker or latency on one hand and motion clarity on the other, I want to point out that I, for one, differ from you by a lot. 120fps flicker doesn't bother me. I find it hard to notice even on an all-white screen. I played a lot of games at 85Hz on an LCD, which flickers like a 75Hz CRT, I'd say. And it was fine. I played hundreds of hours in 3D, where the LCD shutter glasses show 60Hz content (60 per eye). And it was fine. Sure, I had to take breaks every now and then. But it was fine.

As for latency: I was OK with v-sync ON at 120fps even during the hundreds of hours I played of a futuristic racing game (500-1000 km/h speeds, so you can guess reaction time matters).

Let's round off our personal points of view a little, shall we?

I am aware my point of view is very subjective. I hope you can realize that as well one day.