So as we all know, films are 24fps, and displaying them at higher rates such as 48Hz or 72Hz either causes a double-image effect (CRT, strobed LCD, impulse displays, etc.) or causes constant pulldown judder (with 24fps@48Hz or 24fps@72Hz, because GtG is not 0).

So 24fps is about 41.7ms, but 48Hz is 20.8ms and 72Hz is 13.9ms.

Would a 20-28ms decrease in persistence be too flickery? Would CRT phosphor fade emulation also help further?

This would at least solve the problem of pulldown judder while also noticeably improving persistence, and at the same time it might still feel cinematic enough because the motion would not be too clear.

I don't know if this would be workable on a large theatre screen, but maybe it could work for home watching at least.

Just tossing an idea out there.

24fps Cinematic Middle Ground: Strobing at 13ms or 20ms at 24fps@24hz


thatoneguy

- Posts: 181

- Joined: 06 Aug 2015, 17:16

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: 24fps Cinematic Middle Ground: Strobing at 13ms or 20ms at 24fps@24hz

> thatoneguy wrote: ↑18 Oct 2022, 03:39
>
> So as we all know, films are 24fps, and displaying them at higher rates such as 48Hz or 72Hz either causes a double-image effect (CRT, strobed LCD, impulse displays, etc.) or causes constant pulldown judder (with 24fps@48Hz or 24fps@72Hz, because GtG is not 0).
>
> So 24fps is about 41.7ms, but 48Hz is 20.8ms and 72Hz is 13.9ms.

That assumes framerate = Hz.

However, you need to consider the frame as the persistence, not the refresh cycle. So 24fps at 72Hz is still 1/24sec persistence if sample-and-hold.
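To make this concrete, here is a minimal Python sketch of an idealized sample-and-hold display. It assumes zero GtG and that each refresh cycle shows the newest film frame whose timestamp has arrived; the function name `frame_visibility_ms` is purely illustrative. It counts how long each 24fps frame stays on screen: at 48Hz or 72Hz every frame gets the same number of refreshes, so each one is still visible for 1/24sec, while at 60Hz frames alternate between 3 and 2 refreshes, which is the uneven 3:2 cadence behind classic pulldown judder.

```python
def frame_visibility_ms(fps: int, hz: int, n_frames: int = 6) -> list[float]:
    """How long (ms) each source frame stays on an idealized sample-and-hold screen.

    Assumes zero GtG and that every refresh cycle shows the newest frame whose
    timestamp (frame_index / fps) has already arrived.
    """
    refreshes_per_frame = [0] * n_frames
    refresh = 0
    while True:
        # Refresh `refresh` starts at refresh / hz seconds; integer floor division
        # gives the newest frame available at that instant without rounding error.
        frame = refresh * fps // hz
        if frame >= n_frames:
            break
        refreshes_per_frame[frame] += 1
        refresh += 1
    # Each refresh cycle lasts 1000 / hz milliseconds.
    return [n * 1000 / hz for n in refreshes_per_frame]


print([round(ms, 2) for ms in frame_visibility_ms(24, 72)])
# -> [41.67, 41.67, 41.67, 41.67, 41.67, 41.67]
print([round(ms, 2) for ms in frame_visibility_ms(24, 60)])
# -> [50.0, 33.33, 50.0, 33.33, 50.0, 33.33]
```

Integer arithmetic is used for the frame lookup so that refresh boundaries that coincide exactly with frame boundaries are not misassigned by floating-point error.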

Now, you can multi-strobe each film frame, but that always gives more persistence than lowering the refresh rate to match the frame rate and strobing once per frame. See below.

> thatoneguy wrote: ↑18 Oct 2022, 03:39
>
> Would a 20-28ms decrease in persistence be too flickery? Would CRT phosphor fade emulation also help further?
>
> This would at least solve the problem of pulldown judder while also noticeably improving persistence, and at the same time it might still feel cinematic enough because the motion would not be too clear.
>
> I don't know if this would be workable on a large theatre screen, but maybe it could work for home watching at least.
>
> Just tossing an idea out there.

These aren't good ideas. 24Hz strobing is never used for film, even with decay.

That's why 35mm projectors use a 48Hz double strobe. Many used a 180-degree shutter, which reduced persistence to (100% + 50%) / 200% = 3/4 of the motion blur of sample-and-hold 24fps at 24Hz. It does add a double-image effect during fast pans, although some old-school videophiles like that 'effect'.

For the XG2431, my recommendation to film-watchers who want to simulate the projector is to use 72 Hz strobing at large pulse widths (even a pulse width as large as 40%, recalibrated with Strobe Utility using a QFT 72 Hz mode on the XG2431), for maximum brightness and less flicker. You will get 200 nits with a feel that is roughly analogous to a 35mm film projector. It slightly reduces display persistence, to (200% + 40%) / 300% = 4/5 of the original blur, measured from the leading edge of the first image to the trailing edge of the third image (duplicate strobes).
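Both percentages follow the same pattern: with N flashes per film frame, each lit for a fraction p of its refresh cycle, the blur span runs from the leading edge of the first flash to the trailing edge of the last, which is (N - 1 + p) / N of the frame time. A small Python sketch of that arithmetic (the function names are illustrative, and it ignores GtG and backlight/phosphor decay):

```python
from fractions import Fraction


def multistrobe_blur_fraction(flashes_per_frame: int, pulse_width: Fraction) -> Fraction:
    """Blur span of a multi-strobed frame as a fraction of sample-and-hold blur.

    The span runs from the leading edge of the first flash to the trailing edge
    of the last duplicate flash; pulse_width is the lit fraction of each refresh.
    Ignores GtG and backlight/phosphor decay.
    """
    return (flashes_per_frame - 1 + pulse_width) / flashes_per_frame


def blur_span_ms(fps: int, flashes_per_frame: int, pulse_width: Fraction) -> float:
    """The same span in milliseconds for a given film frame rate."""
    return float(multistrobe_blur_fraction(flashes_per_frame, pulse_width) * Fraction(1000, fps))


print(multistrobe_blur_fraction(2, Fraction(1, 2)))  # 48Hz, 180-degree shutter -> 3/4
print(multistrobe_blur_fraction(3, Fraction(2, 5)))  # 72Hz, 40% pulse width -> 4/5
print(blur_span_ms(24, 2, Fraction(1, 2)))           # 31.25
```

Exact fractions are used so the results match the 3/4 and 4/5 figures above without rounding noise.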

It actually looks really good if you don't mind the lower brightness! The color quality is almost identical to non-strobed at Brightness ~50%. It almost looks like a film projector, except it's a triple strobe.

Some people tested the ULMB hack at 24Hz and it worked, but it is too painful to watch, like 1920s films where the screen flickered at 18Hz for 18fps.

Film projectors permanently stopped single-strobing low frame rates a century ago, thanks to the invention of the film-projector double strobe or triple strobe: a mechanical spinning wheel with holes in it. The film advances only after 2 or 3 flashes of the same film frame. Flicker is reduced by keeping the "frame visibility time" constant and the "frame blank-out time" constant too. So they have been double strobing for over a century! It's fun that we had analog strobing a century ago. Did you know this is why movies were called "flicks"? That's where the word came from.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!


thatoneguy

- Posts: 181

- Joined: 06 Aug 2015, 17:16

Re: 24fps Cinematic Middle Ground: Strobing at 13ms or 20ms at 24fps@24hz

> Chief Blur Buster wrote: ↑18 Oct 2022, 19:37
>
> These aren't good ideas. 24Hz strobing is never used for film, even with decay.

Well, technically they used to do it back in the early days of 24fps cinema projection, when they didn't use shutters yet.

But yes, what I'm suggesting is basically 24Hz strobing with a duty cycle of 1/2 (lit for 20.8ms / dark for 20.8ms) or 1/3 (lit for 13.9ms / dark for 27.8ms).
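The lit and dark times for these proposals can be checked with a small Python sketch, assuming one flash per frame with instant on/off (`single_strobe_timing_ms` is a made-up helper name). Here `duty` is the lit fraction of each 1/24sec frame, so the 20.8/20.8ms case is duty 1/2 and the roughly 13.9/27.8ms case is duty 1/3. With a single flash per frame, persistence equals the lit time, so the dark time is exactly the 20-28ms persistence reduction asked about in the first post.

```python
def single_strobe_timing_ms(fps: float, duty: float) -> tuple[float, float]:
    """Return (lit_ms, dark_ms) for one flash per frame, refresh rate == frame rate."""
    frame_ms = 1000.0 / fps           # 41.67 ms at 24fps
    lit_ms = frame_ms * duty          # with one flash per frame, this is the persistence
    return lit_ms, frame_ms - lit_ms  # dark time == persistence saved vs sample-and-hold


for duty in (1 / 2, 1 / 3):
    lit, dark = single_strobe_timing_ms(24, duty)
    print(f"duty {duty:.2f}: lit {lit:.1f} ms, dark {dark:.1f} ms")
# duty 0.50: lit 20.8 ms, dark 20.8 ms
# duty 0.33: lit 13.9 ms, dark 27.8 ms
```

Note that a duty of 1/2 lights the frame for exactly one 48Hz refresh period and 1/3 for one 72Hz period, which is where the 20ms and 13ms figures come from.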

Maybe this would work at least for traditional SDR (80-100 nits) video.

It would provide a decent bump in motion clarity while also eliminating pulldown judder, since no frames are repeated.

I'd like to see an experiment with this, on an OLED screen preferably.

I know there were people who used to do 24fps@24Hz on a CRT monitor via the SVP program, but that's too flickery on a CRT monitor because of its 1-2ms phosphor.

Now, 20ms of dark time still sounds very flickery on paper, but maybe it wouldn't be in practice.

Alternatively, since with strobing we don't have to follow exact timings like with BFI, we could theoretically use any duty cycle we want, so we could do something like ON = 29ms / OFF = 12.7ms (I base this on the fact that a 4ms strobe at 60Hz, which leaves about 12.7ms of dark time out of 16.67ms, isn't that flickery).
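The matched-dark-time idea can be written out as a small Python sketch. It assumes, as the post does, that perceived flicker is governed mainly by the length of the dark gap; that is a hypothesis being floated here, not an established rule. The helper name is hypothetical.

```python
def lit_ms_for_matched_dark(target_fps: float, ref_hz: float, ref_pulse_ms: float) -> float:
    """Lit time per frame at target_fps that keeps the dark gap of a reference
    single strobe (ref_pulse_ms lit per refresh at ref_hz). Hypothetical helper."""
    dark_ms = 1000.0 / ref_hz - ref_pulse_ms  # dark gap of the reference strobe
    return 1000.0 / target_fps - dark_ms      # whatever remains of the frame is lit


dark = 1000.0 / 60 - 4
lit = lit_ms_for_matched_dark(24, 60, 4)
print(f"OFF {dark:.2f} ms, ON {lit:.2f} ms")  # OFF 12.67 ms, ON 29.00 ms
```

Under this assumption the 60Hz / 4ms reference translates to about 29ms lit per 24fps frame, a persistence reduction of only about 30% compared with sample-and-hold.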

I just don't see why 24Hz has to be such a taboo, and why we have to put up with GtG/pulldown judder that will probably never be perfect instead of experimenting with methods like this. Depending on the technology used (blurry LCD or fast OLED), this could help even more.

Mind you, my goal with this method is less about reducing blur and more about eliminating pulldown judder, since manufacturers don't typically allow native 24Hz sample-and-hold.

Well, anyways, those were my $0.02.

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: 24fps Cinematic Middle Ground: Strobing at 13ms or 20ms at 24fps@24hz

> thatoneguy wrote: ↑19 Oct 2022, 02:41
>
> > Chief Blur Buster wrote: ↑18 Oct 2022, 19:37
> >
> > These aren't good ideas. 24Hz strobing is never used for film, even with decay.
>
> Well, technically they used to do it back in the early days of 24fps cinema projection, when they didn't use shutters yet.
>
> But yes, what I'm suggesting is basically 24Hz strobing with a duty cycle of 1/2 (lit for 20.8ms / dark for 20.8ms) or 1/3 (lit for 13.9ms / dark for 27.8ms).
>
> Maybe this would work at least for traditional SDR (80-100 nits) video.
>
> It would provide a decent bump in motion clarity while also eliminating pulldown judder, since no frames are repeated.
>
> I'd like to see an experiment with this, on an OLED screen preferably.
>
> I know there were people who used to do 24fps@24Hz on a CRT monitor via the SVP program, but that's too flickery on a CRT monitor because of its 1-2ms phosphor.
>
> Now, 20ms of dark time still sounds very flickery on paper, but maybe it wouldn't be in practice.
>
> Alternatively, since with strobing we don't have to follow exact timings like with BFI, we could theoretically use any duty cycle we want, so we could do something like ON = 29ms / OFF = 12.7ms (I base this on the fact that a 4ms strobe at 60Hz, which leaves about 12.7ms of dark time out of 16.67ms, isn't that flickery).
>
> I just don't see why 24Hz has to be such a taboo, and why we have to put up with GtG/pulldown judder that will probably never be perfect instead of experimenting with methods like this. Depending on the technology used (blurry LCD or fast OLED), this could help even more.

Some people here did 24fps BFI tests, with software-based BFI at 72Hz and with the ULMB hack. It's quite

> thatoneguy wrote: ↑19 Oct 2022, 02:41
>
> Mind you, my goal with this method is less about reducing blur and more about eliminating pulldown judder, since manufacturers don't typically allow native 24Hz sample-and-hold.

That's only true for video sources.

On the display side, fixed-Hz 24Hz support in LCDs is extremely widespread, did you know?

More than 95% of widescreen computer monitors and televisions support 24Hz today, even most generic brands. As a rule of thumb, if the display either (A) accepts an HDTV broadcast, or (B) supports multiple refresh rates, then it almost always supports 24Hz (semi-undocumented, often unknown to owners) because of a USA broadcasting standard!

Most widescreen LCD displays (including the computer monitor you're using now) already support 24Hz.

This is because of a USA TV broadcasting standard called ATSC HDTV, which supported 24fps. So all TVs had to support 24Hz, even in an undocumented way. That filtered down to almost all widescreen LCDs, including 360Hz LCDs, which can do 24Hz just as well as a high-end 2008 LCD HDTV does.

Or your cheap $99 DELL/HP LCD: 24Hz works fine on those too. A few manufacturers messed it up and added pulldown, but most do 24Hz fine and smoothly now. My old Samsung 245BW TN from 2006, one of the first 2ms TN LCDs in the world, also supported 24Hz. (It even supported undocumented overclocking to 72Hz if I used 4:2:2 on the video signal.) So both 24Hz (standard) and 72Hz (undocumented) worked! No 24Hz pulldown, even on a 15-year-old LCD.

Many repeated the refresh cycles (like a fixed-Hz, monitor-side LFC invented in the pre-VRR days), running at 48Hz or 72Hz while the monitor simply framebuffered the 24Hz DVI, HDMI or DisplayPort signal for repeat-refreshing. The panel needs a framebuffer anyway (because overdrive processing requires one), so repeat-refreshing a panel is an easy firmware modification, as long as the scanout is fast enough to be repeated before the next 24Hz refresh cycle. And stock parts had 24Hz out of necessity because of the USA ATSC standardization mandating 24Hz support in the late 1990s.
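As a rough illustration of the repeat-refresh idea, here is a hypothetical Python sketch (not any vendor's actual firmware logic; the panel range parameters are made up) that lists the internal refresh rates a display could use for a 24Hz input: integer multiples of the input rate that the panel can scan out. Each extra refresh just re-scans the same framebuffer contents, so on sample-and-hold every option in the list still shows each frame for 1/24sec.

```python
def repeat_refresh_options(input_hz: int, panel_min_hz: int, panel_max_hz: int) -> list[int]:
    """Internal refresh rates that are integer multiples of input_hz within the
    panel's supported range (hypothetical parameters, illustration only).

    At input_hz * k, each incoming frame is scanned out k times from the
    framebuffer; the scanout must finish within 1 / (input_hz * k) seconds,
    which panel_max_hz stands in for here.
    """
    return [
        input_hz * k
        for k in range(1, panel_max_hz // input_hz + 1)
        if panel_min_hz <= input_hz * k <= panel_max_hz
    ]


print(repeat_refresh_options(24, 48, 144))  # [48, 72, 96, 120, 144]
print(repeat_refresh_options(24, 48, 75))   # [48, 72]
```

Restricting the choice to integer multiples is what keeps the cadence even; a non-integer ratio such as 24Hz into 60Hz would force an uneven repeat pattern.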

Almost all 4K TVs on the market do de facto native 24Hz sample-and-hold now, and high-end 1080p HDTVs have supported 24Hz for the last 15 years. You needed a Blu-Ray player to get 24Hz movies easily, and Netflix used to not support 24Hz (it does now). The surprising thing is that even a 10-year-old BenQ XL2720Z supports native 24Hz (works great), and my 2010 Sony LCD supports 24Hz.

Fixed-Hz 24Hz sample-and-hold support is common in panels now, even if it's poorly advertised. Heck, even the ViewSonic XG2431 supports 24Hz (try it!). Not all gaming monitors support 24Hz, but surprisingly, most do. It's funny to underdrive a 360Hz monitor at 24Hz.

They might do it internally via 48Hz/72Hz/LFC, but it's still indistinguishable from 1/24sec of unchanged pixels. I've confirmed this: even cheap TCL, Vizio, and Hisense TVs handle 24Hz sample-and-hold perfectly fine. On sample-and-hold, repeat refreshes are essentially no-operations (visually), so if the LCD TV uses 48Hz or 72Hz to repeat-refresh internally from a 24Hz HDMI signal, it still has the native 24Hz look.

Early HDTVs added pulldown to 24Hz (e.g. fixed-frequency 60Hz CRT HDTVs), but multisync displays and multisync LCDs have no problems with 24Hz.

24Hz is common because in the late 1990s, ATSC HDTV 1.0 (the USA broadcasting standard) specified 24Hz as one of its 18 standard modes (resolution + refresh rate combos), and now it's supported by all HDTVs (sometimes by accident, not even listed in the manual), even if it is often an unused feature. But it's part of your TV now! In fact, when I connect my PC to my TV over HDMI, "24Hz" shows up in NVIDIA Control Panel. My mouse cursor is super-choppy: only 24 refresh cycles per second are being transmitted over my HDMI cable, and the TV is refreshing scanouts at perfect 1/24sec intervals. Confirmed by high-speed camera.

Sample-and-hold 24Hz support has been common in television LCDs for 15 years, but it is uncommon in streaming devices. You can still get 24Hz with streaming, though.

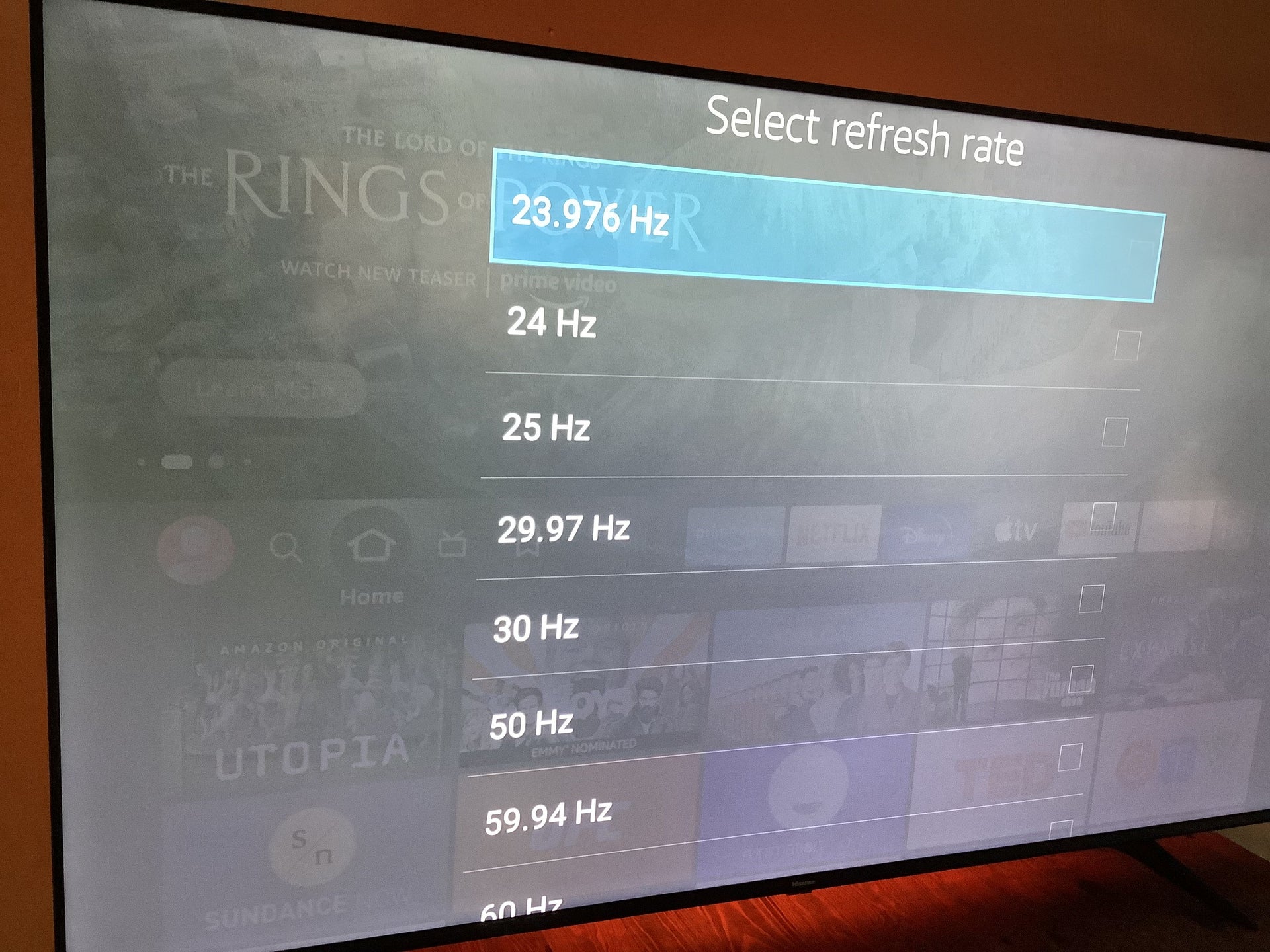

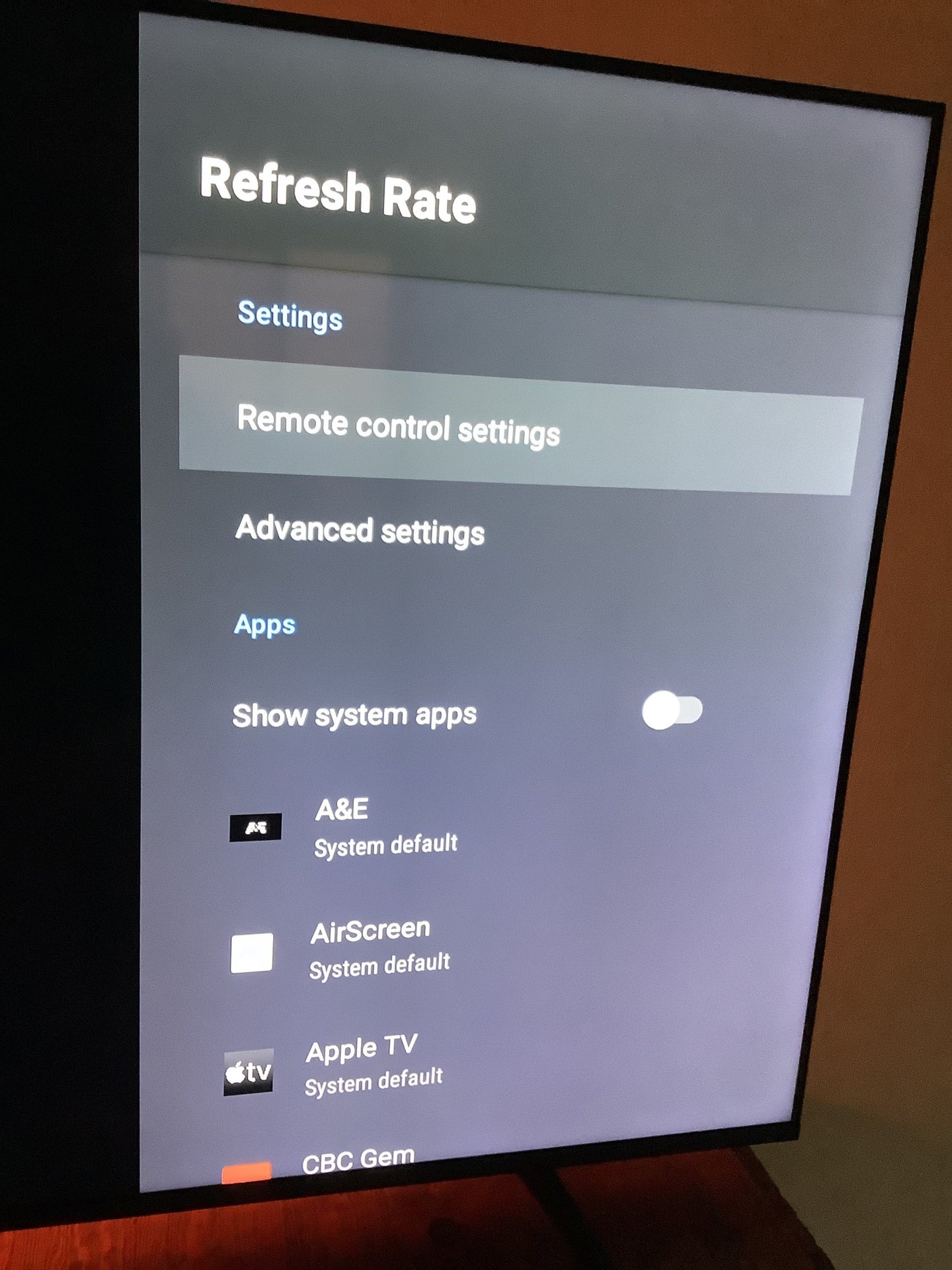

You do need a device that supports native 24Hz, such as the Apple TV. Lots of streaming devices don't support 24Hz for streaming, but I hacked my Fire TV to support 24Hz with a sideload. Here's a photo from my smartphone:

On the brand-new Fire TV 4K Max, Prime and Netflix automatically switch my TV to 24Hz during movies (they now use the new API to switch refresh rates dynamically).

Disney+ can't do it automatically, but I can force Disney+ to do it since I sideloaded the NVIDIA Shield refresh rate tool onto my Amazon Fire TV, and it works fine on any 4K TV on the market. Now Disney+, Prime and Netflix all run at perfect 24Hz for me.

(Notwithstanding 23.976Hz vs 24.000Hz, a big rabbit hole I'll leave out of this post.)

My FireTV 24Hz-Everything HOWTO was posted on AVSFORUM:

HACK: 23.976 Hz Disney+ Works on FireTV 4K

There's a standard Android API for refresh rate mode changes, and it works on most Android devices capable of refresh rate changes (including Fire TV, based on AOSP), and the streaming apps play much smoother with it.

The problem is that no single streaming box natively supports automatic 24Hz for ALL streaming services, but at least I can HACK my Fire TV to let me manually switch to 24Hz in ANY streaming app that doesn't support automatic refresh rate switching. (The latest versions of the Netflix and Prime apps for the Fire TV 4K Max do support it, and I love the automatic 60Hz vs 30Hz vs 24Hz switching depending on what I stream.) It's very user-friendly when the newest version of a streaming app on the newest streaming device supports native 24Hz.

Anyway I can 100% confirm ZERO pulldown judder on ALL my streaming apps.

The 4K Max has enough performance to framepace perfectly (very old Fire TVs don't framepace as well).

Name it: Disney+, Prime, Netflix, Paramount+, all 100% judder-free. On my system there's no such thing as 3:2 pulldown (unless it's a 60fps video file with embedded 3:2 pulldown; then I need an external de-judderer and deinterlacer).
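For the interlaced (60i) case, the core of a 3:2 de-judderer can be sketched in Python as a toy: apply 2:3 pulldown to labeled film frames, then undo it by dropping each repeated field (the one identical to the field two positions earlier) and weaving the remaining pairs back into frames. This is only an illustration of the general idea, not the author's de-interlacer algorithm; real inverse telecine has to detect the cadence from noisy field-difference measurements, since actual fields carry no frame labels.

```python
Field = tuple[str, str]  # (source frame label, "top" or "bottom")


def telecine_32(frames: list[str]) -> list[Field]:
    """Toy 2:3 pulldown: 24p frames -> 60i fields with alternating parity.

    Frames alternately contribute 2 and 3 fields, so every 4 film frames
    become 10 fields (5 interlaced video frames).
    """
    fields: list[Field] = []
    for i, frame in enumerate(frames):
        for _ in range(2 if i % 2 == 0 else 3):
            parity = "top" if len(fields) % 2 == 0 else "bottom"
            fields.append((frame, parity))
    return fields


def inverse_telecine(fields: list[Field]) -> list[str]:
    """Undo the toy pulldown: drop repeated fields, then weave field pairs."""
    # In a 3:2 cadence, the repeated field is identical to the field two positions earlier.
    kept = [f for n, f in enumerate(fields) if n < 2 or f != fields[n - 2]]
    frames: list[str] = []
    for first, second in zip(kept[0::2], kept[1::2]):
        if first[0] != second[0]:
            raise ValueError("cadence lost: paired fields come from different frames")
        frames.append(first[0])
    return frames


fields = telecine_32(list("ABCD"))
print(len(fields))               # 10 fields for 4 film frames
print(inverse_telecine(fields))  # ['A', 'B', 'C', 'D']
```

The recovered progressive frames would then be presented every 1/24sec (or repeated evenly at 48Hz/72Hz), which is the framepacing step that actually removes the judder.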

There is no motion blur reduction with sample-and-hold 24Hz.

But I have zero pulldown judder with all my streaming apps!

Fundamentally, the streaming app doesn't need to know about the display's refresh rate. The H.264 format supports 24fps, so as long as the operating system (Android, iOS, Windows, Mac) framepaces every 1/24sec, it looks correctly pulldown-free, even if you force a Disney+ device without 24Hz support to play at 24Hz. It's just simple framepacing. The streaming app does NOT need to support 24Hz for 24Hz to look perfect, because 24fps support is baked into the video format (e.g. H.264 or HEVC). So you just need to force the device's operating system to switch to 24Hz (like via hacks) and voila!

Faster CPUs framepace better, so always buy a high-performance streaming device if you want stutter-free 24Hz: you don't want low performance adding frametime jitter that causes erratic round-off of 24fps frames to the wrong 24Hz refresh cycle.
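To see why framepacing precision matters, here is a toy Python model; all numbers are illustrative assumptions, not measurements of any streaming device, and the function name is hypothetical. Each 24fps frame is handed to the display a fixed lead time before its 24Hz refresh, plus uniform random timing jitter, and it appears on the first refresh at or after submission. Jitter smaller than the lead time never crosses a refresh boundary; larger jitter occasionally pushes a frame onto the next refresh, which repeats the previous frame and shows up as a stutter.

```python
import math
import random


def wrong_refresh_count(n_frames: int, fps: float, hz: float,
                        lead_ms: float, jitter_ms: float, seed: int = 0) -> int:
    """Count frames that land on a different refresh cycle than intended (toy model).

    Frame i is meant for the refresh nearest to i / fps seconds. The player
    submits it lead_ms early, plus uniform jitter in [-jitter_ms, +jitter_ms],
    and the frame appears on the first refresh at or after submission.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    refresh_ms = 1000.0 / hz
    misses = 0
    for i in range(n_frames):
        target_ms = i * 1000.0 / fps
        submit_ms = target_ms - lead_ms + rng.uniform(-jitter_ms, jitter_ms)
        shown_on = math.ceil(submit_ms / refresh_ms)  # first refresh at/after submission
        intended = round(target_ms / refresh_ms)      # refresh the frame was meant for
        if shown_on != intended:
            misses += 1
    return misses


# 24fps at 24Hz with an assumed 10 ms safety margin:
print(wrong_refresh_count(2400, 24, 24, lead_ms=10, jitter_ms=5))   # -> 0
print(wrong_refresh_count(2400, 24, 24, lead_ms=10, jitter_ms=20))  # some frames miss
```

In this model the only thing that matters is whether worst-case jitter exceeds the safety margin, which is why a faster device with tighter frame timing helps even though 24fps decoding itself is not demanding.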

So take your hands off your 10-year-old $15 bargain-bin streaming wheezer, put it straight into the trash, pony up 75-200 greenbacks for a hackable 4K Ultra Pro Deluxe Super 2000 Streaming Box, and hack that one instead. The faster CPU/GPU will framepace better, creating a better "any-app" 24p experience via 24Hz hacking. That means paying for a high-performance NVIDIA Shield or a high-performance Fire TV to get perfect 24p, because you're relying on brute framepacing precision to force a streaming app without 24Hz support to play 24p perfectly.

Sure, you can rely on native 24p support built into the device and app (like Apple TV's automatic 24Hz support; I think it was a world's first when it came out, and only certain streaming apps work with it). But you need a refresh rate app to force all streaming apps to 24Hz, and sometimes getting that app onto the streaming box requires hacking/jailbreaking (e.g. Fire TV).

So you see, it's the device that's the 24Hz problem, not the apps or the displays. H.264 already carries movies at their native 24fps, so all that's needed is OS support for 24Hz mode switching, and you can call it a day. You're simply BYORR (Bring Your Own Refresh Rate), the only missing piece of the pulldown-free 24Hz sample-and-hold ecosystem that has already been widespread for 15 years.

_______________

BTW, in 2000-2004 I used to work for scaler companies like Runco, Key Digital, TAW and HOLO3DGRAPH (which used the Faroudja Fli 2200), and I am the author of the world's first open-source 3:2 pulldown de-interlacer algorithm (Internet Archive).

John Adcock converted my pseudocode into C++ code and used it to framepace the resulting 60i->24p frames every 1/24sec, which made everything (even cable boxes and VHS) play smoothly through a Hauppauge TV card to a 72Hz display! I wrote that 22 years ago, in 2000.

In 2000, I was already playing VHS movies in progressive-scan 24p with zero pulldown and zero interlace artifacts via the open-source dScaler app, which simultaneously deinterlaced and de-juddered and was compatible with any refresh rate I could set my CRTs to, such as 72Hz. I ran it on my HTPC, feeding my NEC XG135 CRT projector, thanks to my work. Although DVD was starting to boom, I still had lots of VHS tapes whose DVD editions hadn't come out yet, and it made them bearably watchable, with smooth cinematic pans.

Here's a photo of my NEC XG135 CRT projector installation (summer 2000) when I was literally just barely an adult -- this was the previous century. If I recall right, I purchased this projector in late 1999!

Man, I look so young, at less than half my current age. How time flies.

(Unrelated: I remember the old days around 2001 when I was playing Star Wars Episode I pod racer (600mph, 4 feet above ground) in true 3D with shutter glasses via the ASUS V7700 Deluxe GeForce 2 GTS Kit (old year 2000 review). Stereoscopic 3D worked fine on the CRT projector! I recall it was only 60fps (30fps/30fps per eye) in true stereoscopic 3D, more than two decades ago, with one of the early GPUs.)

HTPCs could easily do 24p, though I needed to use 48Hz or 72Hz since the CRT didn't support 24Hz. I was the first moderator of AVSFORUM's Home Theater Computers forum, and I've been a forum member there since 1999.

All of these early temporal skills in deinterlacing and pulldown got me started on the display-temporal skills that led to Blur Busters today. Of course, there's also the raster interrupt knowledge I had from the 1980s (I started programming 6502 raster interrupts on a Commodore 64 at age 12), which contributes handsomely to my display-temporal knowledge too.
