Great question worthy of being moved to the Area 51 Laboratory!

And in fact, one of our forum members tried something similar out... So here's my reply.

Based on what I've read of your posts in other forums, you seem to already know most of this stuff, so I'm writing this post for multiple readers. Forgive me if I write researcher-vetted walls of text here...

Changing LFC-trigger thresholds can be done on an AMD card with ToastyX CRU FreeSync-range edits, so I can create 200Hz LFC on a 500Hz FreeSync panel!
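As a rough illustration of what raising the LFC threshold does, here is a minimal Python sketch. It assumes a simplified model in which each frame is repeated the fewest whole times needed to push the refresh rate up to the LFC threshold without exceeding the panel's maximum Hz. This is an assumed model, not AMD's or NVIDIA's actual driver logic, and `lfc_repeat_count` is a hypothetical helper.

```python
import math


def lfc_repeat_count(fps: float, lfc_threshold_hz: float, max_hz: float) -> int:
    """How many refresh cycles each frame occupies (1 means LFC is not active).

    Simplified model (assumption, not actual driver code): below the LFC
    threshold, repeat each frame the fewest whole times needed to reach the
    threshold, without exceeding the panel's maximum refresh rate.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= lfc_threshold_hz:
        return 1  # at or above the threshold: one refresh per frame
    repeats = math.ceil(lfc_threshold_hz / fps)
    if fps * repeats > max_hz:
        # Threshold unreachable without overshooting max Hz: repeat as much as fits.
        repeats = max(1, math.floor(max_hz / fps))
    return repeats


# 500Hz panel whose LFC threshold has been raised to 200Hz
for fps in (300, 150, 90, 40):
    n = lfc_repeat_count(fps, lfc_threshold_hz=200, max_hz=500)
    print(f"{fps}fps -> each frame shown {n}x -> {fps * n}Hz")
```

Under this model, 150fps becomes 300Hz with every frame shown twice, which is exactly the repeat-refresh behavior whose side effects are discussed below.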

The TL;DR Version

Display-Side VRR-LFC "Interpolation" As Attempted Motion Blur Reduction

This could work, but has some major black-box problems

(This answer is specifically for elvn, not other readers who often ask questions about LFC for blur reduction)

Basically, interpolation kicks in when the frame rate goes below a high LFC threshold. The problem is that for interpolation to become "perfect", it needs to know the geometry of what is shown on the screen (Z-buffer, 3D geometry). For frame rate amplification technologies to become more artifact-free, they need access to extra data (Z-buffers, 1000Hz controller data). Oculus Rift ASW is superior to interpolation and less laggy because it "knows" the Z-buffer. But if you only transmit refresh cycles to the monitor, you don't have the extra metadata needed to make frame rate amplification perfect. Frame rate amplification can include interpolation / extrapolation / reprojection / AI upsampling, often an increasingly simultaneous combination of the above. I've already written an article about how frame rate amplification needs extra metadata such as Z-buffers (not transmitted over a DisplayPort cable), at

www.blurbusters.com/frame-rate-amplification-tech ....

I even mention that a co-GPU could theoretically be added to the display, but you would also have to invent a new display signal that adds lots of metadata to avoid the artifacts caused by the black-box nature of interpolation. You need to transmit a LOT of metadata (controller data for reprojection, Z-buffer data for eliminating parallax artifacts, etc.) from the computer to the display if you want more flawless frame rate amplification on the display side. This could still be a lot less bandwidth than 8K 1000fps 1000Hz, but optical fiber video cables can pull that off uncompressed by the time we're ready. The problem is we're not yet sure how powerful a co-GPU would need to be built into the display for display-side frame rate amplification (which I mentioned as a theoretical "G-SYNC 2").
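To make the Z-buffer point concrete, here is a minimal sketch of why depth matters for reprojection. It assumes a simple pinhole camera that strafes sideways between the rendered frame and the displayed frame; under that assumption, a point's horizontal screen shift is focal_length × camera_shift / depth, so near and far objects move by different amounts (parallax). The focal length, strafe distance, and the "one uniform guess" stand-in for a depth-blind interpolator are all hypothetical.

```python
def parallax_shift_px(focal_px: float, camera_shift_m: float, depth_m: float) -> float:
    """Horizontal screen-space shift of a point after a sideways camera move.

    Pinhole model (assumption): shift = focal_length * translation / depth.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * camera_shift_m / depth_m


FOCAL_PX = 1000.0    # hypothetical focal length in pixels
CAMERA_SHIFT = 0.05  # hypothetical 5 cm strafe between rendered and displayed frame

near = parallax_shift_px(FOCAL_PX, CAMERA_SHIFT, depth_m=1.0)   # nearby object
far = parallax_shift_px(FOCAL_PX, CAMERA_SHIFT, depth_m=20.0)   # distant object

# A depth-blind reprojector has to guess; here it shifts everything by one amount.
guess = (near + far) / 2
print(f"near: {near:.1f} px, far: {far:.1f} px, uniform guess: {guess:.2f} px")
print(f"error without depth: near {abs(near - guess):.2f} px, far {abs(far - guess):.2f} px")
```

With per-pixel depth, each pixel can be moved by its own correct amount; without it, the near and far objects cannot both be placed correctly, which is one source of the parallax artifacts mentioned above.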

This is a fun thought exercise. None of this has been tested yet, and it is theoretically viable, but will it be cheaper than a simple optical-fiber video cable? 8K 1000fps 1000Hz will require ginormous amounts of internal processing bandwidth, as the last vestiges of multicore processing are exhausted, so parallelization of the frame rate amplification pipeline becomes critical, e.g. shingled rendering in different cores (on-die SLI-type techniques). But the question is: where should the engineering/expense go? We already know that an optical video cable can be enough for 8K 1000fps 1000Hz, so is it cheaper to take the fully computer-side GPU approach, or a GPU+coGPU approach?
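For a sense of scale, here is back-of-envelope arithmetic for uncompressed 8K 1000Hz. It counts active pixels only and assumes 24 bits per pixel (8-bit RGB) or 30 bits per pixel (10-bit RGB), ignoring blanking intervals, link encoding overhead, and compression, so real link requirements would be somewhat higher. `uncompressed_gbps` is a hypothetical helper.

```python
def uncompressed_gbps(width: int, height: int, hz: int, bits_per_pixel: int) -> float:
    """Raw active-pixel video bandwidth in Gbit/s (no blanking, no link overhead)."""
    return width * height * hz * bits_per_pixel / 1e9


print(f"8K 1000Hz, 8-bit RGB:  {uncompressed_gbps(7680, 4320, 1000, 24):.1f} Gbps")
print(f"8K 1000Hz, 10-bit RGB: {uncompressed_gbps(7680, 4320, 1000, 30):.1f} Gbps")
print(f"4K 60Hz, 8-bit RGB (for scale): {uncompressed_gbps(3840, 2160, 60, 24):.1f} Gbps")
```

That works out to roughly 0.8 to 1 terabit per second of pixel data, before any metadata for display-side frame rate amplification is added.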

We already have coGPU behaviors when doing AirLink streaming on Quest 2. For example, the PCVR frame rate might fall to 45fps when streamed over AirLink, and the Oculus Quest 2 GPU successfully does 3dof reprojection to 90fps to eliminate nausea during head turns. So the Quest 2 is already doing on-headset frame rate amplification of an externally GPU-generated signal. So there's already precedent (yay), at least for low fixed-Hz frame rates!
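The 45fps-to-90Hz case can be sketched as a schedule: every display refresh shows the newest available source frame, re-aimed to the latest head yaw. This is a minimal sketch that assumes rotation-only (3dof) reprojection approximated as a horizontal image shift of yaw change × pixels per degree, ignoring lens distortion, pitch/roll, and depth. `PIXELS_PER_DEGREE` and the head-turn speed are hypothetical values, not Quest 2 specifications.

```python
SOURCE_FPS = 45
DISPLAY_HZ = 90
PIXELS_PER_DEGREE = 20.0     # hypothetical headset angular resolution
HEAD_TURN_DEG_PER_S = 90.0   # hypothetical constant-speed head turn


def head_yaw_deg(t: float) -> float:
    """Head yaw (degrees) at time t seconds during a constant-speed head turn."""
    return HEAD_TURN_DEG_PER_S * t


def reprojection_schedule(refreshes: int) -> list[tuple[int, float]]:
    """For each display refresh: (source frame shown, compensating shift in px).

    Each refresh shows the newest source frame rendered so far, shifted
    (opposite to the head turn) by the yaw change since that frame was rendered.
    Rotation-only (3dof): no depth, so no parallax correction.
    """
    schedule = []
    for r in range(refreshes):
        frame = (r * SOURCE_FPS) // DISPLAY_HZ  # integer math avoids float flooring errors
        t_display = r / DISPLAY_HZ
        t_render = frame / SOURCE_FPS
        yaw_change = head_yaw_deg(t_display) - head_yaw_deg(t_render)
        schedule.append((frame, yaw_change * PIXELS_PER_DEGREE))
    return schedule


for r, (frame, shift) in enumerate(reprojection_schedule(4)):
    print(f"refresh {r}: source frame {frame}, shift {shift:.1f} px")
```

Every other refresh reuses the previous source frame but shifts it by the head rotation that happened in between, which is why head turns stay smooth even though the PC only delivers 45fps.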

In theory, the Z-buffer could also be streamed over the AirLink signal, in order to do 6dof reprojection, not just 3dof reprojection. This would also be excellent for VR streaming (from the cloud), making body movements feel lagless regardless of streaming delay. Basically: cloud GPU + on-headset reprojecting co-GPU.

The bottom line: to apply this to Ultra HFR on existing computer monitors, we need to invent a new display signal that includes the large amount of metadata required for more flawless and lagless frame rate amplification (whether it be interpolation / extrapolation / reprojection). We would also need to invent new Vulkan APIs that put reprojection close to the metal, so games can communicate directly with the display (whether VR or non-VR) to allow 1000fps FPS mouseturns even if the game is only running at 100fps. The goal: reduce the combined transistor count of (GPU + coGPU + video cable transceivers).

That would be a fun napkin exercise... The local GPU can do part of the frame rate amplification, and the remote (simpler) GPU can do the rest. That being said, you kind of want good AI autocomplete algorithms for filling in parallax data, so it's also advantageous to make it the same GPU, but then it becomes a giant GPU (à la RTX 4090). So creative approaches will be needed for large-ratio frame rate amplification technologies.

Sample-And-Hold LFC As Attempted Motion Blur Reduction

Tested and confirmed useless, whether low frame rates or high frame rates (HFR)

LFC on sample-and-hold produces the same motion blur as non-LFC, so we'll skip that. We've actually tested that 100fps LFC and 100fps non-LFC look the same on current VRR displays, assuming there are no major overdrive differences (LFC can have different overdrive artifacts, but assume here that overdrive looks the same at the different frame rates and refresh rates). So LFC has absolutely zero motion blur reduction benefit.

You need to use frame rate amplification instead if you need to reduce the motion blur of low frame rates (which are too flickery for framerate=Hz strobing). There can be a benefit to HFR-LFC if it eliminates overdrive artifacts, e.g. your monitor has fewer overdrive artifacts with LFC enabled at 100fps+ than without LFC. But that doesn't eliminate motion blur, since MPRT100% can never become less than frametime on a sample-and-hold display, even if GtG=0.
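The "LFC = non-LFC" result follows from persistence: on sample-and-hold, a unique frame stays on screen for its whole frametime no matter how many identical refresh cycles it is split into. This minimal sketch assumes an idealized display (GtG=0, no overdrive differences) and approximates eye-tracked blur as speed × persistence; `sample_and_hold_blur_px` is a hypothetical helper.

```python
def sample_and_hold_blur_px(speed_px_per_s: float, fps: float, refresh_hz: float) -> float:
    """Eye-tracked motion blur width on an idealized sample-and-hold display (GtG=0).

    A unique frame stays visible for its entire frametime, whether it is shown
    once or repeated across several refresh cycles by LFC. The repeats are
    identical, so persistence (MPRT100%) = frametime = 1/fps and refresh_hz
    does not appear in the result.
    """
    if refresh_hz < fps:
        raise ValueError("refresh rate cannot be lower than the frame rate")
    return speed_px_per_s / fps


speed = 1000  # px/s, e.g. a TestUFO-style pan
no_lfc = sample_and_hold_blur_px(speed, fps=100, refresh_hz=100)
with_lfc = sample_and_hold_blur_px(speed, fps=100, refresh_hz=200)  # each frame shown twice
print(no_lfc, with_lfc)  # 10.0 10.0
```

Only raising the number of unique frames (fps) shrinks the blur, which is why frame rate amplification, not LFC, is the tool for this job.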

Strobed LFC As Attempted Motion Blur Reduction

Tested and confirmed duplicate image artifacts, whether low frame rates or high frame rates (HFR).

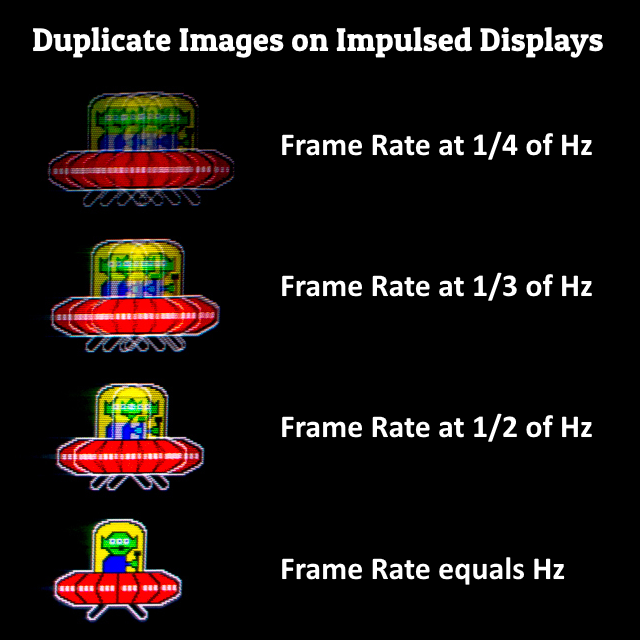

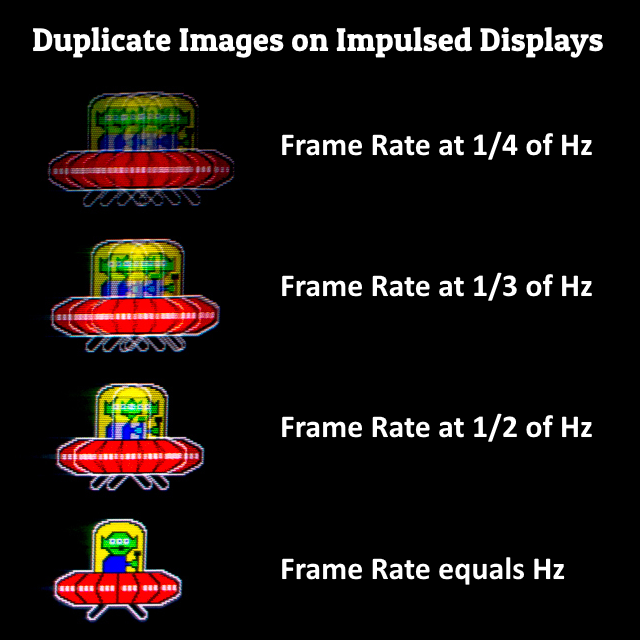

Now, that leaves explaining the artifacts of LFC during strobing, which are akin to CRT 30fps at 60Hz, but apply to any frame rate below the strobe rate. LFC creates multi-strobe artifacts: LFC repeats refresh cycles, and each repeated refresh cycle gets its own strobe flash if you combine strobing+LFC.

Now for the long version, if you want to go beyond the TL;DR and dive into the famous Blur Busters walls of text.

The Long Version

(This is mostly for other readers, because I often get questions, so I'm centralizing my reply here, because people sometimes keep asking me if there's a way to add motion blur reduction to LFC!)

Using LFC at same time as VRR Strobing Creates Duplicate Image Effects

I can tell you that one of our forum members hacked a BenQ XL2546 to enable "VRR-DyAc" via a modified strobe backlight.

The problem is that LFC creates multi-strobe effects, which create duplicate images:

This is even software-simulatable in a TestUFO animation (custom hacked TestUFO link). First, we set a frame rate compatible with software simulation of multi-strobing, and then create the CRT 30fps-at-60Hz effect (but it requires 4 sample-and-hold refresh cycles to do the double-image effect, and 6 sample-and-hold refresh cycles to do the triple-image effect). So use your highest refresh rate, turn off strobing, and click one of these two links:

If your display is only 60-165Hz, you will easily see a double image effect at 960pps:
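The refresh-cycle budget of that simulation can be written out: to mimic N strobes of the same frame on a sample-and-hold display, each flash needs one visible refresh followed by one black refresh, so a double image costs 4 refresh cycles per frame and a triple image costs 6. This sketch only builds that refresh sequence; it is not TestUFO's actual code, and `multistrobe_sequence` is a hypothetical helper.

```python
def multistrobe_sequence(frames: list[str], flashes_per_frame: int) -> list[str]:
    """Simulate multi-strobing with software BFI on a sample-and-hold display.

    Each flash is one visible refresh followed by one black refresh, so every
    frame uses 2 * flashes_per_frame refresh cycles.
    """
    sequence = []
    for frame in frames:
        for _ in range(flashes_per_frame):
            sequence.extend([frame, "black"])
    return sequence


double = multistrobe_sequence(["A", "B"], flashes_per_frame=2)
print(double)  # ['A', 'black', 'A', 'black', 'B', 'black', 'B', 'black']

# Effective frame rate of the simulation at a given refresh rate
for hz in (120, 240):
    print(f"{hz}Hz: double image at {hz // 4}fps, triple image at {hz // 6}fps")
```

At 120Hz, the double-image pattern gives 30 unique frames per second with two flashes each at a 60Hz flash rate, which is the CRT 30fps-at-60Hz effect.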

Unfortunately, LFC (software-based, via the FreeSync driver) starts creating annoying duplicate image effects once it engages during hacked strobed DyAc-FreeSync-VRR.

Here's the forum member's hacked BenQ XL2546:

[Monitor Electronics Hack] World first zowie XL2540 240hz 60hz singlestrobe Experiment with VRR-DyAc!

He became very annoyed by the duplicate image effects caused by LFC's sticky behavior. His hacked XL2540 could single-strobe at 60Hz DyAc-VRR, but sometimes, even though the VRR range ended at a 48Hz minimum, LFC stayed activated until frame rates returned well above 60fps (sticky behavior). So this user programmed a utility to compensate for the annoying strobed-LFC duplicate-image effect:
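One way to picture the "sticky" behavior is as hysteresis: LFC engages when the frame rate drops below the VRR minimum, but only disengages once the frame rate climbs past a higher exit threshold. This is a minimal model under that assumption, not NVIDIA's actual driver logic; `StickyLfc` is a hypothetical class, and the 75fps exit threshold is a hypothetical stand-in for "well above 60fps".

```python
class StickyLfc:
    """Toy hysteresis model of LFC engage/disengage (assumed, not driver code)."""

    def __init__(self, enter_below_fps: float, exit_above_fps: float):
        self.enter_below_fps = enter_below_fps
        self.exit_above_fps = exit_above_fps
        self.active = False

    def update(self, fps: float) -> bool:
        """Feed the current frame rate; return whether LFC is active."""
        if not self.active and fps < self.enter_below_fps:
            self.active = True
        elif self.active and fps > self.exit_above_fps:
            self.active = False
        return self.active


lfc = StickyLfc(enter_below_fps=48, exit_above_fps=75)
for fps in [60, 50, 45, 55, 60, 70, 80, 60]:
    state = "LFC on (multi-strobe)" if lfc.update(fps) else "LFC off (single strobe)"
    print(f"{fps}fps: {state}")
```

In this model, 55 to 70fps is inside the VRR range and could be single-strobed, yet after one dip below 48fps it stays multi-strobed (duplicate images) until the frame rate overshoots the exit threshold.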

nvLFCreset an experimental nvapi trayapp to prevent LFC sticking for gsync compatible monitors

Note: On this hacked XL2540 with user-hacked "DyAc-VRR", Blur Reduction was disabled in the monitor menus, and he added a hardware-based strobing hack by modifying the backlight electronics. What he found was that repeat refresh cycles (from LFC) created duplicate images from multi-strobing.

Duplicate Images Effect is Confirmed Universal For All Kinds Of Multi-Strobing

During eye tracking, every kind of multi-strobing of the same frame creates duplicate image effects:

- Low frame rates (e.g. CRT 30fps at 60Hz)

- Strobed LFC

- Bad strobe crosstalk (GtG too slow to erase the refresh cycle, so it's a de facto repeat refresh)

- PWM dimming

- Even software-based BFI!

All of them.

All tested.

All create duplicate images.

It's a law of physics issue.

Your eyes are analog trackers -- your eyeballs are in a different position whenever the unchanged frame is repeat-flashed, stamping a duplicate copy (by persistence of vision) as you track your eyes on a multi-strobed object.
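The eye-tracking argument can be checked numerically. Assume the eye tracks the object at a constant speed while the display flashes the same unchanged frame several times; each flash then lands at a different retinal position, with the copies spaced by speed ÷ flash rate. This is an idealized sketch (instantaneous flashes, perfect smooth pursuit), and `retinal_offsets_px` is a hypothetical helper.

```python
def retinal_offsets_px(speed_px_per_s: float, fps: float, flash_hz: float) -> list[float]:
    """How far behind the gaze point each flash of one unchanged frame lands.

    Idealized model: instantaneous flashes, perfect smooth-pursuit eye tracking
    at speed_px_per_s. The frame's content does not move between flashes, but
    the eye keeps moving, so flash k lands speed * k / flash_hz pixels behind.
    """
    flashes_per_frame = round(flash_hz / fps)
    return [speed_px_per_s * k / flash_hz for k in range(flashes_per_frame)]


# CRT-style 30fps at 60Hz while eye-tracking at 960 px/s
print(retinal_offsets_px(960, fps=30, flash_hz=60))  # [0.0, 16.0]
```

Two flashes per frame give two copies 16 px apart; the same math applies whether the repeat flash comes from a CRT, strobed LFC, PWM, or BFI.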

Conclusion

Good Blur Reduction Requires

1. Stroberate=Framerate=Hz.

2. If not strobing, keep frametime=refreshtime short.

4ms strobe flashes (framerate=Hz, any Hz) have the same motion blur as 250fps 250Hz sample-and-hold

2ms strobe flashes (framerate=Hz, any Hz) have the same motion blur as 500fps 500Hz sample-and-hold

1ms strobe flashes (framerate=Hz, any Hz) have the same motion blur as 1000fps 1000Hz sample-and-hold
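These equivalences are just 1/persistence, and the blur width follows from speed × persistence (1ms of persistence is about 1 pixel of blur per 1000 pixels/sec). A minimal sketch, assuming GtG=0, single strobe, and framerate=Hz; the two helpers are hypothetical. The same arithmetic gives the LightBoost (2ms) and Quest 2 (0.3ms) figures quoted further down.

```python
def equivalent_sample_and_hold_hz(mprt_ms: float) -> float:
    """Sample-and-hold fps=Hz (GtG=0) whose frametime equals this MPRT."""
    return 1000.0 / mprt_ms


def blur_px(speed_px_per_s: float, mprt_ms: float) -> float:
    """Approximate eye-tracked motion blur width: speed x persistence."""
    return speed_px_per_s * mprt_ms / 1000.0


for mprt in (4.0, 2.0, 1.0, 0.3):
    hz = equivalent_sample_and_hold_hz(mprt)
    print(f"{mprt}ms MPRT ~ {hz:.0f}fps {hz:.0f}Hz sample-and-hold, "
          f"{blur_px(1000, mprt):.1f} px blur at 1000 px/s")
```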

Strobing is a band-aid, but you need to strobe only once per frame to avoid duplicate image effects. So low frame rates (e.g. 25fps) become super-flickery.

That's why, for strobeless blur reduction (sample-and-hold), you need giant geometric upgrades, e.g. 60Hz -> 144Hz -> 360Hz -> 1000Hz (at GtG=0), to feel major upgrades. It's much like how it's hard to tell apart a 4K-vs-5K display, but easy to tell apart a 1080p-vs-4K display spatially. The same is true temporally for Hz.

Even a 480Hz strobed display amplifies phantom array effects during LFC strobing:

The Stroboscopic Effect of Finite Frame Rates.

Don't forget that display motion blur behaves very differently if your eyes are stationary versus moving. Here's a TestUFO Custom Variable Speed Eye Tracking Demo -- stare at the 2nd UFO on any sample-and-hold display for at least 15 seconds while it bounces, ramping slowly from stationary to full speed and decelerating back to stationary. That's the motion blur continuum, where stutter blends into blur and blur blends into stutter.

Persistence motion blur (of high sample-and-hold frame rates) and sample-and-hold stutter (of low sample-and-hold frame rates) are the same thing. The stutter-to-blur continuum is also visible in VRR ramping animations:

www.testufo.com/vrr ...

Doubling Hz+fps halves motion blur without needing strobing. (This assumes GtG=0, which automatically means MPRT100%=frametime=motionblur .... simple math! Motion blur is THE frametime, and frametime is THE motion blur, when it comes to sample-and-hold physics, once you remove the LCD GtG error margin.) Nonzero GtG is part of why 240Hz-vs-360Hz is only about a 1.1x difference instead of the 1.5x difference that only occurs at GtG=0. That's why I am a huge fan of high-Hz OLEDs and MicroLEDs: you can double Hz and frame rate to exactly halve motion blur, and keep doing it.
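The "geometric upgrades" point shows up when you compute the ideal ratios. This sketch assumes GtG=0 and framerate=Hz, so blur is simply speed × frametime; the 1000 px/s speed and `ideal_blur_px` helper are hypothetical. Each step in the 60 -> 144 -> 360 -> 1000Hz ladder cuts blur by roughly 2.4x to 2.8x, while 240 -> 360Hz is only 1.5x even in the ideal case.

```python
SPEED_PX_PER_S = 1000  # hypothetical panning speed


def ideal_blur_px(hz: float, speed: float = SPEED_PX_PER_S) -> float:
    """Sample-and-hold blur at framerate=Hz and GtG=0: speed x frametime."""
    return speed / hz


steps = [60, 144, 360, 1000]
for old, new in zip(steps, steps[1:]):
    ratio = ideal_blur_px(old) / ideal_blur_px(new)
    print(f"{old}->{new}Hz: {ideal_blur_px(old):.1f} px -> {ideal_blur_px(new):.1f} px "
          f"({ratio:.2f}x less blur)")

# The ideal ratio for 240Hz -> 360Hz; real LCDs land lower because GtG is nonzero
print(f"240->360Hz ideal: {ideal_blur_px(240) / ideal_blur_px(360):.2f}x")
```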

To match LightBoost (2ms MPRT) without strobing, you need 500fps 500Hz OLED or MicroLED, framerate=Hz, GtG=0

To match Oculus Quest 2 (0.3ms MPRT) without strobing, you need 3333fps 3333Hz OLED or MicroLED, framerate=Hz, GtG=0

Strobing eliminates the need for ultra high frame rates, but strobing even an ultra high frame rate still causes problems. 1000Hz strobing still produces a phantom array effect if your resolution is high enough and your motion speed is fast enough. 8000 pixels/sec at a 1000Hz strobe on a 1000Hz 8K display creates duplicate images spaced 8 pixels apart.

We actually have access to experimental 1000Hz displays, and we were able to extrapolate from them reliably. Enough experiments have confirmed that 1000Hz is definitely not the retina refresh rate once you've got enough resolution and enough FOV. This is caused by the Vicious Cycle Effect, where extra resolution and FOV amplify Hz limitations.

In VR, you could even use an eye tracker and add a GPU motion blur effect to blur the difference between the eye-motion vector and moving-object vectors, so you can solve all 4 combos (stationary/moving gaze/objects) concurrently, assuming you keep pulse widths (MPRT) shorter than 1/(maximum eye-trackable pps). Then you've retina'd everything except the flicker of strobing, while still using lower frame rates and strobing. But that is DOA for multiple-viewer external displays.

While 1000Hz might be the retina refresh rate for a 24" 1080p display viewed from 4-8 feet, the retina refresh rate goes up when angular resolution goes up (up to the spatial retina resolution), especially if the display is wide enough in pixels to let you track your eyes on the object long enough. 8000 pixels/sec on an 8K display takes about 1 second to scroll across horizontally. That's easier to eye-track than 8000 pixels/sec on a 1080p display, where the object moves too fast to eye-track! That's why higher resolutions raise the retina refresh rate.
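The screen-crossing time behind that argument is simple division. This sketch assumes a horizontal pan at constant speed across the full width of each panel; `crossing_time_s` is a hypothetical helper.

```python
def crossing_time_s(width_px: int, speed_px_per_s: float) -> float:
    """How long an object moving at constant speed takes to cross the screen."""
    return width_px / speed_px_per_s


for name, width in (("1080p", 1920), ("4K", 3840), ("8K", 7680)):
    t = crossing_time_s(width, 8000)
    print(f"{name}: {t:.2f} s to cross at 8000 px/s")
```

At 8000 px/s, the object is on a 1080p screen for about a quarter of a second, but on an 8K screen for about 0.96 seconds, long enough for the eyes to lock on and notice blur or duplicate images.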

There are two different pixel response measurements: GtG versus MPRT. Motion blur is caused by both GtG and MPRT. You can zero out GtG, but you still have MPRT motion blur left over. At GtG=0, MPRT100% can never be less than frametime on a sample-and-hold display. And MPRT100% is the pulse width on strobed displays, assuming a single strobe; shorter strobes reduce motion blur. That's why NVIDIA ULMB still has motion blur at

www.testufo.com/map at 3000 pixels/sec unless you reduce pulse width to 0.5ms MPRT instead of default 1ms MPRT. (Good self-proof of human-visible 0.5ms-vs-1.0ms MPRT by seeing for yourself in TestUFO). And if you multi-strobe (even by LFC), you've got duplicate images to worry about.

Remember you have 4 situations to try to eliminate motion blur or duplicate image effects:

8K 1000fps 1000Hz doing 8000 pixels/sec on 0ms GtG SAMPLE-AND-HOLD

- 8 pixels stroboscopic-stepping during stationary-gaze

- 8 pixels motion blur during MOVING-gaze (example: www.testufo.com)

8K 1000fps 1000Hz doing 8000 pixels/sec on STROBING

- 8 pixels stroboscopic-stepping during stationary-gaze

- No motion blur, no stroboscopic stepping during MOVING-gaze*

8K 250fps LFC 1000Hz doing 8000 pixels/sec on STROBING

- 32 pixels stroboscopic-stepping during stationary-gaze

- 32 pixels stroboscopic-stepping during MOVING-gaze

Ooops. LFC made strobing worse. Ouch.

Confirmed math, guaranteed extrapolatable:

(8000/1000) = 8

(8000/250) = 32

*IMPORTANT: Motion blur stops being perceptible when MPRT is noticeably less than 1/pps. This applies to both strobed displays AND sample-and-hold displays. So for fast motion speeds like 8000 pixels/sec, which are more common on 4K and 8K displays, you need 1/8000sec MPRT = 0.125ms MPRT. Yup, the difference between 0.25ms MPRT and 0.125ms MPRT becomes human-noticeable when we're talking about future strobed 8K virtual-reality displays! That's why VR already uses heavily sub-millisecond MPRTs today. Thanks again to the Vicious Cycle Effect, where more resolution amplifies MPRT limitations and Hz limitations.
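Inverting the blur formula gives the MPRT budget for a given motion speed. This sketch treats "MPRT less than 1/pps" as keeping blur at or below 1 pixel, which is an interpretation of the threshold rather than a perceptual measurement; `max_mprt_ms` is a hypothetical helper.

```python
def max_mprt_ms(speed_px_per_s: float, max_blur_px: float = 1.0) -> float:
    """Largest MPRT (ms) that keeps eye-tracked blur at or below max_blur_px."""
    return 1000.0 * max_blur_px / speed_px_per_s


for speed in (1000, 3000, 8000):
    print(f"{speed} px/s: MPRT <= {max_mprt_ms(speed):.3f} ms for <= 1 px of blur")
```

At 3000 px/s the budget is about 0.33ms, which is why the 1ms-vs-0.5ms ULMB difference is visible in TestUFO, and at 8000 px/s it drops to 0.125ms.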

And realize that motion blur physics (and duplicate-image physics) behave differently with stationary gaze versus moving gaze, largely because of a display's finite refresh rate.

And multi-strobing a frame rate (even by LFC) simply chops-up the same motion blur, so you've created a spread of duplicate images in the same space as the original motionblur.

Fixing problems for one (e.g. the stationary gaze situation) can create new problems for the other (e.g. the moving gaze situation). It's very whac-a-mole, and the only universal whac-all-moles solution is ultrahigh frame rates at ultra high refresh rates, to fix ALL stroboscopics simultaneously with ALL motion blur, in a 100% flicker-free and duplicate-image-free manner.

For 240fps (Even w/LFC) at 480Hz, 2000 pixels/sec will have 2 duplicate images spaced every 4 pixels

For 120fps (Even w/LFC) at 480Hz, 2000 pixels/sec will have 4 duplicate images spaced every 4 pixels

For 60fps (Even w/LFC) at 480Hz, 2000 pixels/sec will have 8 duplicate images spaced every 4 pixels

For 240fps (Even w/LFC) at 480Hz, 4000 pixels/sec will have 2 duplicate images spaced every 8 pixels

For 120fps (Even w/LFC) at 480Hz, 4000 pixels/sec will have 4 duplicate images spaced every 8 pixels

For 60fps (Even w/LFC) at 480Hz, 4000 pixels/sec will have 8 duplicate images spaced every 8 pixels
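The numbers in the list above come from two ratios: the duplicate count is strobe rate ÷ frame rate, and the spacing is motion speed ÷ strobe rate (the list rounds the spacing to whole pixels). This minimal sketch assumes the strobe rate is an exact multiple of the frame rate; `duplicate_images` is a hypothetical helper. It also reproduces the 8K case from earlier: 250fps LFC at a 1000Hz strobe gives 4 copies 8 px apart, spanning 32 px.

```python
def duplicate_images(fps: int, strobe_hz: int, speed_px_per_s: float) -> tuple[int, float]:
    """Duplicate-image count and spacing when each frame is strobed several times.

    count   = strobes per frame = strobe_hz / fps
    spacing = distance the eye moves between strobes = speed / strobe_hz
    """
    if strobe_hz % fps:
        raise ValueError("this sketch assumes strobe_hz is a multiple of fps")
    return strobe_hz // fps, speed_px_per_s / strobe_hz


for speed in (2000, 4000):
    for fps in (240, 120, 60):
        count, spacing = duplicate_images(fps, 480, speed)
        print(f"{fps}fps at 480Hz, {speed} px/s: {count} images, ~{spacing:.1f} px apart")

# The 8K case: 250fps LFC at a 1000Hz strobe, 8000 px/s
count, spacing = duplicate_images(250, 1000, 8000)
print(f"250fps at 1000Hz, 8000 px/s: {count} images, {spacing:.0f} px apart, "
      f"spanning {count * spacing:.0f} px")
```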

So, alas, we're SOL when it comes to reducing the motion blur of low frame rates without flicker. I even explained this five years ago in Blur Busters Area 51; scroll down to the 1000Hz Journey article.

I love making big replies to these sorts of questions because we've actually tested them: Blur Busters has helped researchers/manufacturers/vendors research this sort of stuff over the last 5 years, and I'm now in 25 peer-reviewed research papers. For more of that fun, see www.blurbusters.com/area51 as the Coles Notes.

I love these kinds of questions.

Does my reply or any Area51 articles answer your question?

If not, let me know and I'll be happy to expand.

Does my reply create new questions?

If yes, I'll be happy to explain them, and give custom TestUFO links where available.