MCLV wrote (05 Mar 2021, 16:24):

It does not help if you strobe the same frame multiple times; see the FAQ for Motion Blur Reduction.

However, increasing the number of strobes per frame will approximate motion blur with increasing accuracy. Hence, 60 fps on a 6000 Hz strobed display would look basically identical to 60 fps on a 60 Hz sample-and-hold display.

Correct.

Here's 360 Hz PWM at 60 Hz:

Notice how it starts to resemble motion blur again? Bingo.

Now, if the PWM frequency is high enough, the repeats just fill in to a continuous blur, and you've got a blurry mess again instead of zero blur.

TL;DR: Ultra-high-Hz PWM just becomes sample-and-hold to human eyes.

Scientific Explanation:

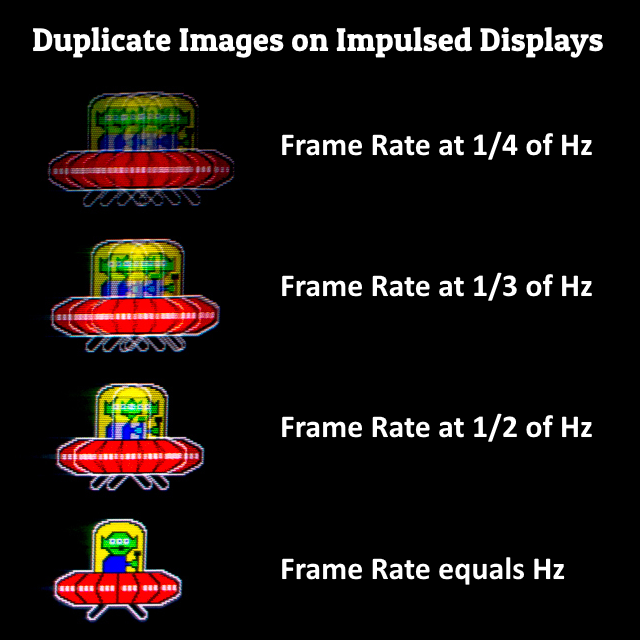

The display is flipbooking through a series of static images. Your analog eyes are moving all the time. As you track moving objects, your eyes are in a different position at the end of a refresh cycle than at the beginning of it. The multiple repeat flashes of PWM are like a stamp that is offset bit by bit. Eventually there are enough PWM stamps that it just looks like a continuous blur.
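To make the "offset stamp" idea concrete, here is a minimal Python sketch. It assumes perfect eye tracking at a constant speed, frame content that stays still for the whole frame, and instantaneous, evenly spaced flashes (real PWM pulses have a width, which this ignores). The function name `flash_offsets` and the 960 px/s tracking speed are illustrative choices, not part of any real tool.

```python
def flash_offsets(speed_px_per_s: float, fps: float, strobe_hz: float) -> list[float]:
    """Retinal offset (px) of each repeat flash of one unique frame.

    Assumes the eye tracks the moving object perfectly at speed_px_per_s,
    the frame content stays still for 1/fps seconds, and the backlight
    flashes instantaneously strobe_hz times per second, evenly spaced.
    """
    flashes_per_frame = round(strobe_hz / fps)
    # Between flashes the eye keeps moving but the frame does not,
    # so each flash is "stamped" speed/strobe_hz pixels further along.
    step_px = speed_px_per_s / strobe_hz
    return [k * step_px for k in range(flashes_per_frame)]


if __name__ == "__main__":
    speed = 960.0  # px/s, an arbitrary example tracking speed
    for hz in (60, 120, 360, 6000):
        offsets = flash_offsets(speed, 60, hz)
        print(f"60fps @ {hz:>4} Hz PWM: {len(offsets):>3} flashes, "
              f"gap {speed / hz:.2f} px, spread {offsets[-1]:.2f} px")
```

Under these assumptions, at 960 px/s the gap between stamps shrinks from 8 px at 120 Hz to 0.16 px at 6000 Hz, while the total spread grows toward 16 px, which is the sample-and-hold blur of 60fps at that speed (960 / 60).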

The humankind invention of using a series of static images is the problem. The concept of "frame rate" is a useful, albeit artificial, humankind invention: we don't have any method of building an analog-motion, framerateless display, so we will always be guaranteed to have blur and/or stroboscopic effects at low frame rates.

thatoneguy wrote (05 Mar 2021, 14:38):

- No more stutter due to very fast pixel response time à la OLED sample-and-hold at 24fps
- No persistence blur
- No interpolation needed

Display motion blur physics doesn't work that way.

Unfortunately, ultra-high-Hz PWM replicates the sample-and-hold blur of the underlying frame rate (e.g. 60fps at 60Hz).

Also, there are two causes of eyestrain from PWM, and people fall into three categories:

1. People who are PWM-insensitive (don't mind flicker/strobe/PWM of any kind, even unsynchronized)

2. People who are PWM-sensitive only when the frame rate mismatches the strobe rate (aka "motion blur reduction" PWM)

3. People who are PWM-sensitive to any kind of PWM

I fall in the category of #2.

The bottom line is that some people get eyestrain from PWM artifacts, instead of eyestrain from PWM flicker. These are essentially two separate causes of PWM strain, and they affect different people.

Everybody sees differently. We respect that everybody has different vision sensitivities. Focus distance? Brightness? Display motion blur? Flicker? Tearing? Stutter? Some of these hurt people's eyes, create nausea, or cause other symptoms more than others. One person may be brightness-sensitive (hating monitors that are too bright or too dim). Another may get nausea from stutter. And so on. Displays are inherently imperfect emulations of real life.

Even back in the CRT days, some people were insanely bothered by 30fps at 60Hz on a CRT, while others were not bothered at all.

Also, thanks to the

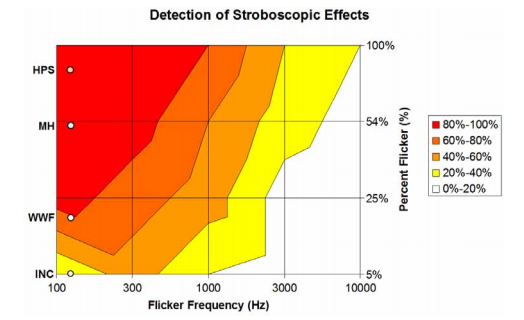

Vicious Cycle Effect where higher resolutions, bigger displays, wider FOV, and faster motions amplfiy PWM artifacts. They become easier to see on 8K displays than 4K displays than 1080p displays.

The visibility of PWM artifacts increases with bigger resolutions & bigger FOV & faster motion. Where the problem of (framerate not equal stroberate) become more visible again at ever higher PWM frequencies. This is part of why I'm a big fan of retina frame rates at retina refresh rates for many use cases.
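A rough sketch of the Vicious Cycle Effect for the framerate-not-equal-stroberate case. It assumes an object that crosses the full screen width in one second on every display (same angular speed), a frame rate below the strobe rate, and, as a hypothetical threshold, that duplicates merge into a continuous blur once the gap between them is 1 px or less. The function names are made up for illustration.

```python
# Horizontal resolutions of common 16:9 display formats.
RESOLUTIONS = {"1080p": 1920, "4K": 3840, "8K": 7680}


def duplicate_gap_px(width_px: int, screen_widths_per_s: float, strobe_hz: float) -> float:
    """Gap (px) between repeat images of one frame while the eye tracks the object."""
    speed_px_per_s = width_px * screen_widths_per_s
    return speed_px_per_s / strobe_hz


def pwm_hz_for_gap(width_px: int, screen_widths_per_s: float, max_gap_px: float = 1.0) -> float:
    """PWM frequency at which the gap shrinks to max_gap_px (hypothetical merge threshold)."""
    return width_px * screen_widths_per_s / max_gap_px


if __name__ == "__main__":
    for name, width in RESOLUTIONS.items():
        gap = duplicate_gap_px(width, 1.0, 360)
        need = pwm_hz_for_gap(width, 1.0)
        print(f"{name}: {gap:.2f} px gap at 360 Hz PWM, ~{need:.0f} Hz PWM for a 1 px gap")
```

Under these assumptions, doubling the horizontal resolution doubles the gap at a given PWM frequency (about 5.33 px at 1080p versus 21.33 px at 8K with 360 Hz PWM), so an 8K display needs roughly four times the PWM frequency of a 1080p display before the duplicates fill in. Even then, the result is the continuous sample-and-hold blur of the underlying frame rate, not zero blur.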

In fact, many people are bothered by PWM artifacts (#2) when the display is big enough, such as in virtual reality. Headaches from display artifacts (PWM artifacts, motion blur artifacts, etc.) are biggest with a massive IMAX-sized display strapped to your eyes. So, to minimize the percentage of nausea and headaches in the human population, VR headsets match their PWM frequency to Hz, which fixes headaches from duplicate images.

From an Average Population Sample, Lesser Evil of Pick-Your-Poison Display Artifacts

VR researchers discovered that people got a lot of nausea/headaches from things like display motion blur and PWM artifacts, so they (A) chose to strobe, and (B) chose to strobe at framerate=Hz.

Strobing / blur reduction / BFI / "framerate=Hz PWM" are essentially the same thing: synonyms from a display point of view. We don't normally equate "PWM" with "motion blur reduction", but motion blur reduction on LCD is essentially flashing a backlight, and that's the scientific definition of PWM (an ON-OFF square wave).

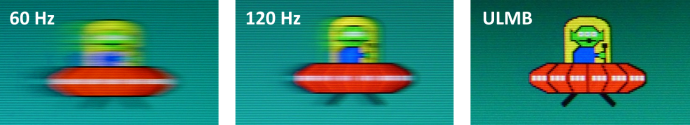

For people matching #2, the fix is to lower the refresh rate and raise the frame rate until they converge to framerate=refreshrate=stroberate. When this happens, you get beautiful CRT motion clarity, zero blur, zero duplicates, etc. You still have flicker, but there can be a point where flicker is the lesser evil (as long as the flicker frequency is high enough).

When screens are big enough relative to your eyes (like VR), the problem becomes bigger and more visible than CRT 30fps at 60Hz.

VR researchers found that the lowest percentage of headaches occurred with VR PWM at framerate=Hz. To eliminate the most VR nausea for the largest share of the population, though, you need a proper triple match:

refreshrate == framerate == stroberate PWM

and this frequency must be beyond the flicker fusion threshold (e.g. 120Hz instead of 60Hz).

It is the lesser evil of a pick-your-poison problem for displays that can't yet perfectly match real life.
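The triple-match condition can be written as a simple check. This is a hypothetical helper, not any real API; the 120 Hz default minimum only mirrors the example threshold given above, and real flicker fusion thresholds vary by person and viewing conditions.

```python
from dataclasses import dataclass

# Hypothetical minimum strobe rate, mirroring the "120Hz instead of 60Hz" example.
MIN_STROBE_HZ = 120.0


@dataclass
class DisplayMode:
    framerate: float    # unique frames per second delivered by the GPU
    refreshrate: float  # display refresh rate in Hz
    stroberate: float   # backlight flashes (PWM pulses) per second


def triple_match(mode: DisplayMode, min_strobe_hz: float = MIN_STROBE_HZ) -> bool:
    """True if framerate == refreshrate == stroberate and the strobe is fast enough."""
    matched = mode.framerate == mode.refreshrate == mode.stroberate
    return matched and mode.stroberate >= min_strobe_hz


def repeats_per_frame(mode: DisplayMode) -> float:
    """Flashes per unique frame; anything above 1 produces duplicate images."""
    return mode.stroberate / mode.framerate


if __name__ == "__main__":
    for mode in (DisplayMode(120, 120, 120), DisplayMode(60, 60, 60), DisplayMode(60, 120, 120)):
        print(mode, triple_match(mode), repeats_per_frame(mode))
```

For example, `DisplayMode(120, 120, 120)` passes, `DisplayMode(60, 60, 60)` matches but fails the assumed flicker threshold, and `DisplayMode(60, 120, 120)` gives two flashes per frame, the same double-image ratio as CRT 30fps at 60Hz.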

That's why, if you want this for your desktop games, you must have the triple match:

framerate = refreshrate = stroberate

That's why blur busting is so difficult in many games at these non-retina frame rates. You need technologies similar to VSYNC ON, but with lower lag, to keep the frame rate synchronized. You need a GPU powerful enough to run frame rates equalling refresh rates that strobe high enough not to flicker. You need high-quality strobing without ghosting or crosstalk. Etc. So 120fps with 120Hz PWM at a 120Hz display refresh rate is very tough to achieve in many games.

(BTW, this is also partly why RTSS Scanline Sync was invented as a substitute for laggy VSYNC ON. I helped Guru3D create RTSS Scanline Sync, which is essentially a low-lag VSYNC ON alternative.)
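Keeping framerate = refreshrate means every frame must be ready within one refresh interval (1000 / 120 ≈ 8.33 ms at 120 Hz). Below is a minimal sketch that counts how often a sequence of frame render times misses that budget. It assumes VSYNC ON-style presentation, where a late frame leaves the previous frame on screen (and strobed again, producing a duplicate) for each extra refresh it spans; `missed_refreshes` and the sample frame times are hypothetical.

```python
import math


def missed_refreshes(frame_times_ms: list[float], refresh_hz: float) -> int:
    """Count refresh cycles in which no new frame was ready.

    Assumes VSYNC ON-style presentation: a frame that takes longer than one
    refresh interval leaves the previous frame on screen (and strobed again)
    for each extra interval it spans.
    """
    budget_ms = 1000.0 / refresh_hz
    missed = 0
    for t in frame_times_ms:
        # Refresh intervals this frame occupied, minus the one it should take.
        intervals = math.ceil(t / budget_ms)
        missed += max(intervals - 1, 0)
    return missed


if __name__ == "__main__":
    # Made-up frame render times (ms) against a 120 Hz budget of ~8.33 ms.
    print(missed_refreshes([7.9, 8.1, 9.0, 8.0, 17.0], 120))  # → 3
```

Each missed refresh breaks the triple match for that moment, which is why the GPU has to sustain the full frame rate rather than just average it.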

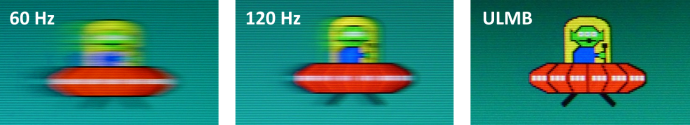

But what if you hate sample-and-hold blur *and* flicker?

The only way to have your cake and eat it too is higher frame rates at higher refresh rates, aka 1000fps at 1000Hz. For now, a good compromise could be 240fps at 240Hz strobing, provided you can hit the magic match:

framerate = refreshrate = stroberate

This is precisely why I wrote Blur Busters Law: The Amazing Journey To Future 1000 Hz Displays.

Motion blur is frame visibility time: the time from the first visibility to the last visibility of a unique frame.

The blur can be distorted (ghosting, coronas, duplicates, rainbow artifacts, etc).

But it's still a blur/smear/artifact of a long, sustained frame visibility time, whether caused by slow MPRT, slow GtG, or multiple strobes (multi-strobe from PWM, or a low frame rate similar to CRT 30fps@60Hz strobe). Each of these lengthens the time from when a specific unique frame first becomes visible to when it stops being visible. That whole time span always guarantees artifacts of some form; it's as immutable as the speed of light, regardless of what tricks the display does!
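The "first visibility to last visibility" definition can be turned into a quick calculation. This sketch assumes evenly spaced flashes, a strobe rate that is a whole multiple of the frame rate, and a fixed pulse width, and it models sample-and-hold as one pulse lasting the whole refresh. It then applies the Blur Busters Law rule of thumb that 1 ms of visibility time equals 1 px of motion blur at 1000 px/s. The function names and scenario pulse widths are illustrative assumptions, not measurements.

```python
def visibility_time_ms(fps: float, strobe_hz: float, pulse_ms: float) -> float:
    """Time from first to last visibility of one unique frame.

    Assumes strobe_hz is a whole multiple of fps, evenly spaced flashes,
    and a fixed pulse width; sample-and-hold is modelled as one pulse
    lasting the whole refresh (pulse_ms = 1000 / strobe_hz).
    """
    flashes = round(strobe_hz / fps)
    gap_ms = 1000.0 / strobe_hz
    return (flashes - 1) * gap_ms + pulse_ms


def blur_px(visibility_ms: float, speed_px_per_s: float) -> float:
    """Blur Busters Law: 1 ms of visibility = 1 px of blur at 1000 px/s."""
    return visibility_ms * speed_px_per_s / 1000.0


# Hypothetical scenarios; pulse widths are illustrative assumptions.
SCENARIOS = [
    ("60fps 60Hz sample-and-hold", 60, 60, 1000 / 60),
    ("60fps on 6000Hz PWM (50% duty)", 60, 6000, 0.5 * 1000 / 6000),
    ("30fps at 60Hz strobe, 1 ms pulses", 30, 60, 1.0),
    ("120fps 120Hz strobe, 1 ms pulses", 120, 120, 1.0),
    ("1000fps 1000Hz sample-and-hold", 1000, 1000, 1.0),
]

if __name__ == "__main__":
    for label, fps, hz, pulse in SCENARIOS:
        ms = visibility_time_ms(fps, hz, pulse)
        print(f"{label}: {ms:.2f} ms -> {blur_px(ms, 1000):.2f} px at 1000 px/s")
```

Under these assumptions, 60fps on 6000Hz PWM comes out at about 16.58 ms, nearly the same as 60fps 60Hz sample-and-hold (16.67 ms), while only the 120fps strobed and 1000fps sample-and-hold cases get down to about 1 ms.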

You can push artifacts below human thresholds for various use cases: maybe 240Hz or 480Hz is enough for a smartphone, while you might need 10,000Hz for a 180-degree retina VR headset. The exact threshold for retina refresh rates (where display motion looks like real-life motion) depends on many variables of the Vicious Cycle Effect: bigger displays and higher resolutions amplify Hz limitations.

The only way to emulate analog motion is analog motion, but you can get close with ultrahigh frame rates at ultrahigh refresh rates (aka 1000fps+ at 1000Hz+), so that the display adds no extra blur/artifacts/ghosting/duplicates/whatever above and beyond real life.