The iPad Mini 6 Jelly Effect Thread

To prepare for a possible future research white paper, I am crossposting several of my Ars Technica comment replies here, originally posted under their article about the iPad Mini 6 jelly effect.

Jelly Effect on iPad Mini 6 (Blur Busters Technological Explanation)

- Chief Blur Buster

- Site Admin

- Posts: 11653

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

- Contact:

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on Twitter

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #1 of Series

Jelly Effect is Caused by a Perfect Storm of Multiple Causes

Both Apple and iFixit are right:

It is normal refreshing behaviour BUT unusually amplified in visibility by the convergence of unrelated factors.

I wanted to crosspost a late reply I made in another thread, which appears to corroborate iFixit:

___

mdrejhon wrote: A good web test for this is the TestUFO Jelly Scrolling Test. Although less visible than the Mini 6, even a Dell 60 Hz office monitor shows some scanout skewing too. Test in both portrait and landscape, some desktop/mobile screens only do it in one orientation.

Cheshire Cat wrote: TestUFO says Safari vsync @120+ is not supported. Using iPad Pro 2020. That said, I can certainly notice the native scanline refresh bending vertical lines during stupidly fast scrolling, but it is something I have to look for and definitely not noticeable with normal scrolling speed. I am afraid what causes this issue on the new iPad mini is a different thing.

It’s not a different thing. It’s simply a matter of whether the 1/60sec scanout runs along the longer or the shorter axis of the screen: a left-to-right scanout versus a top-to-bottom one, portrait scan versus landscape scan.

Scanout along the long axis of the screen (as iPad Mini 5 does) is faster, so the jelly effect is harder to see and is only visible during ultrafast scrolling in landscape.

Scanout along the short axis of the screen (as iPad Mini 6 does) is slower, so the jelly effect is much more pronounced when scrolling in portrait mode. Each refresh sweep takes the same 1/60sec either way, so a shorter scanout distance means a slower scanout velocity. On a 4:3 screen, scanout is 4/3 as fast along the long axis as along the short axis. Scan direction is therefore a major determinant of how easy the jelly effect is to see when scrolling perpendicular to the screen refresh’s scanout direction.

Thus:

(A) On iPad Mini 5, Jelly effect barely visible in vertical scrolling during landscape mode

(harder to see, needs fast scrolling while tracking eyes on text to barely notice)

(B) On iPad Mini 6, jelly effect more visible in vertical scrolling during portrait mode

(much more visible with slower scrolling)
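The long-axis versus short-axis arithmetic can be sketched in a few lines of Python. This is only an illustration under assumptions that are mine, not measurements: the screen is treated as exactly 4:3 in arbitrary units, the sweep is assumed to be perfectly linear over the full 1/60sec, and "skew slope" is modelled simply as scroll velocity divided by scanout velocity. The names `scanout_velocity`, `skew_slope` and the `SCROLL` value are hypothetical.

```python
REFRESH_HZ = 60.0  # one full scanout sweep per 1/60 sec


def scanout_velocity(scan_distance: float, refresh_hz: float = REFRESH_HZ) -> float:
    """Distance the scanout sweeps per second along its scan axis."""
    return scan_distance * refresh_hz


def skew_slope(scroll_velocity: float, scan_distance: float,
               refresh_hz: float = REFRESH_HZ) -> float:
    """Skew per unit of distance along the scan axis (assumed simple model),
    for scrolling perpendicular to the scanout direction."""
    return scroll_velocity / scanout_velocity(scan_distance, refresh_hz)


LONG_AXIS, SHORT_AXIS = 4.0, 3.0  # arbitrary units for a 4:3 screen
SCROLL = 30.0                     # hypothetical scroll velocity, units/sec

long_v = scanout_velocity(LONG_AXIS)
short_v = scanout_velocity(SHORT_AXIS)
print(f"long-axis scanout velocity:  {long_v:.0f} units/sec")
print(f"short-axis scanout velocity: {short_v:.0f} units/sec")
print(f"velocity ratio (long/short): {long_v / short_v:.3f}")

slope_long = skew_slope(SCROLL, LONG_AXIS)
slope_short = skew_slope(SCROLL, SHORT_AXIS)
print(f"skew slope ratio (long/short): {slope_long / slope_short:.3f}")
```

Under this model the long-axis scan is 4/3 as fast and produces 3/4 of the skew slope, for the same scrolling speed.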

The iPad Mini 6 is a perfect storm of multiple converging factors:

- Most people hold an iPad in portrait mode when browsing the web

- This is the screen orientation in which the jelly effect appears on the Mini 6

- It also happens to be the “slowest possible” 60 Hz scanout velocity (the short axis of screen)

You also have to eye-track the text while scrolling (not hold a fixed gaze) to see the jelly effect. Different people gaze differently (stationary vs moving gaze), so not everyone notices.

A specific person’s maximum eye-tracking speed also affects whether they can see the jelly effect. Scroll fairly briskly, but no faster than your eye-tracking ability. Then stare at the text as you scroll (or bounce-scroll the text of a webpage up and down, like the TestUFO test).

Screens often look different in fixed gaze versus moving gaze. Some TestUFO tests demonstrate this perfectly, such as TestUFO Eye Tracking as well as TestUFO Persistence of Vision.

Likewise, the difference between stationary and moving gaze (interacting with display behaviors) applies to the jelly effect: it only really becomes visible if you track the text with your eyes while scrolling.

This explains a portion of the arguing going on here. Some people do not see the jelly effect because they hold a fixed gaze while text scrolls past, because the scrolling speed needed to make the jelly effect visible is beyond their maximum eye-tracking speed, or because the scanout is not perpendicular to the scroll direction. Any one of these factors means the person likely won’t see the jelly effect.

Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #2 of Series

Display research Part 2 of my highly insightful reply on page 1.

sprockkets wrote: It's normal, but everyone else puts the screen in the "right" way to avoid this issue. Sometimes, like OnePlus did with the 5, they put it upside down due to their engineering decisions because of where the connector cable had to go.

Technically, I first thought upside-down did not make sense, because the jelly effect only becomes easily visible with scrolling directions perpendicular to the scanout direction.

However, upside down is an additional factor for an unexpected reason:

I later discovered (through Blur Busters contracts with display manufacturers) that it actually is a factor. As the inventor of TestUFO, I created a special jelly effect test pattern a couple of years ago specifically to discover why screens have a jelly effect.

On the iPad Mini 5, with the default case stand, the home button is at the right edge. The iPad scanout starts at the edge opposite the home button on the Mini 5. So with default cases, iPads on a table start scanning at the left edge (lowest lag) and scan towards the right edge.

Most humans look at the left edge of the screen first, because most of the world’s languages are read left to right. So if the scanout starts at the right edge and scans leftwards, the left edge is the most lagged screen edge. That amplifies the visibility of the jelly effect if the most lagged screen edge accidentally happens to be the left edge!

When I hold the Mini 5 upside-down (landscape, home button at left edge), the jelly effect becomes more visible (even though only 3/4 as visible as on the iPad Mini 6, because of the 4:3 aspect ratio and long-axis scanout mentioned in my earlier post).

So it is an additional factor: the general eye gaze area is usually the left edge of the screen, because that is where most people start reading the text. If that edge of the screen is more lagged, the jelly effect is more visible.
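To put rough numbers on "most lagged edge", here is a small Python sketch. It assumes a perfectly linear sweep that takes the whole refresh period (no blanking interval), which is a simplification; `scanout_lag_ms` and its parameters are hypothetical names for illustration only.

```python
def scanout_lag_ms(x_fraction: float, scan_starts_at: str,
                   refresh_hz: float = 60.0) -> float:
    """Delay in milliseconds, measured from the start of a sweep, before the
    pixel column at x_fraction is refreshed.

    x_fraction: 0.0 is the left edge as held, 1.0 is the right edge.
    scan_starts_at: "left" or "right", the edge where the sweep begins.
    Assumes a linear sweep filling the whole refresh period.
    """
    if not 0.0 <= x_fraction <= 1.0:
        raise ValueError("x_fraction must be between 0.0 and 1.0")
    if scan_starts_at == "left":
        progress = x_fraction
    elif scan_starts_at == "right":
        progress = 1.0 - x_fraction
    else:
        raise ValueError("scan_starts_at must be 'left' or 'right'")
    return progress * 1000.0 / refresh_hz


# Left edge (where reading usually begins) in both holding orientations:
print(f"scan starts at left:  {scanout_lag_ms(0.0, 'left'):.1f} ms")
print(f"scan starts at right: {scanout_lag_ms(0.0, 'right'):.1f} ms")
```

With the sweep starting at the left, the start of each text row is refreshed first. With the sweep starting at the right, the same spot is refreshed last, close to a full 1/60sec later at 60Hz.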

So the upside-down + perpendicular scanout combo indeed amplifies jelly effect visibility. Try holding your iPad Mini 5 upside-down (home button on left edge) and scroll the Ars Technica front page up and down in a medium-speed (sine wave) bounce, at the same speed as http://www.testufo.com/scanskew or slightly faster. Instantly, the jelly effect is much more visible on the Mini 5.

The Mini 6 adds icing on the cake because there is no front home button, so people are more likely to hold it with upside-down + perpendicular scanout simultaneously. AND the scanout is along the short axis (slower scan, easier to see in peripheral vision).

Not everyone can see it, but a larger proportion of my family starts to see it on the Mini 5 too, not just the Mini 6. So that’s yet another factor that I had not disclosed publicly until now, and you, the Ars comment audience, are the first audience I’m disclosing this to.

Fortunately, iPad Mini 5 case design means most people won’t be viewing the iPad Mini 5 with the home button on the left-hand edge when propping it up in a smart-case style case. The Mini 5 starts its scanout at the edge opposite the home button, so that edge has the lowest latency, and it is the area where people start reading text. Because of the case hinting and home-button hinting, the iPad Mini 5 is almost never held in the screen orientation that amplifies its existing jelly effect.

Mitigation factors include:

(1) Go 120Hz or 240Hz (preferred), as it is a universal solve-all for all jelly effects.

This pushes screen refresh scanout velocity beyond the majority of human perception, even in worst cases.

(2a) Alternatively, design the screen to scan along the long axis.

A long-screen-axis scanout is faster and makes the jelly effect less visible.

(2b) And/or, design the screen to scan vertically in the default holding position.

Rotation bias / hinting, such as case design and home button location, encourages holding the iPad in less-sensitive orientations.

(2c) And/or, design device to scan beginning at the left edge — when rotated in a way that makes scrolling direction perpendicular to scanout direction.

Same rotation bias as above

(3) All the above (1)(2a)(2b)(2c).

This covers the smaller human population that is still able to see the jelly effect on 120Hz screens.*

*The 120Hz jelly effect is still within a few humans’ perception if the same perfect storm is created intentionally. I personally still see it on the 120Hz iPads, but only in one suboptimal orientation (where the left edge is the most lagged scanout edge) and when scrolling twice as fast at fully-outstretched-arm viewing distance while using slightly larger font sizes. It’s HARD to see, but a super-faint jelly effect still occurs at 120Hz at specific scrolling speeds near my eye-tracking speed limits. I use a high speed camera (using this test methodology) to figure out which is the lagged scanout edge, and intentionally hold the iPad so that the left edge is the most lagged edge. At this point, I suddenly start to faintly see the 120Hz jelly effect. This time, I don’t notice it if I am not paying attention: I really have to look hard for it and scroll fast at full outstretched arm extension. It is easier to see in horizontal ad banners, which start to skew slightly into vertical parallelograms at ultra fast scroll speeds while eye tracking. Unscientifically, I’d wager fewer than 1 in 100 of the people who see the jelly effect at 60Hz will see it at 120Hz, because it’s right at the threshold of human perception. But the fact that at least a few of us see it when intentionally creating the perfect storm means it’s not 100% solved, and we should someday go 240Hz when it is “free”. The perfect storm of jelly-effect amplifying factors occurs in only one specific screen orientation (upside-down AND perpendicular scanout), and while eye-tracking (during fast scrolling) certain jelly-effect-sensitive webpage objects such as wide, thin horizontal advertisement banners.
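The "scrolling twice as fast" remark follows from simple proportionality, sketched below in Python. The model is an assumption of mine: the edge-to-edge skew seen while eye-tracking is taken as scroll speed multiplied by the sweep time of one refresh. The function names and the 960 pixels/sec figure are hypothetical.

```python
def edge_to_edge_skew_px(scroll_px_per_sec: float, refresh_hz: float) -> float:
    """Offset between the first and last refreshed edge, in pixels, assuming
    the sweep takes one full refresh period (simplified model)."""
    return scroll_px_per_sec / refresh_hz


def scroll_speed_for_same_skew(scroll_at_base: float, base_hz: float,
                               target_hz: float) -> float:
    """Scroll speed needed at target_hz to match the skew seen at base_hz."""
    return scroll_at_base * target_hz / base_hz


BASE_SCROLL = 960.0  # hypothetical scroll speed in pixels/sec at 60Hz

for hz in (60.0, 120.0, 240.0):
    skew = edge_to_edge_skew_px(BASE_SCROLL, hz)
    needed = scroll_speed_for_same_skew(BASE_SCROLL, 60.0, hz)
    print(f"{hz:.0f}Hz: skew {skew:.1f} px at {BASE_SCROLL:.0f} px/sec, "
          f"needs {needed:.0f} px/sec to match the 60Hz skew")
```

In this model, doubling the refresh rate halves the skew at a given scroll speed, so matching the 60Hz skew at 120Hz takes double the scroll speed, which quickly runs into eye-tracking speed limits.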

I prefer option (1), going 120Hz or 240Hz, especially as it will eventually become almost “free” battery-wise and cost-wise (like retina screens now are). High Hz is very ergonomic for browser scrolling as long as it can be done without a significant power increase: 120Hz on sample-and-hold LCD/OLEDs halves scrolling motion blur, and 240Hz cuts it to 1/4. 240Hz is not just for games anymore. If 240Hz can eventually be made “free” cost-wise and power-wise, it should eventually be used instead of 120Hz. But for now, cost-wise, this is not the case, so 120Hz is a good starting point. It is becoming a high-end mainstream standard in consoles and many newer TVs, which will bring the cost of 120Hz down until it is as free/low-cost an add-on as 4K screens and retina screens.

I think I should begin to write a white paper about this. My comment here is the first-ever disclosure of this discovery of a “perfect storm of factors” combining to make the jelly effect more visible in normal LCD scanouts. If any researchers are reading this, please credit me for this discovery (I’m already credited in over 20 peer-reviewed papers). The Verge and iFixit indeed mentioned individual factors, but these aren’t enough by themselves: I discovered that it requires a perfect storm of factors (possibly coincidental / accidental). Any researchers working on this, contact me if you want to collaborate / co-author.

(Mark Rejhon — display researcher, inventor of TestUFO)

Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #3 of a Series

Statistical wrote: Simple solution would be two display drivers that drive the panel based on orientation. Ok that was tongue in cheek as it is a very not simple solution because it means the panel has to be wired to support lines from both edges, it adds a lot of extra traces, and you need to put a separate controller in the device. Still who knows with miniaturization it could be an economical solution someday.

Speaking as someone who works in the display industry, going 120Hz or 240Hz is actually cheaper than having two display drivers.

A faster scanout solves the human-visible jelly effect, while also adding ergonomic bonuses (reduced scrolling blur even for non-game apps).

There’s currently a push to reduce the power and cost penalty of 120Hz. There are now sub-$500 4K HDTVs with 120Hz built in, thanks to PlayStation and Xbox forcing the commoditization of 120Hz tech. I expect 120Hz to be commoditized much like retina screens.

Besides, on flicker-free sample-and-hold screens, 120Hz halves the LCD/OLED motion blur of 60Hz, and 240Hz quarters it, for the same scrolling velocity. Text scrolling blur at different frame rates can be demonstrated at http://www.testufo.com/framerates-text. Try this on a 120Hz or 240Hz desktop monitor and the ergonomic benefits become obvious.
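The halving and quartering can be checked with a back-of-envelope Python sketch. It assumes a full-persistence sample-and-hold display (no strobing), a frame rate equal to the refresh rate, and ignores pixel response time, so treat it as an approximation. `sample_and_hold_blur_px` and the 960 pixels/sec scroll speed are hypothetical.

```python
def sample_and_hold_blur_px(scroll_px_per_sec: float, refresh_hz: float) -> float:
    """Approximate eye-tracked motion blur width in pixels: each frame stays
    lit for a full refresh period while the eye keeps moving."""
    return scroll_px_per_sec / refresh_hz


SCROLL = 960.0  # hypothetical scroll speed in pixels/sec

blur = {hz: sample_and_hold_blur_px(SCROLL, hz) for hz in (60, 120, 240)}
for hz, width in blur.items():
    print(f"{hz}Hz: about {width:.0f} px of scrolling blur")
```

At this scroll speed the estimate goes from 16 pixels at 60Hz to 8 at 120Hz and 4 at 240Hz.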

The current internal industry expectation is for 120Hz to eventually become a “freebie” feature, much like 4K did. 4K used to be a $10,000 feature, but now you can buy sub-$300 RCA 4K HDTVs at Walmart or Costco.

Note to other readers: I’m not talking about yesteryear’s “fake” 120Hz by interpolation. Modern 120Hz means unique low-latency frames natively rendered by the original content, such as games or webpage scrolling / panning. It is also a myth that 120Hz and 240Hz are only useful for games. Even though 60Hz LCDs don’t flicker like yesteryear’s CRTs, screen motion blur (a mandatory side effect of flicker-free sample-and-hold displays) and jelly effects are major symptoms of a too-low refresh rate, a rate originally tied to AC frequencies for the direct drive of CRT electron-beam refresh.

Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #4 of a Series

60Hz screens still physically take 1/60sec to refresh from one edge to the other.

Higher resolutions simply mean the controller refreshes each row faster in order to sweep the screen from one edge to the other in the same 1/60sec. For Apple products, I discovered resolution has no meaningful effect on the jelly effect, because they just row-refresh faster to keep the same refresh interval from first through last pixel.

The jelly effect is caused by other reasons (see Post #1 and Post #2).
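A quick Python sketch of the row-timing point: more rows mean less time per row, but the same total sweep. The row counts are hypothetical examples, and the sketch assumes the sweep fills the entire refresh period (real panels also spend some time in blanking).

```python
def row_time_us(rows: int, refresh_hz: float = 60.0) -> float:
    """Time spent refreshing one row, in microseconds, assuming the sweep
    fills the whole refresh period."""
    return 1_000_000.0 / (refresh_hz * rows)


def sweep_time_ms(rows: int, refresh_hz: float = 60.0) -> float:
    """Total first-row-to-last-row sweep time in milliseconds."""
    return rows * row_time_us(rows, refresh_hz) / 1000.0


for rows in (1536, 2048):  # hypothetical row counts along the scan axis
    print(f"{rows} rows: {row_time_us(rows):.2f} us per row, "
          f"{sweep_time_ms(rows):.2f} ms per sweep")
```

The per-row time shrinks as the row count grows, while the edge-to-edge sweep stays at roughly 16.67 ms for 60Hz, which is why resolution alone does not change the skew.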

The real problem is the laws of physics, combined with a perfect storm of factors.

Not all pixels on a screen can refresh at the same time; a refresh proceeds from the first pixel to the last pixel of the screen.

Think of a screen as essentially a large RAM: it’s the same way you can’t write all bytes of a flash chip or DRAM chip simultaneously.

- In 1st screen rotation, screen refreshes top-to-bottom

- In 2nd screen rotation, screen refreshes left-to-right

- In 3rd screen rotation, screen refreshes bottom-to-top

- In 4th screen rotation, screen refreshes right-to-left
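The four rotations above can be written as a small lookup, shown here as a Python sketch. The ordering simply mirrors the list; which physical holding position corresponds to each entry is device-specific and is not something this sketch knows. `scan_direction` and `skews_vertical_scrolling` are hypothetical helper names.

```python
# Scan direction as seen by the viewer, indexed by rotation (0 = "1st").
SCAN_BY_ROTATION = (
    "top-to-bottom",
    "left-to-right",
    "bottom-to-top",
    "right-to-left",
)


def scan_direction(quarter_turns: int) -> str:
    """Viewer-relative scan direction after the given number of 90-degree turns."""
    return SCAN_BY_ROTATION[quarter_turns % 4]


def skews_vertical_scrolling(quarter_turns: int) -> bool:
    """True when the scanout is perpendicular to vertical scrolling, which is
    the condition under which the jelly effect can show up as skew."""
    return scan_direction(quarter_turns) in ("left-to-right", "right-to-left")


for turns in range(4):
    print(turns + 1, scan_direction(turns),
          "skew-prone" if skews_vertical_scrolling(turns) else "not skew-prone")
```

Only the two sideways scan directions are perpendicular to vertical scrolling, so only those two rotations are candidates for visible skew while reading a scrolling page.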

No non-strobed flicker-free LCD screen can do a global refresh (making all pixels of a frame visible simultaneously).

See high speed videos. A 60 Hz screen generally takes 1/60sec to refresh from the first pixel to the last pixel. This is the source of the skewing, especially when combined with the perfect storm of factors described in my Post #1 and Post #2.

TL;DR: The perfect storm of jelly-effect visibility occurs with

(1) screen orientation and default holding position (case design and home button location encourage holding the iPad in less jelly-effect-sensitive screen orientations),

(2) scanout along the shorter axis of the screen instead of the longer axis (a shorter scan distance means a slower 1/60sec sweep, in which the jelly effect is easier to see), and

(3) the edge of the screen where the scanout sweep begins (i.e. the beginning of text rows, where humans begin reading, becoming the most lagged).

When all these factors combine simultaneously, the jelly effect is the most visible.

All iPads refresh in the same synchronized way. It’s just that the above perfect storm of factors occurs on the Mini 6, as described in my first two posts. The Mini 6 refreshes along the short axis (sideways scan in portrait), and many people hold it in a way where the scan is right-to-left (causing the beginning of text rows to be most lagged).

The jelly effect occurs on all iPads but may not be visible to humans depending on circumstances (though the jelly effect behaviours can always be captured on any iPad with a 1000fps high speed camera). Even on the 120Hz iPads it is super-faintly visible to a very few people who are unusually sensitive, and it's always visible to a sufficiently high speed camera. It is just most amplified on the iPad Mini 6 due to the perfect storm of simultaneous factors.

Lumian wrote: Resolution is also an issue. The less Pixel Rows there are the quicker you get to the next one. And Pixel per Row also take up time to render out

60Hz screens refresh at the same physical velocity regardless of screen resolution.

binaryspiral wrote: Bump them all to 120Hz and problem solved.

This, this. Also, 120Hz will eventually become a near-“freebie” power-wise and cost-wise, much like 4K and retina did. This will happen incrementally as this decade progresses.

otso wrote: Can’t this be fixed with Vsync, https://en.wikipedia.org/wiki/Analog_te ... ronization ? I.e., redraw at the exact same frequency the whole screen is refreshed. Edit: wording

WXW wrote: No, the issue is not that the images sent aren't synchronized with the LCD refresh, but that LCDs like these don't draw all their pixels at once.

otso wrote: I’ll think more and harder, but I honestly still dont get it. Simplified: Render image 1, wait until all pixels have been drawn from image 1, render image 2, wait until all pixels have been drawn from image 2, etc.

I can confirm that iPad Mini 6 does exactly what you describe: Synchronized refresh.

The real problem is laws of physics, combined with a perfect storm of factors.

Not all pixels on a screen can refresh at the same time; the refresh proceeds from the first pixel to the last pixel of the screen.

Think of a screen as essentially a large RAM. In the same way, you can’t write all bytes of a flash chip or DRAM chip simultaneously.

- In 1st screen rotation, screen refreshes top-to-bottom

- In 2nd screen rotation, screen refreshes left-to-right

- In 3rd screen rotation, screen refreshes bottom-to-top

- In 4th screen rotation, screen refreshes right-to-left

No non-strobed flickerfree LCD screens can do global refresh (make all pixels of a frame visible simultaneously instantly).

See high speed videos. A 60 Hz screen generally takes 1/60sec to refresh the first pixel to the last pixel. This is the source of the skewing, especially when combined with a perfect storm of factors described in my Post #1 and Post #2.



Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #5 of a Series


OrangeCream wrote: Right now they update the screen as soon as the data is ready:

Update row 1; update image

Update row 2; update image

Update row 3; update image

Wrong. iPads don’t refresh that way.

In the linked page from my earlier post, I already have a 1000fps high speed video of an older iPad Mini (not easily prone to Jelly effect, they refresh faster along the long axis of the screen).

At the OS level, they render a whole frame THEN do a refresh sweep of the whole frame from the same buffer. Rinse and repeat 60 times a second (or 120 times a second). The GPU doesn’t re-render each pixel row in a beam-raced fashion (like an Atari). Apple uses full composited framebuffers like most modern OSes; they simply have excellent OS-level framerate=Hz synchronization.

I’m also a software developer and can confirm that the smooth scrolling is the art of a perfect VSYNC that perpetually keeps framerate=Hz (and Apple’s iOS implementation of a very low-latency screen compositor).

Smooth scrolling famous on Apple products is because of Apple’s famously perfect framerate=Hz sync (and excellent low-latency VSYNC ON). I agree with you that their software stack is pretty much a gold standard in silky-smooth scrolling.

No change has occurred to the excellent smooth scrolling on the Mini 6.

The jelly effect is also unrelated to how smooth the scrolling is.

The jelly effect is simply an add-on tilt on top of the existing super smooth scrolling. The jelly effect is like parallelogramming (of the whole screen) during fast scrolling, essentially a screen equivalent of a slow rolling camera shutter. Kind of like how a jelly sculpture tilts left/right when you shake/rock the plate horizontally. I’m not sure if you have seen it yet, but try http://www.testufo.com/scanskew on a larger screen such as a desktop 60 Hz LCD or television set; it shows what the jelly effect looks like.

Some old cameras with a rolling shutter (a slit in a rotating opaque wheel that “scans” along the unexposed film) showed this parallelogramming effect during fast motion such as racing:

On the iPad Mini 6, the jelly effect is when left/right edge looks higher than the opposite edge of the screen, creating the impression of slightly-diagonal lines of text or diagonal ad banners, when you scroll fast WHILE in portrait mode WHILE eye tracking the scrolling text WHILE within the speed of your eye-tracking abilities.

The text-row tilting angle is approximately 3 to 5 degrees on the Mini 6 at moderate scroll speeds that are easily eye-tracked.

The motion is still super smooth, just simply now has a tilt visible in it.
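For anyone who wants to sanity-check the tilt angle, here is a rough sketch of the geometry. It assumes the eye tracks the scroll perfectly and the sweep takes a full refresh interval. The 1488 px figure is the Mini 6's published portrait width, used here as an assumption, and the scroll speeds are illustrative values rather than measurements.

```python
import math


def skew_angle_degrees(scroll_px_per_sec: float, scan_px: float,
                       refresh_hz: float = 60.0) -> float:
    """Approximate tilt of a text row seen by an eye tracking the scroll.

    scan_px is how many pixels the scanout sweep crosses along the text row.
    """
    sweep_seconds = 1.0 / refresh_hz
    # Distance the tracked content travels while the sweep crosses the row.
    offset_px = scroll_px_per_sec * sweep_seconds
    return math.degrees(math.atan2(offset_px, scan_px))


# Assumed portrait width of 1488 px, hypothetical scroll speeds in px/sec.
slow_tilt = skew_angle_degrees(4700, 1488)
fast_tilt = skew_angle_degrees(7800, 1488)
tilt_at_120hz = skew_angle_degrees(4700, 1488, refresh_hz=120.0)
```

Under these assumptions, tilts of roughly 3 and 5 degrees correspond to scroll speeds in the neighbourhood of 4700 and 7800 px/sec, and doubling the refresh rate roughly halves the tilt for the same scroll speed.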


Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #6 of a Series


OrangeCream wrote: Am I misunderstanding?

Yes.

The jelly effect is simply a perfect storm of factors. See my earlier post, as well as Post #1 and Post #2.

I work in the display industry with credit/references in over 20 peer reviewed industry papers.

The tilt effect is from eye movement while the screen is mid-refresh. The frame is global, but the refresh is not global. To make the scan skew effect disappear, you need either time-relative sync’d global refresh (instant frame + instant refresh) or sync’d beam racing (beam raced GPU refresh of each pixel row in sync with screen scanout).

For photography, the photographic plate is stationary but the scenery is not static, so you’ve got a time-distortion along one photo axis, which is what creates the skew embedded in a photo. The skew actually disappears for the car (but the background begins skewing) if the rolling-shutter camera operator rotates the camera on the tripod to follow the moving object (e.g. race car) instead. Anything that moves relative to the camera film gets the skew.

For human eyes on a screen, the OS global frame render versus the time-sequential refresh of a display interacting with eye movement, is what creates the skew seen by eyes, as clearly demo’d in TestUFO scanskew.

...The skew disappears if your eyes are stationary, but the skew reappears if you track the bouncing UFO when scan direction is perpendicular to UFO bounce direction.

...The skew also disappears for global-refresh screens, e.g. DLP projectors. These are not quite global but essentially global: the DLP scanout is an ultrafast 1-bit refresh cycle scanout sweep in 1/1440sec, or 1/2880sec for the newest DLP chips.

Note: Most global refresh displays are still sequential-refresh, just super-fast or refreshing in the dark (before being flashed, like a strobe backlight). An example of super-fast scanout is consumer DLP chips, most of which run at 1-bit 1440 Hz. For example, 24 bits x 60Hz = 1440Hz of 1-bit (2-color) refresh cycles is needed to generate 24-bit 60Hz 60fps. DLP creates colors via temporal dithering of solely on/off primary colors. The DLP's ASIC/FPGA generates many 1-bit monochrome (aka 2-color) frames per signal refresh cycle. So there can be 24 DLP refresh cycles for every 1 signal refresh cycle, run in various colors (via color wheel or RGB LED flashing). Even for the slowest DLP chip, the scanout sweep of the whole chip takes only a fraction of a millisecond. Thus, it is essentially global refresh by all standards of human perception. Different image artifacts can occur for some humans from this temporal behavior (rainbow effects and/or temporal dither noise), but you never see any skewing on DLP.
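The DLP arithmetic above can be written out as a tiny sketch. It assumes every 1-bit frame gets an equal time slot, which is a simplification for illustration (real DLP bit-plane timing may differ), and the function names are made up for this post.

```python
def dlp_binary_rate_hz(bits_per_pixel: int, signal_hz: int) -> int:
    """1-bit refresh cycles per second needed, assuming equal-length bit frames."""
    return bits_per_pixel * signal_hz


def binary_frame_ms(binary_rate_hz: int) -> float:
    """Upper bound on one 1-bit scanout sweep, in milliseconds."""
    return 1000.0 / binary_rate_hz


rate = dlp_binary_rate_hz(24, 60)   # 24-bit color at a 60Hz signal
sweep_ms = binary_frame_ms(rate)    # each sweep must fit inside this slot
```

Each 1-bit sweep has to fit in well under a millisecond, compared with about 16.7 ms for a 60Hz LCD sweep, so any skew is far too small to notice.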


Re: Blur Busters Tech Explanation Of Jelly Effect on iPad Mini 6

Post #7 of a Series


aerogems wrote: Yes, because I'm not surprised you apparently didn't read my comment, or the comment I was responding to, where it was proposed the solution might be to add a second controller, one for each orientation, not simply move the controller from one side to the other. Which, of course, the OP recognizes is likely an unworkable solution from a business perspective, even if it's probably the cleanest from an engineering perspective.

That won't work. A different screen rotation orientation will just have the jelly effect.

mikeschr wrote: I have the previous mini and I mostly use it horizontally. It sounds like I should be seeing this effect, but I don't.

It becomes more visible on Mini 5 IF:

(A) you hold it landscape with home button at left edge; AND

(B) you eye-track the webpage while scrolling the page; AND

(C) you keep your eyes horizontally at roughly the middle; AND

(D) you scroll medium speed on high-contrast objects but don’t scroll faster than your reliable eye-tracking speed; AND

(E) Easier if you hold the iPad a little bit further back than usual (easier to see tilt in peripheral vision); AND

(F) Keep your finger held down & you bounce scroll (slide finger up and down repeatedly at moderate speeds);

Then the tilting effect becomes more visible on Mini 5 especially on horizontal advertisement banners & high-contrast wide photos, or high-contrast rows of text. It’s very, very slight (much fainter than Mini 6).

Mini 5 jelly effect is less pronounced as it’s harder to reproduce on the Mini 5 because of the need to intentionally do more of the above simultaneously, and also the fact that the scanout sweep is faster (the long axis of the screen)

An easier test is the TestUFO Scan Skew / Jelly Effect test which intentionally shows a bouncing UFO, to force your eyes to move around, and intentionally bounce-scrolls to allow easier comparison of two tilting extremes, for maximum amplification of the jelly effect of a screen.

hasib wrote: Other than that, I’m just trying to understand whether it is fair to beg the question of adding a second controller in the first place, regardless of “business perspective”.

It can be done, but LCD/OLED screens are giant lithographically-created integrated circuits with transistors and circuit lines.

TFT = Thin Film Transistor, so think of a screen as a giant RAM or flash chip. Each pixel has at least three transistors (one for each subpixel), sometimes more for improved pixel hold memory (e.g. ability to refresh 1Hz without needing repeat-refresh for low frame rate compensation). But there are also offscreen transistors at the edges to control row-column addressing.

Shift registers! Otherwise, we would need two pairs of unobtainium ribbon cables with 3840 and 2160 wires respectively for the horizontal/vertical addressors of a 3840x2160 screen. Fortunately, shift registers are part of the integrated circuit lithographed at the screen edge, directly onto the screen substrate. This keeps ribbon cables under control, reducing the number of circuit wires on that screen-edge ribbon cable.

So, yes, there are fully operating electronic circuits directly lithographed onto the screen glass, using the same litho process as the pixel transistors on TFT active matrix screens. Screens are sheer complexity, and not all logic electronics can be removed from the screen glass itself!
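As a toy illustration of the shift register idea (a simplified model, not a real gate-driver design; the class and function names are invented for this sketch), a single start pulse plus a clock can select rows one after another, instead of one wire per row:

```python
def direct_wire_count(columns: int, rows: int) -> int:
    """Wires needed if every column and every row had its own external wire."""
    return columns + rows


class RowShiftRegister:
    """Toy row selector: one 'hot' token moves down one row per clock tick."""

    def __init__(self, rows: int) -> None:
        self.rows = rows
        self.active = None  # no row selected until a start pulse arrives

    def start_pulse(self) -> None:
        self.active = 0

    def clock(self) -> None:
        if self.active is not None:
            self.active += 1
            if self.active >= self.rows:
                self.active = None  # token has left the last row


def scan_order(rows: int) -> list:
    """Rows visited by one sweep, driven only by a start pulse and a clock."""
    register = RowShiftRegister(rows)
    register.start_pulse()
    order = []
    while register.active is not None:
        order.append(register.active)
        register.clock()
    return order


wires_without_registers = direct_wire_count(3840, 2160)
sweep = scan_order(4)
```

The toy model also hints at why the scan direction is baked in: the token only shifts one way unless extra on-glass logic is added to reverse it or to scan along the other axis.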

As you now realize, row-column addressor electronics are mandatorily partially integrated in the edge of a screen, so adding ability to do horizontal and vertical refresh sweeps also make a screen a little more bulky (larger bezels).

It also adds lithographic layers to the screen surface too, due to more over/under circuit paths on the screen glass, for the additional on-glass display refreshing addressors and the addressor-method switching logic (supplemental on-glass circuits to change scanout direction in realtime).

Not to mention more programming/design for the FPGA/ASIC of the scaler/TCON (the main chip attached by ribbon cable to screen edge). That increases design delivery time.

It’s cheaper to increase the screen refresh rate anyway. Most 60Hz LCDs only require minor integrated-circuit-on-glass modifications to be easily upclocked to 120Hz, provided the LCD pixel response is sufficiently fast and the row refresh speed can be doubled without screen artifacts with the same integrated electronics designs. Most 60Hz LCD glass can be overclocked to 120Hz, albeit with artifacts, especially if the scaler/TCON is rudimentary or is replaced with a DIY FPGA (like Zisworks did for the experimental 540p 480Hz on a cheap Chinese 4K120Hz LCD). A cheap 60Hz LCD successfully overclocked to 180Hz had severe compromises such as slower pixel response (not enough power in 1/3rd the time to refresh the pixel fully) and streaking effects.

As alluded to earlier, the compromises of 120Hz are now becoming more and more cheaply solvable. The limiting factor used to be pixel response (and power to a lesser extent), but pixel response is nowadays fast enough to make 120Hz usable. And the 120Hz penalty is using a smaller and smaller % of battery, as the continued efficiency improvement of retina screens has also shown. Once the commoditization weak links disappear, 120Hz will slowly become an eventual freebie feature (much like 4K and retina screens) as this decade progresses, without many compromises. The boom of native 120Hz tech (new PlayStation, new XBox, new iPhone, new Galaxy) is pushing a slow commoditization of 120Hz inclusion in screens.


Jelly Effect on iPad Mini 6 (Blur Busters Technological Explanation)

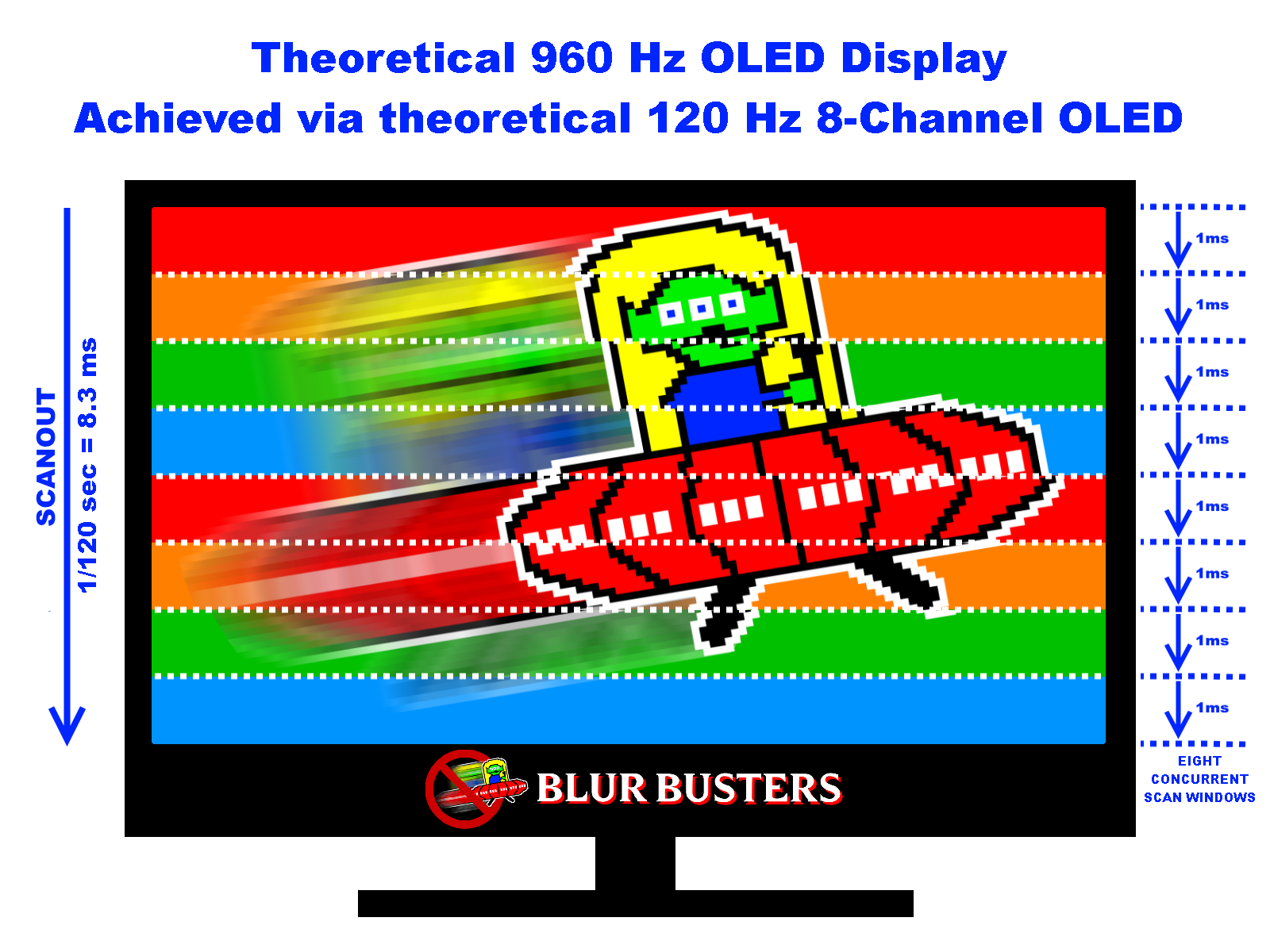

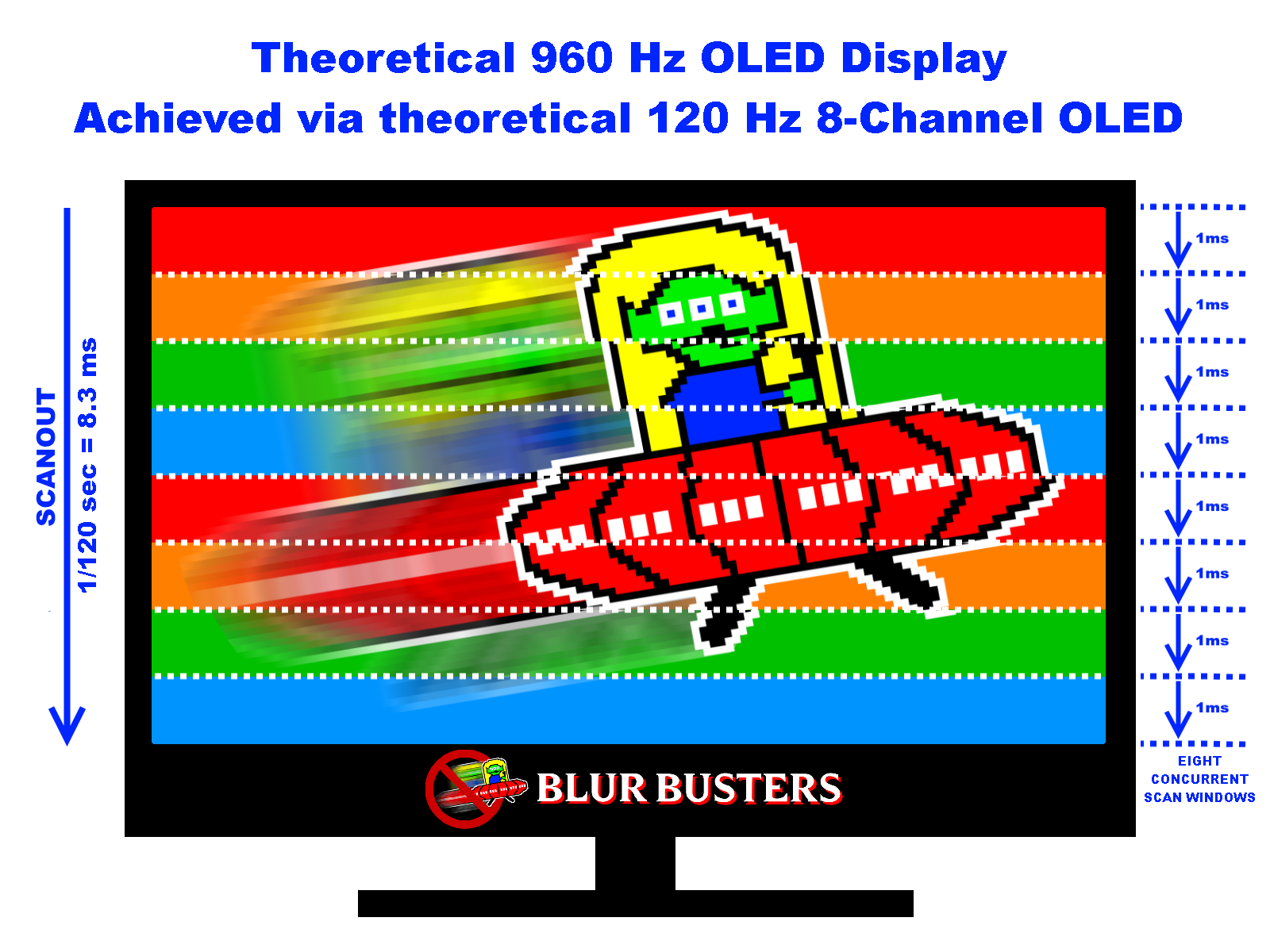

Post #8 of a Series

Concurrent scanouts can worsen other kinds of artifacts or add saw-toothing. There’s a good late 90s paper by Mr. Poyton, from tests on LED marquee refreshing behaviours. See, he knew about scanout skewing in the 1990s! It shows some side effects of subdividing the scanouts. There’s a tearing artifact between the subdivided screens. It has to be one continuous sweep of the same frame.

This is an artifact on a LED billboard that refreshes top/bottom halves concurrently.

It’s like two scanskewed screens flush edge-to-edge.

Artifacts occur from any form of temporally subdividing refresh behaviors:

(For best effect, do the TestUFO animations on a desktop monitor)

Tearing artifact is because of a large temporal difference between adjacent pixel rows. For one form of software tearing (VSYNC OFF), it’s a new frame with its respective sudden increase in game clock, essentially spliced mid-scanout on the GPU transmission (signal / cable) that is not synchronized between refresh cycles — see tearing diagram created by 432 frames per second on 144 Hz. For one form of hardware tearing, it’s a sudden temporal difference in pixel refreshing between adjacent rows.

For all forms of tearing (software side and hardware-design side) it is caused by the large time interval (of the render or of the refresh) in the pixel rows above/below the tearline. So it is a big problem in concurrent scanouts (i.e. treating the screen as multiple subdivided screens) unless mitigated.

Regardless, even if tearing is fixed — concurrent scanouts never help scan skewing, as concurrent scanouts don’t speed up the scanout sweep. In fact, it can make scanout skewing worse. Concurrent scanouts of a 60Hz screen means the scanout sweep would be 1/30sec (1/60sec for top half, then cascaded to bottom half for another 1/60sec, for a grand total of 1/30sec in a slower scanout sweep). In other words, multiscanning can worsen scanskew because you’re reducing the global top-to-bottom scanout sweep velocity via the narrower-height stacked equivalents of multiple screens.

To understand better how scanout subdivision creates slower scanouts:

Although each subdivided screen slice (1/8th height) is scanned-out in 1/960sec, the 8 slices means a total top-to-bottom global sweep is 1/120sec instead of 1/960sec. So scanout subdivision is mainly useful for future ultra high Hz screens made of modular technology (like an array of many tiny screens). Jelly effect would be super-nasty for 60Hz and 120Hz screens doing concurrent scanouts.
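A quick sketch of the cascaded-sweep arithmetic, using the two cases mentioned in this post. It assumes the slices scan out back-to-back, so the global sweep is just the sum of the slice scan times; the function name is made up for illustration.

```python
from fractions import Fraction


def cascaded_sweep_time(slices: int, slice_scan_time: Fraction) -> Fraction:
    """Global first-to-last sweep time when slices scan one after another."""
    return slices * slice_scan_time


# Two halves, each scanned in 1/60sec.
two_halves = cascaded_sweep_time(2, Fraction(1, 60))

# Eight slices, each scanned in 1/960sec.
eight_slices = cascaded_sweep_time(8, Fraction(1, 960))
```

The halves case gives a 1/30sec global sweep and the eight-slice case gives 1/120sec, so the subdivided sweep is always slower than the per-slice figure suggests.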

Some screens necessarily use subdivided mini-screens (e.g. modular LED screens like Jumbotrons), and many of those LED JumboTron/Daktronics screen modules concurrently refresh at 600Hz-1920Hz (repeating a 60Hz refresh cycle between 10 and 32 times) as a skew/tearing/sawtoothing solution.

The modular “equivalent of many stitched-together tiny screens refreshing simultaneously” nature of giant LED screens can also in theory be commandeered to create a native 600Hz-1920Hz frame rate + refresh rate as a motion-blur-elimination method (brute Hz to create low-persistence sample-and-hold display) with some relatively minor modifications to the electronics — for ultra high refresh rate giant screens in the future. So the modularity actually helps the technological work-subdivision necessary to make ultra high refresh rates possible in a non-unobtainium way.

Multiscanning is a known shortcut to achieving retina refresh rates using today’s technology. Retina refresh rates (where the curve of diminishing returns flattens and further Hz no longer benefits humans) are scientifically well in excess of 1000fps 1000Hz for a wide-FOV high resolution sample-and-hold display. This is due to the Vicious Cycle Effect: higher resolutions and wider FOV amplify refresh rate limitations (motion blur effects, stroboscopic stepping effects, or other motion artifacts generated from a finite refresh rate, as demonstrated by TestUFO Persistence, which only stops looking horizontally pixellated at ultra high refresh rates). This is well known in VR, but also applies to FOV-filling wall sized physical screens, some of which also happen to be built modular, like many tiny screens running concurrent scanouts.

Global refresh displays in the past still required sequential behaviours (e.g. multiple fast low-color-precision scanouts like plasma/DLP, or things like refreshing/priming pixels in the dark before a delayed screen illumination pulse). So all past low-Hz global refresh screens always have worse lag and/or worse artifacts than good LCD screens.

Metaphorical technological thought exercise: If a screen truly refreshed globally and instantly without lag, then it would be fully idling between refresh cycles. So ask oneself, why is the screen 60Hz instead of a higher refresh rate such as 120Hz? In other words, why not put an idling screen to more work doing more refresh cycles? A rhetorical question to ask oneself. Modern color processing on GPUs can reach thousands of framebuffers per second nowadays, even on a midrange desktop GPU, for 2D graphics such as scrolling or panning. Even modern Apple/Samsung mobile GPUs can now browser-scroll at a few hundred composited framebuffers per second, and still only hit 50% GPU utilization (for scrolling overhead) on a retina screen for simple scrolling of render-light content like static web pages. The point being, there are no zero-lag global refresh displays. Otherwise, we’d be milking the extra Hz sooner and more cheaply. The only true zero-lag displays are displays that stream the signal directly onto the screen like a CRT in a fully raster synchronized manner, and that’s never a global refresh. Global refresh displays historically always had more lag than even a 60Hz CRT or a modern low-lag fast-GtG 60Hz LCD.

High Hz single scanout is now ultimately the holy grail method of approaching global refresh in a compromise-free way (low lag, and very fast scanouts that behave as de facto global refresh). Combining global refresh and low/zero lag requires a display to do all of its processing during the practically global refresh. So the screen is effectively idling between refresh cycles. At that stage, it’s almost no extra cost to allow the display to at least optionally accept more refresh cycles. Naturally, the converse is also true: If you’ve already designed a high-Hz single-scanout screen, it’s already de facto closer to a low/zero-lag global refresh.

Currently, the most scanout-motion-artifact-free technologies (ignoring ghosting differences) are high refresh rate LCD, OLED, LED, MiniLED and MicroLED displays that do a single-pass scanout per refresh cycle.

OrangeCream wrote: Am I misunderstanding?

mdrejhon wrote: Yes. The jelly effect is simply a perfect storm of factors. See my earlier post, as well as Post #1 and Post #2.

I work in the display industry with credit/references in over 20 peer reviewed industry papers.

The tilt effect is from eye movement while the screen is mid-refresh. The frame is global, but the refresh is not global. To make the scan skew effect disappear, you need either a time-relative sync’d global refresh (instant frame + instant refresh) or sync’d beam racing (beam raced GPU refresh of each pixel row in sync with screen scanout).

Graham J wrote: Makes sense. I wonder, what would it look like if you rendered the first half of the frame scanout from a delayed buffer? So on each refresh you scan out half the frame from the delay buffer, then the other half from the current buffer, then copy the current buffer into the delay buffer. Would that halve the effect?

No, it would not fix scan skewing.

Concurrent scanouts can worsen other kinds of artifacts or add saw-toothing. There’s a good late-90s paper by Mr. Poynton, from tests on LED marquee refreshing behaviours. See, he knew about scanout skewing in the 1990s! It shows some side effects of subdividing the scanouts: there’s a tearing artifact between the subdivided screens. To avoid that, the refresh has to be one continuous sweep of the same frame.

This is an artifact on a LED billboard that refreshes top/bottom halves concurrently.

It’s like two scanskewed screens flush edge-to-edge.

Artifacts occur from any form of temporally subdividing refresh behaviors:

(For best effect, do the TestUFO animations on a desktop monitor)

- Slow scan-out sweep = tilting effect (Demo: TestUFO Scan Skew)

- Interlacing = combing / venetian blinds effect (Demo: TestUFO Interlace Simulation)

- DLP color wheel = rainbow effect (Demo: TestUFO Rainbow Effect)

(Epilepsy warning: Flicker for TestUFO on 60Hz screen. DLP color wheels run at 240-360+ color flashes per second)

- Temporal dithering = noise and contouring artifacts (DLP & plasma)

- Concurrent scanouts = sawtoothing with stationary tearing artifact at scanout boundaries (like multiple misaligned scan-skewed screens)

A tearing artifact comes from a large temporal difference between adjacent pixel rows. For one form of software tearing (VSYNC OFF), a new frame, with its correspondingly sudden jump in game clock, is essentially spliced mid-scanout into the GPU transmission (signal / cable), which is not synchronized to refresh cycles; see the tearing diagram created by 432 frames per second on 144 Hz. For one form of hardware tearing, it’s a sudden temporal difference in pixel refreshing between adjacent rows.

All forms of tearing (software side and hardware-design side) are caused by a large time difference (of the render or of the refresh) between the pixel rows above and below the tearline. So it is a big problem in concurrent scanouts (i.e. treating the screen as multiple subdivided screens) unless mitigated.
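To put a rough number on that "time difference between adjacent rows", here is a minimal sketch. It assumes a simplified model that is my own illustration, not a measurement of any real panel: a hypothetical 1000-row screen at 60Hz, where every slice starts scanning at the same instant and takes the full refresh interval to sweep its own rows. The names `row_refresh_times` and `largest_adjacent_gap` are made up for this example.

```python
from fractions import Fraction

def row_refresh_times(rows, sweep_time, segments=1):
    """Time offset (seconds) at which each pixel row gets refreshed.

    Simplified model (an assumption, not a real panel): the screen is cut
    into `segments` equal-height slices, every slice starts scanning at
    t=0, and each slice takes `sweep_time` to sweep through its own rows.
    segments=1 is an ordinary single-pass top-to-bottom scanout.
    """
    rows_per_segment = rows // segments
    return [Fraction(row % rows_per_segment, rows_per_segment) * sweep_time
            for row in range(rows)]

def largest_adjacent_gap(times):
    """Largest refresh-time difference between two neighbouring rows."""
    return max(abs(later - earlier) for earlier, later in zip(times, times[1:]))

ROWS = 1000                 # hypothetical panel height in pixel rows
REFRESH = Fraction(1, 60)   # one 60Hz refresh interval, in seconds

single_sweep = row_refresh_times(ROWS, REFRESH, segments=1)
two_halves = row_refresh_times(ROWS, REFRESH, segments=2)

for name, times in (("single sweep", single_sweep), ("two halves", two_halves)):
    gap_ms = float(largest_adjacent_gap(times)) * 1000
    print(f"{name}: largest gap between adjacent rows = {gap_ms:.3f} ms")
```

In this toy model, the single sweep never has more than a tiny fraction of a millisecond between neighbouring rows, while the two-halves case has almost a full refresh interval between the last row of the top half and the first row of the bottom half. That boundary is where the stationary tearline sits.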

Regardless, even if tearing is fixed, concurrent scanouts never help scan skewing, because concurrent scanouts don’t speed up the scanout sweep. In fact, they can make scan skewing worse. Concurrent scanouts on a 60Hz screen mean the scanout sweep would take 1/30sec (1/60sec for the top half, then cascaded to the bottom half for another 1/60sec, for a grand total of 1/30sec in a slower scanout sweep). In other words, multiscanning can worsen scan skew because you’re reducing the global top-to-bottom scanout sweep velocity via the narrower-height stacked equivalents of multiple screens.

To understand better how scanout subdivision creates slower scanouts, consider a screen subdivided into 8 slices:

Although each subdivided screen slice (1/8th height) is scanned out in 1/960sec, the 8 slices mean a total top-to-bottom global sweep takes 1/120sec instead of 1/960sec. So scanout subdivision is mainly useful for future ultra high Hz screens made of modular technology (like an array of many tiny screens). Jelly effect would be super-nasty for 60Hz and 120Hz screens doing concurrent scanouts.
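As a quick sanity check of the two sweep-time examples above, here is a tiny sketch of the arithmetic. The function name `total_sweep_time` is just for illustration, and it assumes the simple cascaded case where the sweep passes through each slice one after another.

```python
from fractions import Fraction

def total_sweep_time(slices, per_slice_time):
    """Top-to-bottom sweep time when the sweep cascades through the slices.

    Assumes equal-height slices that each take `per_slice_time` seconds,
    one after another (the cascaded case described in the text).
    """
    return slices * per_slice_time

# 60Hz screen split into top/bottom halves, 1/60sec per half.
two_halves = total_sweep_time(2, Fraction(1, 60))
# 8 slices of 1/8th height, each scanned out in 1/960sec.
eight_slices = total_sweep_time(8, Fraction(1, 960))

print(f"2 halves at 1/60sec each  -> sweep takes {two_halves} sec")
print(f"8 slices at 1/960sec each -> sweep takes {eight_slices} sec")
```

Using `Fraction` keeps the results as exact fractions of a second, so they can be compared directly against the 1/30sec and 1/120sec figures.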

Some screens necessarily use subdivided mini-screens (e.g. modular LED screens like Jumbotrons), and many of those LED Jumbotron/Daktronics screen modules concurrently refresh at 600Hz-1920Hz (repeating each 60Hz refresh cycle between 10 and 32 times) as a skew/tearing/sawtoothing solution.
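The "10 to 32 times" figure is just the module refresh rate divided by the content refresh rate. A minimal sketch of that division, with hypothetical function names and the 60Hz content rate taken from the text:

```python
def passes_per_content_frame(module_refresh_hz, content_hz=60):
    """How many times a module repeats one content refresh cycle."""
    return module_refresh_hz // content_hz

def pass_duration_ms(module_refresh_hz):
    """Duration of one module refresh pass, in milliseconds."""
    return 1000 / module_refresh_hz

for module_hz in (600, 1920):
    print(f"{module_hz}Hz module: {passes_per_content_frame(module_hz)} passes "
          f"per 60Hz frame, ~{pass_duration_ms(module_hz):.2f} ms per pass")
```

Each pass is short enough that the time difference across a module boundary becomes a small fraction of the 60Hz refresh interval.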

The modular “equivalent of many stitched-together tiny screens refreshing simultaneously” nature of giant LED screens can also in theory be commandeered to create a native 600Hz-1920Hz frame rate + refresh rate as a motion-blur-elimination method (brute Hz to create low-persistence sample-and-hold display) with some relatively minor modifications to the electronics — for ultra high refresh rate giant screens in the future. So the modularity actually helps the technological work-subdivision necessary to make ultra high refresh rates possible in a non-unobtainium way.

Multiscanning is a known shortcut to achieving retina refresh rates using today’s technology. Retina refresh rates (the point where the curve of diminishing returns from more Hz stops yielding human-visible benefits) are scientifically well in excess of 1000fps at 1000Hz for a wide-FOV high-resolution sample-and-hold display. This is due to the Vicious Cycle Effect, where higher resolutions and wider FOV amplify refresh rate limitations (motion blur effects, stroboscopic stepping effects, or other motion artifacts generated by a finite refresh rate, as demonstrated by TestUFO Persistence, which only stops looking horizontally pixellated at ultra high refresh rates). This is well known in VR, but it also applies to FOV-filling wall-sized physical screens, some of which also happen to be built modular, like many tiny screens running concurrent scanouts.
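To give a feel for why higher resolution pushes the needed Hz upward, here is a rough sketch. It rests on simplifying assumptions of mine: a sample-and-hold display with framerate=Hz, instant pixel response, an eye that smoothly tracks the motion, and a hypothetical object crossing one full screen width per second. Under those assumptions, blur in pixels is roughly motion speed divided by refresh rate. The function names are made up for this example.

```python
def persistence_blur_px(speed_px_per_s, refresh_hz):
    """Approximate eye-tracked motion blur (pixels) on sample-and-hold.

    Assumes framerate == refresh rate, each frame held for the whole
    refresh interval, and instant pixel response (a simplification).
    """
    return speed_px_per_s / refresh_hz

def hz_for_blur(speed_px_per_s, max_blur_px=1.0):
    """Refresh rate needed to keep blur at or under `max_blur_px`."""
    return speed_px_per_s / max_blur_px

# Hypothetical motion: one screen width per second, at three screen widths.
for width_px in (1920, 3840, 7680):
    blur_60 = persistence_blur_px(width_px, 60)
    needed = hz_for_blur(width_px)
    print(f"{width_px} px wide: ~{blur_60:.0f} px blur at 60Hz, "
          f"~{needed:.0f}Hz for ~1 px blur")
```

The same physical motion covers more pixels per second on a higher-resolution screen, so the refresh rate needed for a given blur budget climbs with resolution.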

Currently, the most scanout-motion-artifact-free technologies (ignoring ghosting differences) are high refresh rate LCD, OLED, LED, MiniLED and MicroLED displays that do a single-pass scanout per refresh cycle:

- Pixels refresh directly to final pixel color with no temporal tricks. Fixes rainbows/noise/contouring artifacts.

- Refreshing uses only one scanout sweep for minimum temporal difference between adjacent pixels, while also synchronizing framerate=Hz. Fixes tearing artifacts.

- High refresh rates mean an ultrafast scanout sweep (from the panel’s shorter refresh interval capability). Fixes scan skewing (jelly effect), even for worst jelly effect visibility conditions.
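As a rough illustration of why a faster scanout sweep shrinks the jelly effect, here is a small sketch. It assumes (my simplification) that the sweep takes the whole refresh interval, that the eye tracks a scroll moving perpendicular to the scan direction, and a hypothetical scroll speed of 2000 pixels/sec. The function name `scan_skew_px` is made up for this example.

```python
from fractions import Fraction

def scan_skew_px(scroll_speed_px_per_s, refresh_hz):
    """Approximate skew (pixels) between the first and last scanned row.

    Assumes the scanout sweep lasts the whole refresh interval
    (1/refresh_hz) and the scroll is perpendicular to the scan direction.
    """
    sweep_time = Fraction(1, refresh_hz)
    return scroll_speed_px_per_s * sweep_time

SCROLL_SPEED = 2000  # hypothetical scroll speed, pixels per second

for hz in (60, 120, 240):
    skew = float(scan_skew_px(SCROLL_SPEED, hz))
    print(f"{hz:>3}Hz: ~{skew:.1f} px of skew across the sweep")
```

Doubling the refresh rate halves the sweep time in this model, so the skew across the screen halves too.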
