05/22/2020 UPDATE. The IPS 240Hz monitor tier list (I've measured/tried them all) and my honest explanation why.

Re: 04/18/2020. The IPS 240Hz monitor tier list (I've measured/tried them all) and my honest explanation why.

So, hypothetically, let's say I'm in the market for a 240Hz monitor. I currently have an S2719DGF that I was still going to possibly use as a secondary monitor for work-related things and AAA games that may struggle to reach higher frame rates with a 2080. Let's also say I've read nearly all 10 pages of this thread and have come to the conclusion that I'm leaning towards the MAG251RX or the XG270. I've considered both the AW2521HF (for its ergonomics alone) and the Omen X 27 (but this isn't IPS, nor does it really compete with the monitors I've listed; I really wanted to just buy it and be done with it, but I think I'll wait for the second generation of 1440p 240Hz monitors when the next GPU generation comes out). I sit about 2-2.5 feet away from my monitor. My vision is also a bit compromised due to amblyopia in one eye. I can say I didn't really enjoy gaming on a 24-inch MG249Q when I had one years ago, and then I had an S2417DG that I ended up selling after a year and buying an S2719DGF, since I enjoyed the size a lot more.

Common things I've noted about the MAG251RX:

-10 bit (though I believe it's 8 bit + FRC correct?)

-25 inches

-HDR support (even though it's considered pointless if you're using this for competitive gaming, correct?)

-Best overdrive

-Horrible stand

-Tacky gamer aesthetics

-Cheaper

XG270:

-27 inches (I guess many don't like using 27 inches for competitive gaming?)

-Great stand

-Pricier

-Best strobing

I had a friend previously purchase the MAG251RX and return it, since he said he couldn't get used to the poor image quality he felt it had, which is what's making me hesitant about ordering it over the XG270. My main issue with the XG270 is the size: since I sit about 2 feet or so from my monitor, I think image quality may be compromised a bit.

So, considering all of this, and let's say these monitors are going to be used for CSGO, Valorant, CoD, Apex, and possibly Destiny 2, which should I consider leaning towards more?


RLCSContender*

- Posts: 541

- Joined: 13 Jan 2021, 22:49


The QZ is not yet listed here:

https://www.nvidia.com/en-us/geforce/pr ... ors/specs/

But let's be real here. Without the G-SYNC chip, there won't be variable overdrive (even if they say so). Software-emulated variable overdrive can approximate it, but it's simply not the same. I own a G-SYNC monitor, and I can attest to this.

I don't really strobe, and I'm waiting for the 25-inch version of the XG270. I wouldn't say it's "fast"; it's modest, but its strong point isn't GtG response times, it's the motion blur reduction, which is the best. I usually turn off any form of adaptive sync and strobing, since at 240Hz gameplay everything is crystal clear anyway, and strobing at that high of a refresh rate is, in my opinion, redundant (another reason why I chose the MSI over the ELMB-SYNC ASUS).

- Chief Blur Buster

- Site Admin

- Posts: 12056

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


AddictFPS wrote: ↑26 Apr 2020, 14:07
Read my post again: I did not say the XG270 has a G-SYNC module. I did not say the XG270 has VRR automatic variable overdrive. I just said that in strobe mode on the XG270, across the whole range of fixed strobe frequencies, the overdrive is automatically varied and fine-tuned by Blur Busters. So, no matter which frequency from 75 to 240 Hz the user manually selects, the OD is automatically set to the correct value by the firmware; this is why OD is locked in the OSD when strobe is on.

This is correct, with a caveat:

I would rather say “A new strobe-optimized overdrive configuration is automatically set when the strobed refresh rate is changed”. It’s automatically configured but not continuously dynamic (unlike NVIDIA’s native G-SYNC implementations, where overdrive continuously and automatically syncs to frametimes, with frametime = refreshtime).

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on: BlueSky | Twitter | Facebook

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

- Chief Blur Buster

- Site Admin

- Posts: 12056

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Also crossposting to this thread for general reader education purposes, as a pre-emptive move to maintain cordial pursuit camera discourse. Any escalation about pursuit camera needs to be tempered to protect the quality & reputation of Blur Busters testing inventions. Appreciated.

RLCScontender wrote: ↑25 Apr 2020, 23:31
But my pursuit camera is the most accurate since I got it DIRECTLY straight from the monitor myself.

However, it is subject to interpretation.

Pursuit camera work is amazing to execute -- but:

I would not say "truth" or "lie" because everybody sees differently, including seeing the photo.

Elements from camera-adjustment issues (on how things are accurately photographed, to following pursuit instructions) to vision behavior differences between humans (on seeing results of photo), even for well-intentioned photographs, means it can become flamebait to use the word "truth" or "lie" -- and that includes you.

Science papers or research papers buttress the truth (as can a well-taken pursuit photo from a good camera) but don't self-use the words "truth" or "lie" for many obvious reasons like these.

Pursuit photos are a great show and tell. The WYSIWYG is best-effort and hard to make perfect given the differences between display and camera technology, camera technique, human eyes on the original display, and human eyes on the resulting display. Often the photographed result shown on the same display is more accurate than the photographed result shown on a different display. AND on top of that, different human visions can impart different gamma behaviours (e.g. can't see the dim colors or dim ghosts as well as the next human), whether looking at the original motion or the photographed motion (which goes through camera distortion and third-party-monitor distortion).

So there are multiple weak links as a Fair Disclosure.

- Camera used (and flaws within)

- Photography technique (and flaws within)

- Whether the photo is retouched (including 'white hat' retouchings like a post-shoot exposure compensation)

- How the remote computer displays the photo (different photo viewers/browsers can show the same photo slightly differently)

- How the remote display shows the photo (by that computer)

- How the remote person sees the resulting photo (on that monitor)

Yes, even the last bullet: the remote human seeing the resulting photo on a different monitor. Even a WYSIWYG printout of a Word document looks different to a different human. For example, a dyslexic person sometimes sees continually jumbling text, or you may see distorted color (there are multiple kinds of partial color blindness), or you have focusing or astigmatism issues (need glasses), or you have motion blindness (akinetopsia), or another condition, partial or full-fledged, diagnosed or undiagnosed. Even outside these silos/umbrellas, different eyes and/or human brains add different kinds of weird noise and/or distortions to what they are seeing. This can make them unusually sensitive to one thing (tearing or blurring or coronas or stutter or color etc) and unusually insensitive to other aspects (tearing or blurring or coronas or stutter or color etc). There can be a preference aspect, but there can also be an eye/brain limitation aspect too. Likewise, a different set of human eyes can see something totally different in a resulting photo even if it's a perfect photo. TL;DR: Different humans see differently.

The fact is person A may prefer monitor 1 over 2 (Genuinely seen both). And person B may prefer monitor 2 over 1 (Genuinely seen both). Even without these photos!

Also -- even in simpler contexts -- for example -- it even applies to web page design. Look at the sides of the forum in a desktop browser. The black checkerboard-flag background on the left/right of the forum sidebar is much brighter on some monitors, and totally black background on different monitors or different human eyes. And monitors can have different gamma. And camera settings can also distort the gamma. And distorted yet again when that same photo is displayed on a different monitor. Etc.

Scientific/researcher discourse needs to be tempered/nuanced by the acknowledgement of the limitations of testing. We can do our best to perfect it (e.g. purchase a camera like a Sony Alpha a6XXX series, teach oneself an accurate manual technique, use a camera rail) so that our camera and technique have minimal error factors, and the photograph is relatively accurate. But it can never be a perfect photon-to-photon record given different displays, computers, humans, etc.

Pursuit camera photos are the best-ever invented way to easily photograph display motion blur.

But results often have to be interpreted through the lens of all of these limitations and error factors (camera-wise, camera-technique-wise and vision-wise). This is generally why I don't want any polar arguments about truth-or-lie. That only manufactures flamebait accidentally (even if not intentional). The wording, the bolding, and the all-caps thus create an opening for disagreements, under the lens of display research discourse. In other words, keep an open mind when writing your words.

Pursuit camera photos have known challenges just like measuring GtG, even though pursuit camera has greatly simplified the show-and-tell. But there are innate limitations on how it's reliably communicated.

Pursuit camera is the most perfect way to do it, but still necessarily imperfect (humankind-wise).

Appreciated!


Dirty Scrubz

- Posts: 193

- Joined: 16 Jan 2020, 04:52


RLCScontender wrote: ↑26 Apr 2020, 17:58
But let's be real here. Without the G-SYNC chip, there won't be variable overdrive (even if they say so). Software-emulated variable overdrive can approximate it, but it's simply not the same. I own a G-SYNC monitor, and I can attest to this.

I want to point out that this isn't entirely true. FreeSync monitors that can use G-SYNC don't run software emulation (G-SYNC is just NVIDIA's name for VRR); it's done via the scaler, and it's an open-standard VRR implementation. Some G-SYNC chips can give slightly better input lag, but there are many FreeSync displays that are better, with no input lag, and they don't need a G-SYNC chip to drive up the cost.

- Chief Blur Buster

- Site Admin

- Posts: 12056

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


<Technical Overdrive Rabbit Hole>

It's not easy to explain VRR overdrive in layman's terms.

Technically, the best method of OD is a lookup table. Ideally a 256x256 LUT for 8-bit panels. A simple formula of A(B) = C, where variables A = original greyscale value, B = destination greyscale value, C = intentional overshoot pixel color to use to accelerate GtG transition from A to B, and used three times per pixel (LUT pass for red channel, green channel, blue channel).
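For readers who think better in code, here is a minimal Python sketch of the per-channel lookup described above. The table contents are made up for illustration (a real OD LUT is measured per panel at the factory); `build_toy_lut`, `overdrive_pixel` and the `gain` number are hypothetical names and values, not anything from a real monitor firmware.

```python
def build_toy_lut(gain=0.3, levels=256):
    """Build a toy 256x256 table where lut[A][B] = C.

    A = original grey level, B = destination grey level,
    C = value actually sent to the panel. The formula below is an
    illustrative assumption: push past B in the direction of travel.
    """
    lut = []
    for a in range(levels):
        row = []
        for b in range(levels):
            c = b + gain * (b - a)            # overshoot along A -> B
            row.append(max(0, min(levels - 1, round(c))))  # stay in 0..255
        lut.append(row)
    return lut


def overdrive_pixel(lut, prev_rgb, next_rgb):
    """Apply the same LUT three times: once per R, G, B channel."""
    return tuple(lut[a][b] for a, b in zip(prev_rgb, next_rgb))


lut = build_toy_lut()
# Red rises 0 -> 128, green is unchanged, blue falls 255 -> 0.
print(overdrive_pixel(lut, (0, 128, 255), (128, 128, 0)))
```

Note how the unchanged green channel passes straight through, and how the falling blue channel has no room to undershoot below 0, which is the "no voltage room below black" limit in miniature.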

If the right LUT overshoot pixel value is used, the pixels never physically overshoot; they simply transition faster to B. But goldilocks is extremely hard: it's often too cold (ghosty) or too hot (coronas). Some monitors use smaller overdrive LUTs (e.g. 9x9, 17x17, 65x65) and interpolate the rest of the way. An Overdrive Gain value is a biasing/multiplier factor for the LUT to amplify/attenuate the LUT-driven OD mechanism.
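A rough sketch of the "small LUT plus interpolation plus gain" idea, assuming plain bilinear interpolation and a gain that scales only the overshoot portion (C minus B). Both are assumptions for illustration; actual scalers may interpolate and apply gain differently. The function names and the `boost` value are hypothetical.

```python
def make_small_lut(size=17, boost=0.25):
    """Toy size x size table sampled on an even grid over 0..255."""
    step = 255 / (size - 1)
    return [
        [min(255.0, max(0.0, j * step + boost * (j - i) * step))
         for j in range(size)]
        for i in range(size)
    ]


def lookup_interpolated(small_lut, a, b, gain=1.0):
    """Bilinearly interpolate the small LUT at (a, b), then apply gain."""
    size = len(small_lut)
    step = 255 / (size - 1)
    fa, fb = a / step, b / step
    # Lower-left grid cell, kept inside the table at the 255 edge.
    i0, j0 = min(int(fa), size - 2), min(int(fb), size - 2)
    ta, tb = fa - i0, fb - j0
    top = small_lut[i0][j0] * (1 - tb) + small_lut[i0][j0 + 1] * tb
    bot = small_lut[i0 + 1][j0] * (1 - tb) + small_lut[i0 + 1][j0 + 1] * tb
    c = top * (1 - ta) + bot * ta
    # Gain amplifies or attenuates the overshoot, not the target itself.
    c = b + gain * (c - b)
    return max(0, min(255, round(c)))


small = make_small_lut()
for g in (0.0, 1.0, 2.0):
    print(g, lookup_interpolated(small, 64, 128, gain=g))
```

With gain at 0.0 the output is just the target value (overdrive off); raising the gain moves the result further past the target, which is roughly what an OSD overdrive slider does to a fixed table.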

The OD LUTs are factory pre-generated and preinstalled in monitors. You can have custom OD LUTs for different refresh rates, different settings (strobe settings), etc.

Some even use the Y-axis to vary the OD Gain along the vertical dimension. Old LightBoost monitors did this, due to the asymmetric differentials between panel scanout time and global strobe flash time, creating different GtG acceleration needs for different pixels along the vertical dimension of the display. This vertical-dimension biasing factor was publicly discovered by Marc of Display Corner. This is less necessary now thanks to higher Hz and larger blanking intervals (either via internal TCON/scaler scanrate conversion, or Large Vertical Totals), but it still slightly reduces edge-of-screen strobe crosstalk if manufacturers bother incorporating the Y-axis variable.
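To make the Y-axis variable concrete, here is a tiny sketch where the gain ramps linearly from the top row to the bottom row. The direction of the ramp (more gain at the bottom, on the assumption that rows scanned out later get less settling time before a global strobe flash), the linear shape, and all the numbers are illustrative assumptions, not measured LightBoost behavior.

```python
def gain_for_row(y, height, top_gain=1.0, bottom_gain=1.4):
    """Linearly ramp the OD gain from the top row (y=0) to the bottom row."""
    t = y / (height - 1)
    return top_gain + t * (bottom_gain - top_gain)


def overdrive_with_row_gain(prev, target, y, height, base_boost=0.25):
    """Same transition, different overshoot depending on the scanline."""
    gain = gain_for_row(y, height)
    c = target + gain * base_boost * (target - prev)
    return max(0, min(255, round(c)))


# The same 64 -> 128 transition at the top and bottom of a 1080-row panel.
print(overdrive_with_row_gain(64, 128, 0, 1080))
print(overdrive_with_row_gain(64, 128, 1079, 1080))
```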

Now, you need different LUTs for different frametimes, and sometimes you have to factor in the TWO or THREE previous frametimes, since GtG can last more than 1 refresh cycle and the GtG curves are at different points for different frametimes. There are infinite combos! That's when memory gets horrendously complex and you have to fall back on algorithmic approaches -- algebra, matrix math, possibly even calculus, etc.
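A heavily simplified sketch of the frametime problem: instead of storing a full LUT for every possible frametime, calibrate at a few refresh rates and interpolate in between. Here each calibration point is collapsed to a single overshoot strength rather than a whole table, and only the current frametime is considered (not the two or three previous ones), so this shows the idea only. The calibration numbers, and the assumption that shorter frametimes want stronger overdrive, are hypothetical.

```python
from bisect import bisect_left

# Hypothetical calibration points: (frametime in ms, overshoot strength),
# sorted by frametime: 240 Hz, 144 Hz, 60 Hz.
CALIBRATED = [(1000 / 240, 0.40), (1000 / 144, 0.30), (1000 / 60, 0.15)]


def strength_for_frametime(frametime_ms, table=CALIBRATED):
    """Linearly interpolate strength between the nearest calibrated points."""
    times = [t for t, _ in table]
    if frametime_ms <= times[0]:
        return table[0][1]
    if frametime_ms >= times[-1]:
        return table[-1][1]
    hi = bisect_left(times, frametime_ms)
    (t0, s0), (t1, s1) = table[hi - 1], table[hi]
    w = (frametime_ms - t0) / (t1 - t0)
    return s0 + w * (s1 - s0)


def overdrive_value(prev, target, frametime_ms):
    """Overshoot the target by an amount that depends on the frametime."""
    s = strength_for_frametime(frametime_ms)
    return max(0, min(255, round(target + s * (target - prev))))


# The same 64 -> 128 transition while the framerate fluctuates under VRR.
for fps in (240, 180, 100, 60):
    print(fps, overdrive_value(64, 128, 1000 / fps))
```

A fixed overdrive setting amounts to picking one row of that table and using it for every frame, which is why it looks right at one framerate and ghosty or corona-prone at others.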

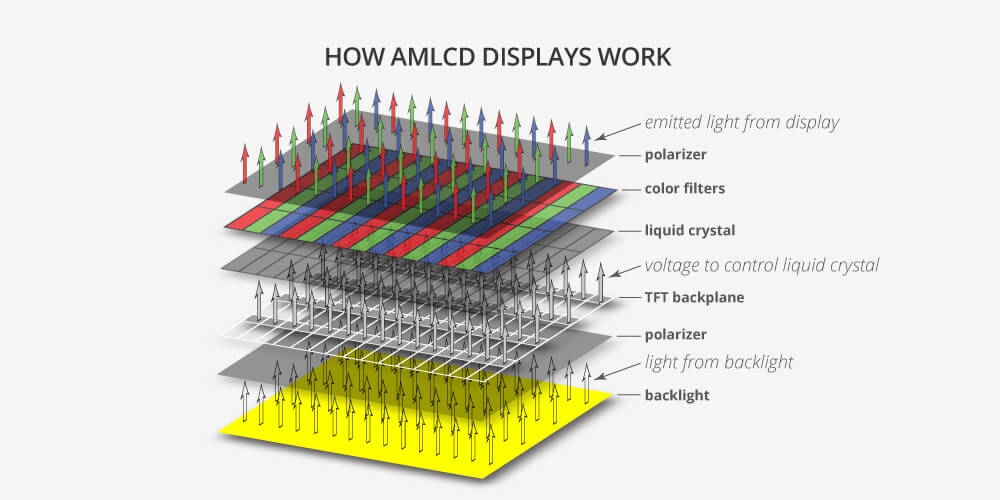

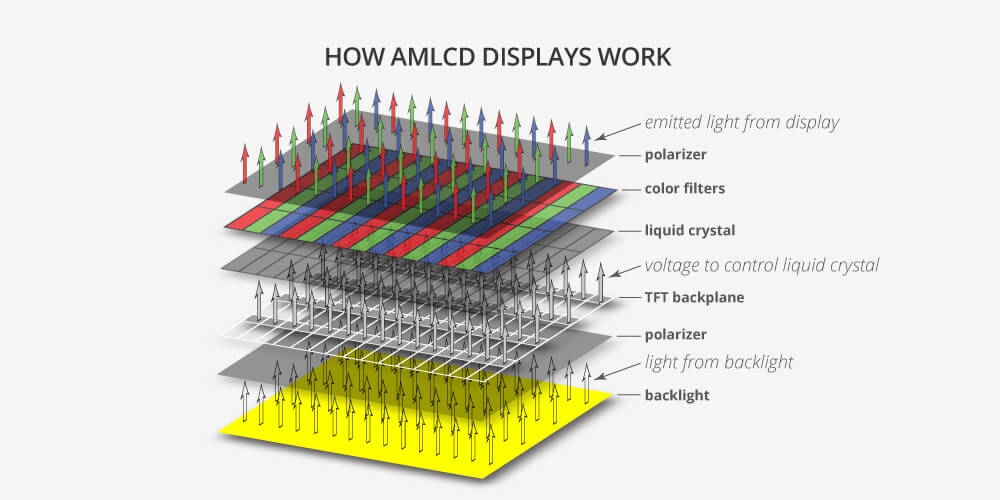

And when temperature changes, the OD LUT calibrated at 20 degrees C looks wrong at 15 degrees or 25 degrees C -- you've seen LCDs outdoors in freezing winter -- they go slow. Same thing, same problem. Your gorgeous 4K LCD still uses the same monochrome LCD subpixels as a 1980s LCD wristwatch. All LCDs are based on the same law of physics of moving/rotating molecules for blocking/unblocking light:

GtG is just momentum. LCD stands for Liquid Crystal Display. Those liquid crystal molecules are actually moving. They accelerate/decelerate. That's the GtG curve!

Now, the OD LUT only looks perfect at the original temperature the OD LUT was generated at! Notwithstanding temperature differences along the panel surface -- like a hot power supply corner, and cold top edges.

Theoretically, the most perfect possible overdrive would automatically factor everything in. The problem is it costs 100x as much to improve overdrive by just another 10%. So many manufacturers do not bother, or use very bad OD LUTs (sigh, I've seen it happen. Too much OD LUT recycling happening in the industry for inappropriate panels that should just have the OD LUT completely regenerated from scratch instead).

A simple overdrive lookup table can escalate to complex calculus or algebra when involving variable frametimes & strobe backlights. It's just easier not to bother, especially in the race to bottom from $5000 panels to $1000 panels to $500 panels to $250 panels, so industry only tries to get overdrive "90% as good as theoretical perfect".

This is as much info about overdrive as I can share without an NDA. It's all publicly available info, in bits and pieces all over the Internet: academic papers, reviewers, hobby tweakers discovering weird overdrive behaviors. All crammed into this one technical post about how complex overdrive can escalate from just simple OD LUTs.

This can be done in hardware or software. Remember the old ATI Overdrive feature of yesteryear. Theoretically, hardware and software can look identical (except for the lack of voltage room above white / below black, but that's not even normally used either). And you'd usually rather not load the CPU/GPU with overdrive.

</Technical Overdrive Rabbit Hole>

TL;DR: VRR realtime dynamic overdrive is complex. That's why native G-SYNC chips have lots of RAM to have better looking VRR OD. The G-SYNC premium reduces ghosting/corona artifacts during fluctuating framerates.


RLCSContender*

- Posts: 541

- Joined: 13 Jan 2021, 22:49


Dirty Scrubz wrote: ↑27 Apr 2020, 22:11
RLCScontender wrote: ↑26 Apr 2020, 17:58
But let's be real here. Without the G-SYNC chip, there won't be variable overdrive (even if they say so). Software-emulated variable overdrive can approximate it, but it's simply not the same. I own a G-SYNC monitor, and I can attest to this.

I want to point out that this isn't entirely true. FreeSync monitors that can use G-SYNC don't run software emulation (G-SYNC is just NVIDIA's name for VRR); it's done via the scaler, and it's an open-standard VRR implementation. Some G-SYNC chips can give slightly better input lag, but there are many FreeSync displays that are better, with no input lag, and they don't need a G-SYNC chip to drive up the cost.

Umm, no. G-SYNC Compatible sucks compared to full-fat G-SYNC.

The chip communicates directly with your GPU via hardware, not software emulation. That means nonexistent input lag, zero ghosting, and faster response times, since the chip automatically determines the best overdrive at each refresh rate.

Sure, there are maybe 2 or 3 G-SYNC Compatible monitors with variable overdrive via software emulation, but from what I hear, it's nowhere near as good as it would be with the scaler chip that comes with full-fat G-SYNC.

XG270QG vs 27GL850: both use the same Nano panel, but I noticed way less input lag and ghosting on the ViewSonic. The G-SYNC chip is responsible for that.


Dirty Scrubz

- Posts: 193

- Joined: 16 Jan 2020, 04:52


RLCScontender wrote: ↑28 Apr 2020, 22:53
Dirty Scrubz wrote: ↑27 Apr 2020, 22:11
RLCScontender wrote: ↑26 Apr 2020, 17:58
But let's be real here. Without the G-SYNC chip, there won't be variable overdrive (even if they say so). Software-emulated variable overdrive can approximate it, but it's simply not the same. I own a G-SYNC monitor, and I can attest to this.

I want to point out that this isn't entirely true. FreeSync monitors that can use G-SYNC don't run software emulation (G-SYNC is just NVIDIA's name for VRR); it's done via the scaler, and it's an open-standard VRR implementation. Some G-SYNC chips can give slightly better input lag, but there are many FreeSync displays that are better, with no input lag, and they don't need a G-SYNC chip to drive up the cost.

Umm, no. G-SYNC Compatible sucks compared to full-fat G-SYNC.

The chip communicates directly with your GPU via hardware, not software emulation. That means nonexistent input lag, zero ghosting, and faster response times, since the chip automatically determines the best overdrive at each refresh rate.

Sure, there are maybe 2 or 3 G-SYNC Compatible monitors with VRR, but from what I hear, it's nowhere near as good as it would be with the scaler chip that comes with full-fat G-SYNC.

XG270QG vs 27GL850: both use the same Nano panel, but I noticed way less input lag and ghosting on the ViewSonic. The G-SYNC chip is responsible for that.

OK, again, what you wrote is wrong, but keep thinking you know what you're talking about.


RLCSContender*

- Posts: 541

- Joined: 13 Jan 2021, 22:49


Chief Blur Buster wrote: ↑27 Apr 2020, 11:36
Also crossposting to this thread for general reader education purposes, as a pre-emptive move to maintain cordial pursuit camera discourse. Any escalation about pursuit camera needs to be tempered to protect the quality & reputation of Blur Busters testing inventions. Appreciated.

RLCScontender wrote: ↑25 Apr 2020, 23:31
But my pursuit camera is the most accurate since I got it DIRECTLY straight from the monitor myself.

However, it is subject to interpretation.

Pursuit camera work is amazing to execute -- but:

I would not say "truth" or "lie" because everybody sees differently, including seeing the photo.

Everything from camera-adjustment issues (how accurately things are photographed, how well the pursuit instructions are followed) to vision differences between humans (when viewing the resulting photo) means that, even for well-intentioned photographs, it can become flamebait to use the word "truth" or "lie" -- and that includes you.

Science papers or research papers buttress the truth (as can a well-taken pursuit photo from a good camera) but don't self-use the words "truth" or "lie" for many obvious reasons like these.

Pursuit photos are a great show and tell. The WYSIWYG is best-effort and hard to make perfect, given differences in display vs. camera technology, camera technique, human eyes on the original display, and human eyes on the resulting display. Often the photographed result shown on the same display is more accurate than the photographed result shown on a different display. AND on top of that, different human visions can impart different gamma behaviours (e.g. one person can't see the dim colors or dim ghosts as well as the next human), whether looking at the original motion or the photographed motion (which goes through camera distortion and third-party-monitor distortion).

So, as a fair disclosure, there are multiple weak links. Layers of visual distortion can be added (like repeated photocopying that degrades), human-vision-wise or hardware-wise. Even though it's a vastly superior method of representing motion blur results (compared to past inventions), it is still prone to layers like these:

- Camera used (and flaws within)

- Photography technique (and flaws within)

- Whether the photo is retouched (including 'white hat' retouchings like a post-shoot exposure compensation)

- How the remote computer displays the photo (different photo viewers/browsers can show the same photo slightly differently)

- How the remote display shows the photo (by that computer)

- How the remote person sees the resulting photo (on that monitor)

Yes, even the last bullet: the remote human seeing the resulting photo on a different monitor. Even a WYSIWYG printout of a Word document looks different to a different human. For example, a dyslexic sometimes sees continually jumbling text, or you may see distorted color (there are multiple kinds of partial color blindness), or you have focusing or astigmatism issues (need glasses), or you have motion blindness (akinetopsia), or another condition, partial or full-fledged, diagnosed or undiagnosed. Even outside these silos/umbrellas, different eyes and/or human brains add different kinds of weird noise and/or distortion to what they are seeing. This can make them unusually sensitive to one thing (tearing or blurring or coronas or stutter or color etc.) and unusually insensitive to other aspects (tearing or blurring or coronas or stutter or color etc.). There can be a preference aspect, but there can also be an eye/brain limitation aspect too. Likewise, a different set of human eyes can see something totally different in a resulting photo even if it's a perfect photo. TL;DR: Different humans see differently.

The fact is person A may prefer monitor 1 over 2 (Genuinely seen both). And person B may prefer monitor 2 over 1 (Genuinely seen both). Even without these photos!

Also -- even in simpler contexts -- for example -- it even applies to web page design. Look at the sides of the forum in a desktop browser. The black checkerboard-flag background on the left/right of the forum sidebar is much brighter on some monitors, and totally black background on different monitors or different human eyes. And monitors can have different gamma. And camera settings can also distort the gamma. And distorted yet again when that same photo is displayed on a different monitor. Etc.
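To put rough numbers on the gamma point above, here is a minimal Python sketch. It assumes a simple power-law gamma model and made-up values (a dim ghost at 10% signal, displays at gamma 2.2 and 2.6, and an idealized camera that exactly inverts gamma 2.2). None of these numbers are measurements from any monitor or camera discussed in this thread, and the function names are purely illustrative.

```python
# Hypothetical illustration: how one photo of a dim ghost can look different
# on two displays. All numbers are assumed, not measured.

def display_luminance(signal: float, gamma: float) -> float:
    """Relative light output (0..1) for a normalized signal under a power-law gamma."""
    return signal ** gamma


def camera_encode(luminance: float, encode_gamma: float) -> float:
    """Idealized camera: stores captured luminance as a gamma-encoded signal."""
    return luminance ** (1.0 / encode_gamma)


ghost_signal = 0.10  # assumed: a faint ghost trail at 10% signal level

# Light emitted by the monitor under test (assumed gamma 2.2).
original = display_luminance(ghost_signal, 2.2)

# The camera records it; this sketch assumes a perfect gamma-2.2 encode.
photo_signal = camera_encode(original, 2.2)

# Viewer A looks at the photo on a gamma-2.2 display, viewer B on gamma 2.6.
viewer_a = display_luminance(photo_signal, 2.2)
viewer_b = display_luminance(photo_signal, 2.6)

print(f"original ghost luminance: {original:.5f}")
print(f"viewer A (gamma 2.2):     {viewer_a:.5f}")
print(f"viewer B (gamma 2.6):     {viewer_b:.5f}")
print(f"viewer B / original:      {viewer_b / original:.0%}")
```

Under these assumptions viewer A gets back the original brightness, while viewer B sees the same ghost at roughly 40% of it, and that is before any real-world camera error or eyesight difference is added on top.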

Scientific/researcher discourse needs to be tempered/nuanced by the acknowledgement of the limitations of testing. We can do our best to perfect it (e.g. purchase a camera like a Sony Alpha a6XXX series, teach oneself an accurate manual technique, use a camera rail) so that our camera and technique have minimal error factors, and the photograph is relatively accurate. But it can never be a perfect photon-to-photon record given different displays, computers, humans, etc.

Pursuit camera photos are the best-ever invented way to easily photograph display motion blur.

But results often have to be interpreted through the lens of all of these limitations and error factors (camera-wise, camera-technique-wise and vision-wise). This is generally why I don't want any polar arguments about truth-or-lie. That only manufactures flamebait, even if accidentally. The wording, the bolding, and the all-caps thus open a window for disagreements, under the lens of display research discourse. In other words, keep an open mind when writing your words.

Pursuit camera photos have known challenges just like measuring GtG, even though pursuit camera has greatly simplified the show-and-tell. But there are innate limitations on how it's reliably communicated.

Pursuit camera is the most perfect way to do it, but still necessarily imperfect (humankind-wise).

Appreciated!

Interesting. I get that there are multiple other variables that come into play that may cause confirmation bias. But generally speaking, for any outside variable, such as the person's vision or the camera used, there is also a happy medium.

I have 20/20 vision, and my phone camera is 16 MP, 4K (Samsung Galaxy S10+), so with motion tests, I'm sure my UFO tests are consistent with what the majority of people see. Yes, I'm fully aware of outside variables, but as I stated, I generally go by the rule and not the exception. If there's no ghosting, there's no ghosting. Reasonable doubt, perception differences, and hardware differences may be argued, but generally speaking, unless another person has the same camera and monitor to refute my UFO tests, there's really no chance of denying them, since there isn't any counter-evidence backing up the reasonable doubt about a person's perception or the hardware used.

-

RLCSContender*

RLCScontender wrote: ↑26 Apr 2020, 17:58
But let's be real here. Without the G-Sync chip, there won't be variable overdrive (even if they say so). Software emulation can imitate variable overdrive, but it's simply not the same. I own a G-Sync monitor, and I can attest to this.

Dirty Scrubz wrote: ↑27 Apr 2020, 22:11
I want to point out that this isn't entirely true. FreeSync monitors that can use G-Sync don't run software emulation (G-Sync is just NVIDIA's name for VRR); it's done via the scaler, and it's an open-standard VRR implementation. Some G-Sync chips can give slightly better input lag, but there are many FreeSync displays that are better, with no input lag, and they don't need a G-Sync chip to drive up the cost.

RLCScontender wrote: ↑28 Apr 2020, 22:53
Umm no, G-Sync Compatible sucks compared to full-fat G-Sync.

The chip communicates directly with your GPU via hardware, not software emulation. That means non-existent input lag, zero ghosting, and faster response times, since the chip automatically determines the best overdrive at each refresh rate.

Sure, there are maybe 2 or 3 G-Sync Compatible monitors with VRR, but from what I hear, it's nowhere near as good as it would be with the scaler chip that comes with full-fat G-Sync.

XG270QG vs 27GL850: both have the same nano panel, but I noticed way less input lag and ghosting on the ViewSonic. The G-Sync chip is responsible for that.

Dirty Scrubz wrote: ↑28 Apr 2020, 22:54
Ok, again, what you wrote is wrong, but keep thinking you know what you're talking about.

Explain to me how it's "wrong".

I back up everything I say with photos. Do you have better counter-evidence to disprove me?

Other than using the 1% exception argument.

"I know this "one monitor" that is freesync monitor's g sync compatible and it has variable overdrive and bett3r den full fat g sync, therefore ur rong)"