Edit: sorry, I've got to run. I probably left something like 30 typos in here and can't fix them now, so apologies in advance.

Colonel_Gerdauf wrote: 11 Jun 2022, 11:01

> You are describing all of this as a matter of marketing. In that respect, there are other issues that you yourself have acknowledged, and I will get to those later. But this issue is not specific to "gaming displays". Look around, and you will notice that 24" 1440p is very hard to find as a whole, even in the enterprise, budget/scam, and general markets, and the ones that are there are ancient panels on clearance. The taste for IPS/VA is understandable to a point, but we are reaching the point where you cannot actually push refresh rates and response times any further. The typical G2G (gray-to-gray) response time for IPS is around 7ms. G2G is not a fantastic measure of how fast pixels actually change states, by the way, but without any other common ground it is the best we have. Regardless, refresh rates do not work by magic; they rely on how fast pixels can fully change, and the time available per refresh on a 120Hz display is 8.33ms. At 144Hz it is 6.9ms, at 165Hz about 6ms, at 240Hz 4.2ms, etc. I think you can see where this gets weird. HFR displays using IPS ultimately rely on quite a bit of number-fudging and masking tricks to make it appear that IPS can do everything, when it has clear limits.

> This was part of my hesitation about the push toward retina refresh rates: to actually make kilohertz displays, you would need a brand-new display tech that can surpass the pixel response limits of even TN.

> And I have not even gotten to the specific limitations of VA or IPS, which can ruin the intended experience and turn buying a panel into a lottery. It does not need to be this way, but QA is notoriously inconsistent, with strange criteria.

But of course. I would go a step further. I hold the opinion that all the LCD progress is just BS. I can understand the difference between a 2000 monitor and a 2010 monitor, sure. I remember laptop displays in 1995; there's surely no comparison. But since the introduction of LED backlights and strobing, there has been no progress. TNs from a decade ago are at least comparable to, if not better than, the TNs produced now. As for VAs, the improvements are still far from a "game changer". They were too slow 20 years ago. They were too slow 15 years ago. They are still too slow now. You cannot get motion clarity comparable to a CRT even at 60Hz. VA monitors sold with "240Hz!" labels are pointless. The panels are just way too slow, so the higher refresh rate only improves latency, and beyond 120Hz there are far more important factors than display latency affecting the total motion-to-photon lag.

So of course, LCDs are too slow for HFR. The Chief tested a 540p 480Hz prototype years ago, and since then we more or less know what's possible. An LCD, even a TN, is way too slow for perfect 480Hz. But at least it gives a usable image in motion. That's at least something. Obviously VAs will never reach this level, and IPS panels are a bit better than TNs, but for gaming they're still bad in terms of contrast. They can come close to TNs, but it's impossible for an IPS to be as fast as a TN, so let's leave them out of the equation.
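The per-refresh time budgets quoted above (8.33ms at 120Hz, 6.9ms at 144Hz, and so on) all come from one formula: frame time in ms = 1000 / refresh rate in Hz. The minimal Python sketch below tabulates that budget and checks whether the quoted ~7ms IPS G2G figure fits inside it. It assumes a single representative G2G number per panel, which is a simplification (real panels have a different response time for every gray-to-gray transition, plus overdrive); the function names are illustrative only.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time available for one refresh cycle, in milliseconds."""
    return 1000.0 / refresh_hz


def fits_in_refresh(g2g_ms: float, refresh_hz: float) -> bool:
    """True if a single quoted G2G figure completes within one refresh period.

    Simplification: treats G2G as one number, ignoring per-transition spread.
    """
    return g2g_ms <= frame_time_ms(refresh_hz)


if __name__ == "__main__":
    ips_g2g_ms = 7.0  # the "around 7ms" IPS figure from the quote
    for hz in (120, 144, 165, 240, 480):
        print(f"{hz:>3} Hz: {frame_time_ms(hz):5.2f} ms per refresh, "
              f"7 ms G2G fits: {fits_in_refresh(ips_g2g_ms, hz)}")
```

With these numbers, the 7ms figure only fits inside the 120Hz budget; at 480Hz the budget is barely above 2ms, which is the gap the Chief's TN prototype was up against.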

> Like I mentioned, there is more to a good display than a good refresh rate, or even a good resolution in isolation. If you are going to shove "super duper fast" displays with a comical 95 PPI down my throat, then you have wasted my time; it is as simple as that.

I would say exactly the same about motion clarity. If you gave me an absolutely perfect MicroLED monitor/TV with a 150" screen, 16K resolution, and perfect image quality in every aspect, but 120Hz without any strobe/BFI technology, I wouldn't spend even $100 on it if I were buying a gaming display.

> Once again, the scope of source material needs to be taken into consideration. Where BFI would have helped is the large amount of content capped at 24, 30, and 60 FPS, as everything else is variable (which makes VRR the actual holy grail) or at the very least capped high enough that BFI would not be necessary.

As a person who absolutely hates the idea of pushing VRR before perfect motion clarity is established and widely understood in the industry and among consumers, I must disagree once again. You wrote that "everything else is variable" and that BFI benefits 24 and 30fps content. That's not true. 24 or 30fps is not enough temporal resolution to reconstruct even medium-speed motion, not even with AI from the year 3000. Such a low frame rate simply doesn't capture where objects are between frames, and an accurate prediction based on a 100% guess is impossible. You can clearly observe this in racing games. Play a blur-free racing game and start accelerating. Once you cross a certain speed threshold, you will see the illusion break apart. With wide-FOV racers at very high speeds, even 60fps as a source may be insufficient. In fact, I can clearly tell the limits of 60fps and 120fps on my CRT-like, low-persistence (BFI) TN monitor in futuristic racing games where you go over 600km/h. So, back to 30fps: it will start failing at very low speeds, unless you switch to a narrow-FOV view and pick a camera hovering above the car to slow the perceived speed down. As for "everything else is variable": no. Sadly, there is no well-established standard for content above 60fps, but I don't see where you got "all is variable" from. As far as I am aware, there is no standardized variable-frame-rate content at all. There is 120fps content. Some. Not much.

Variable refresh rate is a Trojan horse on so many levels, even for the future. If you get locked content, let's say 90fps or 100fps, you can interpolate it well to 1000fps. But if the content is variable, it will be hard to interpolate it well without artifacts or jitter.

One day the sun will come out again, and we'll eventually get something good for motion, maybe MicroLEDs in the 2030s. And seriously, I'd rather have a locked 90fps game than 95-120fps VRR. The latency improvement is small; the motion clarity issue is way more important.

> Those would involve a balancing act between "tolerable" flicker and noticeable reductions in motion blur. You can get around the original issue here by simply using higher clock ratios such as 1/4 or 1/6, but it gets to the point where the reduction is "neutered", so that is a moot point.

That's the idea I called stupid earlier. Any deviation from the fps = Hz rule will absolutely ruin the picture in motion and render the whole BFI idea pointless.
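Why fps = Hz matters under strobing can be sketched numerically. Assuming the eye tracks a moving object perfectly at constant speed, each strobe flash is instantaneous, and every frame is flashed the same number of times, a frame flashed N times is perceived as N copies, each shifted by (tracking speed / strobe rate) pixels. The function below is a hypothetical illustration of that model, not code from any display's firmware.

```python
def tracked_copies_px(fps: int, strobe_hz: int, speed_px_per_s: float) -> list[float]:
    """Offsets (px) at which one frame is perceived while eye-tracking it.

    Hypothetical model: perfect eye tracking at constant speed, instantaneous
    flashes, and a strobe rate that is an integer multiple of the frame rate.
    """
    if strobe_hz % fps != 0:
        # e.g. 90fps on a 120Hz strobe: frames get uneven numbers of flashes,
        # so on top of duplicate images you also get judder.
        raise ValueError("strobe rate is not an integer multiple of the frame rate")
    flashes_per_frame = strobe_hz // fps
    # The frame stays put on screen between flashes while the eye keeps moving,
    # so each repeat flash lands step_px further behind the previous one.
    step_px = speed_px_per_s / strobe_hz
    return [i * step_px for i in range(flashes_per_frame)]


if __name__ == "__main__":
    speed = 960.0  # px/s, an arbitrary example panning speed
    for fps in (120, 60, 30):
        print(f"{fps}fps @ 120Hz strobe -> copies at {tracked_copies_px(fps, 120, speed)} px")
```

At 120fps on a 120Hz strobe there is a single image; at 60fps the same object shows up twice, 8px apart, and at 30fps four times.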

But about the compromise: I think 120fps content should be the target, the "holy grail". For people who still complain about flicker at 120Hz (a minority, mind you), there should be an easy option to interpolate from 120fps to 240fps with BFI, or to HFR once display technology allows it. The latency penalty will be reasonable, and for competitive games, or simply fast shooters controlled with a mouse, people could just pick the desired mode. A 40-minute match at 120fps BFI will not cause headaches for at least 90% of gamers. I'd assume closer to 99%.

> On that note, why exactly are the blanks clocked? That was never sufficiently explained to me. It would have been far easier to fine-tune, and electronically simpler, to time the blanks relative to the active frame and do the blank at the start of the frame.

You want to display the frame as soon as you can to minimize latency. You display the frame, and the amount of time it remains on screen determines the precision of motion representation: the longer it stays on screen, the blurrier the perceived motion. On the other hand, the longer the frame remains visible, the brighter the image will be and the less flicker will occur. So the blank has to be placed where it makes the most sense. If your question was about the more technical aspects, you'll need to ask the Chief, as I'm not the guy who knows much about the engineering science behind displays.
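The trade-off described above can be put in numbers with the rule of thumb Blur Busters commonly cites: 1ms of persistence produces about 1 pixel of perceived motion blur per 1000 pixels/second of eye-tracked motion. This minimal sketch assumes perfect eye tracking, a square-wave (on/off) flash, and an unchanged light-source intensity (real strobed backlights often boost the pulse to compensate), and compares full sample-and-hold with shorter flashes at 120Hz. The helper names are hypothetical.

```python
def motion_blur_px(persistence_ms: float, speed_px_per_s: float) -> float:
    """Approximate blur trail width: visible time (s) times tracking speed (px/s)."""
    return persistence_ms / 1000.0 * speed_px_per_s


def relative_brightness(persistence_ms: float, refresh_hz: float) -> float:
    """Fraction of sample-and-hold brightness, i.e. the strobe duty cycle,
    assuming the light source is not driven harder during the flash."""
    frame_time_ms = 1000.0 / refresh_hz
    return persistence_ms / frame_time_ms


if __name__ == "__main__":
    hz, speed = 120, 960.0  # example refresh rate and panning speed (px/s)
    for persistence in (1000.0 / hz, 4.0, 2.0, 1.0):
        print(f"{persistence:5.2f} ms visible: "
              f"blur ~{motion_blur_px(persistence, speed):4.1f} px, "
              f"brightness {relative_brightness(persistence, hz):4.0%} of sample-and-hold")
```

Shortening the visible time cuts blur and brightness in the same proportion, which is exactly the "eye hurt or motion blur" tension discussed in this thread.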

> There is physics, and there is the user experience. Physics is static, meaning that there is always a workaround to a problem somewhere. User experience is a mess that requires, at the very least, proper social awareness to sift through. I will get to the latter point later. If it is truly a matter of "eye hurt, or motion blur", then that is about the most idiotic kind of compromise to make, and especially foolish when it is being shoved onto the general user.

I am not the guy who brags about stuff whenever he can, and I know very little about anything else, but in terms of motion clarity I can honestly say I'm a practical expert. I started discovering the issues with motion 20 years ago and found the stroboscopic effect, which negatively impacts motion, fascinating. I played around with games at 170fps/170Hz. I tested 50fps content at 50Hz. I tested 85fps content at 170Hz. I started looking at motion interpolation as soon as it appeared, and I've spent hundreds of hours comparing CRT and LCD monitors side by side, even three monitors at one point: a CRT, a strobed LCD, and a non-strobed LCD. I've spent tens of hours analyzing the psychological side of this.

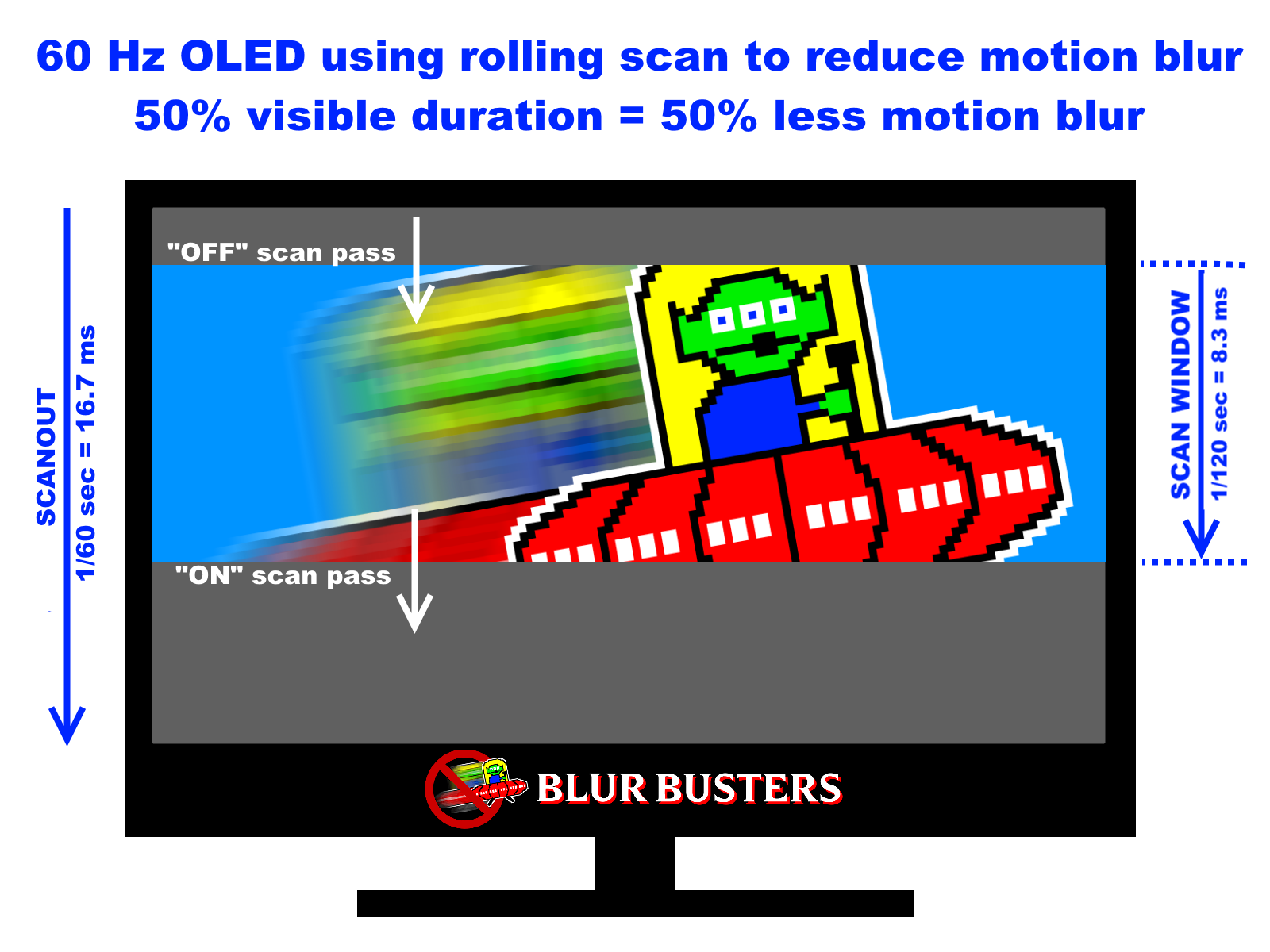

So. What I meant by "physics" is that there's no way to change how the human eye-brain system works. When you track an object, that object should stay on its motion vector, as the Chief's article "Why Do OLEDs Have Motion Blur?" explains. You simply cannot disrupt that and expect no negative effects. What could've been invented has already been invented. Rolling-scan BFI has some upsides, but it also has downsides, which you'll learn more about if you watch some of the Michael Abrash and John Carmack keynotes from the Oculus Connect conferences from 2013-2016 (sorry, I don't remember which one). They explain the problem of the image "bending" during certain motion in VR. The idea of simulating a CRT's phosphor fade that the Chief talks about is a good remedy against flicker, but that's basically it. You cannot invent something rounder than a wheel.

There's a lot to be done with display tech and with implementations, but you cannot go against what the "Why Do OLEDs Have Motion Blur?" article says. It simply wouldn't work unless you introduced some brain-computer interface to bypass the human eyes, which cause the issue due to their physical/biological nature.

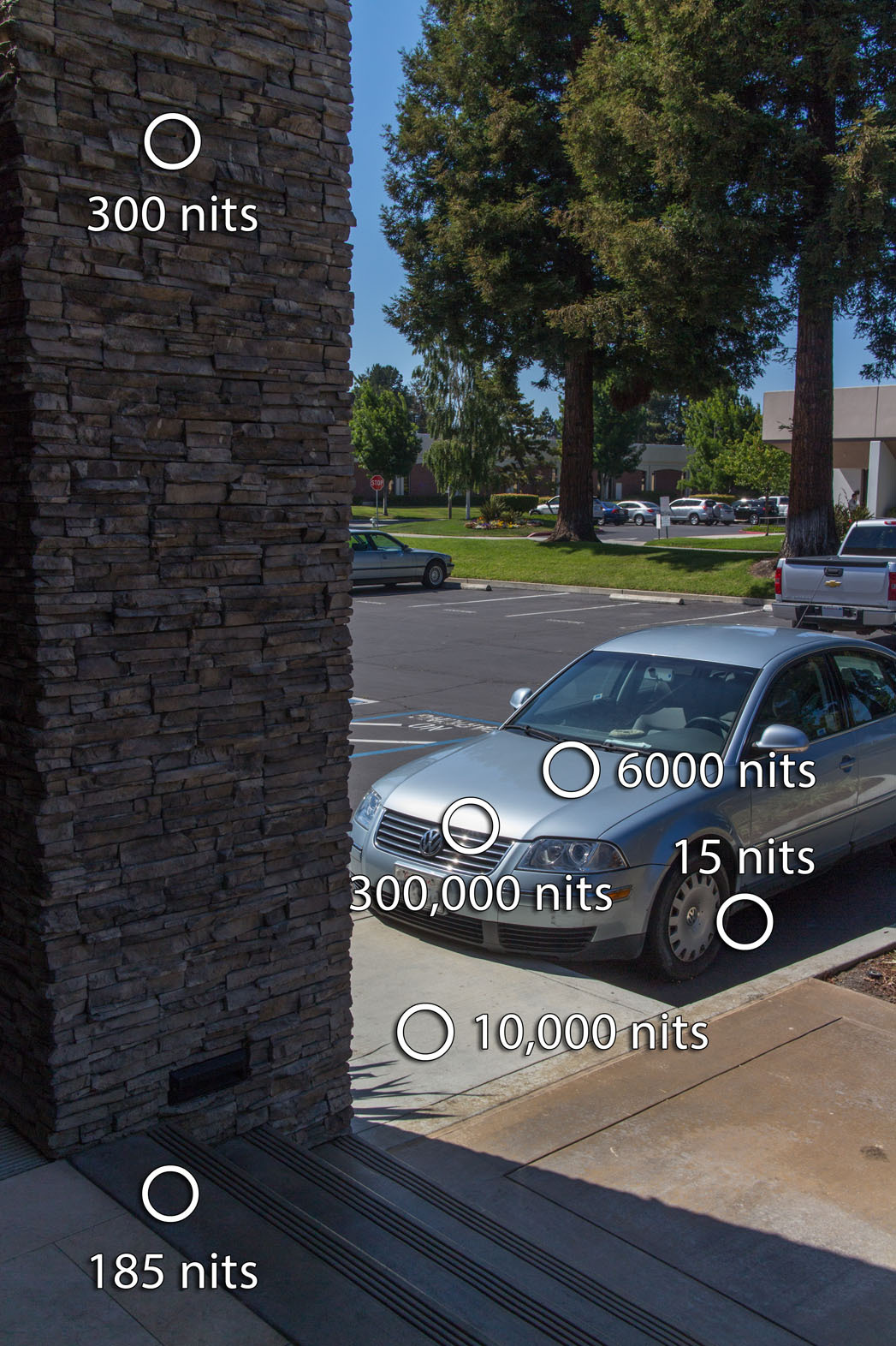

> On a technical level, I very strongly disagree. First, HDR is not about being super-bright. When it talks about nits, it is speaking of the relative vibrancy values from section to section. Think of it like the power rating of a computer PSU; it only describes the maximum that can be reached when and where it is needed. This is actually really close to real life: when you walk outside, there are sharp differences in "brightness" everywhere that a regular display does not properly capture. Looking at the real world hasn't bothered people, so why should HDR?

> As for the searing brightness, that is down to faulty implementations in cases where the specs mismatch the content on display, and that can easily be adjusted at the hardware as well as the OS level. In fact, the issue on Windows has been dealt with thanks to AutoHDR: HDR mode is no longer static when turned on, and it can dial itself down when showing SDR content, even on a pixel-by-pixel basis. In addition, the parameters can be tuned by users to suit their personal needs and limits. BFI does not have this benefit, especially according to what Chief has told me about the alternatives I have brought to the table, and according to my observations of BFI implementations out in the wild.


As I wrote earlier: because in real life you have a 220° field of view, and the TV covers only about 20-50° of it. Because in real life, you don't go from night to the middle of a sunny summer day in 1 second. Because it matters what TIME of day you play. Because you are a biological machine, programmed to work in certain ways through thousands of years of evolution. I'm far from an expert on health, but I'll try to explain some things to you anyway. Feel free to dive into proper scientific sources to learn more about these things, if you are not scared of changing your opinion on HDR.

First of all, let's start with the invalid argument I've seen multiple times in most pro-HDR reviews and articles: that real life can easily hit 10,000 nits, so TVs should get that too. That's true, but the fact that the sun gives off a ton of light doesn't mean you can look at it without damaging your eyes. The specular reflection on the metallic car hood in your example is what damages your eyes in real life. You should minimize looking at such things as much as you can, because the damage adds up as you age. Caring for your eyes means you start having issues with night vision and color vibrancy at the age of 80 instead of 50. Or at the age of 30, if you believe that the idiot at the hospital who calls herself a doctor deserves to be called one. I took something like 20 years' worth of brightness degradation in a single day, because the "doctor" told me I could go home even after I asked: "But to examine my eyes, you applied the drops that put my eyes into night mode, so they've lost the ability to adjust to higher brightness. Won't that hurt my eyes?" She said, "No, it won't, or if it does, you won't notice it; just don't stare at the sun." That was a lie. Trust me. You want your eyes to age later rather than sooner.

Why did she tell me that, and what does it have to do with HDR? Well, it's about the fact that we are all different: different age, different health, different genes. What you can stomach on your HDR TV without harmful effects may hurt someone else, permanently. Just as my eyes were more prone to damage than those of the other patients the idiot doctor dealt with, you can hurt someone by generalizing too much.

Then there is the fact that the damage is very hard to measure, and even harder to notice. It's not like you can see it from day to day (unless it's very severe damage, which is unlikely from a single game session on an HDR TV). Just as you don't notice your hearing getting worse every year, yet if you could hear the way you did 20 years earlier, you'd immediately notice a big difference (for example, no 50-year-old can hear the high tones that are normal for young people to hear).

The fact that almost nobody complains about health issues yet doesn't mean there's no issue.

So, in real life, your whole FOV gets the higher brightness, and your eyes get time to adjust. The body's regeneration system also works better to minimize damage at 2 PM than when someone plays an HDR game in the middle of the night.

Then there's how prone to damage you are. Sleep deficiency or a bad diet can affect it more than you think, and we're talking about gamers here. All your "safety systems" are useless until you connect probes to the brain and analyze what happens in the eyes, which is obviously not going to happen. And I don't just mean the mismatch coming from the non-standardized HDR chaos of HDR10, Dolby Vision, and all the others, or the fact that various TVs have different panels, or that people set up their TVs as they please, and so on. I understand this was a big problem, but I'm arguing that even if you ignore it completely, HDR is still a threat to health. I can see it becoming less harmful in VR, where the user's whole FOV is known. But even then, you'd need to adjust on a per-user basis for what the given user's body can safely handle, and this varies a whole lot.

Lastly, there are newer studies suggesting we have underestimated the importance of NIR (near-infrared) light. In real life, you get this radiation in the morning, which "arms" your cells to better prepare for the upcoming damage from sunlight. In the afternoon, before sunset, you again get another portion of IR and NIR light without many harmful wavelengths, again aiding your body's natural defense system in dealing with the damage. Some people don't go out at all. They have bad health. They are often gamers. Pushing HDR may turn out to be as harmful as cigarettes or "tons of sugar in everything". IMHO this is a big issue and is on a direct path toward hurting people. Lots of people.

Don't get me wrong. I understand what HDR's appeal is. I switched from a CRT monitor made in 1990 to one made in 1996, then a few more times from used CRTs to new CRTs. Then came the biggest difference: from a CRT monitor (around 90 nits) to a TV (closer to 200 nits). The difference in contrast, color vibrancy, and shadow detail was absolutely huge, and the fact that I have no choice but to use a crappy TN doesn't make me an idiot who would say HDR doesn't help with certain content in certain environments (especially when you watch something on a sunny day in a sunny room; then having some parts of the screen at even 6000 nits is surely a good idea!).

But what's happening with HDR now, the whole craze, is simply insanely stupid and dangerous. Especially when you realize that color vibrancy was taken away by post-process effects in games, to make them look more natural/realistic, and now they push HDR displays so you can get some of it back. If you ever used a high-end CRT TV of a smaller size (bigger size = less brightness per inch), you surely won't say it was missing anything in terms of brightness and general "punch". The lights were lit, the fire was "burning", all as it should be.

But not in a sunny room at 1 PM. And maybe not for the 50-year-old people who write pro-HDR articles now. Well, yeah, maybe not for them.

> Once again, strong disagreement, and this boils down to the kind of content that is on screen. As I explained earlier, VRR is genuinely useful in situations where frame rates, and more importantly frame times, are not consistent. And that applies to just about everything that you or I have used.

I disagree with your disagreement. If your content's frame rate is inconsistent, your content is broken. Simple as that. If you could hold a stable 60fps on the PlayStation 1 in 1995, you can surely do a stable 120fps on the PS5. But the industry's level of professionalism has fallen a lot, and now you get insanely stupid situations like DIRT 5, which holds 90fps on consoles and most often 100fps, yet has no stable 90fps mode on consoles, and no 100Hz mode either. And the 120Hz mode it does have won't work with BFI, as BFI needs 120fps at 120Hz. That's not a problem with BFI. That's the problem of idiots ignoring the issue and the benefits of BFI, so it's underutilized and basically ruined. Why would the console lack 90Hz output? There's no hardware reason not to offer it. OK, let's pick something more mainstream: 100Hz. Nope. Not even that. Why wouldn't the game dev set the optimization parameters to 120fps? Surely if you can get Switch ports of PS5 games, you can move some damn slider a bit to improve the minimum frame rate. That often doesn't require any work, as the engines used for most games can do these things automatically. You'll just get more pop-in, less detailed and more jaggy shadows, etc. Surely it's better to offer such a compromise than to offer no mode capable of flickerless motion or reasonable quality in a racing game (racing! A genre so dependent on the sense of speed!).

As for other content: again, if your non-gaming content is VRR, it's broken too. I won't talk about this, though, as I don't know anything about VRR non-gaming content and honestly don't want to. I wouldn't watch such a thing anyway, unless it could be displayed properly with BFI (so maybe a movie at 100-120fps interpolated to 1000fps; then sure, why not).

Well, you'll see the issue clearly, as even your "tribe" (VRR over BFI) will feel the problems of the industry's stupidity once we start getting stupid UE 5.0 games at 30fps. At 35-40fps no VRR will be able to work anyway. By that logic, VRR itself would need to change, as 35fps content clearly shows it's not beneficial.

> In fact, inconsistent frame times are how the GPU gets any work done at all without a massive latency penalty. GPUs, if you don't know, are dynamic electronics, and will dial in their workload based on power, thermals, and exactly what is asked of them through the millions of APIs that do the same thing in a million ways. Funnily enough, a dev I know has been working on a software-sync tech that he had hoped would "kill VRR once and for all", but he kept running into issues of practical benefit versus VRR, and limitations due to smooth-brained game devs blatantly defying tech standards.

I'm no dev, but I've been reading about GPUs since before the first one arrived on the shelves, and I know about frame pacing issues, and about double and triple buffering techniques.

But for a stable frame rate, what matters more is your CPU's single-threaded performance, your overall CPU performance, and your CPU-RAM (or, in certain scenarios, CPU-cache) performance. If you have issues with frame delivery, it's usually due either to how your engine was designed (badly) or to insufficient CPU performance.

> And that is the point here: VRR takes care of the overall situation quite nicely regardless, while the "alternatives" require everything to function exactly as expected, which is comically unrealistic. BFI, for example, is only "ideal" if the content you watch/play has a completely perfect frame time graph with absolutely zero variance. The moment a spike occurs, you are going to have a very bad, if not painful, time with the display. That was part of why I had strongly suggested a change of blanking philosophy.

From my POV, it achieves nothing but some latency improvements, which are marginal compared to other latency-affecting factors. An inconsistent frame rate without BFI will always mean the image in motion is still completely broken and unusable. The fact that the game will feel smoother and more responsive doesn't change that. And the fact that devs are too stupid to control their frame rate, or to prioritize it over fancy new rendering technologies, is another story.

> As for the engineers, they are not a lot better in the grand scheme of things. They have a holier-than-thou attitude about just about everything under the sun, even when the subject in question involves a lot of social context. I have seen for myself the effects of anti-social behaviour and how commonplace it is among engineers. They often portray themselves as the ultimate information gods, and they are rarely willing, let alone able, to accept critical feedback on the information they provide, especially where social context and understanding are involved. If you provide them the kind of information that is beyond their limited scope of understanding, it would be silly not to expect them to become an incredible nuisance to talk with. I will just leave it to you to guess what specific kind of toxic mindset they would unironically praise.

Oh, the irony. You wouldn't believe how toxic people get when I argue about the importance of motion clarity.

Even when I present facts and explain them.

> The matter of fact is, you cannot confine yourself to the physics "as is" or the "raw science" of a situation. Social context is completely unavoidable, and that is part of the point I wanted to deliver in this thread. Understanding personal limits and having unbiased views of a tech is a million times more important than focusing on the dreamy desires of a few and being selective about the benefits and drawbacks. With the specific solutions I had brought up, such as interlacing and checkerboarding, the effects would, strictly speaking, have been a placebo, but in the scope of user reception and feedback, that does not really matter. A nice-looking placebo will always be preferred over a "true" implementation that comes with drawbacks, and that is just how it is.

But an understanding of the importance of motion clarity and the benefits of BFI (since no technology for any other solution will be available in the near-term future) cannot reach the mainstream. It becomes a niche because it's hard to understand. As I mentioned, it has too many pitfalls, and people are not willing to become scientists analyzing what happens in their own brains while they are having fun (or not). I'll give you an example: I've posted something like 1000 forum replies and threads about it. Most people disagreed and became toxic after I refused to accept their narrative that I'm just a weird guy who focuses on motion too much. On average, about 1 in 20 people were convinced. But about 15 people bought the monitors I recommended and gave BFI a try. Of those who had never played on a clear-motion display before, 80% sent me DMs later on, thanking me for being so persistent in convincing them. Of the 5 people I gave a "presentation" to, sitting them down in front of 2 monitors side by side, all 5 were convinced. Not convinced: 0. Of those 5, 2 had been really stubborn and sure they wouldn't change their minds.

And... some of those convinced people turned back around after a while, due to a psychological trap I'd have to write a whole wall of text about. In short: the information in the brain evaporates, and the gamer stops being aware of what he or she is missing.

> Low latency is very important in gaming, especially in the context of HFR, and even at 60Hz, so the need to disable such a mode is highly questionable in that scope. Sure, you may argue about it activating only in games, but that opens a hornet's nest of situations where low latency is still desirable and BFI is much less useful by comparison. And as I said, BFI in the wild today only has a single on/off setting, and given the problems it has in real-world use and the absurd lack of fine-tuning, it raises the question of why anyone would want BFI in a gaming system.

But latency doesn't depend on VRR alone. VRR isn't even the major part of it.

Once you move from 60fps v-sync to 120fps v-sync, latency improves by a lot. And if your code is properly written and the environment is configured (OS, API, driver, etc.), it's good enough even without VRR. Then, if you need more, you can simply disable v-sync. Motion quality will degrade by a lot, but it still won't be worse than VRR without BFI. This means you can get comparable or even better latency without VRR if you play at 120fps. And if you are a game dev creating a game in which latency matters even a bit, you should NOT even consider targeting anything under 120fps.

Then there are higher refresh rates. At 240fps, the difference between VRR and v-sync will be microscopic.
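The shrinking gap can be estimated with a simplified model: with v-sync, a finished frame waits for the next refresh, so the extra wait is at most one refresh period and about half a period on average, whereas VRR shows the frame almost immediately. This sketch assumes frame completion times are spread uniformly across the refresh interval and ignores queued frames and render-ahead, which in practice can add whole frames of latency; the function name is hypothetical.

```python
def vsync_wait_ms(refresh_hz: float) -> tuple[float, float]:
    """(average, worst-case) extra wait for the next refresh under v-sync, in ms.

    Simplified model: no queued frames, and frames finish at uniformly random
    points within the refresh interval, so the average wait is half a period.
    """
    period_ms = 1000.0 / refresh_hz
    return period_ms / 2.0, period_ms


if __name__ == "__main__":
    for hz in (60, 120, 240):
        avg, worst = vsync_wait_ms(hz)
        print(f"{hz:>3} Hz: v-sync adds ~{avg:.2f} ms on average, {worst:.2f} ms at worst")
```

Under these assumptions, v-sync costs roughly 8ms on average at 60Hz but only about 2ms at 240Hz, small next to the engine-side latencies discussed below.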

Look around at what's happening in the industry. We are about to enter the 30fps swamp again due to the hype around UE 5.0 and ray tracing. Look at RDR2. It prioritized animation quality and buffering techniques to push the visuals so much that latency reached 150-200ms, even 250ms in some cases. The same happened with GTA IV and V on the PS3 and Xbox 360.

The latency of 60fps online shooters can vary by a factor of TWO just because of how they are written. VRR recovers only a fraction of that difference. Seriously, there's no need to push VRR everywhere. You just don't push 4K, triple buffering, and super-complex shaders into a fricking Quake Arena-like game.

(and yeah, that happens in the industry nowadays)

So, instead of fixing their wrongdoing by improving the games and their approach to displays (TV and monitor manufacturers are also to blame), we got something that, by trying to fix one part of the problem (latency), breaks things even more (for now, at least, VRR is the enemy of BFI and motion clarity).

The same goes for variable rate shading, which tries to save some GPU work to get more performance in static images, but at the cost of motion quality.

You shouldn't turn the problem upside down. Just as I wouldn't agree in 2030 that motion clarity is completely unimportant because 100% of games use stupid temporal AA methods and motion blur, and the image looks like Fallout 76 (a synonym) in motion due to variable rate shading anyway.