Re: What will a fine PC's latencymon result look like?

Posted: 17 May 2024, 17:59

Don’t forget to change settings so it displays kernel latency.


Yes, mine shows 2.9 GHz and 2.9 GHz here, even when running a game while LatencyMon runs in the background...

themagic wrote: ↑07 Feb 2025, 15:13
Yes, mine shows 2.9 GHz and 2.9 GHz here, even when running a game while LatencyMon runs in the background...

It's because of the timer resolution. Kernel latency will always be around 500, 1000 or 12000.

But shouldn't the reported speed be 4.1 GHz? 2.9 GHz is the stock speed of my CPU, i.e. what it runs at without CPU boost enabled in the BIOS. But everything is enabled, and Task Manager shows 4.1 GHz when running apps.

I don't get this shit with those reports and why that is...

What does it show for you in the reports?

OK, now I understand why...

_________________________________________________________________________________________________________

Reported CPU speed (WMI): 2901 MHz

Reported CPU speed (registry): 2904 MHz

Note: reported execution times may be calculated based on a fixed reported CPU speed. Disable variable speed settings like Intel Speed Step and AMD Cool N Quiet in the BIOS setup for more accurate results.

_________________________________________________________________________________________________________
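To make the note in the report a bit more concrete, here is a minimal sketch of how a fixed reported clock speed could skew a computed execution time. It assumes, purely for illustration, that a time is derived as cycles divided by clock speed; that is not a confirmed description of how LatencyMon works internally. The 2901 MHz figure is from the report above, the 4100 MHz figure is the Task Manager reading mentioned earlier, and the cycle count is hypothetical.

```python
def cycles_to_microseconds(cycles: int, clock_mhz: float) -> float:
    """Convert a cycle count to microseconds (1 MHz = 1 cycle per microsecond)."""
    return cycles / clock_mhz


REPORTED_MHZ = 2901.0  # "Reported CPU speed (WMI)" from the report above
BOOST_MHZ = 4100.0     # boost clock shown by Task Manager, per the post

# Hypothetical cycle count for a single routine (not a measured value).
cycles = 290_100

# Time if the tool assumes the fixed reported speed.
at_reported = cycles_to_microseconds(cycles, REPORTED_MHZ)

# Time if the core was really running at the boost clock.
at_boost = cycles_to_microseconds(cycles, BOOST_MHZ)

print(f"assuming {REPORTED_MHZ:.0f} MHz: {at_reported:.1f} us")
print(f"assuming {BOOST_MHZ:.0f} MHz: {at_boost:.1f} us")
print(f"ratio: {at_reported / at_boost:.2f}x")
```

Under that assumption, the same cycle count comes out about 1.4 times longer when divided by the fixed 2901 MHz than by the 4100 MHz boost clock, which is one way to read the report's advice to disable variable speed settings.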

I think this may also have something to do with idle and power savings... but 360 MHz is a strange idle speed, and I've only ever seen 800 MHz at idle on the processors here...

Guys, check my kernel time...

But this shit trash program still says: Your system appears to be suitable for handling real-time audio and other tasks without dropouts.

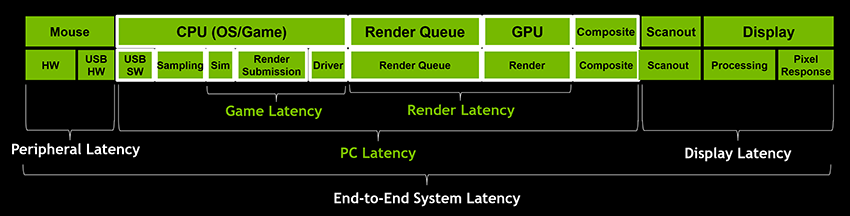

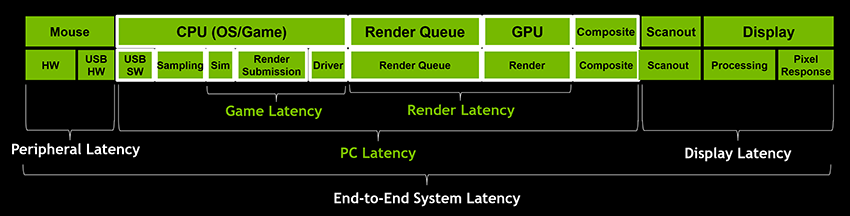

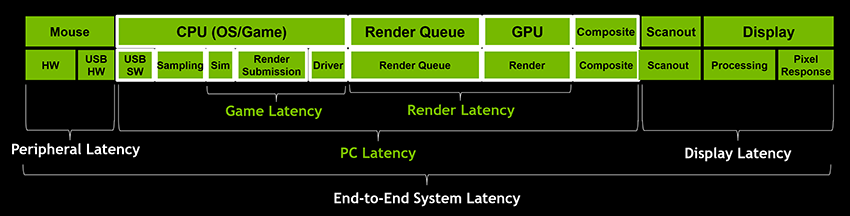

synthMaelstrom wrote: ↑09 Feb 2025, 08:52
Am I correct in assuming that you guys are obsessing over the tiny portion of the input latency chain, measured in nanoseconds, in the hope of reducing input lag?

No, we are simply comparing results. The PC gaming community needs to come to an agreement about what makes sense and what doesn't. The way I see it, there are only flawed testing methodologies at the moment, and just because it is widely accepted that microseconds of delay are imperceptible doesn't make it true. This is the same thing as self-proclaimed geniuses measuring latency with mouse clicks and swearing that better hardware means lower latency and newer software means better compatibility and performance. Ironically, the level-headed people today are those who idealize the most without taking actual possibilities into account.

Have you tried the Latency Split Test by ApertureGrille? I struggle to perceive a difference starting from 7ms of added latency, anything higher I can pinpoint with >90% accuracy. From what I've seen online some people can accurately tell the difference at as low as 3ms, but microseconds? 400μs = 0.4ms, sure the difference is there since it's measurable, but spending any more time than reasonable on optimising this part of the chain to bring it down to 0.2ms or even 0.1ms seems counterproductive. Look, I'm not bashing the practice, just putting it out there that a tech tinkerer's precious time is much better spent diagnosing the rest of the chain.
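For scale, the arithmetic in the post above can be checked with a few lines of Python. This is only a sketch: the 400 μs figure and the 7 ms and 3 ms thresholds are the ones quoted in the post, and the variable names are made up for illustration.

```python
def us_to_ms(microseconds: float) -> float:
    """Convert microseconds to milliseconds."""
    return microseconds / 1000.0


kernel_latency_ms = us_to_ms(400)  # 400 us, as in the post
poster_threshold_ms = 7.0          # where the poster starts to perceive added latency
lowest_claimed_ms = 3.0            # lowest threshold the poster has seen claimed online

# Share of each perception threshold taken up by the kernel latency figure.
share_of_7ms = kernel_latency_ms / poster_threshold_ms
share_of_3ms = kernel_latency_ms / lowest_claimed_ms

print(f"{kernel_latency_ms} ms is {share_of_7ms:.1%} of 7 ms")
print(f"{kernel_latency_ms} ms is {share_of_3ms:.1%} of 3 ms")
```

On those numbers, 0.4 ms is roughly 6% of the 7 ms threshold and roughly 13% of the 3 ms one.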

synthMaelstrom wrote: ↑10 Feb 2025, 03:17
Have you tried the Latency Split Test by ApertureGrille? I struggle to perceive a difference starting from 7ms of added latency, anything higher I can pinpoint with >90% accuracy. From what I've seen online some people can accurately tell the difference at as low as 3ms, but microseconds? 400μs = 0.4ms, sure the difference is there since it's measurable, but spending any more time than reasonable on optimising this part of the chain to bring it down to 0.2ms or even 0.1ms seems counterproductive. Look, I'm not bashing the practice, just putting it out there that a tech tinkerer's precious time is much better spent diagnosing the rest of the chain.

I haven't heard of it, but it seems interesting, so I'll check it out sometime.

synthMaelstrom wrote: ↑09 Feb 2025, 08:52
Am I correct in assuming that you guys are obsessing over the tiny portion of the input latency chain, measured in nanoseconds, in the hope of reducing input lag?

People on this subforum are clueless and like to wander in circles, keep this in mind.