masneb wrote: Obviously this technology is still in its infancy

According to the rep in the video, if that's all this new "Anti-Lag" feature is, it isn't in its infancy; it's been around forever. He states in that video:

So anti-lag is a software optimization. What we're doing is we're making sure that the CPU work where your input is registered doesn't really get too far ahead of the GPU work where you're drawing the frame, so that you don't have too much lag between click mouse, when you press key, and when you hit the response out on the screen.

What he's describing is literally the pre-rendered frames queue, which (I think) is already available to control via AMD reg edits, and is an existing entry in the Nvidia control panel.

The only difference I see (unless they're holding a lot back here) is possibly automatic and/or more reliable global override of the existing in-game frames ahead queue value at the driver level.

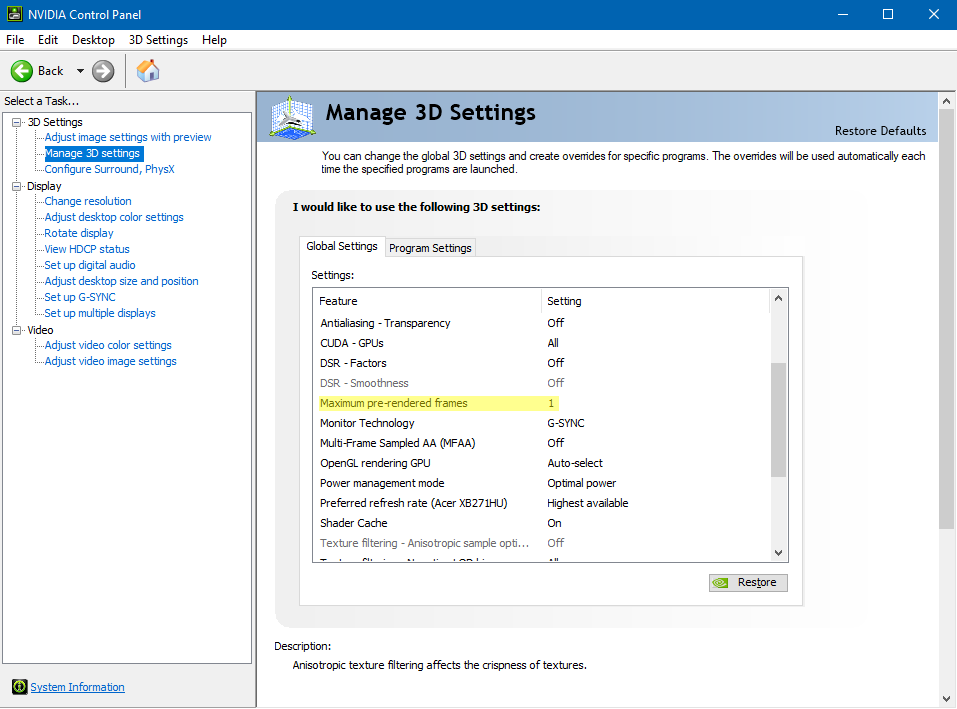

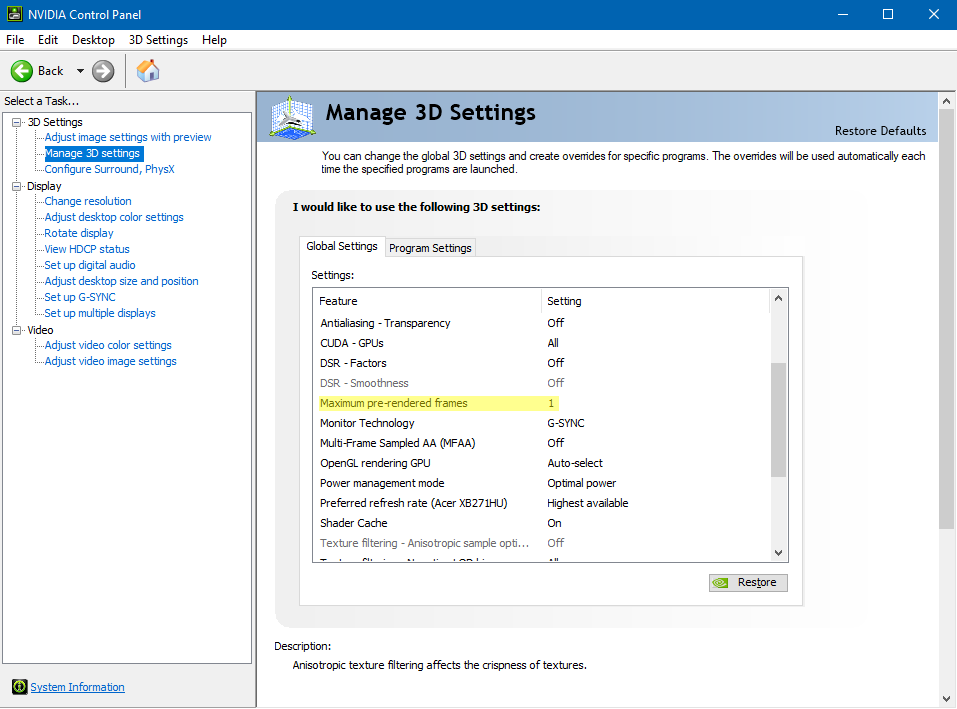

If you have an Nvidia GPU, you can do this now (for the games that accept the override; not all do, usually for engine-specific or raw performance reasons); just set the below option to "1":

I have a description of this setting in my G-SYNC 101 article's "Closing FAQ" as well:

https://www.blurbusters.com/gsync/gsync ... ttings/15/

Some games also offer an engine-level equivalent of this setting, like Overwatch, which labels it "Reduced Buffering."

Finally, the input lag difference between AMD and Nvidia on the test setups in that video can easily be explained. Nvidia's max pre-rendered frames entry is set to "Use the 3D application setting" at the driver level by default (an equivalent of "2" or "3" pre-rendered frames). If they left it at that and enabled their own "Anti-Lag" feature on the AMD setup (probably an equivalent of "1" pre-rendered frame), you'd get the difference we're seeing there: at 60 FPS, each frame is worth about 16.7ms, and the difference is about 15ms in the video.
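To put rough numbers on that estimate, here's a minimal sketch of the arithmetic. It assumes a simplified model where the game is GPU-bound and each frame sitting in the pre-rendered queue adds one full frame time of lag; the function names are just mine for illustration, and real-world results will vary by engine and setup.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of a single frame in milliseconds at the given frame rate."""
    return 1000.0 / fps


def queue_latency_ms(queued_frames: int, fps: float) -> float:
    """Approximate lag added by the pre-rendered frames queue.

    Assumption (simplified model): the game is GPU-bound, so every frame
    the CPU has queued ahead of the GPU adds one full frame time of delay.
    """
    return queued_frames * frame_time_ms(fps)


def latency_saved_ms(from_frames: int, to_frames: int, fps: float) -> float:
    """Approximate lag removed by shrinking the queue from one depth to another."""
    return queue_latency_ms(from_frames, fps) - queue_latency_ms(to_frames, fps)


if __name__ == "__main__":
    fps = 60
    print(f"Frame time at {fps} FPS: {frame_time_ms(fps):.1f} ms")
    # Compare an assumed default of "2" or "3" against a queue of "1".
    for depth in (2, 3):
        saved = latency_saved_ms(depth, 1, fps)
        print(f"Queue {depth} -> 1 saves about {saved:.1f} ms")
```

Under this model, going from "2" to "1" at 60 FPS is worth about one frame time, which is in the same ballpark as the roughly 15ms gap shown in the video.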

So far, this just sounds like very clever marketing and re-framing of an existing setting for an audience that is less familiar with this than we are. That isn't a bad or disappointing thing though, at least from my perspective (if it ends up being the case); it would be great to have a more clearly/correctly labeled and guaranteed pre-rendered frames queue override for virtually every game it's applied to (something that doesn't seem to be the case with the Nvidia setting currently).