hmukos wrote: ↑17 May 2020, 12:20

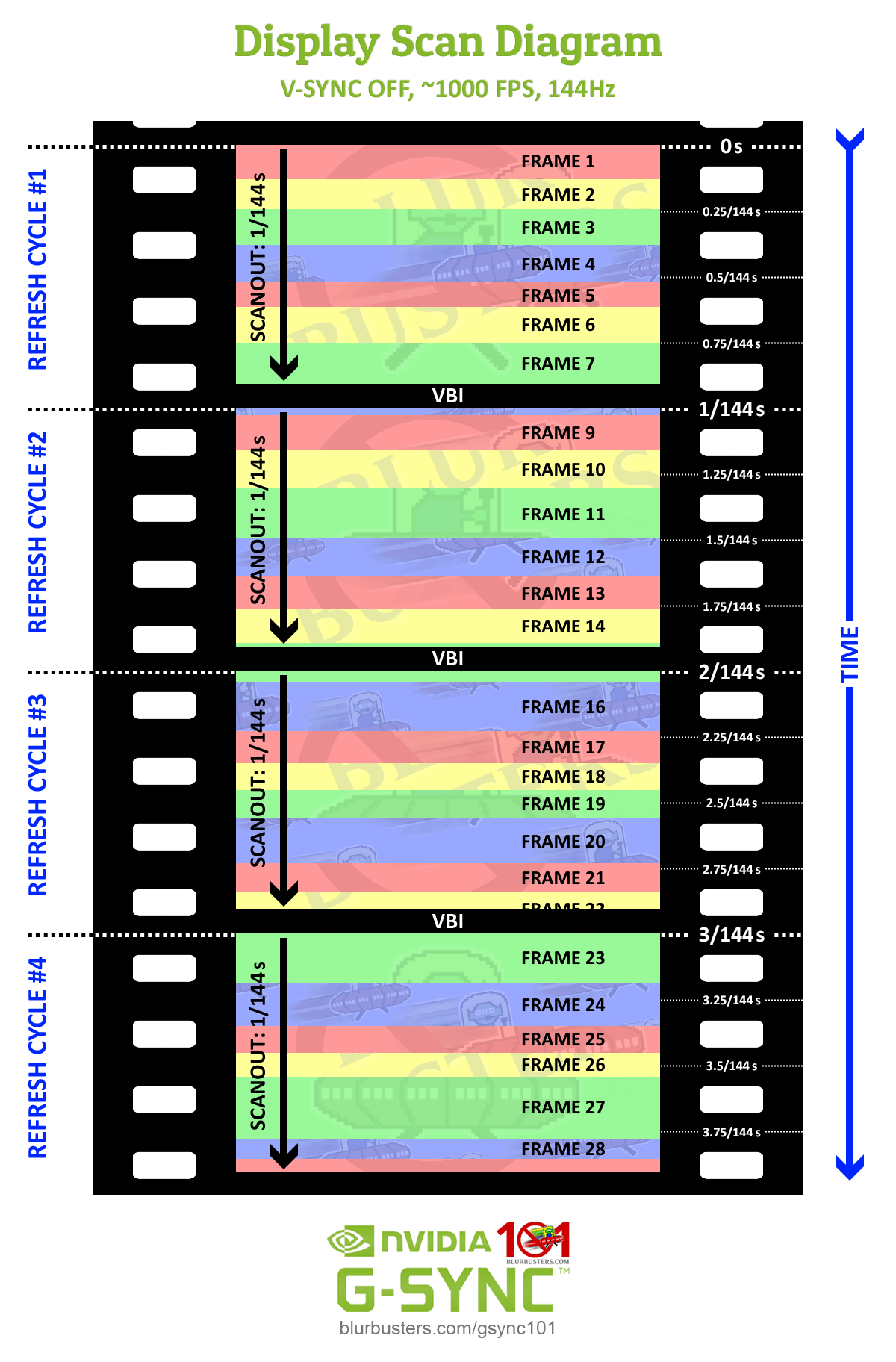

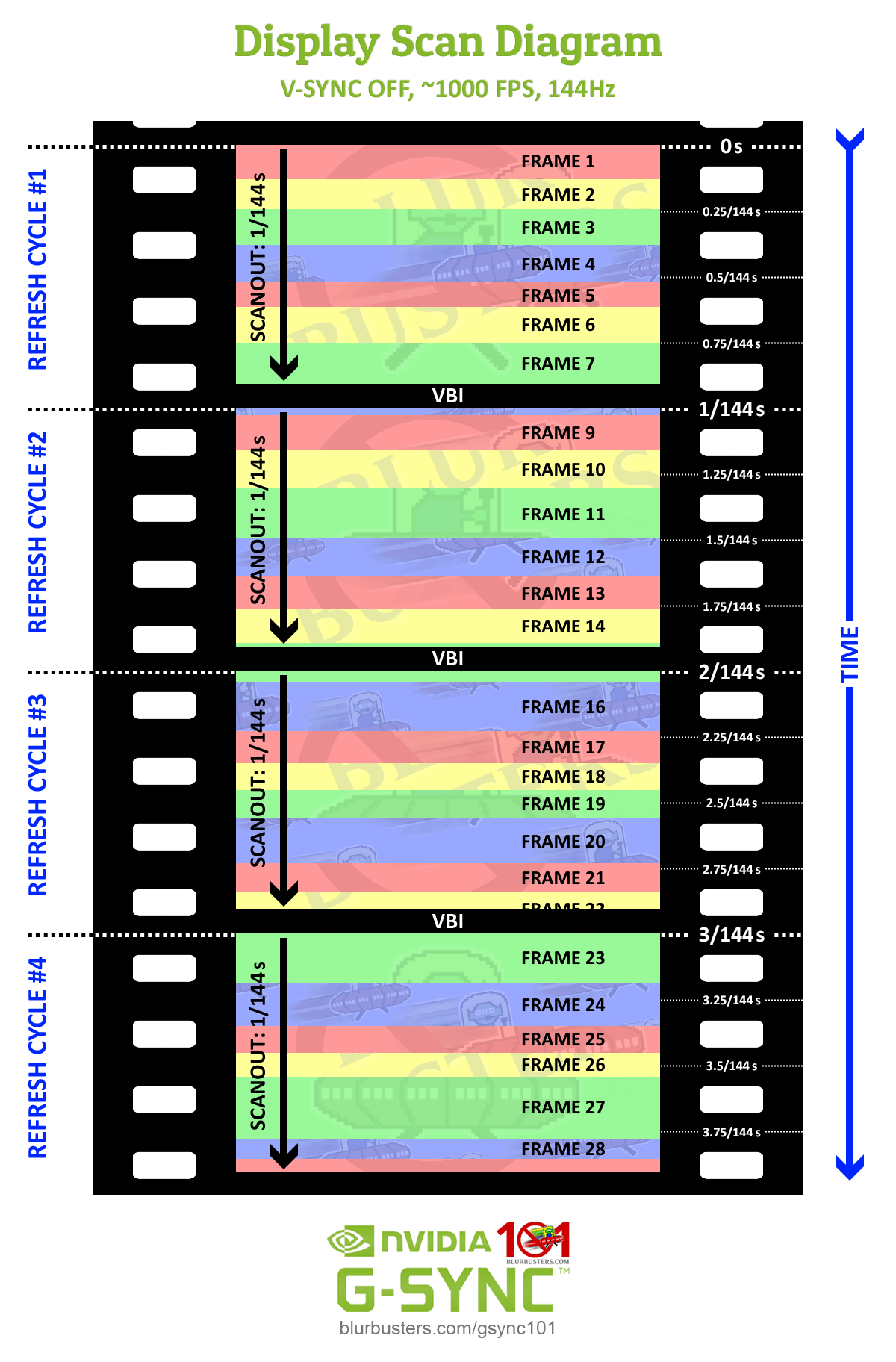

That's what I don't understand. Suppose we made an input right before "Frame 2" and then it rendered and its torn part was scanned out. How is that different in terms of input lag than if we did the same for "Frame 7"? Doesn't V-SYNC OFF mean that as soon as our frame is rendered, its torn part is instantly shown at the place where the monitor was scanning right now?

Yes, VSYNC OFF frameslices splice into cable scanout (usually within microseconds), and some monitors have panel scanout that is in sync with cable scanout (sub-refresh latency pixel-for-pixel).

What I think Jorim is saying is that higher refresh rates reduce the latency error margin caused by scanout. 60Hz produces a 16.7ms range, while 240Hz produces a 4.2ms range.
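Those two ranges are just the time of one full top-to-bottom scanout. A minimal sketch of the arithmetic, where `scanout_range_ms` is a hypothetical helper name and the blanking interval is ignored for simplicity:

```python
def scanout_range_ms(refresh_hz: float) -> float:
    """Time for one full scanout pass, in milliseconds.

    Assumption: the scan sweeps the whole refresh period (no blanking interval).
    """
    return 1000.0 / refresh_hz


for hz in (60, 240):
    print(f"{hz} Hz: {scanout_range_ms(hz):.1f} ms")
# 60 Hz: 16.7 ms
# 240 Hz: 4.2 ms
```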

At a given frame rate, say 500fps, each frame outputs 4x more frameslice pixels at 240Hz than at 60Hz. That's because the scanout is 4x faster, so a frame spews out a 4x-taller frameslice at 240Hz than at 60Hz. Each frameslice is a latency sub-gradient within the scanout lag gradient. So for 100fps, a frameslice has a lag of [0ms...10ms] from top to bottom. For 500fps, a frameslice has a lag of [0ms...2ms] from top to bottom. We're assuming a 60Hz display doing 1/60sec scan velocity and a 240Hz display doing 1/240sec scan velocity, with identical processing lag, and thus identical absolute lag.
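To make the frameslice geometry concrete, here is a rough sketch under simplifying assumptions: a 1080-row panel, perfectly steady frame pacing, constant scan velocity, and no blanking interval. The function name `frameslice` and its parameters are illustrative only. The slice height is the number of rows the scanout covers during one frame time, and the top-to-bottom lag span of a slice is that same frame time.

```python
def frameslice(refresh_hz: float, fps: float, rows: int = 1080):
    """Return (slice_height_rows, lag_span_ms) for VSYNC OFF at a steady fps.

    Assumptions: constant scan velocity, no blanking interval, even frame pacing.
    A height larger than `rows` means one frame spans more than one refresh cycle.
    """
    frame_time_ms = 1000.0 / fps          # how long each frame stays "current"
    scanout_ms = 1000.0 / refresh_hz      # time to scan all rows once
    height = rows * frame_time_ms / scanout_ms
    return height, frame_time_ms


for hz in (60, 240):
    height, span = frameslice(hz, fps=500)
    print(f"{hz} Hz @ 500 fps: ~{height:.0f} rows per slice, lag 0..{span:.0f} ms")
# 60 Hz @ 500 fps: ~130 rows per slice, lag 0..2 ms
# 240 Hz @ 500 fps: ~518 rows per slice, lag 0..2 ms
```

The slice is 4x taller at 240Hz, while the lag span inside a slice depends only on the frame rate.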

Even if average absolute lag is identical for a perfect 60Hz monitor and for a perfect 240Hz monitor, the lagfeel is lower at 240Hz because more pixel update opportunities are occurring per pixel, allowing earlier visibility per pixel.

Now, if you measured average lag of all 1920x1080 pixels from all possible infinite gametime offsets to all actual photons (including situations where NO refresh cycles are happening and NO frames are happening), the average visibility lag for a pixel is actually lower at 240Hz, thanks to the more frequent pixel excitations.

Understanding this properly requires decoupling latency from frame rate, and thinking of gametime as an analog continuous concept instead of a digital value stepping from frame to frame. Once you math this out correctly, it makes sense.

Metaphorically, and mathematically (in photon visibility math), it is akin to real life shutter glasses flickering 60Hz versus 240Hz while walking around in real life (not staring at a screen). Your real life will feel slightly less lagged if the shutters of your shutter glasses are flashing at 240Hz instead of 60Hz. That's because of more frequent retina-excitement opportunities of the real-world photons hitting your eyeballs, reducing average lag of the existing apparent photons.

Once you use proper mathematics (treat a display as an infinite-refreshrate display) and calculate all lag offsets from a theoretical analog gametime, there's always more lag at lower refresh rates, despite having the same average absolute lag.
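One way to sketch that calculation is a toy model, not a formal lag standard: an event happens at an arbitrary continuous gametime, and a given pixel can only show it at that pixel's next update, which comes around once per refresh period. Averaging the wait over evenly spaced event times gives half a refresh period. The name `mean_wait_ms` is hypothetical, and processing lag is left out because it is assumed identical on both displays.

```python
def mean_wait_ms(refresh_hz: float, samples: int = 100_000) -> float:
    """Average wait from a continuous-time event to a pixel's next update.

    Toy model: each pixel is updated exactly once per refresh period and
    event times are spread evenly over one period (analog gametime).
    """
    period = 1000.0 / refresh_hz
    total = 0.0
    for i in range(samples):
        t = (i + 0.5) / samples * period  # event time within one refresh period
        total += period - t               # wait until the pixel's next update
    return total / samples


for hz in (60, 240):
    print(f"{hz} Hz: average wait {mean_wait_ms(hz):.2f} ms")
# 60 Hz: average wait 8.33 ms
# 240 Hz: average wait 2.08 ms
```

In this model the extra average wait is 4x smaller at 240Hz, even though nothing about processing lag changed.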

Most lag formulas don't factor this in -- not even absolute lag. Nearly all lag test methodologies neglect to consider the more frequent pixel excitation opportunity factor, if you're trying to use the real world as the scientific lag reference.

The existing lag numbers are useful for comparing displays, but neglect to reveal the lag advantage of 240Hz when 240Hz and 60Hz have identical absolute lag, thanks to the more frequent sampling rate along the analog domain. Mathing this out correctly requires a lag test method that decouples from digital references and calculates against a theoretical infinite-Hz instant-scanout mathematical reference (i.e. real life as the lag reference). Once the lag formula does this, suddenly, the "same-absolute-lag number" feels like it's hiding many lag secrets that many humans do not understand.

We have a use for this type of lag reference too, as part of a future lag standard, because VR and Holodecks are trying to emulate real life, and thus, we need lag standards that have a venn diagram big enough -- to use this as a reference.

Lag is a complex science.