Re: Blur Buster's G-SYNC 101 Series Discussion

Posted: 18 May 2020, 13:41


Who you gonna call? The Blur Busters! For Everything Better Than 60Hz™

https://forums.blurbusters.com/

> Chief Blur Buster wrote: 18 May 2020, 16:37
>
> At the driver level, "pre-rendered frames" and "buffer" are essentially the same format, so I consider them one and the same.

Correct; conceptual, oversimplified wording on my part. They are indeed essentially part of the same thing.

> Chief Blur Buster wrote: 17 May 2020, 13:05
>
> Even if average absolute lag is identical for a perfect 60Hz monitor and for a perfect 240Hz monitor, the lagfeel is lower at 240Hz because more pixel update opportunities are occurring per pixel, allowing earlier visibility per pixel.

I intuitively grasp this, but doesn't the first on-screen reaction measure "absolute" input lag rather than lagfeel? Imagine, for example, an infinite-framerate situation on a 60Hz instant-response screen, and let's ignore all input lag (mouse, engine, etc.) except what's related to scanout. Shouldn't the vertical line in jorimt's CS:GO test map start moving instantly, beginning at the point the display is scanning out at that moment? In what situation would there be a 1/60 s delay before the first on-screen reaction?
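A minimal Python sketch of the idealized case in the question, assuming VSYNC OFF at effectively infinite framerate, a top-to-bottom scanout that spans the whole refresh period, no vertical blanking interval, and zero mouse, engine, render, and pixel-response lag. The function names are hypothetical. In this model the first changed pixels do appear immediately wherever the scanout happens to be, but any particular row (say, where the crosshair is) has to wait until the scanout reaches it: anywhere from zero up to a full refresh (1/60 s at 60Hz) if the scanout has just passed that row, and half a refresh on average.

```python
# Idealized model (assumption): VSYNC OFF, effectively infinite FPS, top-to-bottom
# scanout lasting the full refresh period, no VBI, and no other lag sources.


def row_wait_ms(input_ms: float, row_frac: float, refresh_hz: float) -> float:
    """Delay from an input until the scanout next draws row_frac (0 = top, 1 = bottom)."""
    period_ms = 1000.0 / refresh_hz
    # Scanout position at the moment of input, as a fraction of screen height.
    beam_frac = (input_ms % period_ms) / period_ms
    # Remaining distance to the target row, wrapping into the next refresh if the
    # scanout has already passed it.
    return ((row_frac - beam_frac) % 1.0) * period_ms


def average_row_wait_ms(row_frac: float, refresh_hz: float, samples: int = 10_000) -> float:
    """Mean wait for one row, with input times spread evenly over one refresh period."""
    period_ms = 1000.0 / refresh_hz
    waits = [row_wait_ms(i * period_ms / samples, row_frac, refresh_hz) for i in range(samples)]
    return sum(waits) / samples


if __name__ == "__main__":
    for hz in (60, 240):
        avg = average_row_wait_ms(0.5, hz)
        worst = row_wait_ms(0.001, 0.0, hz)  # scanout has just passed the top row
        print(f"{hz} Hz: mid-screen average {avg:.2f} ms, near-worst case {worst:.2f} ms")
```

Under these assumptions, the same row gets a fresh scanout opportunity four times as often at 240Hz, so both its average and its worst-case wait shrink by that factor.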

Could you please elaborate on how those testing methods would differ in this situation?

Because with the camera continually spinning in place, there would be no way to test for "input" lag: the screen is constantly and visibly updating, with no way to tell when input started or stopped. Instead, you're effectively just watching a non-interactive video loop rendered in real time. And as we all know, with videos and movies, input lag doesn't matter in the least; only audio/video sync does.

The same tearlines are effectively being drawn in the same places, but since the 60Hz scanout is slower, it reveals them more slowly than it does at 240Hz.
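To put rough numbers on the point above, that the same tearlines are simply revealed more slowly at 60Hz, here is a minimal Python sketch. It assumes a 1080-row panel, a top-to-bottom scanout that spans the full refresh period, no vertical blanking interval, and a hypothetical set of tearline rows treated as fixed; it only computes when each tearline is drawn after the scanout starts.

```python
SCREEN_ROWS = 1080  # assumed panel height in pixel rows


def reveal_time_ms(row: int, refresh_hz: float) -> float:
    """Time after the start of a scanout at which a given pixel row is drawn."""
    return row / SCREEN_ROWS * 1000.0 / refresh_hz


# Hypothetical tearline positions, identical for both refresh rates.
TEARLINES = [135, 405, 675, 945]

if __name__ == "__main__":
    for hz in (60, 240):
        times = ", ".join(f"{reveal_time_ms(r, hz):.2f}" for r in TEARLINES)
        print(f"{hz} Hz: tearlines drawn at {times} ms after scanout start")
```

The rows are the same in both cases; only the timestamps change, and each one lands four times later at 60Hz (about 16.7 ms per full scanout) than at 240Hz (about 4.2 ms).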

> jorimt wrote: 19 May 2020, 09:02
>
> ...take a single static image of a game scene containing multiple tearlines frozen in motion across a single, complete scanout cycle; now print it out, once with a fast printer, once with a slow printer. Both will ultimately print the same image, but one will print it faster. That's effectively what you're seeing in those 60Hz vs. 240Hz V-SYNC off graphs.

Doesn't this example require that an already-torn image was stored somewhere before scanout? I thought tearing happens when a new frame is rendered while the panel is scanning out.

> BTRY B 529th FA BN wrote: 19 May 2020, 11:37
>
> Just so this is clear, by "Non-G-SYNC" do you mean G-Sync + Vsync Off, or (monitor technology) Fixed Refresh + Vsync Off? Or are they the same thing?

Fixed refresh, i.e., any scenario that doesn't use VRR.

> hmukos wrote: 19 May 2020, 11:56
>
> Doesn't this example require that an already-torn image was stored somewhere before scanout? I thought tearing happens when a new frame is rendered while the panel is scanning out.

I'm not sure whether I'll be able to make it much clearer for you at this point, but...

So, looking at this picture, shouldn't we see some reaction on the first scanout right when "Draw 1" happened? This is how I see it: at first, some frame was in the buffer and the panel was scanning it out; then the GPU drew a new frame based on the latest input, and the panel immediately continued the scanout, but with the new frame. How can input lag in this situation be longer than the time it took to render that last frame?

> jorimt wrote: 19 May 2020, 14:15
>
> The graph you embedded as an example is 1) measuring in 16ms intervals (aka 60Hz; for 240Hz, that same graph would be measured in 4ms intervals), and 2) at what looks to be 60 FPS (single tearline per frame scan).

Yes, I'm aware, but I couldn't find a better picture to illustrate what I was trying to say.

> Pointing back to the scenario in your original question, with 2000 FPS V-SYNC off @60Hz, there are parts of multiple frames being delivered in the span of a single scanout cycle.

But wouldn't the first or second tearline after the input already reveal the change (assuming other factors don't add lag)? In what case would we have to wait for all 5 tearlines to be scanned out before seeing an on-screen reaction to the input?

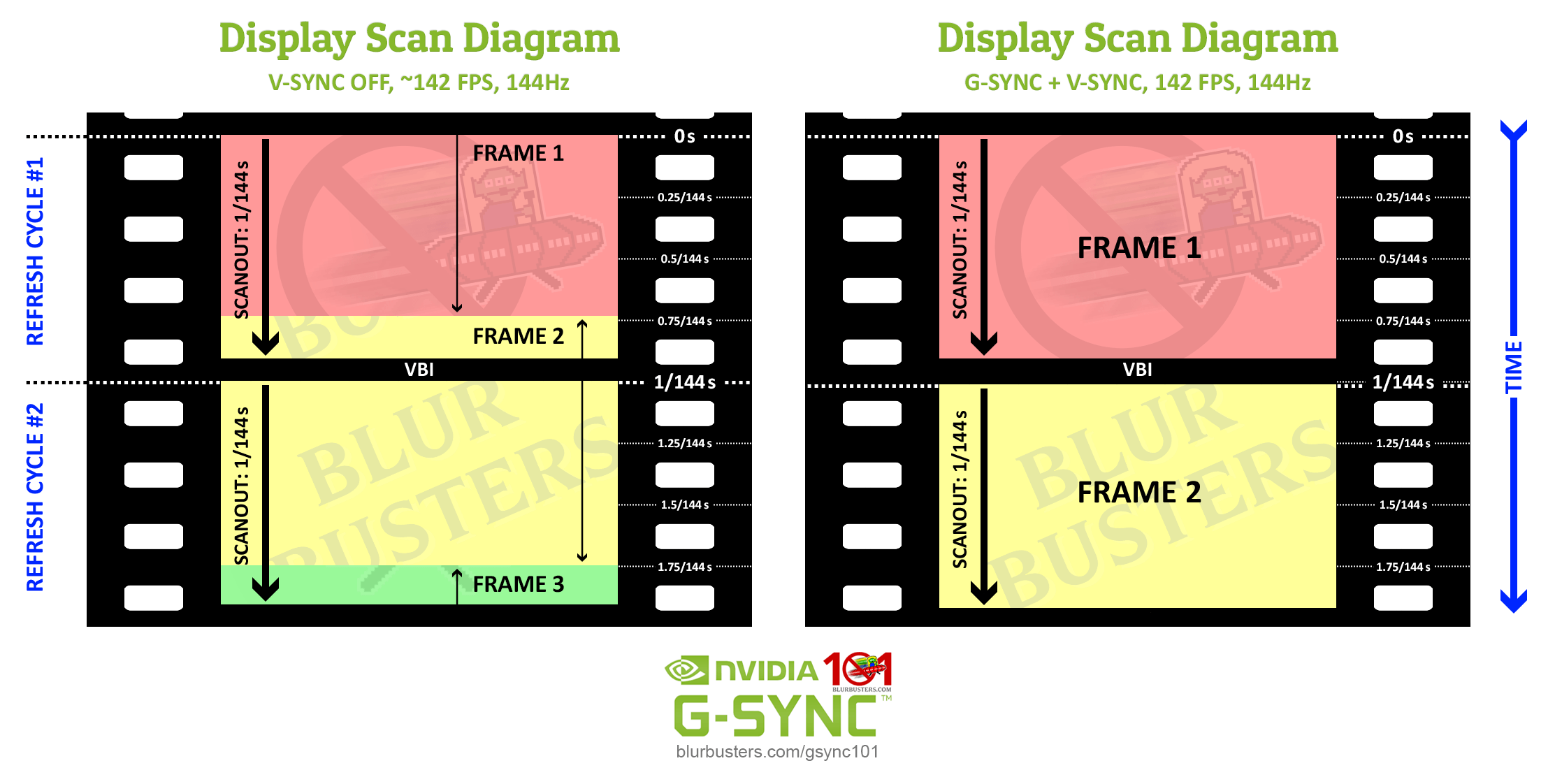
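For the 2000 FPS @ 60Hz scenario, a minimal Python sketch of where tearlines land within one scanout and how soon the first frame containing an input appears. Everything here is an assumption for illustration, not a measurement: a 1080-row panel, no vertical blanking interval, perfectly even frametimes, a GPU-bound pipeline with no render queue, and input that is read when the next frame starts rendering and reaches the screen when that frame is flipped one frametime later. The function names are hypothetical. Under these assumptions, the first frame that includes the input splices in at whatever row the scanout has reached, so the reaction starts there; later tearlines only matter for content further down the screen.

```python
import math

SCREEN_ROWS = 1080  # assumed panel height in pixel rows


def tearline_rows(fps: float, refresh_hz: float, phase_ms: float = 0.0) -> list[int]:
    """Rows at which a new frame splices into a single scanout cycle.

    Assumes one flip every 1000/fps ms, starting phase_ms after the scanout
    begins; a flip landing on row 0 is simply a clean frame start.
    """
    period_ms = 1000.0 / refresh_hz
    frame_ms = 1000.0 / fps
    rows = []
    t = phase_ms
    while t < period_ms:
        rows.append(int(t / period_ms * SCREEN_ROWS))
        t += frame_ms
    return rows


def first_reaction(input_ms: float, fps: float, refresh_hz: float) -> tuple[float, int]:
    """Delay and screen row for the first frame that includes an input.

    Simplified pipeline (assumption): frames start rendering every 1000/fps ms from
    t = 0, the input is read at the next frame start, and that frame is flipped one
    frametime later, splicing in at the row the scanout has reached.
    """
    frame_ms = 1000.0 / fps
    period_ms = 1000.0 / refresh_hz
    flip_ms = math.ceil(input_ms / frame_ms) * frame_ms + frame_ms
    row = int((flip_ms % period_ms) / period_ms * SCREEN_ROWS)
    return flip_ms - input_ms, row


if __name__ == "__main__":
    print(f"refresh period: 60 Hz = {1000 / 60:.1f} ms, 240 Hz = {1000 / 240:.1f} ms")
    print("tearlines per scanout at 2000 FPS @ 60 Hz:", len(tearline_rows(2000, 60)))
    print("tearlines per scanout at 60 FPS @ 60 Hz:", len(tearline_rows(60, 60, phase_ms=8.0)))
    delay, row = first_reaction(0.2, 2000, 60)
    print(f"first frame with the input appears after {delay:.1f} ms at row {row}")
```

At 2000 FPS the tearlines end up roughly 32 rows apart, which is why a single 60Hz scanout carries slices of dozens of frames rather than the single tearline seen in a 60 FPS graph.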

> jorimt wrote: 19 May 2020, 14:15
>
> Have you read this part of my article, per chance?
>
> https://blurbusters.com/gsync/gsync101- ... ettings/6/

Yes, I have read it, and I think that is where my misunderstanding started. I can't understand how VSYNC OFF beats G-SYNC in this case. Isn't the moment when the lower part of "frame 2" starts to appear with VSYNC OFF (left image) the same as the moment G-SYNC starts to scan out "frame 2" (right image)? As I understand it, both happen right when "frame 2" finishes rendering.
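One way to look at the distinction being asked about, as a minimal Python sketch: compare, row by row, how long after "frame 2" is ready each row first shows it. Assumptions (hypothetical, not taken from the article): the same top-to-bottom scanout speed in both modes (a 144Hz panel here), no vertical blanking interval; with G-SYNC the previous scanout has already finished, so frame 2 is scanned out from the top the moment it is ready; with VSYNC OFF the fixed-refresh scanout is partway down the screen at that moment and frame 2 splices in at that row. In this model both modes do start showing frame 2 at the same instant, but VSYNC OFF starts mid-screen, so rows below the tearline get frame 2 sooner, while G-SYNC has to scan the upper rows first.

```python
REFRESH_HZ = 144  # assumed scanout speed, identical in both modes
SCANOUT_MS = 1000.0 / REFRESH_HZ


def gsync_delay_ms(row_frac: float) -> float:
    """G-SYNC (display assumed idle): frame 2 is scanned out from the top when ready."""
    return row_frac * SCANOUT_MS


def vsync_off_delay_ms(row_frac: float, beam_frac: float) -> float:
    """VSYNC OFF: frame 2 splices in at the current scanout row beam_frac.

    Only meaningful for rows at or below the tearline; rows above it are not
    redrawn until the next refresh, by which time a newer frame may be shown.
    """
    if row_frac < beam_frac:
        raise ValueError("row is above the tearline")
    return (row_frac - beam_frac) * SCANOUT_MS


if __name__ == "__main__":
    beam = 0.5  # scanout happens to be at mid-screen when frame 2 is ready
    for row in (0.5, 0.75, 1.0):
        print(
            f"row {row:.2f}: G-SYNC {gsync_delay_ms(row):.2f} ms, "
            f"VSYNC OFF {vsync_off_delay_ms(row, beam):.2f} ms"
        )
```

In this sketch the VSYNC OFF lead is the same for every row below the tearline and equals how far down the screen the scanout already was when frame 2 became ready.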