Re: Driver 441.08: Ultra-Low Latency Now with G-SYNC Support

Posted: 07 Nov 2019, 18:18

Is there any reason to disable fullscreen optimizations? It's essentially just to make sure triple buffering vsync isn't applied, correct?


Just to confirm: if we force enable V-Sync on NVCP, we should disable all forms of buffering in-game?

jorimt wrote: G-SYNC functions on a double buffer, so select in game "Double Buffered" or force V-SYNC to "On" in the NVCP for those instances.

disq wrote: What about games that don't have Vsync - ON as an option but only "Double Buffered", "Triple Buffered", "Adaptive", "Adaptive (1/2 Rate)" like the case on Apex Legends. Which one should we pick?

Read the last entry of my Closing FAQ.

tygeezy wrote: Is there any reason to disable fullscreen optimizations? It's essentially just to make sure triple buffering vsync isn't applied, correct?

I don't believe there would be a point to testing such scenarios. Any setting that is dedicated to reducing the pre-rendered frames queue only takes effect with uncapped framerates (above or below the refresh rate, G-SYNC/V-SYNC or no sync), and/or only in GPU-bound scenarios.

EeK wrote:
1. In the "not GPU bound" scenario, with G-Sync ON, V-Sync ON and an FPS cap below the refresh rate, he tested NULL with a different in-game cap than in his previous tests.

I wish he had performed all tests using the same in-game cap of 139, instead of 130, so we could compare all the input latency results. A lower framerate, even if by just 9 frames, will incur higher input latency no matter what.

2. In the "GPU bound" scenario, again with G-Sync ON and V-Sync ON, he only tested NULL without an FPS cap below the refresh rate.

Games with high GPU utilization are the most common scenario for most non-competitive players, so it'd be helpful to see the results of NULL with G-Sync ON, V-Sync ON and the FPS capped at a value below the display's maximum refresh rate.

Yes, pick: in-game or NVCP V-SYNC, not both.

EeK wrote: Just to confirm: if we force enable V-Sync on NVCP, we should disable all forms of buffering in-game?

RDR2 has the option to enable or disable triple buffering, not sure what to do with it.

That's a tricky subject, because frametime spikes "suck" no matter what configuration you have. That, and no input updates are reflected during the frametime spike (nor can you technically control anything in that time span), so the increase in responsiveness between the two scenarios is typically extremely minimal where frametime spikes are frequent.

Kamil950 wrote: Maybe it would be a good idea to add what you wrote at the link below (on the Guru3D forums) to the G-Sync FAQ on Blur Busters?

https://forums.guru3d.com/threads/the-t ... st-5692121

If somebody read only the G-Sync FAQ on Blur Busters, he/she would not know that he/she could have shorter stutters (but with a tear), which seems (at least to me) a better compromise than a longer stutter (but without a tear).

Sorry, missed this previously; you're welcome.

disq wrote: Thanks for the info

Well, he did test NULL with an FPS cap, but only in the "not GPU bound" scenario, as I mentioned.

jorimt wrote: I don't believe there would be a point to testing such scenarios. Any setting that is dedicated to reducing the pre-rendered frames queue only takes effect with uncapped framerates (above or below the refresh rate, G-SYNC/V-SYNC or no sync), and/or only in GPU-bound scenarios.

If your FPS is limited by an FPS cap, the pre-rendered frames queue shouldn't apply, thus the settings to reduce it don't either. More on this in entries #6 and #8 of my Closing FAQ.

I should've been more clear. When I said "buffering", I only meant double buffering and triple buffering. V-Sync should not be enabled both in-game and on NVCP.

jorimt wrote: Yes, pick: in-game or NVCP V-SYNC, not both.

Okay, so if the game only has an option for triple buffering, like RDR2, we should disable it. But if it has a double buffer setting, that should be enabled in-game on top of V-Sync?

jorimt wrote: As for triple buffer V-SYNC/options, again, it's worthless with G-SYNC, as G-SYNC runs on a double buffer.

Those differences look within margin of error to me. I think he only does 20 samples per test (I did 40 per test in mine), so his variances are going to be slightly greater between identical or similar scenarios.

EeK wrote: If you check the results, both the maximum and minimum input lag are increased with a lower framerate, as expected (even though the average remains about the same). I just wish that, like in his previous tests, he had capped the FPS at 139, for the sake of consistency. There's a difference of half a millisecond in frametimes between 139 FPS (7.19ms) and 130 FPS (7.69ms); I'm curious to see if that would result in an even lower input latency, or if it would be impossible to measure.
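For anyone wanting to check the frametime figures quoted here, a minimal sketch of the arithmetic follows. The function name `frametime_ms` is just an illustrative helper, not something from the thread or any tool mentioned in it.

```python
def frametime_ms(fps: float) -> float:
    """Return the time budget of a single frame in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# The two in-game caps discussed in the thread.
for cap in (139, 130):
    print(f"{cap} FPS -> {frametime_ms(cap):.2f} ms per frame")

# Gap between the two caps, roughly half a millisecond.
delta = frametime_ms(130) - frametime_ms(139)
print(f"difference: {delta:.2f} ms")
```

That gap of about 0.5 ms per frame is small next to the spread you would expect from 20 samples per test, which is consistent with the "within margin of error" reading.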

Once your system's framerate can be maintained above your maximum refresh rate, and you've prevented both V-SYNC and GPU input lag using an FPS limiter, there's not much more reduction you can expect to get on the system/display side, and where there is, we're talking severely diminishing returns; a blink vs. a bat of an eyelash, basically. Some people can't even tell the difference between uncapped G-SYNC + V-SYNC vs. capped G-SYNC + V-SYNC @144Hz in actual practice, for instance.

EeK wrote: In the end, NULL is only recommended if you don't cap the framerate at all? If you do choose to use an FPS limiter, NULL should be disabled in all scenarios?

--------

Regarding Low Latency Mode "Ultra" vs. "On" when used in conjunction with G-SYNC + V-SYNC + -3 minimum FPS limit, I'd currently recommend "On" for two reasons:

1. "On" should have the same effect as "Ultra" in compatible games (that don't already have a MPRF queue of "1") in reducing the pre-rendered frames queue and input lag by up to 1 frame whenever your system's framerate drops below your set FPS limit vs. "Off."

2. Since "Ultra" non-optionally auto-caps the FPS at lower values than you can manually set with an FPS limiter, you'd have to set your FPS limiter below that auto-cap when using "Ultra" (for the direct purposes of point "1" above) to prevent the auto-cap from being the framerate's limiting factor and to allow the in-game (or RTSS) limiter to take effect. At 144Hz, you would need to cap a couple of frames below 138, which isn't a big deal, but at 240Hz, "Ultra" will auto-cap the FPS to 224, which I find a little excessive. "On" doesn't auto-cap, but should still reduce the pre-rendered frames queue by the same amount as "Ultra" in GPU-bound situations (within the G-SYNC range), so it is more suited to such a setup.
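To make the two caps in point 2 concrete, here is a rough sketch. Big assumption: the `ultra_auto_cap` formula (refresh minus refresh squared over 3600) is a community approximation that happens to reproduce the 138 and 224 figures above; it is not stated in this thread and may not hold at other refresh rates. The "couple of frames" margin of 2 and all function names are likewise only illustrative.

```python
def ultra_auto_cap(refresh_hz: int) -> int:
    """Approximate the FPS cap that "Ultra" applies on its own.

    ASSUMPTION: community approximation, not an official formula.
    It matches the thread's figures: 144 Hz -> 138, 240 Hz -> 224.
    """
    return int(refresh_hz - (refresh_hz * refresh_hz) / 3600)


def limiter_target(refresh_hz: int, mode: str) -> int:
    """Suggest a manual limiter value (in-game or RTSS) for a given mode."""
    manual = refresh_hz - 3  # the "-3 minimum" limit from the post
    if mode == "Ultra":
        # Stay a couple of frames under the auto-cap so the manual
        # limiter, not the auto-cap, is the limiting factor.
        # The margin of 2 is an assumed reading of "a couple".
        return min(manual, ultra_auto_cap(refresh_hz) - 2)
    return manual  # "On" does not auto-cap


for hz in (144, 240):
    print(hz, "Hz | Ultra auto-cap:", ultra_auto_cap(hz),
          "| limiter with On:", limiter_target(hz, "On"),
          "| limiter with Ultra:", limiter_target(hz, "Ultra"))
```

At 240Hz this gives a limiter of 237 with "On" versus 222 with "Ultra", which is the 15 FPS difference that makes "On" the more attractive choice there.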

Yeah, I wasn't targeting your comment specifically, there. I'm just trying to spell things out as much as possible, as people tend to get paranoid when discussing this (or with any finicky technical subject like it).

EeK wrote: I should've been more clear. When I said "buffering", I only meant double buffering and triple buffering. V-Sync should not be enabled both in-game and on NVCP.

I only asked because I had never seen that setting in any games until RDR2 (where it's separate from V-Sync), and another user also mentioned Apex Legends as having the option of a double buffer.

Yes on the triple buffer option, no on the double buffer option, as that is just another term for standard V-SYNC (you'd be enabling it twice).

EeK wrote: Okay, so if the game only has an option for triple buffering, like RDR2, we should disable it. But if it has a double buffer setting, that should be enabled in-game on top of V-Sync?
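The V-SYNC advice scattered through these replies (pick in-game or NVCP, never both; treat "Double Buffered" as plain V-SYNC; turn triple buffering off with G-SYNC) can be summed up in a small sketch. The function and key names are made up for illustration, and the NVCP value "Use the 3D application setting" is assumed to be the right choice when V-SYNC is left to the game.

```python
def vsync_plan(force_nvcp_vsync: bool,
               game_has_triple_buffer_toggle: bool = False) -> dict:
    """Return a settings plan for G-SYNC + V-SYNC following the thread's advice."""
    plan = {}
    if force_nvcp_vsync:
        # V-SYNC forced by the driver: in-game V-SYNC / "Double Buffered"
        # would just enable the same thing twice.
        plan["NVCP V-SYNC"] = "On"
        plan["In-game V-SYNC"] = "Off"
    else:
        # V-SYNC left to the game: "Double Buffered" is standard V-SYNC.
        plan["NVCP V-SYNC"] = "Use the 3D application setting"
        plan["In-game V-SYNC"] = "On / Double Buffered"
    if game_has_triple_buffer_toggle:
        # G-SYNC runs on a double buffer, so this option is of no use.
        plan["In-game triple buffering"] = "Off"
    return plan


# Example: a game with a separate triple buffering toggle, V-SYNC forced in NVCP.
for setting, value in vsync_plan(True, True).items():
    print(f"{setting}: {value}")
```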

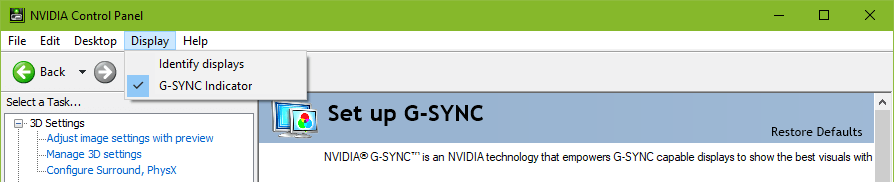

EeK wrote: My TV doesn't have a built-in refresh rate meter, so that option is out. What is the "G-SYNC indicator" setting that you mentioned, though? Would the option for G-Sync disappear from "Monitor Technology" in each individual program setting, if you disable fullscreen optimizations for the executables of problematic games?

A higher resolution will indeed always put more strain on your GPU. Use RTSS to monitor your GPU usage percentage.

a22o2 wrote: If I am running higher than 1080p, does that mean it will put "strain" on my gpu for all games run at 1440p?

Sounds right to me, including NULL "On"...except for this game; it runs on DX12, and DX12/Vulkan currently handle the pre-rendered frames queue at the engine level, and thus don't support external settings' manipulation of the queue size.

a22o2 wrote: Right now I am using: 1440p in game, RTSS set to 160 (165hz monitor), NVCP: gsync on, vsync on, NULL set to ON instead of Ultra. Vsync off in game. Do you recommend these settings or anything different? Thank you!

edit: reading another member's post is giving me conflicting answers. In my specific case, null should be on or off?
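Since the on/off question for NULL keeps coming up, here is one hedged way to encode the conditions given in this thread. It is only a reading of the replies above, not an official rule: the "and/or" in the original wording is interpreted here as "GPU-bound and not currently held at the FPS cap", and the function name and API strings are invented for the example.

```python
def null_can_reduce_latency(api: str, gpu_bound: bool,
                            held_at_fps_cap: bool) -> bool:
    """Estimate whether Low Latency Mode can shrink the pre-rendered frames queue."""
    # DX12/Vulkan manage the queue at the engine level, so the external
    # driver setting has nothing to act on.
    if api.strip().lower() in {"dx12", "vulkan"}:
        return False
    # While the framerate sits at the limiter's cap, the queue should not
    # apply, so the setting has nothing to reduce.
    if held_at_fps_cap:
        return False
    # ASSUMPTION: otherwise the setting matters in GPU-bound situations,
    # e.g. when the framerate drops below the set FPS limit.
    return gpu_bound


print(null_can_reduce_latency("DX12", gpu_bound=True, held_at_fps_cap=False))
print(null_can_reduce_latency("DX11", gpu_bound=True, held_at_fps_cap=False))
print(null_can_reduce_latency("DX11", gpu_bound=True, held_at_fps_cap=True))
```

Under this reading, leaving NULL "On" costs nothing in a DX12 game; it simply has no effect on the queue there.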