Chief Blur Buster wrote: ↑04 Aug 2022, 04:40

Thatweirdinputlag wrote: ↑02 Aug 2022, 18:01

This is becoming a bit extreme, things like disabling idle state, throttling or even forcing your GPU to run on high performance when the PC is doing nothing is just too much. Even things like disabling speedstep in Bios are becoming more of a religious act rather than an actual justified need. Turning on "Game Mode" on your PC will literally force your CPU to boost into its limits without compromising intel's limitations "unless MCE is turned on". Please do your research on every feature and ask yourself whether you really need that turned off or not.

Game Mode is an excellent move, but sometimes we need to go beyond, because Microsoft never tested 500Hz.

Unfortunately, this tweak isn't exactly extreme if you own a 360Hz, 390Hz or prototype 500Hz monitor, combined with a Razer 8KHz mouse, combined with strobing, combined with certain non-VSYNC-OFF sync technologies, combined with a game (e.g. Quake) that is able to keep up with the refresh rate. That is the perfect storm.

At 60Hz, 1ms was unimportant, but at 360Hz+, 1ms has actually become human-visible. The refresh rate race removed a lot of weak links, which made ever-tinier milliseconds even more visible. In some cases, a 0.5ms stutter or a 0.5ms motion blur is human-visible (if your MPRT is less than the stutter error margin in milliseconds). For example, a future prototype 4K 240Hz strobed display (0.3ms MPRT) can actually make quite a lot of sub-millisecond jitter human-visible, whereas it used to be hidden.

It's part of the pushing-envelope of the Vicious Cycle Effect;

Power Management has created a lot of major problems (FreeSync stuttering and capped stuttering), because the idle states sometimes take more than 0.5ms-1ms to wake up on some motherboards when you're not in High Performance.

Please see

The Amazing Human Visible Feats Of The Millisecond

I am sorry to be the messenger that this is actually far more legitimate (more common) than the voodoo EMI stuff (much rarer). Even though we are kind of tired of the EMI forums (especially forum members who sign up ONLY for the EMI forum, even though that issue is legitimate in many cases), this type of "force high power" tweak solves much more common issues we've discovered in the refresh rate race (e.g. 8000Hz mouse + 360Hz monitor + etc). This applies when you've already whac-a-mole'd a lot of weak links and are now running into problems (e.g. VRR microstuttering that isn't the game engine's fault) that were recently traced to flawed power management shenanigans in drivers, etc.

We are running headstrong into a lot of weak links (even VSYNC OFF -- due to its microjitter issues -- needs to become obsolete because it partially masks 240Hz-vs-500Hz differences) -- we need lagless non-VSYNC-OFF technologies to become esports-popular in the next 5-10 years in our 1000Hz future.

The refresh rate race is creating perfect storms where weak links (LCD GtG, power management, etc) create human-visible 1ms microjitters under certain refresh-rate-race perfect storm conditions.

However, this tweak is also very much a double-edged sword -- it can worsen things on some computers. On a computer that is overclocked especially, enabling High Performance can trigger thermal throttling microjitters that are worse than the power management microjitters. In this case, it becomes a microjitter pick-your-poison effect.

The bottom line: as (Hz=increasing) AND (synctech=clearer motion quality than VSYNC OFF) AND (MPRT=super low) AND (game=keeping up) AND (display=getting closer to retina rez) all combine, they create the necessary perfect storm for ever-tinier milliseconds to rise above the human-visibility noisefloor, because other sources of jitter were eliminated. Scientifically, this is a serious matter in the current refresh rate race towards retina refresh rates. As researchers recently published a peer-reviewed paper showing Razer 8KHz is a legit human-visible improvement, we have to call this plausible, if you've already whac-a-mole'd other higher-priority microjitter weak links.

(Also, keep in mind sometimes improvement is via microjitter, not via lag -- anything human-visibly-beneficial is fair game here, and that is my POV -- if it's lag-worthless but jitter-improvement-worthy, that doesn't dismiss the tweak).

Depending on how far you've pushed your high end gaming rig, this is (on some systems) more common than rarer causes of microjitter (e.g. EMI).

240Hz-vs-360Hz is close to invisible (1.1x difference instead of 1.5x) largely because of weak links that also include microjitter. High-frequency microjitter is additional motion blur above-and-beyond simple perfect square-stairstep sample-and-hold mathematics: like a fast-vibrating guitar string blending to blur, microjitter/microstutter that vibrates 70 times per second is additional motion blur. You can see this effect at

www.testufo.com/eyetracking#speed=-1 (SEE FOR YOURSELF!)... watch the bottom UFO for at least 20 seconds to understand this effect. This is applicable to motion that microjitters 70-200 cycles per second at 500fps 500Hz, diminishing 240Hz-vs-500Hz comparisons, above-and-beyond the slowness of LCD GtG.

DID YOU KNOW? 4ms stutters are invisible in 16.7ms MPRT of blur (60Hz), but 4ms stutters are human visible at 2ms MPRT (strobed display or recent 500Hz prototype display!). The thickness of motion blur is no longer enough to hide the stutter vibration amplitude.

DID YOU KNOW? 1ms stutter error = 4 pixel offset at 4000 pixels/sec, which is only one screenwidth per second on a 4K monitor. This jump can be visible with low MPRTs (e.g. strobed 0.5ms MPRT at 4000 pixels/sec = 2 pixels of motion blur).
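The arithmetic behind these DID YOU KNOW examples can be sketched in a few lines of Python. This is only a rough rule-of-thumb model, assuming a stutter becomes noticeable once its pixel jump exceeds the thickness of the motion blur; the function names are made up for illustration, and real-world visibility also depends on the viewer and the content.

```python
def pixels_moved(speed_px_per_sec: float, duration_ms: float) -> float:
    """Distance (in pixels) an object travels in duration_ms at the given speed."""
    return speed_px_per_sec * duration_ms / 1000.0


def stutter_vs_blur(speed_px_per_sec: float, stutter_ms: float, mprt_ms: float):
    """Return (stutter jump in px, blur thickness in px, jump exceeds blur?).

    Simplifying assumption: the blur "hides" the stutter whenever the
    blur trail is at least as thick as the stutter jump.
    """
    jump = pixels_moved(speed_px_per_sec, stutter_ms)
    blur = pixels_moved(speed_px_per_sec, mprt_ms)
    return jump, blur, jump > blur


if __name__ == "__main__":
    speed = 4000  # pixels/sec, about one screenwidth per second on a 4K monitor
    cases = [
        ("4ms stutter, 16.7ms MPRT (60Hz sample-and-hold)", 4.0, 16.7),
        ("4ms stutter, 2ms MPRT (strobed or 500Hz)", 4.0, 2.0),
        ("1ms stutter, 0.5ms MPRT (strobed)", 1.0, 0.5),
    ]
    for label, stutter_ms, mprt_ms in cases:
        jump, blur, exceeds = stutter_vs_blur(speed, stutter_ms, mprt_ms)
        verdict = "jump exceeds blur" if exceeds else "jump hidden inside blur"
        print(f"{label}: jump={jump:.1f}px, blur={blur:.1f}px -> {verdict}")
```

Try plugging in your own panning speed and MPRT: the faster the motion and the lower the MPRT, the smaller the stutter that pokes out of the blur.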

Power management effects can exceed 1ms, since Windows is not always fast at waking up CPUs on all motherboards and implementations -- even a 20% slowdown of CPU cycles in an 8KHz mouse driver can peg a core and create an issue. A stationary mouse can let the CPU SpeedStep down. Then you suddenly move the mouse at 8000 polls per second, and it overloads a CPU core (only 5000 polls processed at 100% CPU in the mouse driver) until Intel SpeedSteps it back up. Intel won't SpeedStep in 1/8000sec, so this interferes with your mouse, since mouse drivers are CPU-bound and 8000 polls a second pushes the limits of one core of your CPU. Research has shown that even 1 missed poll (1/8000sec) shows as a human-visible missing-mouse-pointer gap in its phantom array effect, and this form of microjitter can be accidentally perceived/interpreted by some humans as 'lag'. This is yet another famous BlurBusteresque Millisecond Matters example that is far more relevant and scientifically measurable than random voodoo one-off EMI situations.

Related, see

Example Blur Busters Success Story: Incubating Research on Mouse 1000Hz vs 8000Hz, and it showed an excellent example of human-visible submillisecond:

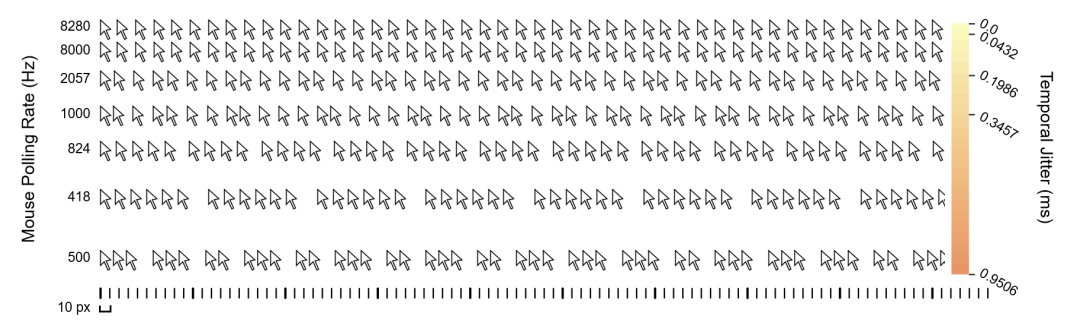

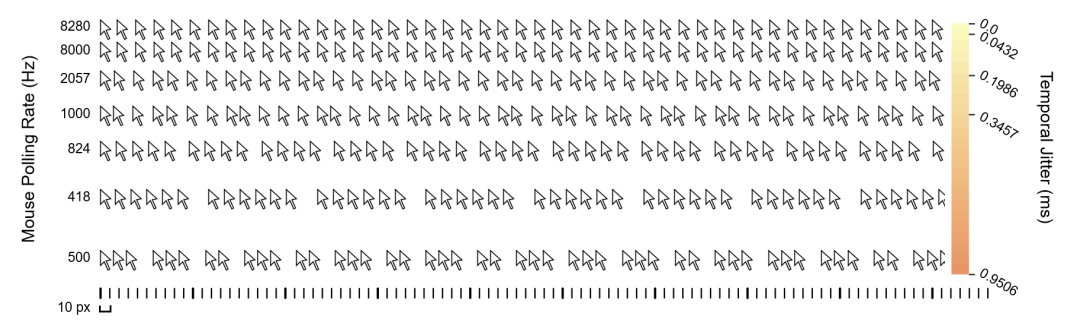

Observe that in the 1000 and 2000 rows, the gap-variances are submillisecond!

And this is applicable to mouseturns/pans in games too (they are stroboscopic too, like a mouse cursor, e.g. a fixed gaze on crosshairs while the background stroboscopically steps past your fixed view, like

The Stroboscopic Effect of Finite Frame Rates, an article apparently widely spread internally at NVIDIA)

Now, the pollrate of 8000 pegs one of my CPU cores if my core is downclocked a bit, and in this case 8000Hz looks worse than 1000Hz in some software, until I lower it to 2000-4000Hz, or if I force the CPU/GPU to never downclock. Since the clockspeed changes will lag behind somewhat (by more than 1/8000sec), this is enough time to create microjitter havoc if you're unusually sensitive to jitter. (Everybody is sensitive to different things. Tearing or Stutter or Blur....sometimes all of the above!)
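To put rough numbers on this, here is a back-of-envelope Python sketch of how many polls pile up while a core is still downclocked. It reuses the 5000-polls-per-second downclocked figure from my earlier example; the ramp-up times and the function name are hypothetical values for illustration, not measurements of any specific CPU or mouse driver.

```python
def late_polls_during_ramp(poll_hz: float,
                           downclocked_capacity_hz: float,
                           ramp_ms: float) -> float:
    """Average number of polls that cannot be serviced on time while the
    core is still downclocked.

    Assumptions (illustrative only): polls arrive evenly at poll_hz, the
    downclocked core services at most downclocked_capacity_hz polls per
    second, and the clock takes ramp_ms to come back up.
    """
    arrived = poll_hz * ramp_ms / 1000.0
    serviced = min(arrived, downclocked_capacity_hz * ramp_ms / 1000.0)
    return arrived - serviced


if __name__ == "__main__":
    capacity = 5000  # polls/sec a downclocked core can handle (example figure)
    for ramp_ms in (0.125, 1.0, 2.0):  # hypothetical clock ramp-up times
        for poll_hz in (1000, 2000, 4000, 8000):
            late = late_polls_during_ramp(poll_hz, capacity, ramp_ms)
            print(f"ramp={ramp_ms}ms poll={poll_hz}Hz -> {late:.2f} polls late")
```

In this simple model, anything at or below the downclocked capacity (2000-4000Hz here) never falls behind, while 8000Hz falls behind for as long as the clock stays low. That matches why lowering the poll rate or forcing the CPU to never downclock both help.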

Unintended consequences, my ***...

<FuturamaBenderBiteMyAss.mp3>

As a well-credited display researcher referenced in over 25 peer-reviewed scientific papers (

www.blurbusters.com/area51 and

www.blurbusters.com/research-papers), I can say Blur Busters is already far beyond the "Humans Can't See 30fps-vs-60fps" debate, and famously incubates/convinces researchers to study new outlier areas that have gained new legitimacy due to ever-rising Hz lifting the veil on formerly-invisible effects.

Also, when I was testing raster-interrupt-style beam-racing with NVIDIA/AMD graphics cards (

successfully -- Tearline Jedi), even 10 microseconds moved a tearline down 1 pixel. This technology was adopted by RTSS Scanline Sync and Special K Latent Sync -- sync technologies far superior to VSYNC OFF if you can sustain framerate=Hz at 50%-or-less GPU. But these sync technologies are super-sensitive to power management settings. Using this specific Full Performance tweak is very useful for improved reliability for RTSS Scanline Sync users!
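The microsecond sensitivity follows from the horizontal scan rate: each scanline takes only a few microseconds to scan out, so a timing error of that size moves the tearline by a whole row. Here is a small Python sketch of the math. The 1125-line vertical total is the usual figure for standard 1080p 60Hz timings, and the 100 kHz case is simply the scan rate at which one scanline lasts 10 microseconds; your actual video mode may differ, and the function names are made up for illustration.

```python
def horizontal_scan_rate_khz(refresh_hz: float, vertical_total_lines: int) -> float:
    """Scanlines per second (in kHz), counting blanking lines in the vertical total."""
    return refresh_hz * vertical_total_lines / 1000.0


def tearline_shift_lines(timing_error_us: float, scan_rate_khz: float) -> float:
    """How many scanlines a tearline moves for a given frame-presentation timing error."""
    return timing_error_us * scan_rate_khz / 1000.0


if __name__ == "__main__":
    # Assumed example mode: 1080p 60Hz with a 1125-line vertical total.
    khz_60 = horizontal_scan_rate_khz(60, 1125)
    print(f"1080p60: {khz_60} kHz, 10us error = "
          f"{tearline_shift_lines(10, khz_60):.3f} lines")

    # At a 100 kHz scan rate, one scanline lasts exactly 10 microseconds.
    print(f"100 kHz: 10us error = {tearline_shift_lines(10, 100):.1f} line")

    # A 1ms power-management wakeup delay is enormous by comparison.
    print(f"100 kHz: 1000us error = {tearline_shift_lines(1000, 100):.0f} lines")
```

So a wakeup delay on the order of 1ms can throw a raster-synchronized tearline off by roughly a hundred scanlines at that scan rate, which is why these sync technologies care so much about power management.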

Also, different sync technologies with different microjitter timing sensitivities are continuously being invented, and some systems have more FreeSync stutter problems when running in Balanced Mode (less of a problem with a native G-SYNC chip, since FreeSync is more software/driver driven). Game Mode often fixes it, but this is a perfect example of how power management already really screws things up, even at 144Hz. But now we have 500Hz consumer monitors hitting the market soon, and Game Mode doesn't quite whac-a-mole all weak links completely at that stratosphere...

This tweak is mostly worthless if you're on a 60Hz monitor, though.

Most users should try to stick to Game Mode, and do their homework, their research, and not try to test unknown tweaks, but this is definitely not a "placebo tweak" in certain perfect-storm refresh rate race situations, especially on many implementations of motherboards/CPU/driver combinations that don't have microsecond-accurate wakeup abilities.

Tweak Benefits Fact Check:

Scientifically Plausible due to refresh rate race side effects, with known caveats