chocobo wrote:1) If I set my monitor to 144Hz and in-game framerate limit to 144 FPS, and keep my settings low enough to maintain a constant 144, will I have no screen tearing at all and will it function the same as if I had G-Sync?

No, you will still have tearlines. The tearlines will roll at a beat frequency equal to the difference between the frame rate and the refresh rate.

141fps at 144Hz = rolling tearlines 3 cycles downwards a second (faster rolling)

142fps at 144Hz = rolling tearlines 2 cycles downwards a second (fast rolling)

143fps at 144Hz = rolling tearlines 1 cycle downwards a second (slow rolling)

144fps at 144Hz = stationary tearline

145fps at 144Hz = rolling tearlines 1 cycle upwards a second (slow rolling)

146fps at 144Hz = rolling tearlines 2 cycles upwards a second (fast rolling)

147fps at 144Hz = rolling tearlines 3 cycles upwards a second (faster rolling)
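The beat-frequency table above can be reproduced with a minimal sketch. It assumes VSYNC OFF, perfectly even frame pacing, and an idealized scanout that sweeps top to bottom once per refresh cycle with no blanking interval, so real-world numbers will differ slightly. The function names `roll_rate` and `tearline_positions` are hypothetical, for illustration only.

```python
def roll_rate(fps: float, hz: float) -> float:
    """Tearline rolling speed in cycles per second.

    Positive means rolling downward (fps below Hz), negative means rolling
    upward (fps above Hz), and zero means a stationary tearline.
    """
    return hz - fps


def tearline_positions(fps: float, hz: float, frames: int, phase: float = 0.0) -> list[float]:
    """Screen-height fraction (0.0 = top, 1.0 = bottom) where each frame's tearline lands.

    Assumes VSYNC OFF, perfectly even frame pacing, and an idealized scanout
    with no blanking interval. `phase` is where the first tearline lands.
    """
    step = hz / fps  # refresh cycles elapsed between consecutive frame presents
    return [(phase + n * step) % 1.0 for n in range(frames)]


if __name__ == "__main__":
    for fps in (141, 142, 143, 144, 145, 146, 147):
        rate = roll_rate(fps, 144)
        if rate > 0:
            direction = "downwards"
        elif rate < 0:
            direction = "upwards"
        else:
            direction = "stationary"
        print(f"{fps}fps at 144Hz: {abs(rate):g} cycles/sec {direction}")
```

At exactly 144fps and 144Hz every tearline lands at the same height, which is the stationary tearline case; one frame per second more or less shifts it by a full screen height per second.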

The more precise the capping software (e.g. RTSS instead of an in-game limiter), the less the tearline vibrates and the more smoothly it rolls. Random tearline positions, on the other hand, make tearing less visible.

chocobo wrote:2) When I have the monitor on 144Hz and the game is in the 200-240 FPS range, I see a little bit of screen tearing. At 60Hz, there's plenty of screen tearing because 60Hz is terrible. But at 240Hz, the screen tearing is virtually undetectable unless I'm looking closely and trying hard to find some.

Tearline visibility length is controlled by two things:

(1) Refresh cycle length. A tearline appears and disappears within one refresh cycle, i.e. 1/240sec at 240Hz.

(2) Position of next tearline versus previous tearline.

If tearlines reappear in the same location (e.g. the above scenario of a 144fps cap at 144Hz), that amplifies the visibility of the tearline, though it also keeps the tearline confined to one spot on the screen.
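Point (1) is simple arithmetic, sketched below under the same idealized assumptions (VSYNC OFF, even frame pacing, no blanking interval). The 220fps value is a hypothetical example picked from the 200-240 FPS range in the question, and the function names are illustrative only.

```python
def tearline_visible_ms(hz: float) -> float:
    """How long one tearline stays on screen: a single refresh cycle, in milliseconds."""
    return 1000.0 / hz


def tearlines_per_refresh(fps: float, hz: float) -> float:
    """Average number of tearlines per refresh cycle with VSYNC OFF.

    Each frame present during scanout splits the image once, so on average
    there are fps / hz splits per refresh cycle (idealized, no blanking).
    """
    return fps / hz


if __name__ == "__main__":
    fps = 220  # hypothetical example inside the 200-240 FPS range from the question
    for hz in (60, 144, 240):
        print(f"{hz}Hz: each tearline visible {tearline_visible_ms(hz):.2f}ms, "
              f"~{tearlines_per_refresh(fps, hz):.2f} tearlines per refresh")
```

At the same framerate, a higher Hz both shortens how long each tearline is displayed and spreads the frames across more refresh cycles, so fewer tearlines appear in any single refresh.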

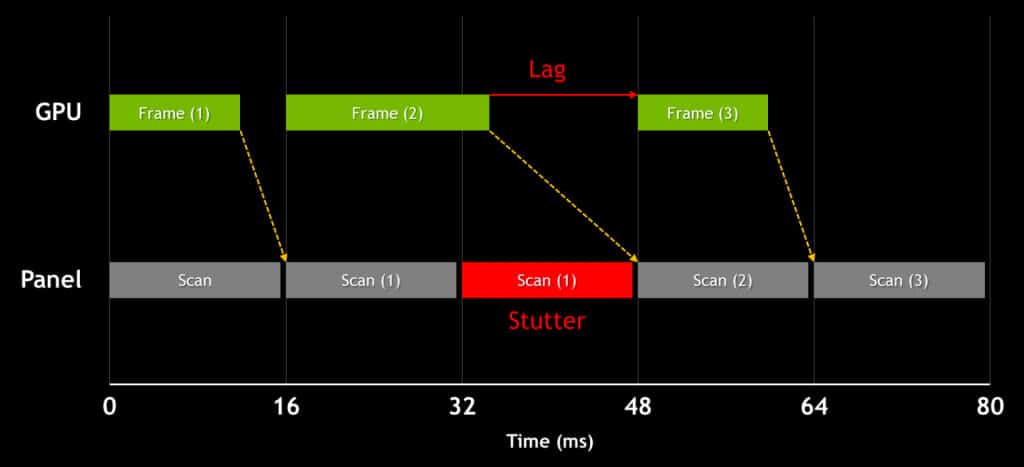

Even without tearing, microstutters will still be more visible than with VRR.

chocobo wrote:Am I correct that higher refresh rates make G-Sync less important? I was wondering if maybe I made the wrong choice buying this monitor instead of a 144Hz G-Sync monitor that was on sale. But at 240Hz it seems like the tears are smaller, and of course they're visible for a much shorter time, so the result is that it seems (to me) like adaptive sync would be only a very tiny benefit. Am I understanding this right?

To a certain extent, for some people. But it depends on your stutter sensitivity and your tearing sensitivity.

Personally, during my 480Hz monitor tests, I could still barely see tearing and microstutters at 480Hz. So the diminishing returns have not yet ended, though it takes a continual doubling of Hz to keep milking them (120Hz -> 240Hz -> 480Hz -> 960Hz).

For a good educational journey along the diminishing-returns curve, see Blur Busters Law And The Amazing Journey To Future 1000Hz Monitors. The visible difference doesn't fully disappear until quintuple-digit Hz in the most extreme use case scenarios. It does get increasingly difficult to tell the differences without increasingly massive jumps in Hz, but the vanishing point is still very far away.
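Blur Busters Law states that 1ms of persistence equals 1 pixel of motion blur at 1000 pixels/second of motion. The minimal sketch below shows why each doubling of Hz gives a smaller absolute improvement. It assumes a sample-and-hold display running at framerate = Hz (persistence equals one full refresh cycle, no strobing), and borrows the 4000 pixels/second panning speed used in the math section further down. The function name is hypothetical.

```python
def persistence_blur_px(hz: float, speed_px_per_s: float) -> float:
    """Motion blur width in pixels on a sample-and-hold display at framerate = Hz.

    Blur Busters Law: 1ms of persistence = 1 pixel of blur at 1000 pixels/sec.
    Persistence here is one full refresh cycle (no strobing, no black frames).
    """
    persistence_ms = 1000.0 / hz
    return persistence_ms * speed_px_per_s / 1000.0


if __name__ == "__main__":
    speed = 4000.0  # pixels/sec, roughly one 4K screen width per second
    previous = None
    for hz in (120, 240, 480, 960):
        blur = persistence_blur_px(hz, speed)
        if previous is None:
            note = ""
        else:
            note = f" (saves {previous - blur:.1f} px vs {hz // 2}Hz)"
        print(f"{hz}Hz: {blur:.1f} px of motion blur{note}")
        previous = blur
```

Each doubling halves the blur, so the pixels saved per step keep shrinking even though the blur never reaches zero, which is the diminishing-returns curve described above.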

chocobo wrote:3) If that's right, then is there an argument to be made for ~210 fps 240Hz over 144Hz G-Sync? Extra smoothness at the cost of imperceptible screen tears sounds like a decent tradeoff, right? (of course my settings have to be low to do this, and the 144Hz G-Sync option could use higher graphics settings... but tbh I don't care about graphics in this games, I just want smooth and easy to see gameplay)

Yes, 210fps at 240Hz looks smoother with VRR than without. Microstutter has harmonic-frequency/beat-frequency effects relative to Hz, such that when framerates float near harmonic frequencies (e.g. 121fps at 240Hz, which sits next to the 120fps half-rate harmonic), you can witness 1 microstutter per second. VRR eliminates those kinds of microstutters from fluctuating frame rates.
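One simplified way to find these near-harmonic situations is to search for the framerate closest to `fps` at which p frames fit exactly into q refresh cycles, then report how far the actual framerate sits from it. This helper and its `max_denominator` cutoff are hypothetical, a rough heuristic rather than an exact model of how microstutter appears.

```python
def nearest_harmonic(fps: float, hz: float, max_denominator: int = 4) -> tuple[float, float]:
    """Return (harmonic_fps, offset_fps) for the harmonic of `hz` closest to `fps`.

    A harmonic here is a framerate hz * p / q where p frames fit exactly into
    q refresh cycles (for 240Hz with q <= 4: 240, 180, 160, 120, ...).
    offset_fps is how far the actual framerate sits from that harmonic.
    """
    best = (hz, abs(fps - hz))  # start with the refresh rate itself (p = q = 1)
    for q in range(1, max_denominator + 1):
        p = round(fps * q / hz)  # whole frames closest to fitting into q refreshes
        if p == 0:
            continue
        harmonic = hz * p / q
        offset = abs(fps - harmonic)
        if offset < best[1]:
            best = (harmonic, offset)
    return best


if __name__ == "__main__":
    for fps in (239, 121, 118, 181):
        harmonic, offset = nearest_harmonic(fps, 240)
        print(f"{fps}fps at 240Hz: {offset:g}fps away from the {harmonic:g}fps harmonic")
```

For the 121fps-at-240Hz example this reports an offset of 1fps from the 120fps harmonic; the closer a fluctuating framerate drifts to such a point, the slower the repeating misalignment pattern it produces.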

Go to http://www.testufo.com/gsync and watch how VRR eliminates microstuttering. The real thing (real GSYNC) looks even better than that simulated animation.

chocobo wrote:4) I've seen people say things like "100 FPS G-Sync looks smoother than 144 FPS without it". How can 100 FPS look smoother than 144? They just mean they prefer the G-Sync option over a higher framerate + screen tearing, right? G-Sync and Freesync don't accomplish anything outside of eliminating screen tearing, right?

Again, go to http://www.testufo.com/gsync to understand. With GSYNC, 100fps means the display is currently running at 100Hz.

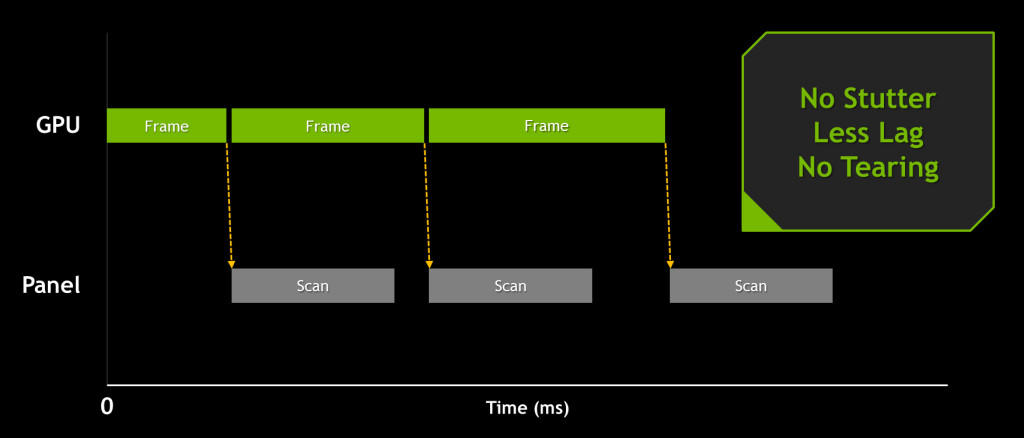

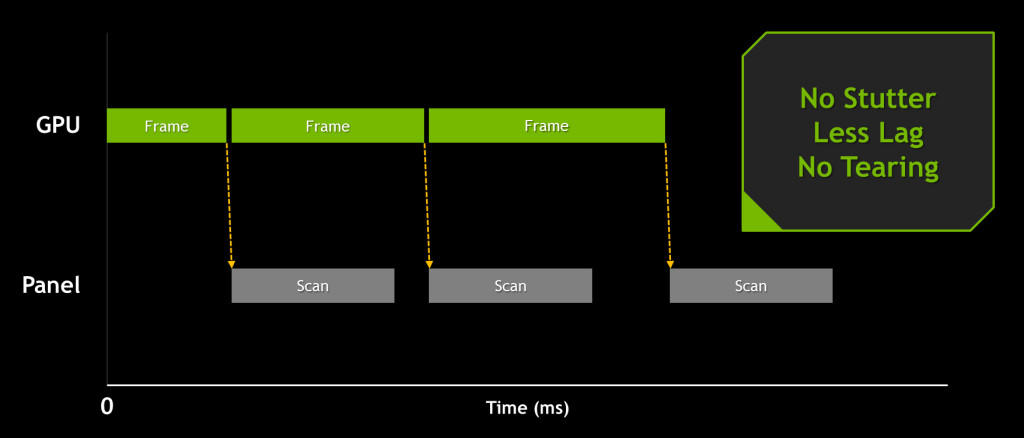

The refresh rate of a GSYNC display can change hundreds of times per second seamlessly. Frame rate is the refresh rate. Refresh rate is the framerate. 47.159fps means the monitor is 47.159Hz. The monitor waits for the software before beginning to refresh. The refresh cycle is directly controlled by the software itself. When a frame is presented by the game, the monitor immediately refreshes at that instant.

Versus:

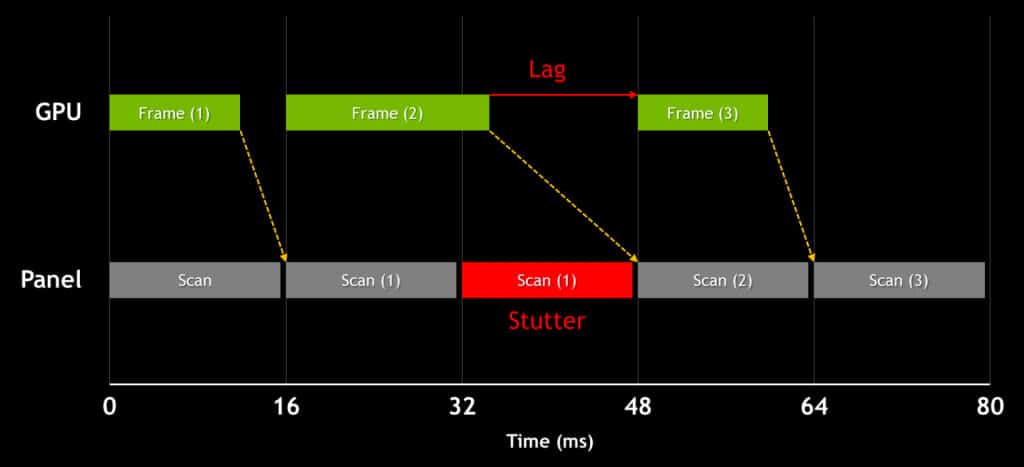

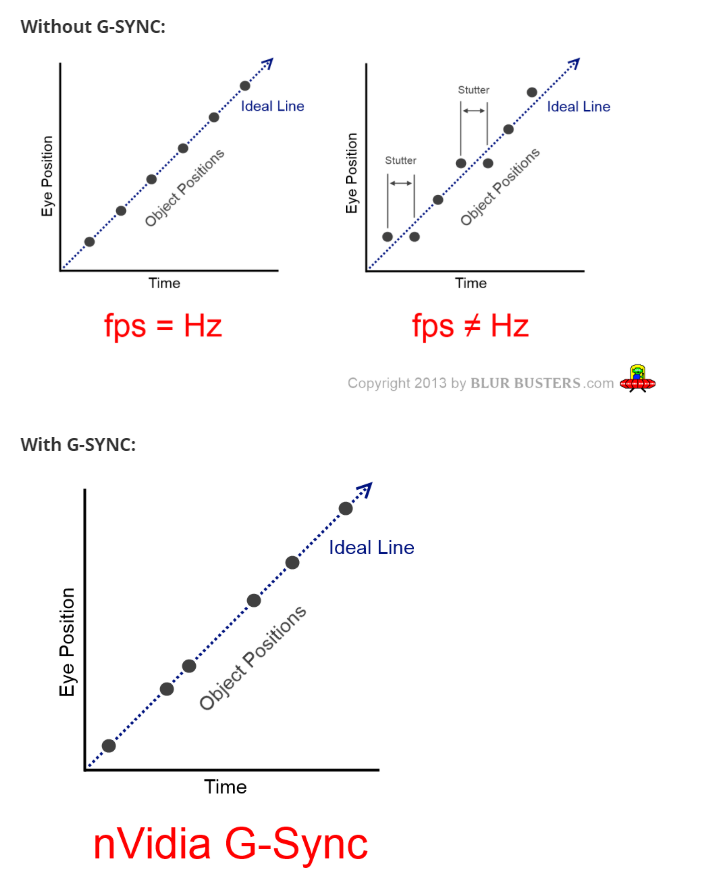

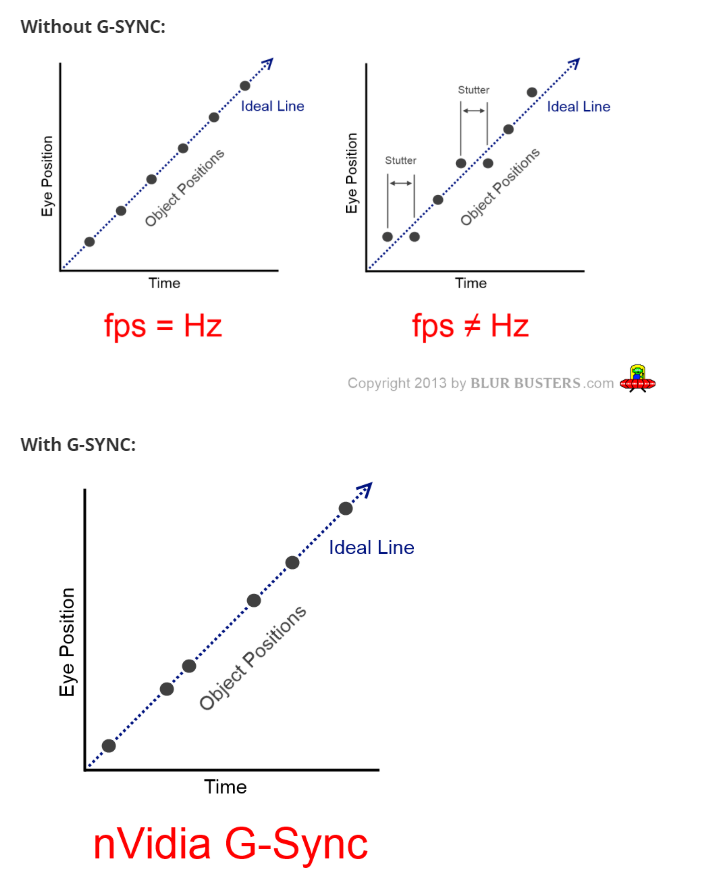

From an eye-tracking perspective, VRR keeps object positions in sync with frame positions.

As you can imagine, "perfect 51.943fps at 51.943Hz" looks smoother and better than "51.943fps at 60Hz". GSYNC makes a variable framerate look like permanent perfect VSYNC ON. This means a randomly fluctuating 50-70fps looks nearly identical to perfect 60fps@60Hz VSYNC ON, but without the input lag of VSYNC ON. Randomly fluctuating framerates show no stutter as long as they vary within a tight range.
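A minimal sketch of the "51.943fps at 60Hz" versus "51.943fps at 51.943Hz" comparison, measuring how long each frame stays on screen. It assumes perfectly even frame pacing, a framerate below the refresh rate (so no frames are dropped), a fixed-Hz VSYNC ON display where each frame waits for the next refresh boundary, and a hypothetical 144Hz VRR maximum. The VRR range's lower limit is not modeled, and all function names are illustrative.

```python
import math


def vsync_on_display_times(fps: float, hz: float, frames: int) -> list[float]:
    """Times (seconds) at which each frame first appears on a fixed-Hz VSYNC ON display.

    Each evenly paced frame waits for the next refresh boundary.
    Assumes fps < hz, so no two frames land on the same refresh.
    """
    times = []
    for n in range(frames):
        present = n / fps
        # Round up to the next refresh boundary; the tiny epsilon keeps float
        # error from pushing an exact boundary into the following cycle.
        times.append(math.ceil(present * hz - 1e-9) / hz)
    return times


def vrr_display_times(fps: float, max_hz: float, frames: int) -> list[float]:
    """Times at which each frame appears on an idealized VRR display.

    The refresh starts the instant the frame is presented, unless that would
    exceed the display's maximum refresh rate, in which case it waits.
    """
    times = []
    last = -math.inf
    for n in range(frames):
        present = n / fps
        start = max(present, last + 1.0 / max_hz)
        times.append(start)
        last = start
    return times


def on_screen_durations_ms(display_times: list[float]) -> list[float]:
    """How long each frame stays on screen before the next one replaces it, in ms."""
    return [(b - a) * 1000.0 for a, b in zip(display_times, display_times[1:])]


if __name__ == "__main__":
    fps = 51.943
    fixed = on_screen_durations_ms(vsync_on_display_times(fps, 60, 120))
    vrr = on_screen_durations_ms(vrr_display_times(fps, 144, 120))
    print("60Hz VSYNC ON on-screen times (ms):", sorted({round(d, 1) for d in fixed}))
    print("VRR on-screen times (ms):", sorted({round(d, 1) for d in vrr}))
```

In the fixed-Hz case, frames alternate irregularly between one refresh (about 16.7ms) and two refreshes (about 33.3ms) on screen, which is the stutter; in the VRR case every frame stays on screen for the same ~19.25ms.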

So generally, the stutter-removal feature gives you roughly a 30-50% framerate-prettiness premium. In other words, many people prefer perfectly smooth 40fps over stuttery 55fps@60Hz fixed-Hz. With VRR, the display runs at 40Hz, because fps permanently equals Hz, and Hz permanently equals fps, whenever in VRR range.

For a wonderful demonstration of how randomized framerates are successfully de-stuttered, see these simulated animations:

So as you can see, VRR eliminates stutter caused by changes in framerate, as long as the frametimes continually stay within the VRR range (e.g. varying between a 1/100sec frametime and a 1/50sec frametime). It can't do anything about major frametime spikes, such as the 1/10sec frametimes caused by hard disk accesses, which fall well outside the typical VRR range. Fortunately, most frametime variations are caused by GPU rendering variations, and thus VRR is able to fix stutters caused by framerate variance.
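The range check described above can be sketched in a few lines. This assumes a hypothetical 30-144Hz VRR range (actual ranges vary by monitor) and idealizes VRR as able to match any frametime inside that range exactly. The function name is illustrative only.

```python
def outside_vrr_range(frametimes_ms: list[float], min_hz: float, max_hz: float) -> list[float]:
    """Return the frametimes that VRR cannot turn into a matching refresh cycle.

    A frametime fits the VRR range when 1/max_hz <= frametime <= 1/min_hz.
    """
    shortest_ms = 1000.0 / max_hz  # fastest refresh the display supports
    longest_ms = 1000.0 / min_hz   # slowest refresh before the display must repeat a frame
    return [ft for ft in frametimes_ms if not (shortest_ms <= ft <= longest_ms)]


if __name__ == "__main__":
    # 10ms (100fps) and 20ms (50fps) are GPU-style variations; 100ms is a disk-access spike.
    samples = [10.0, 14.3, 20.0, 100.0]
    print(outside_vrr_range(samples, min_hz=30, max_hz=144))
```

Only the 100ms spike falls outside the assumed range, matching the point that VRR fixes rendering-driven variance but not long hitches.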

Make sure you run these animations in a 100% stutter-free web browser (continuously green "READY"), so that browser stutters don't interfere with the stutters caused by framerate changes.

<MATH POINT OF VIEW>

Technical Numbers View:

Also, if you're a math person, this may help make sense of how much milliseconds matter in some of the stutter mathematics:

Imagine horizontal panning motion at 4000 pixels per second (roughly 1 screen width per second at 4K). A 1 millisecond error means a 4 pixel misalignment (a 4 pixel stutter). So milliseconds matter during stutter. Now, the difference between 1/100sec = 10ms and 1/144sec = 6.9ms is 3.1 milliseconds. With VRR, 100fps frames are perfectly paced at 10ms each. Without VRR, each frame gets rounded to the nearest 144Hz refresh cycle, so the worst-case refresh-cycle misalignment (a frame landing midway between VSYNC intervals) is half of 6.9ms, approximately 3.4 milliseconds. 3.4 milliseconds out of 1000 is still 0.34%. Now, 0.34% of 4000 pixels/second movement (1 screen width panning) equals a 13.6 pixel jump (stutter), since 4000 * 0.0034 = 13.6.
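A minimal sketch of this arithmetic, following the rounded-to-nearest-refresh-cycle simplification above (a real VSYNC ON pipeline waits for the next refresh rather than the nearest one, which adds a fixed delay on top). With VRR, the shown time equals the render time, so this error would be zero. The function names are hypothetical.

```python
def worst_case_stutter_px(hz: float, speed_px_per_s: float) -> float:
    """Worst-case position error when frames snap to the nearest refresh cycle.

    The timing error is at most half a refresh cycle, converted to pixels of motion.
    """
    return speed_px_per_s * 0.5 / hz


def simulated_max_error_px(fps: float, hz: float, speed_px_per_s: float, frames: int) -> float:
    """Largest position error seen when evenly paced frames snap to the nearest refresh.

    Each frame is rendered for time n/fps but shown at the nearest refresh
    boundary, so the object appears at the "wrong" time by the difference.
    """
    worst = 0.0
    for n in range(frames):
        render_t = n / fps
        shown_t = round(render_t * hz) / hz
        worst = max(worst, abs(shown_t - render_t) * speed_px_per_s)
    return worst


if __name__ == "__main__":
    speed = 4000.0  # pixels/sec, the panning speed from the example above
    print(f"worst case at 144Hz: {worst_case_stutter_px(144, speed):.1f} px")
    print(f"100fps at 144Hz, simulated: {simulated_max_error_px(100, 144, speed, 1000):.1f} px")
```

The exact half-cycle bound gives about 13.9 px (the text rounds half of 6.94ms down to 3.4ms, hence 13.6). An evenly paced 100fps stream never lands exactly halfway between 144Hz refreshes, so its simulated peak comes out a little lower, at about 13.3 px.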

Those situations can mean a microstutter jump of several pixels in near-harmonic situations (e.g. 239fps, 118fps, 121fps, or 181fps at 240Hz, and so on). As the framerate floats up and down, the microstutter appears and disappears. The higher the framerate, the fainter the microstutter becomes. But not all your games can run at 500 frames per second, so VRR saves the day by completely eliminating this.

Obviously, the amount of pixel jump totally depends on how much the frametime is misaligned with the refresh time when the game is slaved to a fixed monitor Hz. But with GSYNC, the monitor is slaved to the game's own timing instead, and the game software directly controls the refresh cycles: the timing of each framebuffer present equals the timing of photons hitting eyeballs.

</MATH POINT OF VIEW>

chocobo wrote:Any info would be appreciated, please let me know if I have badly understood how some of these things work. I'm hoping that I made the right monitor choice and won't end up regretting passing up the 144Hz G-Sync one.

Maybe. Maybe not.

- Some of us are very stutter sensitive. Others may not be.

- Some of us are very tearing sensitive. Others may not be.

Just like other vision behaviours:

- Some of us are color sensitive. Others may not be (e.g. slight color blindness: roughly 8% of men are colorblind)

- Some of us are brightness sensitive. Others may not be. (e.g. eyestrain from brightness)

- Some of us are very flicker sensitive. Others may not be. (PWM-free monitors versus blur-reducing strobe backlights)

- Some of us are very blur sensitive. Others may not be. (Blur? Hah! We are BLUR BUSTERS)

- Etc.

TL;DR: Everybody's vision is different.