Falkentyne wrote: As far as clarity, you won't be able to tell a difference human perception wise, between 0.5ms (the lowest) and 1.0 ms.

Not true. It depends on a specific person's maximum eye-tracking speed. A number of us (including myself and masterotaku) CAN just barely see a minor motion blur difference between 0.5ms and 1.0ms, but only at motion speeds faster than 2400 pix/sec. (For example, view http://www.testufo.com/photo at motion speed setting "3000" and look at the blurriness of the window frames in the Quebec photo while comparing 0.5ms and 1.0ms in Strobe Utility.)

There's only a small window of motion speeds where 0.5ms persistence helps: motion speed 1920 pixels/sec is too slow to easily show a difference between 0.5ms and 1.0ms, while motion speed 3840 pixels/sec (1 screen width in 1/2 second) is too fast for most people's eyes to track on a 1080p monitor at desktop viewing distances. If you play a videogame with VSYNC ON where motion speeds of 3000 pix/sec are common, and you've got light-sensitive eyes, then there's a definite motion clarity difference between 0.5ms and 1.0ms. If your eyes cannot track faster than one screen width per second (~1920 pixels/second), you will not see a difference between 0.5ms and 1.0ms. If your eyes can track faster than that, this is where you begin to see the benefits of 0.5ms over 1.0ms.
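A minimal sketch of the arithmetic behind this "window", assuming a 1920-pixel-wide (1080p) display. The 1x and 2x screen-widths-per-second cutoffs are taken from the paragraph above as rough rules of thumb, not measured limits, and the function names are hypothetical, chosen for illustration.

```python
SCREEN_WIDTH_PX = 1920  # assumption: 1080p monitor, 1920 pixels wide


def screenwidths_per_second(speed_px_s: float, width_px: int = SCREEN_WIDTH_PX) -> float:
    """How many full screen widths the motion covers in one second."""
    return speed_px_s / width_px


def seconds_per_screenwidth(speed_px_s: float, width_px: int = SCREEN_WIDTH_PX) -> float:
    """Time for an object (or a pan) to cross the full screen width once."""
    return width_px / speed_px_s


def in_half_ms_window(speed_px_s: float, width_px: int = SCREEN_WIDTH_PX) -> bool:
    """Rough check for the speed range where 0.5ms vs 1.0ms may be visible:
    faster than ~1 screen width/s (slower shows too little difference) but
    slower than ~2 screen widths/s (faster is hard for most eyes to track).
    Both cutoffs are hypothetical rules of thumb taken from the prose."""
    w = screenwidths_per_second(speed_px_s, width_px)
    return 1.0 < w < 2.0


for speed in (1920, 2400, 3000, 3840):
    print(f"{speed} px/s: {screenwidths_per_second(speed):.2f} screen widths/s, "
          f"crosses screen in {seconds_per_screenwidth(speed):.2f} s, "
          f"in window: {in_half_ms_window(speed)}")
```

On a 1920-pixel-wide screen, 3840 px/s crosses the screen in 0.5 s and 3000 px/s in 0.64 s (roughly 2/3 of a second), so only the middle speeds fall inside this rough window.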

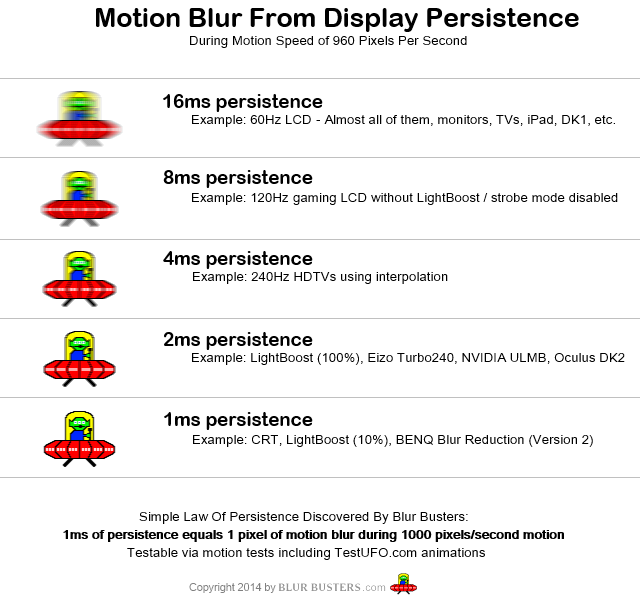

1ms difference = 1 pixel difference of motion blur for every 1000 pixels/second of smooth motion.

So a 0.5ms difference at 3000 pixels/second translates to a 1.5 pixel difference in the motion blur.
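The same rule written as a formula: blur width in pixels ≈ persistence (ms) × motion speed (px/s) / 1000. Below is a minimal sketch, assuming an idealized strobe and perfect eye tracking, so that the blur width equals the distance the image moves during one flash. Real strobe pulses and real eye tracking are less ideal, and the helper names are hypothetical.

```python
def blur_px(persistence_ms: float, speed_px_s: float) -> float:
    """Approximate motion blur width (pixels) for eye-tracked motion:
    1 ms of persistence = 1 pixel of blur per 1000 px/s of motion."""
    return persistence_ms * speed_px_s / 1000.0


def blur_difference_px(p1_ms: float, p2_ms: float, speed_px_s: float) -> float:
    """Extra blur (pixels) of one persistence setting over another at a given speed."""
    return abs(blur_px(p2_ms, speed_px_s) - blur_px(p1_ms, speed_px_s))


for speed in (1920, 2400, 3000):
    print(f"{speed} px/s: 0.5ms -> {blur_px(0.5, speed):.2f} px, "
          f"1.0ms -> {blur_px(1.0, speed):.2f} px, "
          f"difference {blur_difference_px(0.5, 1.0, speed):.2f} px")
```

At 3000 px/s this gives 1.5 px of blur at 0.5ms versus 3.0 px at 1.0ms, i.e. the 1.5 pixel difference stated above.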

This is still noticeable to humans, albeit barely: enough to see a bit of motion blur in small, fine text scrolling at 3000 pixels/second, for people who can still track motion that fast. You can also use the UFO at www.testufo.com: increase the motion speed and observe the alien's three eyes on the UFO, which are crisp at 0.5ms versus blurry at 1.0ms.

It is certainly subtle; there is only a 1 to 1.5 pixel motion blur difference between 0.5ms and 1.0ms persistence during 3000 pixels/second motion. That is roughly the speed of a fast 180-degree flick turn or a very rapid full-screen, full-framerate pan: approximately 1 screen width panned in 2/3 of a second. That said, the difference between 0.5ms and 1.0ms is mainly noticeable in VSYNC ON (stutter-free) situations rather than VSYNC OFF (lower lag, but more microstutter, which makes 0.5ms and 1.0ms too difficult to tell apart).

Still, yes, 1.0ms is the lowest "practical" persistence, on a brightness versus motion clarity basis, for most everyday FPS gaming, especially with VSYNC OFF, which is essential for competition (microstutters from VSYNC OFF and from mouse fluidity limitations will usually obscure the ability to see 0.5ms versus 1.0ms). However, brightness-sensitive people who need an even dimmer picture (e.g. in a totally dark room) might as well reduce persistence to 0.5ms if the resulting brightness suits them. But usually, you'll want 1.0ms, 1.5ms, or thereabouts.
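The brightness side of this tradeoff can be sketched with a simple idealized model. Assuming a strobe backlight that flashes once per refresh at a fixed peak brightness, average brightness scales with the duty cycle (persistence divided by the refresh period), so halving persistence from 1.0ms to 0.5ms roughly halves brightness. The 120 Hz refresh rate and the function names are hypothetical examples; real strobe backlights may not hold peak brightness constant across settings.

```python
def duty_cycle(persistence_ms: float, refresh_hz: float) -> float:
    """Fraction of each refresh period the backlight is lit (one strobe per refresh)."""
    period_ms = 1000.0 / refresh_hz
    return persistence_ms / period_ms


def relative_brightness(persistence_ms: float, reference_ms: float) -> float:
    """Average brightness relative to a reference persistence, assuming the
    same refresh rate and the same peak strobe brightness (idealized)."""
    return persistence_ms / reference_ms


REFRESH_HZ = 120  # hypothetical example refresh rate

for p in (0.5, 1.0, 1.5):
    print(f"{p} ms: duty cycle {duty_cycle(p, REFRESH_HZ):.1%}, "
          f"brightness vs 1.0ms: {relative_brightness(p, 1.0):.2f}x")
```

Under these assumptions, 1.0ms at 120 Hz lights the backlight for about 12% of each refresh, and 0.5ms for about 6%, which is why going below 1.0ms is mainly attractive when a dimmer picture is wanted anyway.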

Example, posted in another thread:

masterotaku wrote: At high frequencies, I use 003 (0.5ms), and 1920px/s is perfectly crisp, but there's a bit of blur at 2400px/s. This monitor is amazing at this.

(I confirm this; my eyes concur too.)