Next-generation scanrate: Global Scanrate/F-SRR

Posted: 12 Oct 2020, 00:26

Hello everyone, I've known about Blur Busters since about 2012/2013, when Mark Rejhon popped onto the scene, although some websites banned him for what they considered spamming. It's great that this website was created, but I've noticed people haven't discussed certain technologies out there, or how they could be implemented.

As it stands, my knowledge comes from reading about this on the internet. I have no hands-on experience with the things covered on your site. It's interesting and I understand it intellectually, but I don't use any of the technologies available. Around late 2012/early 2013 I decided to quit gaming and focus on other things, and I have not played an actual game in about 8 years. My interest is mostly from a gaming perspective, since games are the fastest form of visual reproduction, but I can see people wanting this for movies and other media too.

Anyway, I'm talking about GSR, or Global Scan-rate, a.k.a. F-SRR, or Full-Screen Refresh Rate. Before going further: about 3 years ago I researched this and found nothing on various websites, even scientific ones. I did run into an AVS Forum post someone made about the same tech, Global Scan-rate. I can't find it now, although it was searchable in their future display technology forum section; the title was something along the lines of "Future scanning technology: Global Scan-rate".

The poster and the replies mentioned that producing such a thing requires expensive circuitry, either per pixel or per sub-pixel, or maybe a circuit that controls both pixels and sub-pixels. Each pixel/sub-pixel would need its own circuit, driving up costs.

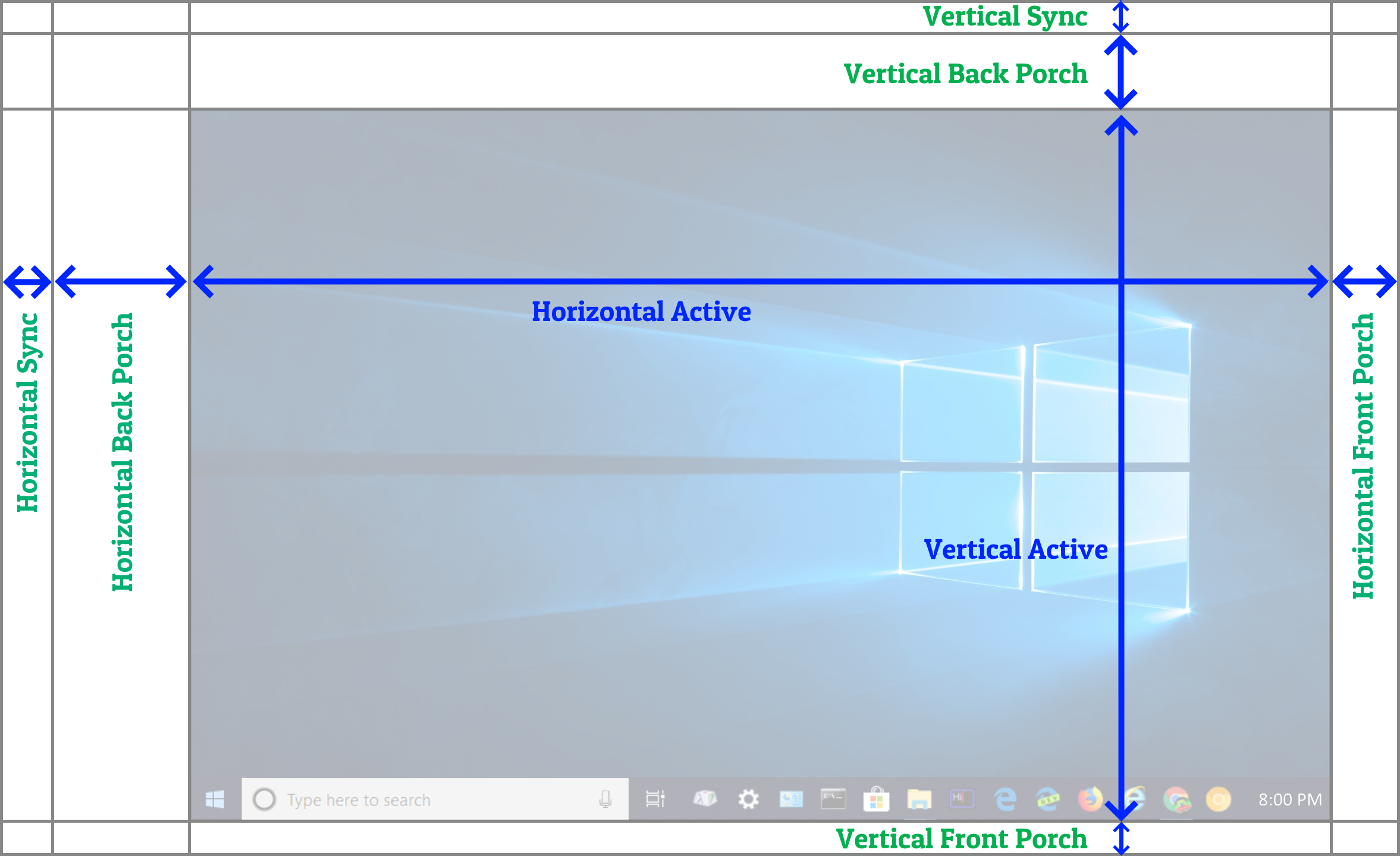

Interlaced Scan: alternates between two fields, drawing the odd lines first (1, 3, 5, 7, etc.) and then the even lines (2, 4, 6, 8, etc.).

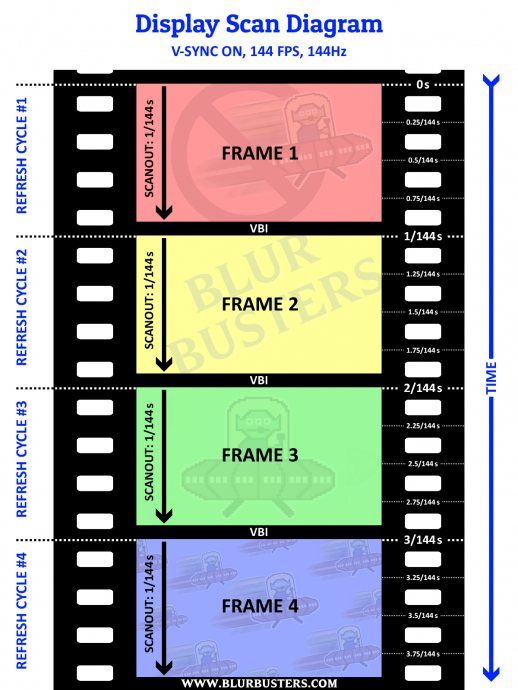

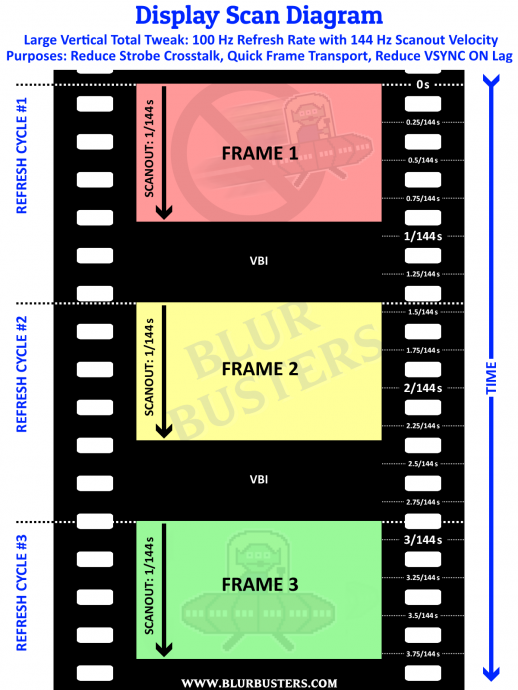

Progressive Scan: sweeps through the lines in order (1, 2, 3, 4, 5, etc.). Visually, progressive looks the best, but it has some motion limitations compared with interlaced scan, which "double-pumps" the scanning by drawing two fields per frame. Higher refresh rates and higher frame rates are what drive motion blur reduction.
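
To make the two line orders concrete, here is a minimal Python sketch of the sequence in which rows are drawn under each scheme, using 1-based row numbers as in the descriptions above. The function names are hypothetical and the sketch only models ordering, not timing or field rate.

```python
def progressive_order(rows: int) -> list[int]:
    """Progressive scan: every row, top to bottom (1, 2, 3, ...)."""
    return list(range(1, rows + 1))


def interlaced_order(rows: int) -> list[list[int]]:
    """Interlaced scan: two fields, odd rows first, then even rows."""
    odd_field = list(range(1, rows + 1, 2))   # 1, 3, 5, ...
    even_field = list(range(2, rows + 1, 2))  # 2, 4, 6, ...
    return [odd_field, even_field]


if __name__ == "__main__":
    print(progressive_order(8))  # [1, 2, 3, 4, 5, 6, 7, 8]
    print(interlaced_order(8))   # [[1, 3, 5, 7], [2, 4, 6, 8]]
```

Each interlaced field covers only half the rows, which is why it can be sent twice as often as a full progressive frame for the same bandwidth.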

Global Scan: my assumption is that a memory buffer holds the entire drawn image, and then the whole image is displayed in one shot, so it looks like a camera flash: a full-screen refresh.
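
The difference between a progressive (rolling) scanout and the global scan described above can be sketched as the time at which each row receives new data within one refresh. This is a simplified model under a loud assumption: there is no blanking interval, so the progressive sweep is spread evenly over the whole refresh period. The function and mode names are hypothetical.

```python
def row_update_times_ms(rows: int, refresh_hz: float, mode: str) -> list[float]:
    """Time (ms) after the start of a refresh at which each row is updated.

    Assumption: no blanking interval, so a progressive sweep uses the
    entire refresh period to go from the top row to the bottom row.
    """
    period_ms = 1000.0 / refresh_hz
    if mode == "progressive":
        # Rolling top-to-bottom scanout: row i starts at i/rows of the period.
        return [i * period_ms / rows for i in range(rows)]
    if mode == "global":
        # Hypothetical global scan: every row is updated at the same instant.
        return [0.0] * rows
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    print(row_update_times_ms(4, 100, "progressive"))  # [0.0, 2.5, 5.0, 7.5]
    print(row_update_times_ms(4, 100, "global"))       # [0.0, 0.0, 0.0, 0.0]
```

At 75Hz the refresh period is about 13.3 ms, so under these assumptions the bottom row of a 1080-row progressive scan is updated just under 13.3 ms after the top row, while the global mode updates every row at time 0.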

Cost aside, I assume this could only be tackled by the R&D divisions of major display manufacturers or by university-funded research.

Why has no one implemented a global scan rate where, say, at 75Hz, 75 full frames are each displayed near-instantly to the user? In other words, full-screen rather than progressive. I'm very surprised this type of information isn't readily available or even theorycrafted by display users or companies; even finding that AVS Forum post from someone thinking along the same lines was a funny coincidence. It seems even paywalled websites don't have this information, or it's paywalled to hell and back and the only way to get it is to spend lots of money on scientific research papers.

I recently read that LCDs, particularly those that insert black frames to clear the previous image and reduce motion blur, do perform a kind of global scan in the sense that the whole screen is flashed black at once. But that is just a quirky way of reducing or eliminating motion blur. My question concerns the image itself as it is "drawn" on the screen: rather than a progressive scan, flashing out the complete image at once, to further reduce motion blur and also reduce the whole zig-zag pattern that some people witnessed back in the CRT days as the scanout swept from top to bottom. I assume LCDs show something similar in a subtler way, since they are fixed, flatter 2D displays, unlike CRTs, whose 3D nature (the hollow tube) produces certain distortions, whether measurable by the user or only by scientific equipment.
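
One well-documented consequence of top-to-bottom scanout is that a vertical edge moving sideways appears slanted, because the bottom rows are refreshed later than the top rows. Whether this is the same as the "zig-zag" pattern described above is an assumption. The sketch below estimates that horizontal offset; the speed value and the `scan_fraction` parameter (share of the refresh period the sweep takes, 0.0 modelling a hypothetical global scan) are illustrative assumptions, not measurements.

```python
def scanout_skew_px(speed_px_per_s: float, refresh_hz: float,
                    scan_fraction: float = 1.0) -> float:
    """Horizontal offset (px) between the top and bottom of a moving edge.

    speed_px_per_s: horizontal on-screen speed of the object (assumed input).
    scan_fraction: share of the refresh period taken by the top-to-bottom
        sweep; 1.0 = whole period (no blanking), 0.0 = hypothetical global scan.
    """
    # Distance the object travels during the part of the refresh the sweep spans.
    return speed_px_per_s / refresh_hz * scan_fraction


if __name__ == "__main__":
    print(scanout_skew_px(1500, 75))       # 20.0 (progressive, no blanking)
    print(scanout_skew_px(1500, 75, 0.0))  # 0.0  (global scan)
```

Under these assumptions, a global scan removes the skew entirely, while a faster progressive sweep (smaller `scan_fraction`) only shrinks it.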

Anyway, is there a specific reason why global scanout has not been investigated as a source of future scanning technologies, besides cost, which I don't care about? I'm surprised people don't want to invest in new ways of using the display.