chenifa wrote: ↑17 Mar 2021, 10:48

My first thought is that the gpu takes the most recent prerender and dumps the rest.

Are you using VSYNC ON or NVIDIA Fast Sync?

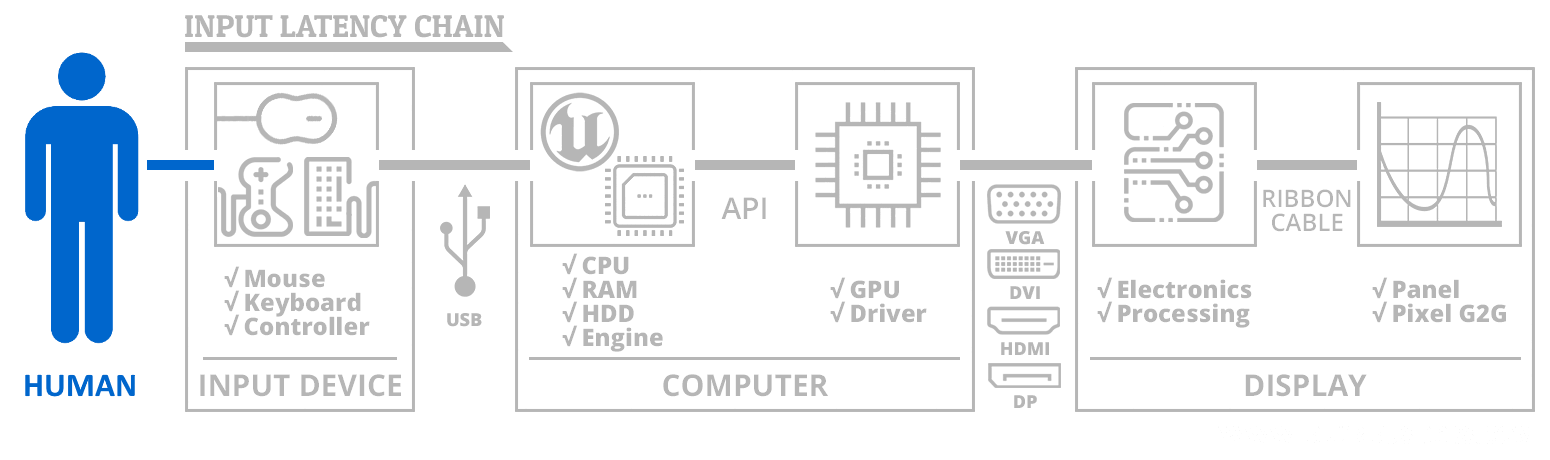

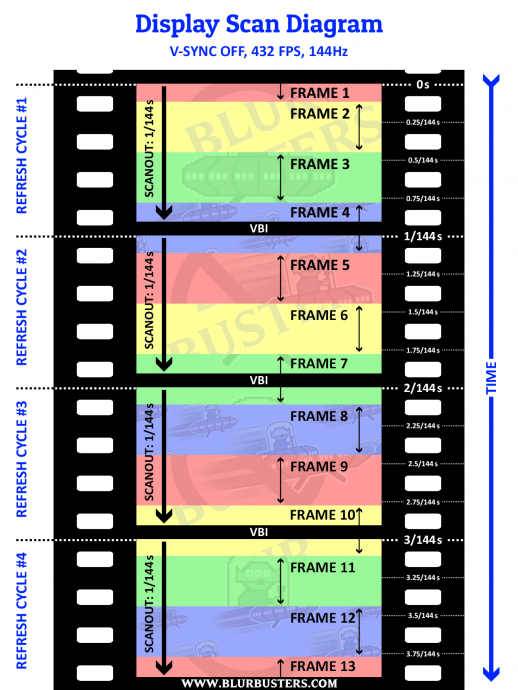

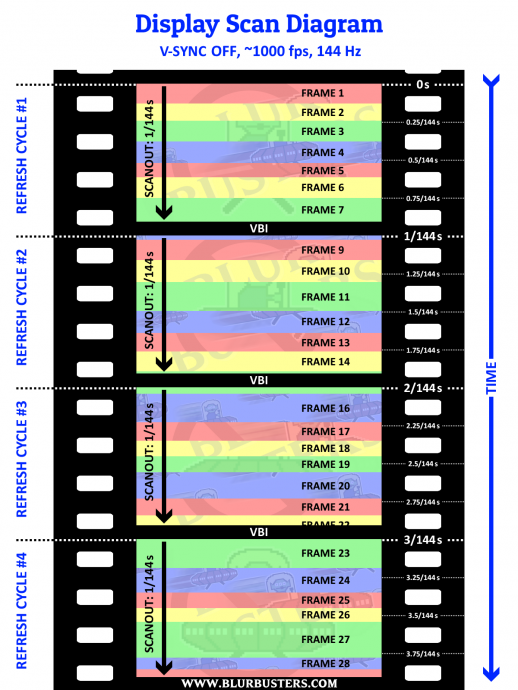

If you're using VSYNC OFF, frame dumping never happens (unless the game intentionally does it, but that's rare during VSYNC OFF). Frameslices can vary in thickness, since the distance between tearlines depends on frame time, and you can have multiple frameslices per refresh cycle.

In rare cases, multithreaded rendering with VSYNC OFF can create a temporary frame queue because a frame presentation is blocked (e.g. the GPU is maxed at 100% and busy doing something else, which now blocks everything else). The queued frames then spew out suddenly: it can take less than 1ms to present 3 frames during VSYNC OFF, but even those appear as thin frameslices. Most of the time GPUs pipeline differently than this, but Present() latency can suddenly spike (a blocked call) while a different thread keeps rendering, so you may see sudden brief spikes in frame queue depth, especially if you're pushing GPU limits.

But during VSYNC OFF, the frame queue can flush out very fast and still have every frame visible; see my diagrams below. So frames aren't necessarily "dumped" unseen during VSYNC OFF, once you understand how VSYNC OFF relates to scanout.

It's useful to know that tearline spacing follows the math of the horizontal scan rate. At 240Hz this is usually 270,000 pixel rows per second, so a 0.5ms interval between two Present() calls creates a frameslice approximately 270000 * 0.0005 = 135 pixels tall (e.g. when a frame queue suddenly empties through two consecutive Present()s 0.5ms apart). The horizontal scan rate is also known as the horizontal refresh rate: the number of pixel rows per second, including the offscreen pixel rows in the blanking interval (usually less than 5% of the visible resolution).
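The frameslice arithmetic above is a single multiplication. A minimal sketch, assuming the 270,000 rows/sec figure for 240Hz quoted above (the function name is hypothetical, for illustration only):

```python
def frameslice_height(scan_rate_hz: float, present_interval_s: float) -> float:
    """Approximate height, in pixel rows, of a VSYNC OFF frameslice.

    Scanout advances one pixel row every 1/scan_rate_hz seconds, so the distance
    between two tearlines is rows-per-second times the time between Present()s.
    scan_rate_hz counts blanking rows too, so a slice that straddles the blanking
    interval shows fewer visible rows than this value.
    """
    return scan_rate_hz * present_interval_s


# 240Hz mode at an assumed 270,000 pixel rows per second.
print(frameslice_height(270_000, 0.0005))  # about 135 rows for a 0.5 ms interval
print(frameslice_height(270_000, 0.002))   # about 540 rows for a 2 ms interval
```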

I'm an expert on the black box between Present() and photons, including the pixels coming out of the GPU output; you can see my Tearline Jedi VSYNC OFF Raster Beam Racing Experiments, which is how Guru3D was inspired to invent RTSS Scanline Sync.

VSYNC OFF 432fps at 144 Hz

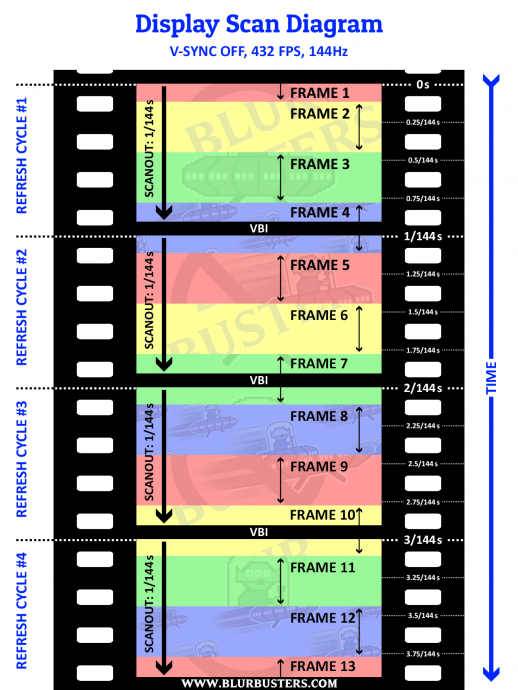

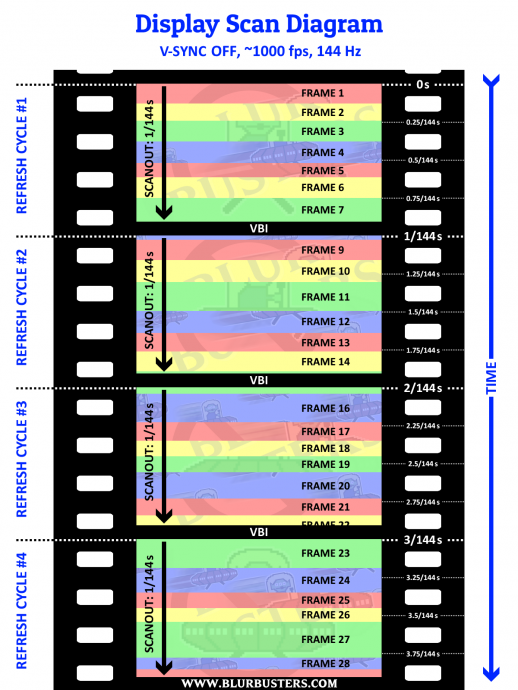

VSYNC OFF 1000fps at 144 Hz


Notice the thinner and thicker slices. They come from frametime variance: some frames may take 0.5ms and others 2ms, and the 2ms frametimes produce frameslices 4x taller than the 0.5ms frametimes.
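To see where the tearlines land for a sequence of frametimes, here is a hypothetical model (not a driver or graphics API). It assumes scanout of row 0 starts at t = 0, each frame is Present()ed the instant it finishes, and the tearline appears exactly at the current scanout row. The 1080p144 timing of 1125 total rows (162,000 rows/sec) is an assumption for illustration.

```python
from itertools import accumulate


def tearline_rows(frametimes_s, scan_rate_hz, vertical_total, visible_rows):
    """Return the scanline each tearline lands on, or None if it falls in blanking.

    Simplified model: scanout repeats every vertical_total rows at scan_rate_hz,
    and each Present() splices the new frame in at the current scanout row.
    """
    rows = []
    for t in accumulate(frametimes_s):                 # cumulative Present() timestamps
        row = round(t * scan_rate_hz) % vertical_total  # scanout position at that moment
        rows.append(row if row < visible_rows else None)  # None: hidden in blanking
    return rows


# Assumed 1080p144 timing: 1125 total rows x 144 Hz = 162,000 rows per second.
frametimes = [0.0005, 0.002, 0.0005, 0.002]  # alternating 0.5 ms and 2 ms frames
rows = tearline_rows(frametimes, 162_000, 1125, 1080)
gaps = [b - a for a, b in zip(rows, rows[1:])]
print(rows)
print(gaps)  # slices from 2 ms intervals come out 4x taller than from 0.5 ms ones
```

A real system adds jitter to every timestamp, so real tearlines wander by a few rows; the proportionality between frametime and slice height is what the model is meant to show.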

And, to show mastery of tearline control in software programming:

If you need to pick your jaw up from the floor, there are more YouTube videos of Tearline Jedi mastery...

At 240Hz with a 270kHz scan rate, I needed to program microsecond busywaits in 1/270000sec increments before Present()ing the frame to move the tearline down by 1 pixel. It all jitters because of timing imprecision, but it demonstrates that tearlines are just rasters based on the horizontal refresh rate (the number visible in ToastyX Custom Resolution Utility).
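The busywait arithmetic can be sketched as follows. This is illustrative only: the function names are hypothetical, the actual Present() call is a graphics-API call and is only marked with a comment, and Python's timing jitter is far worse than native code's, so treat it as a demonstration of the math rather than a working beam racer.

```python
import time


def tearline_shift_delay(rows: int, scan_rate_hz: float) -> float:
    """Seconds to delay Present() so the tearline lands `rows` pixel rows lower."""
    return rows / scan_rate_hz


def busy_wait(seconds: float) -> None:
    """Spin on a high-resolution clock; sleep() is far too coarse for microseconds."""
    deadline = time.perf_counter() + seconds
    while time.perf_counter() < deadline:
        pass


delay = tearline_shift_delay(1, 270_000)
print(f"{delay * 1e6:.2f} us per pixel row")  # 3.70 us at 270 kHz
busy_wait(tearline_shift_delay(100, 270_000))  # ~370 us: tearline ~100 rows lower
# ...the Present() call would go here (graphics-API call, not shown)
```

Spinning instead of sleeping is the design choice that matters here: OS sleep granularity is typically much coarser than the ~3.7 microsecond row period.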

To answer some questions: some of this is measurable via tearline behavior too. I can infer the latency of my Present()s and frame queues by watching the ground truth of raster tearline positions, which are almost atomic-clock-like in precision. You can even use a high-speed camera and use the tearline position as part of latency calculations, and the numbers actually end up matching photodiode measurements, depending on whether the photodiode is placed immediately above or immediately below the tearline. Latency is highest at the bottom of a frameslice and lowest at its top edge, with a continuous latency gradient along the vertical dimension of the frameslice (between two tearlines).
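The latency gradient inside a frameslice follows directly from scanout order. A minimal sketch, assuming a constant extra delay for the cable/scaler/TCON/GtG path (the `fixed_latency_s` parameter and function name are hypothetical):

```python
def row_latency_s(row, tearline_row, scan_rate_hz, fixed_latency_s=0.0):
    """Present()-to-photons latency for a pixel row inside a VSYNC OFF frameslice.

    The row just under the tearline is scanned out almost immediately after
    Present(); each row further down waits one more scanline period.
    fixed_latency_s is an assumed constant for cable/scaler/TCON/GtG delay.
    """
    if row < tearline_row:
        raise ValueError("row is above the tearline; it belongs to the previous frame")
    return fixed_latency_s + (row - tearline_row) / scan_rate_hz


# Tearline at row 400 on an assumed 270 kHz mode: top edge vs. 135 rows lower.
print(row_latency_s(400, 400, 270_000))  # 0.0 (top edge of the slice)
print(row_latency_s(535, 400, 270_000))  # about 0.0005 s further down the slice
```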

Frame queue latency can sometimes be sub-millisecond and still produce visible frameslices. Say you present 3 consecutive frames (during VSYNC OFF) to quickly empty a frame queue (3 down to 1) in less than a millisecond: that doesn't mean the frames are discarded, if you're using VSYNC OFF.

Example: if you present 3 frames quickly (the Present() call unblocks, and the frame queue suddenly flows), you might notice 3 thin frames, say only 100-pixel-tall frameslices (seen with a high-speed camera if you're trying to catch momentarily visible tearlines). There are no tearlines for a while, then suddenly 3 tearlines in the same refresh cycle (hard to see unless you have a high-speed camera). To turn tearline distances into driver-side frame presentation latencies, you can even load ToastyX, get the horizontal scan rate, then calculate the time interval between frames simply from the distance between tearlines! You can also estimate: if the tearlines are 1/10th of the screen height apart, each interval is 1/10th of a refresh time. So at 144Hz you had 1/1440sec (0.7ms) between tearlines, and with three tearlines the sudden frame-queue emptying took about 1.4ms total (2 x 0.7ms), measured from high-speed footage by measuring the distances between tearlines and converting them to time. It's a clever tearline-position-as-clock method (if done properly). It's a bit of overkill, but valid if one is scientifically inclined to measure a black box this way via its output tearlines.
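Both conversions above can be sketched in a few lines. The function names are hypothetical, and the 162,000 rows/sec figure assumes a 1080p144 mode with 1125 total rows; read the real value from ToastyX for your own mode.

```python
def tearline_intervals_s(tearline_rows, scan_rate_hz):
    """Time between consecutive Present()s, from tearline positions (pixel rows,
    top to bottom, within one refresh cycle) measured in high-speed footage."""
    return [(b - a) / scan_rate_hz for a, b in zip(tearline_rows, tearline_rows[1:])]


def interval_from_height_fraction(fraction, refresh_hz):
    """Rough estimate: a gap of `fraction` of the screen height is treated as that
    fraction of a refresh time. Ignoring blanking rows makes it slightly high."""
    return fraction / refresh_hz


# Three tearlines 108 rows (1/10th of 1080) apart, assumed 162,000 rows/sec.
gaps = tearline_intervals_s([200, 308, 416], 162_000)
print([round(g * 1000, 3) for g in gaps], "ms between tearlines")
print(round(sum(gaps) * 1000, 3), "ms for the whole burst")
print(round(interval_from_height_fraction(0.1, 144) * 1000, 3), "ms (1/10th-height estimate)")
```

The scan-rate method gives about 0.67 ms per gap here, versus about 0.69 ms from the quick height-fraction estimate; the difference is the blanking rows the estimate ignores.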

So there are multiple ways to measure these behaviours. To close out: if you add a photodiode oscilloscope to this, Present()-to-photons latency is almost lagless at the first pixel row underneath the tearline, about 2-3ms (the latency of the DisplayPort transceiver + scaler/TCON + pixel GtG response).

The point is that if you're using VSYNC OFF, frames are typically not thrown out. Most of the time the queue stays at a depth of 1, because frame presentation (Present()) is almost non-blocking during VSYNC OFF. In rare cases it may block for whatever reason and the frame queue piles up, but the queue may then spew out as rapid sub-millisecond frameslices. So even if you deliver 3 frames in one millisecond, you may still have visible parts of all 3 frames on the screen, thanks to the way VSYNC OFF splices frames into the scanout.

Scanout is the process of serializing a 2D image into a 1D stream for the wire to a display. A GPU output spews out 1 pixel row every 1/270000sec for 1080p240 (just look at "Refresh" under "Horizontal" in ToastyX to see how fast a GPU outputs one pixel row), and most gaming monitors stream that pixel row in real time straight onto the panel in their existing top-to-bottom scanout sweep. There's a small rolling window of a few pixel rows for processing/GtG/etc., but the cable-to-panel latency is effectively sub-refresh.
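The horizontal scan rate is just the total row count (visible plus blanking) times the refresh rate. Working backwards from the figures above, 270,000 / 240 = 1125 total rows, i.e. 1080 visible plus 45 blanking; that split is an inference, and your monitor's actual timing is whatever ToastyX reports. A minimal sketch with hypothetical function names:

```python
def horizontal_scan_rate(vertical_total_rows: int, refresh_hz: float) -> float:
    """Pixel rows per second leaving the GPU output, blanking rows included."""
    return vertical_total_rows * refresh_hz


def row_output_time_s(row: int, vertical_total_rows: int, refresh_hz: float) -> float:
    """Offset from the start of a refresh cycle at which `row` leaves the GPU output."""
    return row / horizontal_scan_rate(vertical_total_rows, refresh_hz)


rate = horizontal_scan_rate(1125, 240)
print(rate)  # 270000
print(row_output_time_s(1079, 1125, 240) * 1000, "ms into the refresh for the last visible row")
```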

What this really means is that you can present 3 frames very fast (less than a millisecond apart) and still have visible frameslices from ALL of them.

Of course, this assumes you're testing with VSYNC OFF rather than certain VSYNC ON / Fast Sync workflows (where frames CAN be discarded completely unseen).
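The contrast between the two behaviours can be shown with two toy models. Both are simplifications with hypothetical names: the VSYNC OFF model assumes all presents fall in the visible part of one refresh, and the "latest frame wins" model is a rough stand-in for Fast Sync-style behaviour, not NVIDIA's actual implementation.

```python
def visible_rows_vsync_off(present_times_s, next_present_s, scan_rate_hz):
    """Rows of each frame that reach the screen when every Present() splices in at
    the current scanout position (all presents within one refresh's visible area)."""
    ends = list(present_times_s[1:]) + [next_present_s]
    return [round((end - start) * scan_rate_hz) for start, end in zip(present_times_s, ends)]


def visible_frames_latest_wins(present_times_s, vblank_s):
    """Simplified Fast Sync-like model: at vblank only the newest completed frame is
    scanned out; older frames finished during the same refresh are never shown."""
    ready = [i for i, t in enumerate(present_times_s) if t <= vblank_s]
    return ready[-1:]  # index of the single frame displayed, or [] if none is ready


presents = [0.0020, 0.0023, 0.0026]  # 3 frames presented within 0.6 ms
print(visible_rows_vsync_off(presents, 0.0038, 270_000))  # every frame gets a slice
print(visible_frames_latest_wins(presents, 1 / 240))       # only the last frame survives
```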

P.S. This talk made RTSS Scanline Sync possible, when I communicated all this to Guru3D...