This is seriously getting out of hand!!

I will read your post carefully and maybe come back with one or two questions, if I may.

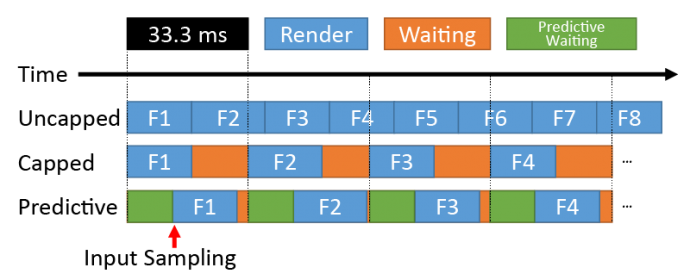

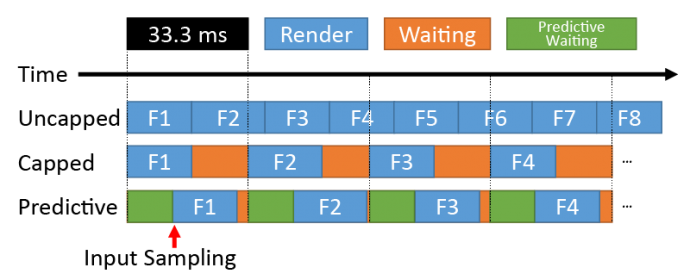

pegnose wrote: So RTSS replaces the present() function with one that is able to wait until the given frame time requirement is fulfilled. Effectively the system is CPU limited (hence 0 pre-rendered frames), even if actually not much work is done.

There are many tactics of waiting. Let's also note the GeDoSaTo technique of delaying inputread+render+flip too, which is not typically done by RTSS. It's possible for a capper to present quickly, immediately followed by a predictive blocking wait (based on historic frametimes) before returning to the caller's Present(), to put the next inputread-render-flip closer to the VSYNC intervals, like this:

RealNC wrote: The highest value you can use in NVidia Profile Inspector is 8. Maybe the driver allows even higher values, but there's no point in having an option for that. Even 8 is pretty much useless. Games become unplayable.

Not sure what the default ("app controlled") is when a game doesn't specify a pre-render queue size. I've heard it's 3, and that's decided by DirectX. But not sure. It could be 2. Or it might depend on the number of cores in the CPU. The whole pre-render buffer mechanism and the switch to asynchronous frame buffer flipping was done because mainstream CPUs started to have more than one core in the 2000s.

pegnose wrote: Makes sense. Wow, 8!

It can be useful in Quad-SLI situations; then it's only 2 per card.
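To put rough numbers on why a deep pre-render queue can make games feel unplayable, here is a minimal sketch. It assumes a GPU-bound game where the queue stays full, so each queued frame adds about one frametime of delay before it is displayed. The function name `queue_latency_ms` is made up for illustration and does not come from any driver or tool.

```python
def queue_latency_ms(queued_frames, fps):
    """Approximate extra latency from a full pre-render queue.

    Assumption: the GPU is the bottleneck, so every queued frame waits
    roughly one frametime before it reaches the display.
    """
    frametime_ms = 1000.0 / fps
    return queued_frames * frametime_ms


if __name__ == "__main__":
    # Compare a few queue depths at 60 fps.
    for depth in (1, 2, 3, 8):
        print(depth, "frames queued:", round(queue_latency_ms(depth, 60), 1), "ms")
```

Under these assumptions, a queue of 8 at 60 fps adds well over 100 ms, while splitting the same queue across four cards would leave 2 frames per card.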

pegnose wrote: @Chief Blur Buster:

This is seriously getting out of hand!!

I will read your post carefully and maybe come back with one or two questions, if I may.

No worries, but since people were bringing up the "scanout latencies" discussion, I had to make sure that was covered. Most of this is far beyond the average layperson's ability to conceptualize, but this thread certainly attracted highly skilled discussion.

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

pegnose wrote: From the previous discussion I understood that VRR with V-Sync enabled in NVCP has the same issues as mere V-Sync, correct? Only if you disable V-Sync with VRR, and accept some minor tearing here and there, you can get rid of all the down-sides?

I *think* you're talking about the issue (correct me if I assumed wrong here) where G-SYNC + V-SYNC, when within the refresh rate, can exhibit more hitching during frametime spikes or transitions from 0 to x frames (and vice versa) when compared to standalone V-SYNC, no sync, or G-SYNC + V-SYNC "Off"?

Chief Blur Buster wrote: Which means latency is more uniform for the whole screen plane during VSYNC OFF, unlike for VSYNC ON

At the time a particular line appears on the screen, this line is less old than if it had been created with VSYNC ON. So latency is more uniform with respect to when data appears on the screen. But at the time all lines have been drawn and the image is complete, the latency with VSYNC ON is more uniform again from a certain standpoint, because all pixels were created at the same time and have the same latency with respect to the time of viewing (while with VSYNC OFF the image in fact consists of multiple images).
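The "age of a line at the moment it is scanned out" argument can be sketched with a toy model. This is an idealized illustration only: it assumes frames are presented instantly and evenly spaced, ignores render time and the rest of the input chain, and the function name `row_latency_ms` is hypothetical.

```python
def row_latency_ms(row_fraction, refresh_hz, slices_per_refresh=1):
    """Age of the pixel data when a given row is scanned out.

    row_fraction: 0.0 is the top of the screen, values approach 1.0 at the bottom.
    slices_per_refresh=1 models VSYNC ON (one frame for the whole scanout).
    slices_per_refresh=N models VSYNC OFF with N evenly spaced frames per
    refresh, so each frameslice is freshly presented when its rows are scanned.
    """
    scanout_ms = 1000.0 / refresh_hz
    slice_ms = scanout_ms / slices_per_refresh
    # Time since the start of scanout at which this row is drawn.
    t = row_fraction * scanout_ms
    # Time elapsed since the most recent frame was presented.
    return t % slice_ms


if __name__ == "__main__":
    for frac in (0.1, 0.5, 0.9):
        on = row_latency_ms(frac, 100, slices_per_refresh=1)
        off = row_latency_ms(frac, 100, slices_per_refresh=4)
        print(f"row {frac:.1f}: VSYNC ON {on:.2f} ms, VSYNC OFF (4 slices) {off:.2f} ms")
```

In this model the VSYNC ON age grows from top to bottom across a full refresh, while with VSYNC OFF it never exceeds one slice duration, which is the sense in which per-line latency is more uniform. It does not capture the other standpoint raised above, where the completed VSYNC ON image comes from a single point in time.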

Chief Blur Buster wrote: For VRR, best stutter elimination occurs when refreshtime (the time the photons are hitting eyeballs) is exactly in sync with the time taken to render that particular frame. But VRR does current refreshtime equal to the timing of the end of previous frametime (one-off)...

I am having trouble understanding this, particularly the last sentence. Do you happen to have a figure to illustrate this more clearly? Which time depends on what time from 1 frame earlier?

Enjoy.

Chief Blur Buster wrote: There are many tactics of waiting. Let's also note the GeDoSaTo technique of delaying inputread+render+flip too, which is not typically done by RTSS. It's possible for a capper to present quickly, immediately followed by a predictive blocking wait (based on historic frametimes) before returning to the caller's Present(), to put the next inputread-render-flip closer to the VSYNC intervals, like this:

Ah, jorimt and RealNC were talking about that, too. Seems like the optimal solution. So, some games implement this?

Chief Blur Buster wrote: There is a new framerate capper here too:

viewtopic.php?f=10&t=4724

Nice.

RealNC wrote: The highest value you can use in NVidia Profile Inspector is 8. Maybe the driver allows even higher values, but there's no point in having an option for that. Even 8 is pretty much useless. Games become unplayable.

Not sure what the default ("app controlled") is when a game doesn't specify a pre-render queue size. I've heard it's 3, and that's decided by DirectX. But not sure. It could be 2. Or it might depend on the number of cores in the CPU. The whole pre-render buffer mechanism and the switch to asynchronous frame buffer flipping was done because mainstream CPUs started to have more than one core in the 2000s.

pegnose wrote: Makes sense. Wow, 8!

Chief Blur Buster wrote: It can be useful in Quad-SLI situations; then it's only 2 per card.

Look at Quad Titan setup for an eye opening setup I wrote about five years ago. Whoo!

Holy shit!

Chief Blur Buster wrote: Correct me if I am wrong, but it is my understanding that prerendered frames can be useful for assisting in SLI framepacing to fix microstutters. (Hopefully it's not multiplied by cards, like 8 times 4 equals 32 -- that would be insanely ridiculous)

Interesting, never tried that. Unfortunately, I have sold my 1080 SLI setup in favor of a 2080 Ti. Basically no VR game supported VR SLI.

pegnose wrote: From the previous discussion I understood that VRR with V-Sync enabled in NVCP has the same issues as mere V-Sync, correct? Only if you disable V-Sync with VRR, and accept some minor tearing here and there, you can get rid of all the down-sides?

jorimt wrote: I *think* you're talking about the issue (correct me if I assumed wrong here) where G-SYNC + V-SYNC, when within the refresh rate, can exhibit more hitching during frametime spikes or transitions from 0 to x frames (and vice versa) when compared to standalone V-SYNC, no sync, or G-SYNC + V-SYNC "Off"?

Because G-SYNC (even paired with the V-SYNC option) has none of the issues that standalone V-SYNC has within the refresh rate; it has a different issue (and cause) entirely.

If that's what you're referring to...

Honestly, I can't say anymore without rereading many of my past comments. I might have only meant the frame stacking issue. But this was much more interesting! Even if you think you know most of the intricate details of a technique by now, there is still so much in between.

pegnose wrote: Ah, jorimt and RealNC were talking about that, too. Seems like the optimal solution. So, some games implement this?

Very few. It takes a lot of intentional programmer work to do that. I've seen some emulators with a configurable inputread-delay to reduce input lag.
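For anyone wondering what the predictive wait discussed in this thread might look like in its simplest form, here is a rough sketch. It is not how GeDoSaTo, RTSS, or any emulator actually implements it. The function name `predictive_wait_ms`, the use of the worst recent frametime as the prediction, and the 1 ms safety margin are all assumptions made for illustration.

```python
def predictive_wait_ms(refresh_interval_ms, recent_frametimes_ms, safety_margin_ms=1.0):
    """How long to block after presenting before starting the next
    inputread-render-flip, so that the work finishes shortly before the
    next VSYNC instead of right after the previous one.

    Assumption: the next frame takes no longer than the worst recent frame.
    """
    if not recent_frametimes_ms:
        # No history yet, so do not delay at all.
        return 0.0
    predicted_ms = max(recent_frametimes_ms)
    wait_ms = refresh_interval_ms - predicted_ms - safety_margin_ms
    # Never return a negative wait; slow frames simply get no delay.
    return max(0.0, wait_ms)


if __name__ == "__main__":
    refresh_ms = 1000.0 / 60.0        # 60 Hz display
    history_ms = [4.0, 4.5, 5.0]      # hypothetical recent render times
    print(round(predictive_wait_ms(refresh_ms, history_ms), 2), "ms of delay before input read")
```

The trade-off is the usual one: a smaller safety margin means fresher input, but a frame that takes longer than predicted misses the VSYNC and shows up a full refresh late.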

pegnose wrote: Interesting, never tried that. Unfortunately, I have sold my 1080 SLI setup in favor of a 2080 Ti. Basically no VR game supported VR SLI.

I've got an Oculus Rift, so I guess I should be replacing my GTX 1080 Ti Extreme with an RTX 2080 Ti eventually.

jorimt wrote: If that's what you're referring to, it's because 1) G-SYNC + V-SYNC appears to have what could be called a brief "re-initialization" or "re-connection" period (between the module and the GPU) when there is an extremely abrupt transition in the framerate (FYI, there were official reports of a 1ms polling rate on the original modules, and it was never confirmed whether that polling rate either diminished or was entirely eliminated in further module iterations), and 2) since the scanout speed itself on the display is static and unchanging, unavoidable timing misalignments between framerate and scanout progress can occur during these abrupt transitions where the module attempts to adhere to the scanout (to completely avoid tearing), which will create frame delivery delay where the other methods would not, since the module may have to hold the next frame and skip part of (or sometimes even all of) a scanout cycle before delivery in extreme instances, and thus repeat display of/refresh with the previous frame once or more in the meantime...

From what I am understanding in newer developments:

pwn wrote: But based on the conversation, I understand the best configuration for playing CsGo is Locked FPS 237 (I have a 240Hz monitor supporting Gsink) and not free FPS 300+?

Yes, but only if using G-SYNC (what you called Gsink).
