Alright @Martinengo, here are my previously unposted frametime graphs that went along with the two LatencyMon images I posted a while back in this thread.

This should give you a much better idea of what "normal" is on a healthy, well-functioning higher-end system (hint: the answer isn't that simple)...

Specs:

OS: Windows 10 64-bit (v1809)

Nvidia Driver: 419.67

Display: Acer Predator XB271HU (27" 144Hz G-Sync @1440p)

Motherboard: ASUS ROG Maximus X Hero

Power Supply: EVGA SuperNOVA 750W G2

CPU: i7-8700k @4.3GHz (Hyper-Threaded: 6 cores/12 threads)

Heatsink: H100i v2 w/2x Noctua NF-F12 Fans

GPU: EVGA GTX 1080 Ti FTW3 GAMING iCX 11GB (1936MHz Boost Core Clock)

Sound: Creative Sound Blaster Z

RAM: 16GB G.SKILL TridentZ DDR4 @3200MHz (Dual Channel: 14-14-14-34, 2T)

SSD (OS): 500GB Samsung 960 EVO NVMe M.2

HDD (Games): 5TB Western Digital Black 7200 RPM w/128MB Cache

All of the below applies to Fortnite running at my display's native res with G-SYNC + NVCP V-SYNC "On."

My "1st session" was captured with the Windows power profile set to "Highest Performance," NVCP "Power management mode" set to "Prefer maximum performance," and game settings maxed with in-game limiter set to 120 FPS...

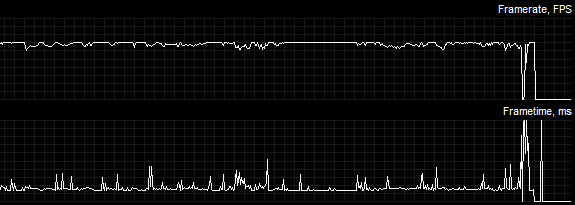

Afterburner framerate/frametime graph #1:

LatencyMon #1:


As you can see, there are frametime variances from the fluctuating framerate (since my system couldn't maintain 120 FPS 100% of the time) AND frametime spikes (43ms was the highest there, I believe). And while in-game FPS limiters typically have lower input lag than the RTSS FPS limiter, they set an average framerate target instead of a frametime target, so they usually have much more frametime variance.
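To illustrate the average-vs-frametime-target point, here's a minimal Python sketch with made-up frametime numbers (not taken from my captures, and not how any particular limiter is actually implemented). It just shows that two runs can report the same average FPS while one paces its frames far less evenly:

```python
from statistics import mean, pstdev

def average_fps(frametimes_ms):
    """Average framerate implied by a list of per-frame times in milliseconds."""
    return 1000.0 / mean(frametimes_ms)

# Hypothetical samples: both average 8 ms per frame (125 FPS).
steady = [8.0, 8.0, 8.0, 8.0]    # every frame delivered on the same interval
uneven = [4.0, 12.0, 6.0, 10.0]  # same average, very different pacing

for name, run in (("steady", steady), ("uneven", uneven)):
    print(f"{name}: {average_fps(run):.1f} FPS avg, "
          f"frametime std dev {pstdev(run):.2f} ms")
```

The FPS counter reads the same for both runs; only the frametime graph shows the difference.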

We can also see via LatencyMon above that lower DPC levels don't necessarily translate to fewer frametime variances/spikes.

---

My "2nd session" had the same conditions as the "1st session," but the in-game limiter was disabled, and I used a 141 RTSS FPS limit instead...

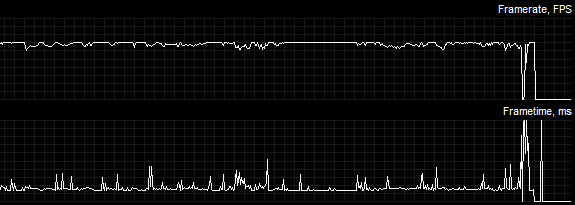

Afterburner framerate/frametime graph #2:

LatencyMon #2:


Well, would you look at that? Even though parts of my DPC readings improved over the "1st session," and I was using RTSS to limit the FPS instead of the in-game limiter, I could reach 141 FPS even less of the time than 120 FPS, so I had even more (or at the very least, as many) frametime variances, and a spike that actually exceeded 50ms (also note in the above framerate/frametime graph that where framerate drops, frametime rises in direct relation). That said, when my system could hold 141 constantly, RTSS did a better job than the in-game limiter at keeping the frametimes consistent (see the small flat areas near the very end of the frametime graph, before I exited to the main menu).
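To put numbers on that framerate/frametime relationship: frametime in milliseconds is just 1000 divided by the framerate. A rough Python sketch (the function name is mine, purely for illustration):

```python
def frametime_ms(fps: float) -> float:
    """Return the time per frame in milliseconds at a given framerate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps

# The two limits used in this post, plus two lower framerates for comparison.
for fps in (141, 120, 60, 20):
    print(f"{fps:>3} FPS -> {frametime_ms(fps):.2f} ms per frame")
```

So a 50ms spike is the equivalent of the game momentarily running at 20 FPS, against a roughly 7.09ms per-frame budget at the 141 FPS limit.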

---

Okay, so for my "3rd session," what if I rule out frametime variances from framerate drops by reducing all the in-game settings to low? Also, for the fun of it, I'm now using my default Windows "Balanced" power profile (which clocks down my CPU when idle), and have NVCP "Power management mode" set to "Optimal power," again with a 141 FPS limit via RTSS to reduce frametime variances even further, which should really make the frametime spikes stand out:

Afterburner framerate/frametime graph #3:

LatencyMon #3:


Even more interesting. Even though my CPU and GPU are no longer set to run at 100% clocks all the time (which actually doesn't matter, as they virtually always kick in appropriately when running a demanding app), and with the in-game settings set to low, I barely saw any frametime variances or spikes compared to the other scenarios, and the few spikes I did have were under 20ms. Not only that, but some of the DPC levels were actually lower than in the previous sessions (the "Current" reading was only higher because the CPU now down-clocks at idle).

If I hadn't informed you outright, you'd probably assume these frametime graphs were from entirely different systems. However, none of this is strange or unexpected behavior...

Frametime spikes are caused by the system taking longer to render the more demanding frames; the less demanding the frames are to render, the fewer frametime spikes there are, and the quicker they pass. It's that simple.
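If you log your own frametimes, one rough way to pick the spikes out is to flag any frame that took more than some multiple of the median frametime. A minimal Python sketch; the sample values and the 2x threshold are assumptions for illustration, not data from my graphs:

```python
from statistics import median

def find_spikes(frametimes_ms, factor=2.0):
    """Return (index, frametime) pairs for frames slower than factor x the median."""
    baseline = median(frametimes_ms)
    return [(i, t) for i, t in enumerate(frametimes_ms) if t > factor * baseline]

# Hypothetical log: mostly ~7.1 ms frames (about 141 FPS) with two slow frames.
sample = [7.1, 7.1, 7.2, 19.5, 7.1, 7.0, 7.1, 45.0, 7.1]

for index, t in find_spikes(sample):
    print(f"frame {index}: {t:.1f} ms")
```

Using the median as the baseline keeps a handful of slow frames from dragging the threshold up the way an average would.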

This also explains why pro streamers (and even non-streaming pro players in general) have fewer visible frametime spikes: they usually play these games at the lowest settings paired with seemingly overkill system specs, both for the highest framerate possible and to keep things smooth while running and streaming the game simultaneously.

And yes, frametime variances, spikes, GPU usage, CPU usage, DPC latency levels, you name it, will fluctuate/change from session to session, even in the same game on the same system. As I said earlier, your system is simulating the game in real-time, every time; it's not the same thing as playing back a static video (which usually contains entirely predetermined frame information) repeatedly, for instance.

Barring any non-game-specific issues your system has (which we have yet to fully determine either way), you simply want to run the game at the lowest graphical settings possible to have the fewest possible frametime spikes, and to use the RTSS limiter (even though it has up to 1 frame more input lag than in-game limiters) to have the least frametime variance possible (with the average framerate hitting that limit as much as possible, obviously).

I probably could have gotten even fewer spikes (maybe even near zero) and even lower DPC numbers by OCing my CPU to 5GHz or more (it currently auto Turbo Boosts to 4.3GHz in-game), disabling all power saving, SpeedStep, and Turbo Boost settings at the BIOS level, turning Hyper-Threading off, disabling both G-SYNC and V-SYNC, and running Fortnite from my SSD at the game's lowest available graphical settings and its lowest internal resolution (like 640x480 levels). Not worth it to me, personally, but a viable (and frequently used) option for those playing at pro level.

---

Bonus post from another thread containing a frametime/framerate graph of Fortnite running on my system (note the graph looks wildly different from the previous three; separate sessions in the same game on the same system can easily differ like this due to map routes taken, number of visible enemy models, how many firefights/explosions take place, netcode, ping, etc.):

viewtopic.php?f=5&t=5045&start=10#p39136

jorimt wrote:

GFresha wrote: Don't know if its possible if you have fortnite and msi afterburner installed if you can run a frametime graph in game for a few minutes and see if you get the same results.

I played a solo match, eliminated two, placed 4th:

Highest spike I saw was 25.9ms (others were in direct relation to framerate fluctuation; didn't have a constant 141 FPS lock). The spikes near the very end of the graph were from me exiting the game.

In-game settings were maxed, in-game FPS limiter was set to unlimited, I used RTSS for a 141 FPS cap (I usually use the in-game 120 cap, but assumed you were using RTSS, and I wanted to replicate), fullscreen optimizations were left enabled for the game exe, Game bar/DVR disabled, Game Mode enabled, and the game is installed on my 5TB HDD (Western Digital Black 7200 RPM w/128MB Cache).

I was running G-SYNC + NVCP V-SYNC "On" (full PC specs in sig), my NVCP settings were "Maximum pre-rendered frames" at "1," and "Power Management mode" at "Prefer maximum performance" for that game profile.

The only background program I had running was Afterburner/RTSS.

---

Bonus image of all my Afterburner readouts during my "3rd session" from this post:

Oh, and fun fact: while the RTSS FPS limiter has steadier frametimes than almost any other limiter, it doesn't actually have perfect frametimes. The reason the framerate/frametime readouts appear perfectly flat when using the RTSS FPS limiter (with the FPS sustained above the set limit) is that RTSS can only read framerate and frametime below its set limit, not above. So while those upper frametime variances are still there (the RTSS limiter can actually fluctuate up to about a frame above its set limit), RTSS just can't reflect them in the graph when it's the active limiter. It can reflect them when an in-game limiter is set instead, which makes the in-game limiter look even more unstable than it is when directly compared to RTSS.