TLDR: I'm a VSync user. I use VSync because I hate screen tearing and I'm very sensitive to it. My understanding of VSync is that, given a double buffer scenario, VSync at 60 Hz should not add any more than ~33ms of latency.

Here's my thought process: You have a 60 Hz monitor that takes 1/60th of a second to display a frame before it switches to the next frame. With double buffer VSync (which is the standard) you have a back buffer that holds a frame from the GPU and sends that frame to the monitor when the monitor is done displaying the previous frame. Knowing that, shouldn't the maximum latency being added by VSync be 16.67ms plus 16.67ms to give ~33ms? I see some people claiming that VSync can add more than 50ms, but I don't see how this is possible in this scenario.

Now, another question: When using Windows 10 for normal desktop tasks, VSync is always forced by Windows, yet no one complains about latency or notices a delay in clicking response and mouse movement. Isn't this implementation of VSync the same (or probably worse due to triple buffering) as what we get when we enable VSync in a game like PUBG: Battlegrounds? That game takes raw mouse and keyboard input, so it should function the same as Windows.

I am aware that VSync adds latency, but isn't the added latency (while in-game) the same as what you get when you're using Windows normally? In that case, I should have no issues using VSync in-game, as I notice no latency while using Windows. My monitor is 60 Hz and I can't stand screen tearing. I also do not notice any added latency when using VSync. I hope someone can confirm my thoughts on this topic.

Question about VSync in Gaming and Windows 10

- Mark Fable

- Posts: 12

- Joined: 29 Jul 2021, 16:01

Re: Question about VSync in Gaming and Windows 10

I noticed no one is contributing here. Is the question too technical? I'd especially appreciate it if the Chief Blur Buster could chime in. I strongly believe that my arguments are correct, but I'd like to confirm.

- Chief Blur Buster

- Site Admin

- Posts: 12139

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: Question about VSync in Gaming and Windows 10

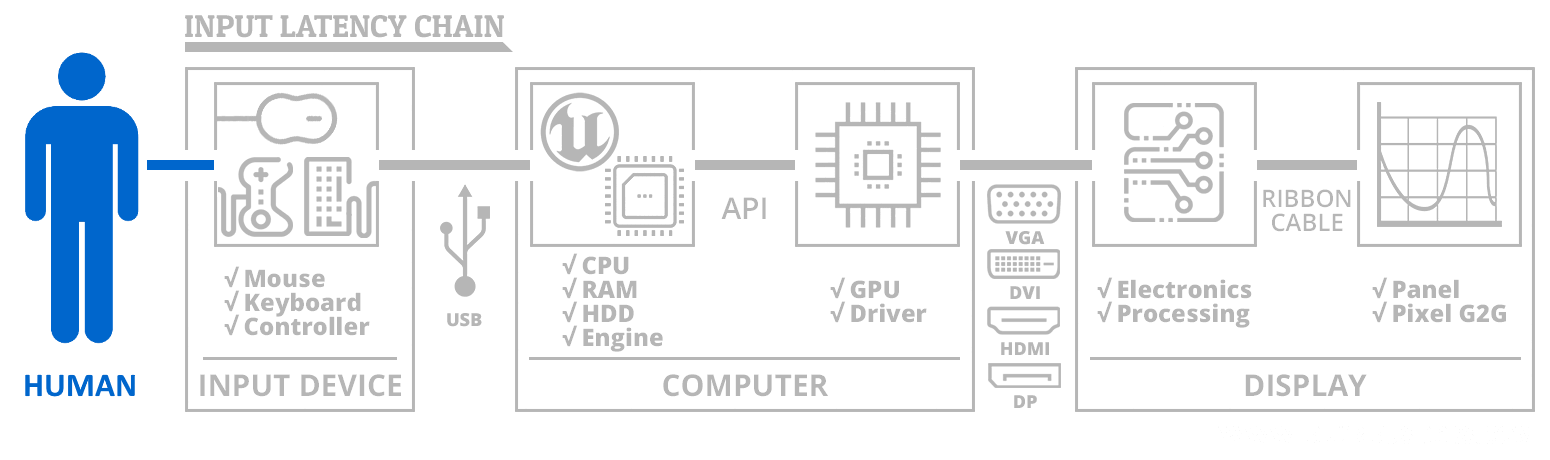

The input lag chain is extremely huge, with different latencies in different parts of the VSYNC chain:Mark Fable wrote: ↑06 Mar 2022, 17:11TLDR: I'm a VSync user. I use VSync because I hate screen tearing and I'm very sensitive to it. My understanding of VSync is that, given a double buffer scenario, VSync at 60 Hz should not add any more than ~33ms of latency.

Here's my thought process: You have a 60 Hz monitor that takes 1/60th of a second to display a frame before it switches to the next frame. With double buffer VSync (which is the standard) you have a back buffer that holds a frame from the GPU and sends that frame to the monitor when the monitor is done displaying the previous frame. Knowing that, shouldn't the maximum latency being added by VSync be 16.67ms plus 16.67ms to give ~33ms? I see some people claiming that VSync can add more than 50ms, but I don't see how this is possible in this scenario.

That's why in some games, VSYNC ON 60Hz can be over 100ms latency, when all the factors pile up simultaneously. You might not feel that directly, however.

What this means is that if you have terrible lag in each of the stages, you can have more than 100ms of latency in a double buffer scenario, especially if there are multiple blocking stages.
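As a rough illustration of how the stages can pile up, here is a minimal sketch that sums per-stage latencies at 60 Hz. The stage names and every millisecond value are hypothetical placeholders chosen for illustration, not measurements of any real system; only the 60 Hz frame time is derived.

```python
# Hypothetical per-stage latencies for a 60 Hz VSYNC ON chain.
# Every stage name and value is an illustrative assumption, not a measurement.
REFRESH_HZ = 60
FRAME_MS = 1000 / REFRESH_HZ  # about 16.67 ms per refresh cycle

stages_ms = {
    "mouse polling (125 Hz worst case)": 8.0,
    "game engine input-to-render": FRAME_MS,
    "game frame queue (1 frame)": FRAME_MS,
    "driver frame queue (2 frames)": 2 * FRAME_MS,
    "scanout to bottom of screen": FRAME_MS,
    "display processing (1 buffered refresh)": FRAME_MS,
    "pixel response": 5.0,
}


def total_latency_ms(stages):
    """Sum the latency of every stage in the chain."""
    return sum(stages.values())


if __name__ == "__main__":
    for name, ms in stages_ms.items():
        print(f"{name:45s} {ms:6.2f} ms")
    print(f"{'total':45s} {total_latency_ms(stages_ms):6.2f} ms")
```

With these made-up numbers, six frames of buffering and scanout plus 13 ms of fixed delay already exceed 100 ms, even though no single stage looks unreasonable on its own.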

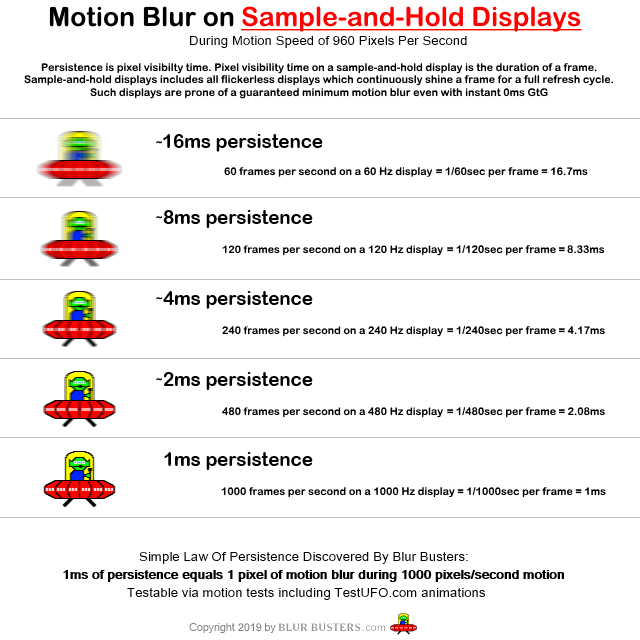

A higher frame rate on the GPU (e.g. 240fps) can reduce GPU lag to about 4ms per frame, especially if it's run via a "replace-latest-undisplayed-buffered-frame" triple buffer algorithm, or if you are using a VRR display that refreshes on demand. A VRR display refreshes immediately on frame presentation: the display is actually waiting for the software to present a frame, so a game running at 52fps means the display is refreshing at a perfect 52Hz. VRR is therefore a method of getting a perfect VSYNC ON look and feel (www.testufo.com/vrr) at frame rates that do not divide evenly into the refresh rate. It also erases framerate-transition stutters: 60fps-60fps-59fps-60fps-60fps is stutterless on a VRR display, since framedrop stutters are erased by VRR.
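The arithmetic behind those figures can be sketched as follows. The function names and the 48-144 Hz VRR range in the usage lines are assumptions made for illustration; real VRR behavior outside the supported range depends on the display and driver, so the sketch refuses to guess there.

```python
def frame_time_ms(fps):
    """Time per frame, in milliseconds, at a given frame rate."""
    return 1000 / fps


def vrr_refresh_hz(fps, vrr_min_hz, vrr_max_hz):
    """Refresh rate of a VRR display while fps stays inside its range.

    Inside the range, the display refreshes once per presented frame,
    so the refresh rate simply equals the frame rate.
    """
    if not vrr_min_hz <= fps <= vrr_max_hz:
        raise ValueError("fps is outside the VRR range")
    return fps


if __name__ == "__main__":
    for fps in (60, 240):
        print(f"{fps} fps -> {frame_time_ms(fps):.2f} ms per frame")
    # Hypothetical 48-144 Hz VRR range: 52 fps is displayed at 52 Hz.
    print(vrr_refresh_hz(52, 48, 144), "Hz")
```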

Reducing latency without increasing the frame rate can require several concurrent moves:

- 1000Hz+ mice

- NVIDIA's NULL (Ultra Low Latency Mode) setting

- Games that don't have their framebuffer queues daisy-chained to the driver's double buffering queue

- Get higher Hz to reduce lag of 60fps, by utilizing VRR or QFT tricks (e.g. "60Hz" refresh cycles refreshed in 1/240sec)

- Choosing a display that has less tapedelay latency. Some displays have to buffer refresh cycles on the opposite end of the cable, while others can stream pixel rows straight to the screen (a video cable is a serialization of 2D data into 1D cable -- see high speed videos of refresh cycles)

Etc.

> Mark Fable wrote: ↑06 Mar 2022, 17:11
>
> Now, another question: When using Windows 10 for normal desktop tasks, VSync is always forced by Windows, yet no one complains about latency or notices a delay in clicking response and mouse movement. Isn't this implementation of VSync the same (or probably worse due to triple buffering) as what we get when we enable VSync in a game like PUBG: Battlegrounds? That game takes raw mouse and keyboard input, so it should function the same as Windows.

The Windows DWM compositor is a double-buffered implementation, so it typically has 2 frames of latency at the DWM level. However, individual software can sometimes bypass this (forcing things to display sooner) or add an additional frame queue (adding MORE frames of latency). So a 2-frame queue in software plus a 2-frame queue in the drivers = 4 frames of latency.

These days, things are so abstracted/virtualized, that you can have multiple double buffers daisychaining off each other, thinking the next step is the display but is actually a wrapper. It gets even uglier if you're running things inside a virtual machine, then you may have three layers (or four!) of double buffers daisychaining into each other. And the display side might even have its own double buffer too, if it needs to use full framebuffer processing algorithms (all plasmas and DLP required it).
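To make the daisy-chaining arithmetic concrete, here is a small sketch. It assumes the worst case, where every queue in the chain is full and each queued frame waits one whole refresh cycle; the function name and the layer depths are hypothetical.

```python
def queued_latency_ms(queue_depths, refresh_hz=60):
    """Worst-case latency added by frame queues chained in series.

    queue_depths: number of frames held by each layer (application,
    driver, compositor, virtual machine, ...). Assumes every layer is
    full and each queued frame waits one full refresh cycle.
    """
    frame_ms = 1000 / refresh_hz
    return sum(queue_depths) * frame_ms


if __name__ == "__main__":
    # Software double buffer chained into a driver double buffer.
    print(f"{queued_latency_ms([2, 2]):.1f} ms")
    # A third double-buffered layer, e.g. a wrapper or virtual machine.
    print(f"{queued_latency_ms([2, 2, 2]):.1f} ms")
```

At 60 Hz, two chained double buffers come to about 66.7 ms under these assumptions, and a third layer brings it to 100 ms.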

Tons of optimization is necessary to merge double buffering responsibilities to the bare minimum, and VRR is one method.

I've seen this happen, especially with laggy web browsers adding 3-4 frames in the past. But that was fixed with some optimizations merging driver/software responsibilities better.

This, of course, excludes the tapedelay-style latency of many 60Hz screens that have to buffer refresh cycles before displaying them. Most gaming monitors are realtime-streaming-to-scanout (like a CRT), with just line-buffered processing.

> Mark Fable wrote: ↑06 Mar 2022, 17:11
>
> I am aware that VSync adds latency, but isn't the added latency (while in-game) the same as what you get when you're using Windows normally? In that case, I should have no issues using VSync in-game, as I notice no latency while using Windows. My monitor is 60 Hz and I can't stand screen tearing. I also do not notice any added latency when using VSync. I hope someone can confirm my thoughts on this topic.

You don't have to feel the millisecond to win by the millisecond.

Just like two Olympic athletes can cross a finish line 20ms apart and not figure out who won until they see the scoreboard.

Likewise, in a game, you and your enemy see each other at the same time in a hallway. You both start shooting after exactly the same human reaction time. The system that delivers button-to-photons 10ms sooner will usually win the day. Even the 60fps-on-240Hz user will frag you first versus a 60fps-on-60Hz user.

See Amazing Human Visible Feats Of The Millisecond, and if you studied at university, you will probably fall in love with the Blur Busters Research Portal (Area51), where you can learn more about how displays refresh themselves.

Addendum

I presume you are asking this question because you only use 60Hz. Are you aware that increased refresh rates also reduce tearing visibility, too, and also that wider VRR ranges reduce middle-framerate stutter even more (e.g. 30Hz-240Hz VRR range)?

If you hate tearing, then you will absolutely adore VRR, because that's the world's lowest lag "looks-like-VSYNC-ON" technology.

Plus...

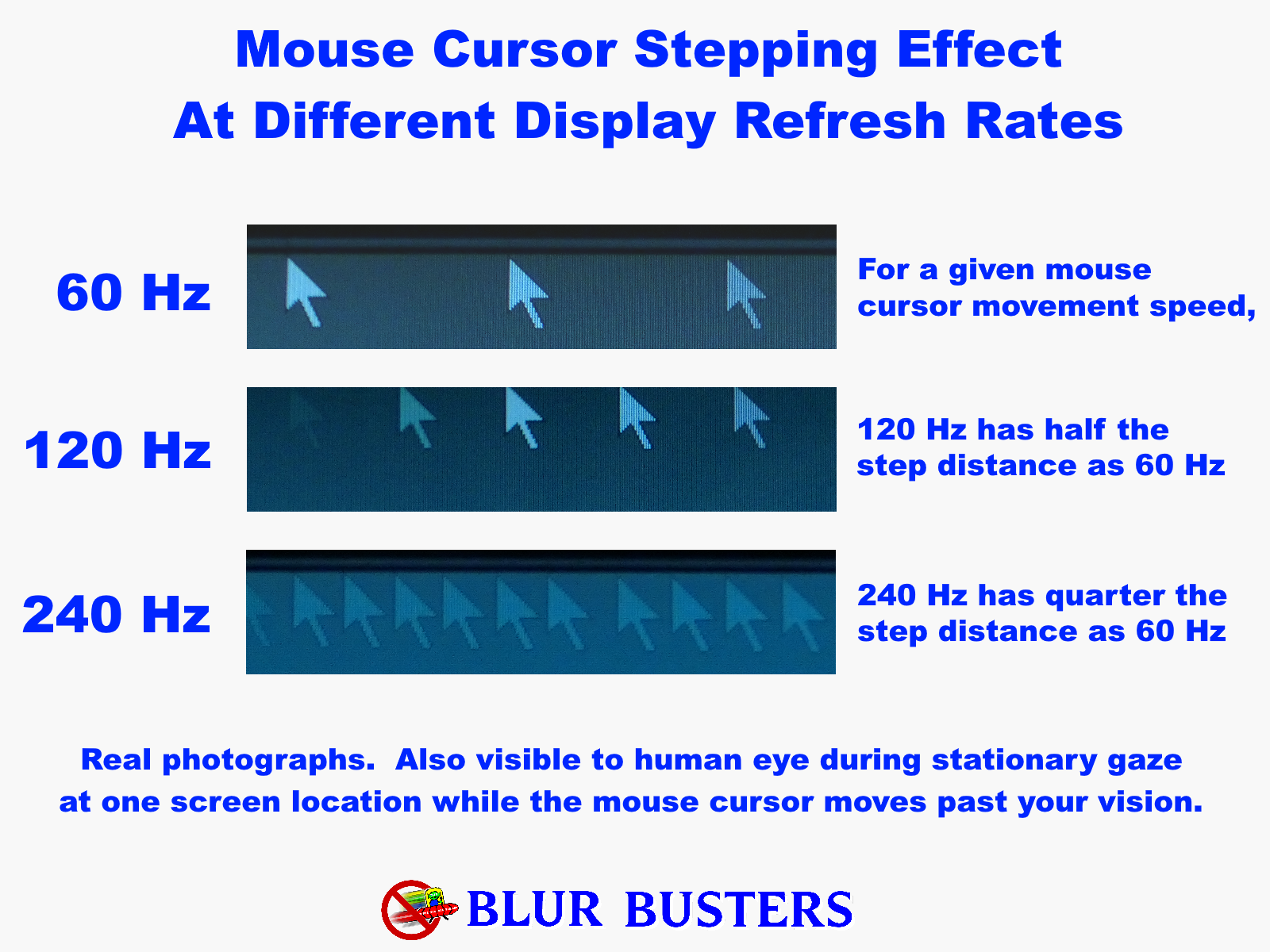

As observed, there's a diminishing curve of returns, which is why I recommend 3x or 4x refresh rate upgrades instead of just 1.5x or 2x, if you don't see much difference in 2x refresh rate upgrades. So there are other reasons to use higher Hz, not just eliminating tearing and stutter.

One common situation is that if you've never tried 240Hz or 360Hz, you don't usually realize how laggy 60Hz is. It's easier to understand the lag of 60Hz when you've tried a system with 1/4th the latency. It's also why even grandmas can notice 60Hz-vs-240Hz; it's more visible than VHS-vs-DVD. Even web browser scrolling has 1/4th the motion blur at 240Hz (while having 1/4th the lag too, but you notice the reduced motion blur more).

Geometric upgrades on the refresh rate curve are necessary to punch through the diminishing curve of returns, which is why I recommend that non-gamers upgrade by multipliers rather than absolute increments.

60 -> 120 -> 240 -> 480 -> 1000 Hz (2x upgrade)

60 -> 144 -> 360 -> 1000 Hz (2.5x upgrade)

60 -> 240 -> 1000 Hz (4x upgrade)

People in esports can go whoopee over small Hz increments (e.g. 240Hz -> 280Hz -> 360Hz), but average laypeople see refresh rate upgrade benefits when the upgrade is geometric (the situation is similar to spatial resolutions like 480p -> 1080p -> 4K -> 8K). This is because of the stroboscopic stepping benefit (see The Stroboscopic Effect of Finite Frame Rate) as well as the motion blur reduction benefit (see Blur Busters Law: The Amazing Journey to Future 1000 Hz Displays, and Pixel Response FAQ: Two Pixel Response Benchmarks: GtG Versus MPRT). You may have seen these already, but in case you or another reader has not, they are worthwhile reading for people who want to understand the properly researched science. (I'm in over 20 peer-reviewed papers.)

The motion blur equivalence is similar to camera shutter, e.g. SLR camera 1/60sec versus 1/240sec. It's harder to see 2x differences in blur, than 4x differences in motion blur trail size, for example.
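The shutter analogy can be put into numbers with a short sketch. It assumes a sample-and-hold display running at framerate = Hz, so each frame stays visible for the whole refresh cycle, and it ignores pixel response (GtG). The 960 pixels/sec motion speed and the function name are assumptions picked for illustration.

```python
def blur_trail_px(speed_px_per_s, refresh_hz):
    """Approximate motion blur trail length on a sample-and-hold display.

    Assumes framerate = Hz and ignores pixel response time: the eye
    tracks the motion while each frame is held for 1/refresh_hz seconds.
    """
    persistence_s = 1 / refresh_hz
    return speed_px_per_s * persistence_s


if __name__ == "__main__":
    speed = 960  # hypothetical motion speed in pixels per second
    for hz in (60, 120, 240):
        print(f"{hz:3d} Hz -> {blur_trail_px(speed, hz):.0f} px of blur")
```

Under these assumptions, 60 Hz gives a 16 pixel trail, 120 Hz gives 8 pixels, and 240 Hz gives 4 pixels, which is why the 4x step is so much easier to see than the 2x step.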

Also, if your browser properly supports framerate=Hz animation, you can see what VRR looks like at www.testufo.com/vrr. (This simulated VRR uses frame blending/interpolation to emulate VRR on a non-VRR display.)

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on: BlueSky | Twitter | Facebook

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

- Mark Fable

- Posts: 12

- Joined: 29 Jul 2021, 16:01

Re: Question about VSync in Gaming and Windows 10

Thanks, Chief, however, you didn't exactly answer my question.

My question skips over the other contributing factors to input latency and only assumes that there is VSync and no Vsync. I do not own a VRR monitor, so GSync is out of the question. I only own a 60 Hz monitor. Also, I am VERY sensitive to screen tearing and I consider it unbearable.

Now, with VSync on versus off, shouldn't the maximum input delay that is caused by VSync be a difference of ~2 frames or ~33ms of delay? This is my main question. I currently play with VSync and I notice no difference between VSync on and off, I also play a fast-paced shooter. I scored an average of 180ms on Human Benchmark, so I am very responsive to delay, however, I don't notice it with VSync, and Vsync helps me perfectly eliminate screen tearing.

I just don't want to feel like I am stupid for using VSync. It really does help me, and I don't think it adds more than ~33ms of delay when compared to VSync off. Provided that VSync in-game is as responsive as VSync in Windows 10 (which you confirmed is true), there shouldn't be an issue with me using VSync, right?

Note that I don't seek the lowest possible latency, but I just want it low enough that I can't notice it. Also, I win most of my gunfights in-game against people who don't use VSync and have similar network latency, so I don't think that VSync affects my gameplay as you described.

- Chief Blur Buster

- Site Admin

- Posts: 12139

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada


Re: Question about VSync in Gaming and Windows 10

> Mark Fable wrote: ↑15 Mar 2022, 22:30
>
> Now, with VSync on versus off, shouldn't the maximum input delay that is caused by VSync be a difference of ~2 frames or ~33ms of delay? This is my main question. I currently play with VSync and I notice no difference between VSync on and off, I also play a fast-paced shooter. I scored an average of 180ms on Human Benchmark, so I am very responsive to delay, however, I don't notice it with VSync, and Vsync helps me perfectly eliminate screen tearing.

Differentially, as a simple answer?

Commonly about 1-2 frames, depending on driver and game settings.

NVIDIA drivers have a "Max Prerendered Frames" setting, which can turn a common linear double buffer into a linear triple buffer, a linear quadruple buffer, or a linear quintuple buffer, with the attendant multiple frames of lag. However, typically it is 1-2 frames.

More complex answer below:

> Mark Fable wrote: ↑15 Mar 2022, 22:30
>
> I just don't want to feel like I am stupid for using VSync. It really does help me, and I don't think it adds more than ~33ms of delay when compared to VSync off.

It also depends on the pixel. VSYNC ON is a global sync technology, while refreshing is a sequential technology. Each pixel on a display has a different latency, because the last pixel on a display finishes refreshing 1/60sec after the first pixel.

So the best-case lag of VSYNC ON may be only 1 frame (staring at the top edge of the screen) and the worst case may be 2 frames (staring at the bottom edge of the screen).

If you're staring at crosshairs in the exact middle, you may be getting 1.5 frames of extra delay relative to VSYNC OFF, for most common double-buffered VSYNC implementations.

If your game is tightly optimized, has an excellent VSYNC ON implementation, and you are using full screen exclusive mode (bypassing the Windows 10 DWM), you may sometimes get as low as 1 frame of latency (0 frames for the top edge, 0.5 frame for the middle, 1 frame for the bottom edge), but that mainly occurs with emulators and games with good built-in input delay algorithms. You may be able to achieve this with RTSS Scanline Sync (a method of VSYNC ON emulation that removes ~1 frame of latency).

Also because not all pixels refresh at the same time, there are time-differentials between top and bottom edge of screen, depending on how global-vs-nonglobal the GPU sync technology is, versus how global-vs-nonglobal the display photon-visibility is. Screen latency of top/center/bottom:

For LCDs, the rule of thumb:

- VSYNC ON + nonstrobed means top < center < bottom

- VSYNC ON + strobed means top == center == bottom

- VSYNC OFF + nonstrobed means top == center == bottom

- VSYNC OFF + strobed means top > center > bottom

*Exceptions apply. Some LCDs do scan conversion, e.g. to convert top-to-bottom cable scan, into a bottom-to-top panel scan. Scan conversion mandatorily requires full frame buffering on panel side before refresh begins. Some TVs do this.

So that gives the rule of thumb for a bog-standard unthrottled double buffer, relative to VSYNC OFF:

VSYNC ON = 1 frame latency for top, 1.5 frame latency for middle, and 2 frame latency for bottom.
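The rule of thumb above can be sketched as a function of where on the screen you are looking. This is a simplification: it assumes a top-to-bottom scanout that takes one full refresh cycle, and it treats the buffering wait as a single `queue_frames` parameter (1.0 for the standard double buffer, 0.0 for the tightly optimized case described earlier). The names are hypothetical.

```python
def vsync_on_extra_latency_ms(y_fraction, refresh_hz=60, queue_frames=1.0):
    """Extra latency versus VSYNC OFF at a given vertical screen position.

    y_fraction: 0.0 = top edge, 0.5 = middle, 1.0 = bottom edge.
    queue_frames: frames spent waiting in the buffer before scanout
    begins (assumed 1.0 for a standard unthrottled double buffer).
    """
    if not 0.0 <= y_fraction <= 1.0:
        raise ValueError("y_fraction must be between 0.0 and 1.0")
    frame_ms = 1000 / refresh_hz
    return (queue_frames + y_fraction) * frame_ms


if __name__ == "__main__":
    for label, y in (("top", 0.0), ("middle", 0.5), ("bottom", 1.0)):
        ms = vsync_on_extra_latency_ms(y)
        print(f"{label:6s} {ms:5.1f} ms")
```

At 60 Hz this gives roughly 16.7 ms for the top edge, 25 ms for the middle, and 33.3 ms for the bottom edge, so the ~33ms figure is the bottom-edge case of the standard double buffer.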

But your game may not be using a standard double buffer algorithm; it might be improving upon it -- some of them do. Some games override the driver's buffer handling and use waitable swapchains, which means "invent your own buffering algorithm in your software application" with a totally different latency!

In all cases, VSYNC ON with default EDID timings means bottom edge is always guaranteed to never have less than 1 frame of latency, relative to the game's frame presentation. The pixels arriving on the cable will arrive sooner for top edge, and the gaming display can display the pixels at the top edge almost immediately -- meaning some pixels can have less than 1 frame latency during VSYNC ON, if it's running a very efficient VSYNC ON implementation (you can get a similar less-than-1-frame VSYNC ON result via RTSS Scanline Sync, or capping 0.01 below Hz).

This is more important if you play competitively, than with solo games. But as you say you have won gunfights...

> Mark Fable wrote: ↑15 Mar 2022, 22:30
>
> Provided that VSync in-game is as responsive as VSync in Windows 10 (which you confirmed is true) there shouldn't be an issue with me using VSync right?

Well-implemented games, with good NVIDIA driver settings, will usually have slightly less VSYNC latency than Windows 10.

Especially if you've capped your framerate at least 0.01 below the number you see at www.testufo.com/refreshrate

If your game has a 60 cap configured in its menu, or if your game self-caps at 60fps, then it may already be reducing your VSYNC latency by a frame, by rendering frames closer to their delivery to the monitor, because the GPU is throttled from rendering frames faster than the monitor can display them. Thus, there is less waiting for buffered frames. The wait can be pushed closer to 0 frames rather than 1 frame if the software renders just in time before a refresh cycle.
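One way to see why a cap only 0.01 below the refresh rate costs almost nothing in smoothness is to work out how often a refresh cycle has to repeat a frame. This sketch only does that arithmetic and does not model any driver or game; the function name is hypothetical, and the exact 60.00 Hz value is an assumption (use the number measured at www.testufo.com/refreshrate).

```python
def seconds_between_repeated_refreshes(refresh_hz, cap_fps):
    """How often one refresh cycle must show a repeated frame.

    With the frame rate capped slightly below the refresh rate, the
    display completes (refresh_hz - cap_fps) more refresh cycles than
    the game delivers frames each second, so one repeat occurs every
    1 / (refresh_hz - cap_fps) seconds.
    """
    if cap_fps >= refresh_hz:
        raise ValueError("cap must be below the refresh rate")
    return 1 / (refresh_hz - cap_fps)


if __name__ == "__main__":
    refresh = 60.0        # assumed measured refresh rate
    cap = refresh - 0.01  # cap 0.01 fps below the refresh rate
    gap = seconds_between_repeated_refreshes(refresh, cap)
    print(f"one repeated refresh about every {gap:.0f} seconds")
```

With a 59.99 fps cap on a 60 Hz display, that is about one repeated refresh every 100 seconds, while the GPU is kept from running ahead and filling the frame queue.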

It can be worse, it can be better.

1-2 frames is just a commonly parroted figure, but under the hood it is more complex!

> Mark Fable wrote: ↑15 Mar 2022, 22:30
>
> Note that I don't seek the lowest possible latency, but I just want it low enough that I can't notice it. Also, I win most of my gunfights in-game against people who don't use VSync and have similar network latency, so I don't think that VSync affects my gameplay as you described.

Skill and other equipment can greatly compensate for the difference in lag between VSYNC ON and VSYNC OFF, in a specific game. You're very good if you're winning at 60Hz VSYNC ON.

It helps if the game has optimized its own internal VSYNC ON implementation so well that the difference between VSYNC ON and VSYNC OFF becomes only 1 frame instead of 2. But games are a black box: we don't know how much lag a game has until a testing technique such as a 1000fps high speed camera is pointed at it during VSYNC ON versus VSYNC OFF. VSYNC ON-vs-OFF lag differentials apparently vary a lot between games.

- Mark Fable

- Posts: 12

- Joined: 29 Jul 2021, 16:01

Re: Question about VSync in Gaming and Windows 10

Thank you so much for the detailed answer!

Based on all you've mentioned, I will continue to use VSync until I find myself with a GSync monitor. I do not notice that VSync holds me back in any way. As you stated, double-buffered VSync adds no more than 2 frames of delay and can even add less (with tight optimizations). That being said, I don't think that a difference of ~33ms is noticeable to me. I tried to notice it but couldn't. Maybe this is also because Windows 10 runs with that delay at default so I've always been accustomed to a VSynced experience.

I play an aim trainer called Kovaak's and I am at the 90th percentile in a few training scenarios. These scenarios require that your setup is as responsive as possible (especially the mouse flicking scenarios) so I'm impressed that VSync is not holding me back in that as well. Maybe that's why I do well in competitive shooters with VSync haha.

I think that I fell for the trap of fussing about input delay and that's what led me to start this thread. Delay is often not noticeable and I strongly doubt that 33ms of delay would be the reason why I lost a gunfight, especially with the fact that there are so many other parameters in-game that can determine the outcome of a gunfight. As far as I'm concerned, there will always be some delay when it comes to electronics, and that won't ever change, not even with the possibility of a 1000 Hz (1 ms) monitor. The most important thing is that the delay is not noticeable.

Once again, thanks for your detailed response. It's been really reassuring to me.
