Enigma wrote: ↑11 Mar 2025, 09:21

I meant 240Hz VRR vs 240Hz No Sync At all (No VRR, No V-Sync, etc)

Do you mean VRR on + V-SYNC option on vs. VRR off + V-SYNC option off, at x framerate within the 240Hz refresh rate?

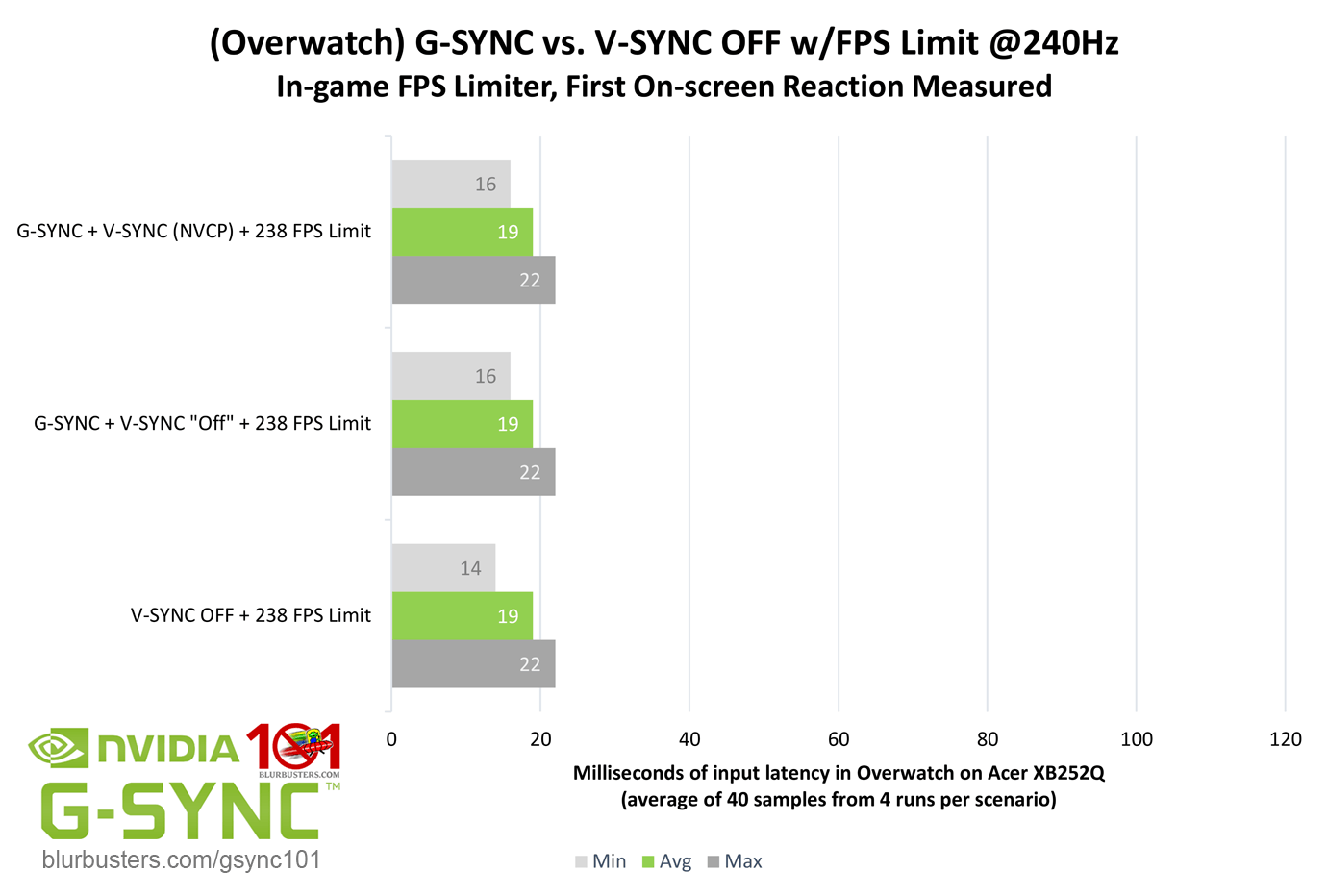

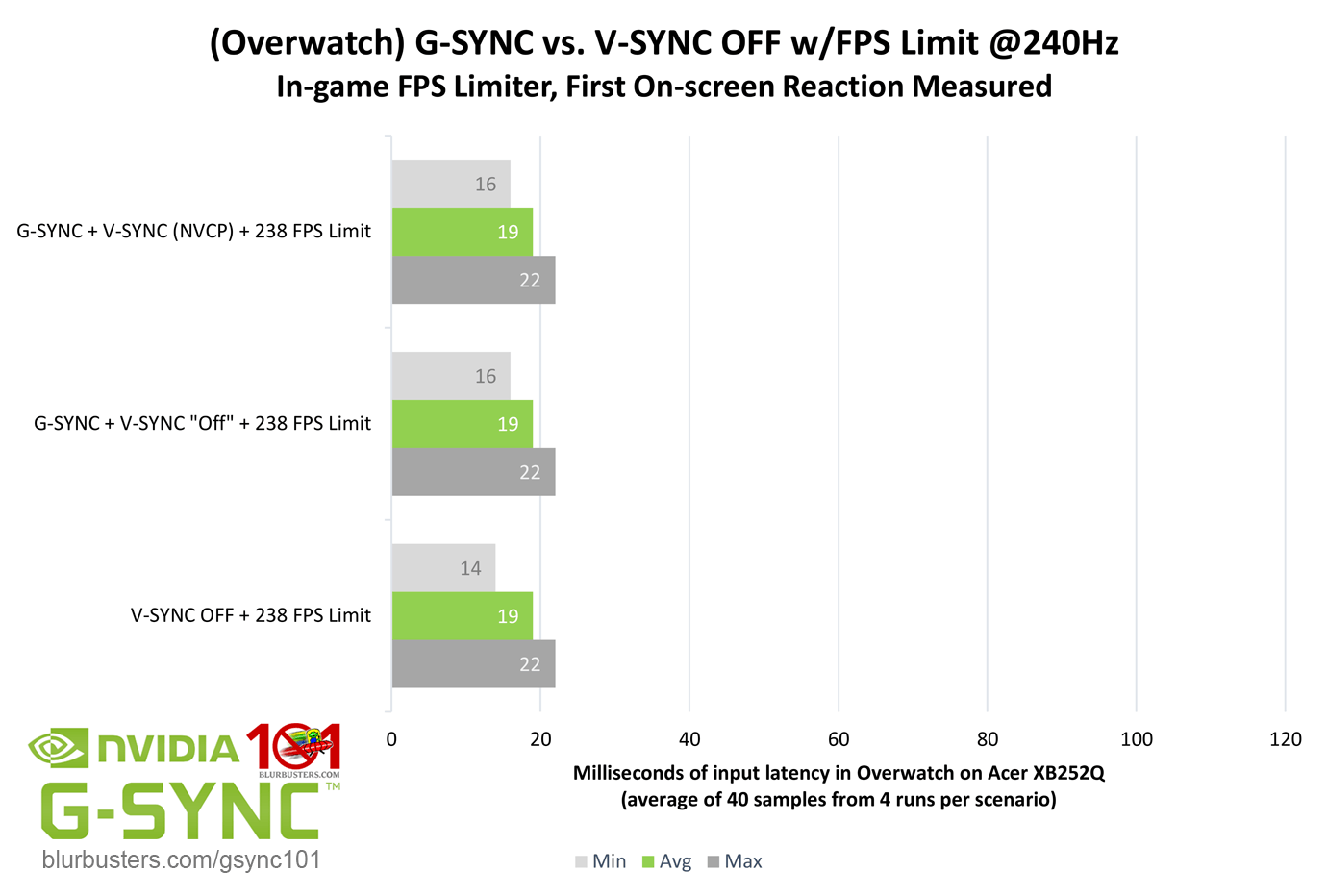

If so, this has already been tested:

https://blurbusters.com/gsync/gsync101- ... ettings/6/

That 14ms minimum in the "V-SYNC OFF + 238 FPS Limit" result above (aka your VRR off + V-SYNC option off scenario) means a single sample registered as 14ms, but one sample wasn't enough to change the average latency over the course of 40 samples.

I.e., that extra 2ms reduction for that single sample was achieved by a tearline (portions of the previous and next frame appearing simultaneously in a single scanout).
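
To put a rough number on why one sample can't move the average, here is a minimal sketch. The 40-sample count and the 2ms gain come from the numbers above; `TYPICAL_MS` is a made-up placeholder, not a measured value, and it cancels out of the result anyway.

```python
from statistics import mean

SAMPLES = 40           # sample count per scenario, as stated above
OUTLIER_GAIN_MS = 2.0  # extra reduction seen in the single tearline sample
TYPICAL_MS = 20.0      # hypothetical per-sample latency, NOT a measured value

# Scenario A: every sample lands at the typical latency.
no_tear = [TYPICAL_MS] * SAMPLES

# Scenario B: identical, except one sample is 2ms faster thanks to a tearline.
one_tear = [TYPICAL_MS] * (SAMPLES - 1) + [TYPICAL_MS - OUTLIER_GAIN_MS]

# How far that single faster sample pulls the average down.
shift_ms = mean(no_tear) - mean(one_tear)
print(f"average shift from one faster sample: {shift_ms:.2f} ms")
```

So a lone 2ms-faster sample pulls a 40-sample average down by only 2 / 40 = 0.05ms, whatever the typical latency actually is.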

In other words, any latency difference between your two scenarios is determined by whether tearing is present, nothing more.

Tearing in and of itself is a latency reducer, but of course the latency reduction comes from the tearlines themselves, which the average gamer tends to dislike the look of, hence the use case for syncing methods, VRR included.

As I noted to you in the other thread, VRR has the lowest tear-free latency possible. Any lower latency requires tearing. No way around that.