RonsonPL wrote: ↑08 Jan 2025, 12:22

- let's start with the least important one, which nobody even here will agree with

- they introduced G-SYNC, which pushed strobing away and steered discussion from "how clear the motion is" to "how fluid the motion is", planting a seed of misconceptions in many, many minds.

- introduced TAA, which sacrifices quality of moving image for antialiasing of static or very slowly moving images

- introduced variable rate shading, which basically relies on "nobody can see anything in motion so let's degrade it even more and move the power to static image"

- introduced ray tracing, path tracing and AI upscaling, which, again, pose serious problems for the popularization of clear motion in gaming.

The nice thing is that at CES 2025 I saw RTX ON graphics at >300fps on a 480Hz OLED, TAA fully disabled, and it looked much better than TAA. There's no way I'm not awarding at least a little kudos to those improved, less-fake frames.

I think NVIDIA is kinda course correcting.

Not perfect or good enough. But much better route.

Next piece will be fawning over DLSS 4, so be forewarned. But as a subset within it, I will have a fire-breathing piece again, this time roasting TAA and variable-rate shading, but kudos to DLSS 4 for being a motion-purist improvement over DLSS 3.5. The multi-frame framegen is much better than 99% of TVs' interpolation, so I give them that. The AI parallax infill has improved so much that the 3 fake frames looked better than the 4000-series' 1 fake frame. That's an accomplishment: fewer artifacts despite more fake frames. (Now... I remind you, triangles and polygons are a different way of 'faking' real life... but I digress.) We're in an era where we're down to ~0.1% of the artifacts of 2010-era Sony Motionflow interpolation. That will continue to improve.

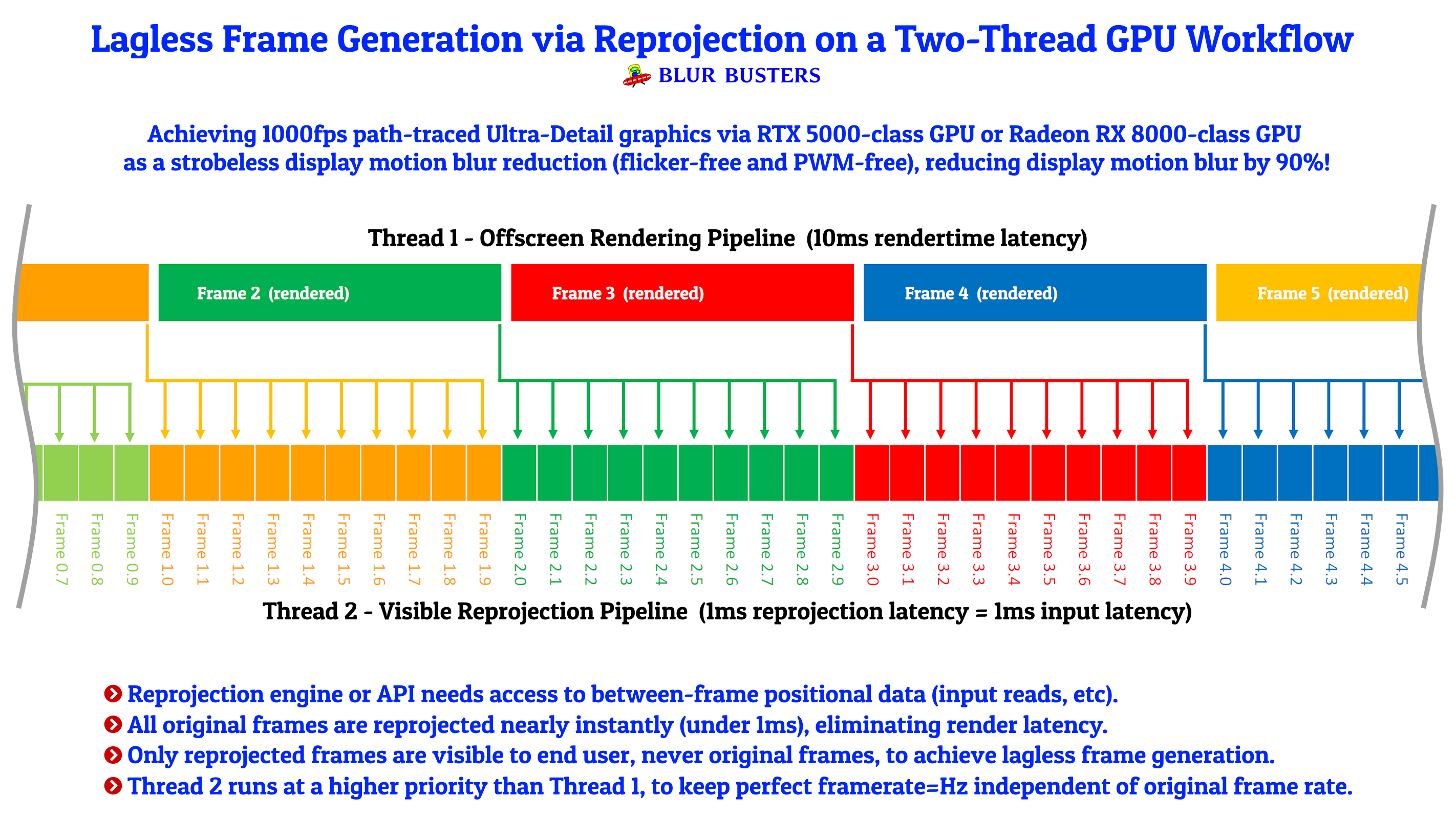

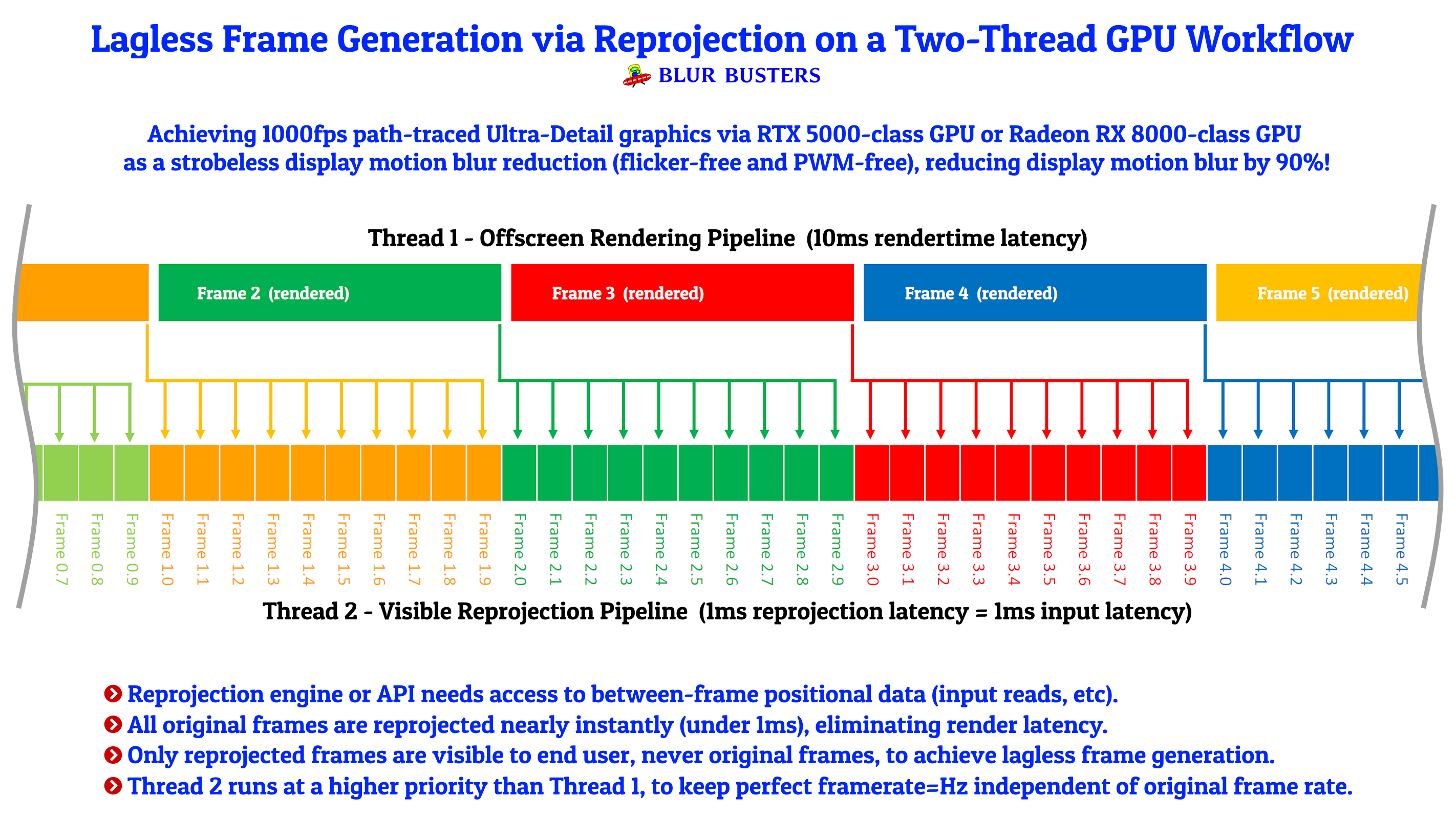

I cheerfully pointed out to NVIDIA that the clearer motion becomes -- the more TAA/VRS artifacts show -- and that they should focus on lagless & artifactless framegen instead. Correct path IMHO.
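Why clearer motion exposes TAA can be shown with a toy 1D sketch. It assumes a simplified TAA: every frame, the history buffer is reprojected by the known motion (linear interpolation for sub-pixel shifts) and blended with the new frame using a fixed weight `alpha`. Real TAA adds jitter, neighborhood clamping and more; the function names, `alpha = 0.1` and the 0.5 px/frame speed are hypothetical illustration values, not any vendor's implementation.

```python
import math


def render_edge(width, edge_x):
    """Current frame: a hard, aliased edge (1.0 left of edge_x, 0.0 right of it)."""
    return [1.0 if x < edge_x else 0.0 for x in range(width)]


def reproject(history, shift_px):
    """Fetch history at (x - shift_px) with linear interpolation, clamped at the borders."""
    n = len(history)
    out = []
    for x in range(n):
        src = x - shift_px
        i = math.floor(src)
        t = src - i
        a = history[min(max(i, 0), n - 1)]
        b = history[min(max(i + 1, 0), n - 1)]
        out.append((1.0 - t) * a + t * b)
    return out


def taa_sequence(speed_px_per_frame, frames=40, width=64, alpha=0.1):
    """Run a toy TAA loop on an edge moving at constant speed; return the final output row."""
    edge_x = 10.0
    history = render_edge(width, edge_x)
    for _ in range(frames):
        edge_x += speed_px_per_frame
        current = render_edge(width, edge_x)
        # Sub-pixel reprojection resamples (and softens) the history every frame.
        history = reproject(history, speed_px_per_frame)
        history = [(1.0 - alpha) * h + alpha * c for h, c in zip(history, current)]
    return history


def smeared_pixels(row, lo=0.05, hi=0.95):
    """Count pixels that are neither clearly dark nor clearly bright (edge smear)."""
    return sum(1 for v in row if lo < v < hi)


print("static:", smeared_pixels(taa_sequence(0.0)))
print("moving:", smeared_pixels(taa_sequence(0.5)))
```

The static edge stays perfectly sharp, while the moving edge picks up in-between gray pixels: sharp when still, softer in motion.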

Yes, a year ago, at CES 2024, I literally screamed (literally: loud voice) at two NVIDIA employees for neglecting 60fps. My new reduced-flicker CRT simulator is my tour-de-force mic drop. Seth Schneider can tell you how upset I was at NVIDIA.

Admittedly, one of my rare slips in professionalism -- but I'm an Irish ginger, after all, with those redhead stereotypes. Oh, and I'm in Canada because of an 18th century potato famine in my ancestral country. You can imagine. But I Robin Hood for my Blur Busters fans as much as I can. I run my hobby-turned-business, but I don't forget my fans. At least I try not to (I can't remember a million names & requests).

But I have to keep cordial relations with the beautifully rendered green gorilla that makes Blur Busters possible (LightBoost catapulted Blur Busters to fame). No matter the love-hate relationship, the business itself still has to love them more than hate 'em. I do get GPU samples from 'em from time to time, and I do feed a few tech suggestions back to them.

Glad to see them 'steal' (

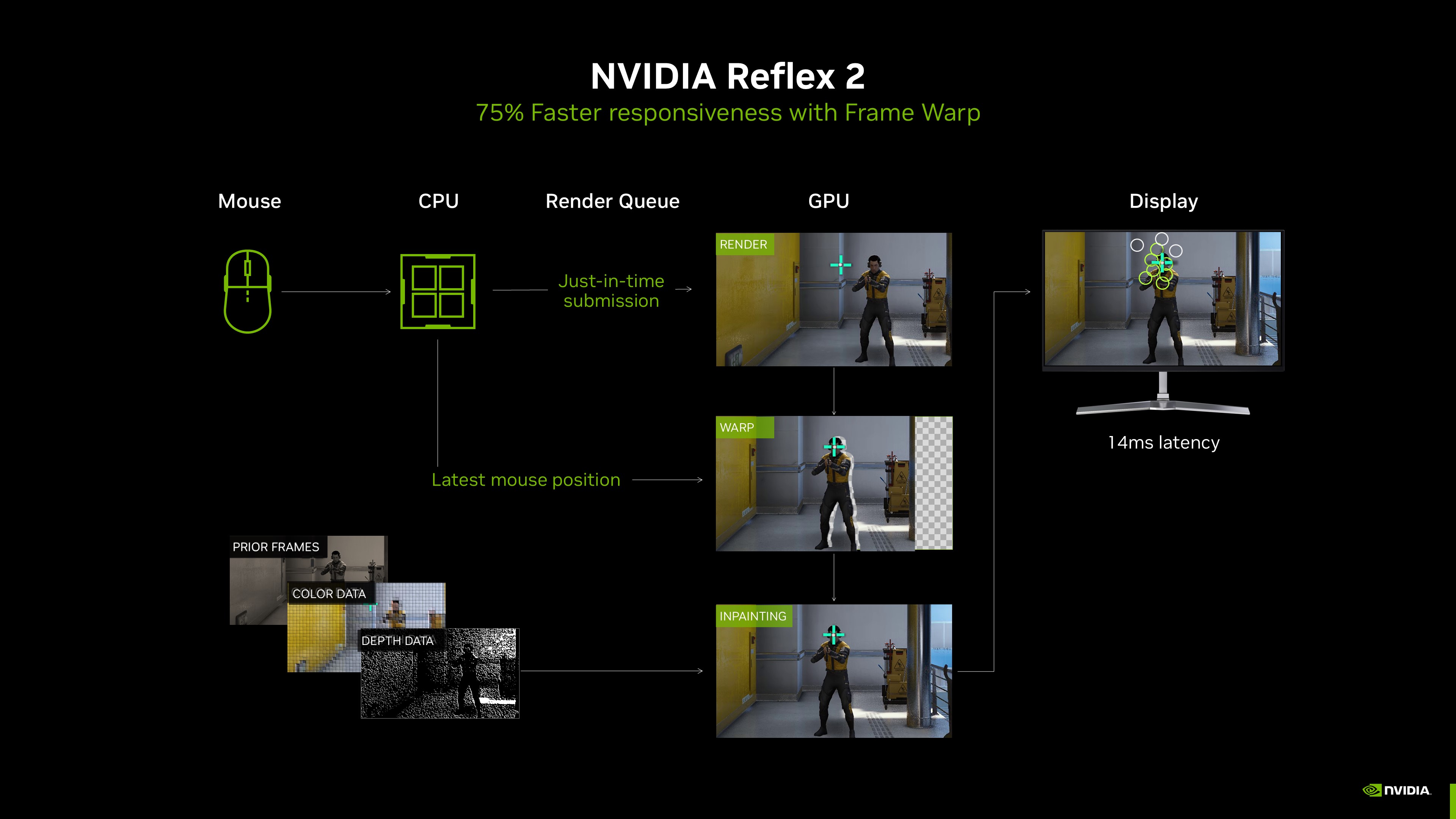

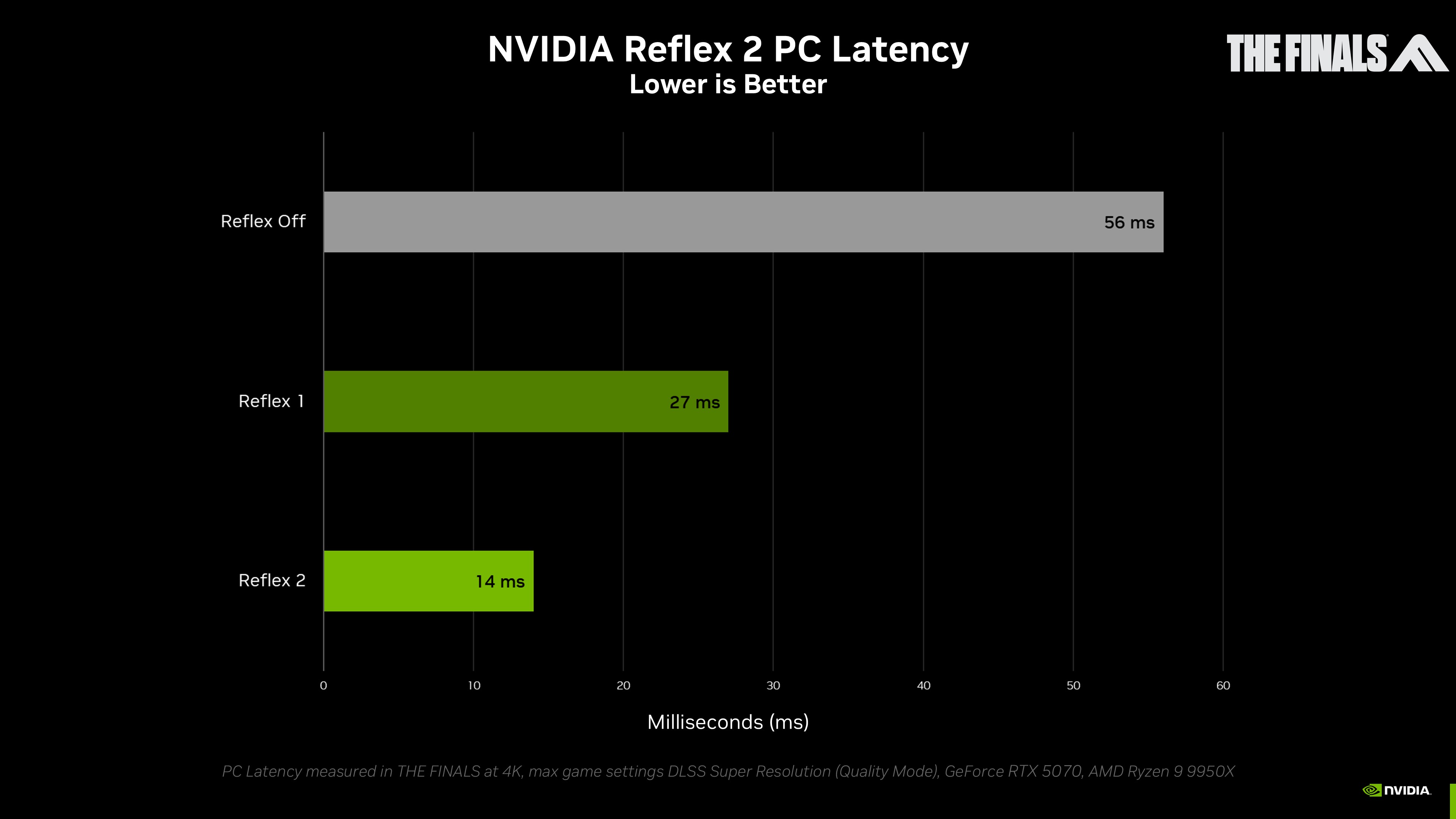

with my blessing) a small subset of the lagless framegen concepts into Reflex2 with between-frame inputreads as extra ground truth to make framegen much less fake. Now let's extend that to aggregate objects (e.g. between-frame enemy movements) to make framegen even more lagless. Even the ultra-4K-highdef textures stayed sharp during framegen this time around.
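To illustrate the general idea of a between-frame input read as extra ground truth, here is a minimal sketch that re-aims an already-rendered scanline to match the latest mouse yaw. It assumes a pure horizontal camera turn, yaw increasing when turning right, and a linear degrees-to-pixels mapping (real projection is perspective, via tan). It is not NVIDIA's Reflex 2 algorithm; `warp_row` and its parameters are hypothetical. The `None` pixels mark newly exposed areas that an AI infill step would have to paint in.

```python
def warp_row(row, yaw_rendered_deg, yaw_latest_deg, hfov_deg):
    """Re-aim one rendered scanline using a newer yaw reading.

    Returns (warped_row, shift_px). Pixels with no source data are None,
    marking the gap an infill step would need to fill.
    """
    width = len(row)
    # Linear approximation: horizontal FOV spread evenly across the row.
    shift_px = round((yaw_latest_deg - yaw_rendered_deg) * width / hfov_deg)
    warped = [None] * width
    for x in range(width):
        src = x + shift_px  # assuming yaw grows to the right: content slides left
        if 0 <= src < width:
            warped[x] = row[src]
    return warped, shift_px


frame_row = list(range(10))  # stand-in pixel values
warped, shift = warp_row(frame_row, yaw_rendered_deg=0.0, yaw_latest_deg=18.0, hfov_deg=90.0)
print(shift, warped)
```

Here the newer input says the camera turned 18 degrees after rendering, so the row slides 2 pixels and leaves a 2-pixel gap at the edge.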

I'm not the only idea generator around here, but I'm helping with a lot of the goading and amplifying since I've got fans and content creator allies that can be sic'd onto them too. We have to team up as a unified battlefront.

Even way back in 2014, beginning with the early ULMB + G-SYNC monitors: if "ULMB Pulse Width" exists in your monitor menus, it's because of me (one NVIDIA employee confirmed as much; it was inspired by the fans of LightBoost 10%, whose short-pulsewidth strobing reduces motion blur even further).

This very forum exists because of a G-SYNC giveaway, one of my first collabs with NVIDIA.

Anyway:

You can see I root for AMD too, to goad them into leapfrogging NVIDIA, at the very top of my infographic:

I play both the strobe-based blur busting game, and the framegen-based blur busting game too -- users should have a choice!

There's no way we're doing 4K 1000fps path tracing without the help of framegen, and framegen needs to improve massively, much better even than DLSS 4. But let's put it this way: framegen artifacts have fallen to less than 0.1% of a 2010-era TV interpolation system's, as long as your pre-framegen framerate is at least roughly 70-80fps. The high pre-framegen framerate is VERY important: it pushes the oscillating framegen-vs-nonframegen artifacts above the flicker fusion threshold, making them much less visible than before.
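The arithmetic behind the pre-framegen framerate point can be sketched as follows, assuming N:1 framegen shows one real frame followed by N-1 generated ones, so any visible real-vs-generated difference repeats once per real frame. The 70 Hz default is a hypothetical stand-in for the flicker fusion threshold (which varies by person, brightness and content), taken from the low end of the ~70-80fps figure above.

```python
from typing import NamedTuple


class FramegenResult(NamedTuple):
    output_fps: float         # frames actually sent to the display
    generated_share: float    # fraction of displayed frames that are generated
    artifact_cycle_hz: float  # how often the real/generated pattern repeats
    above_fusion: bool        # True if that cycle is at or above the threshold


def framegen(base_fps, ratio, fusion_hz=70.0):
    """Summarize N:1 frame generation for a given pre-framegen framerate."""
    output_fps = base_fps * ratio
    generated_share = (ratio - 1) / ratio
    artifact_cycle_hz = base_fps  # one real frame per repeating cycle
    return FramegenResult(output_fps, generated_share, artifact_cycle_hz,
                          artifact_cycle_hz >= fusion_hz)


print(framegen(120, 4))  # high base framerate
print(framegen(40, 4))   # low base framerate
```

Both cases generate 75% of displayed frames, but only the 120fps base pushes the real/generated alternation above the assumed threshold.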

And with better framegen, there are also fewer of those artifacts, but picky motion purists will notice that a pre-framegen framerate above the flicker fusion threshold is key to a sudden jump in framegen motion quality, especially on OLEDs.

True. Not all of you will like it, but that's why I am giving people choice -- by releasing the Blur Busters Open Source Display Initiative. I'll have a plasma TV shader open-sourced too in 2025-2026, as well as other display simulation shaders (like simulating LCD GtG on an OLED for better, less-stuttery 24fps Netflix; and no, I won't worsen the blacks).
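As a rough idea of what simulating LCD GtG on an OLED could mean, here is a sketch that models GtG as a single exponential approach toward each new target value, evaluated once per refresh, applied to 24fps film frames held on a 240Hz OLED. The exponential model, the `tau_ms` time constant and the function names are assumptions for illustration, not the upcoming Blur Busters shader. Because black targets stay at exactly 0.0, the filter softens transitions without lifting blacks.

```python
import math


def repeat_for_refresh(film_frames, refresh_hz=240, film_fps=24):
    """Sample-and-hold: repeat each film frame for every refresh it stays on screen."""
    repeats = refresh_hz // film_fps  # 10 refreshes per film frame at 240Hz
    return [value for value in film_frames for _ in range(repeats)]


def simulate_gtg(refresh_targets, refresh_hz=240, tau_ms=4.0):
    """Per refresh, move the pixel a fixed fraction of the way toward its target."""
    refresh_ms = 1000.0 / refresh_hz
    step = 1.0 - math.exp(-refresh_ms / tau_ms)
    value = refresh_targets[0]
    output = []
    for target in refresh_targets:
        value += (target - value) * step
        output.append(value)
    return output


levels = simulate_gtg(repeat_for_refresh([0.0, 1.0, 1.0]))
print([round(v, 3) for v in levels[8:14]])
```

The hard 24fps step from black to white becomes a short ramp across a few refreshes, which is the softening that can make low-framerate stutter less harsh.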

Framegen does look amazing *if* you have an OLED, because framerate increases reduce motion blur on OLEDs much more dramatically than on LCDs. The motion blur improvement of yesteryear framegen was too pathetic to overcome the framegen artifacts, but in the OLED era with 4:1 framegen, the motion clarity improvement massively outweighs the now much more minor detail loss of framegen.
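The rule of thumb underneath this (Blur Busters Law: 1ms of persistence adds about 1 pixel of motion blur per 1000 pixels/sec of motion) can be sketched like this. It assumes an ideal sample-and-hold display whose persistence equals the frame time, which is close to true for OLED; on LCD, slow GtG adds blur on top, which is why raising framerate pays off less there. The same formula applies to strobed MPRT figures. The speed and MPRT values are example numbers.

```python
def motion_blur_px(speed_px_per_s, persistence_ms):
    """Approximate blur trail length: motion speed times how long each frame stays lit."""
    return speed_px_per_s * persistence_ms / 1000.0


def sample_and_hold_persistence_ms(fps):
    """On an ideal sample-and-hold display at fps == Hz, each frame is visible for one frame time."""
    return 1000.0 / fps


speed = 1000.0  # pixels per second, e.g. a fast horizontal pan
for fps in (120, 480):
    blur = motion_blur_px(speed, sample_and_hold_persistence_ms(fps))
    print(f"{fps}fps sample-and-hold: ~{blur:.1f}px of blur")
for mprt_ms in (0.5, 1.0, 2.0, 3.0):
    print(f"{mprt_ms}ms MPRT: ~{motion_blur_px(speed, mprt_ms):.1f}px of blur")
```

Going from 120fps to 480fps via 4:1 framegen cuts the sample-and-hold blur trail to a quarter, landing in the same 2-3ms MPRT ballpark as a bright non-strobed HDR picture.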

Maybe wait if you just have an LCD, especially if you already have a display model with poor-quality strobing that you never use. Upgrade to 4:1 framegen if you have an OLED. The blur busting benefits of 4:1 to 8:1 framegen are gigantically dramatic on a 480Hz OLED. Some prefer strobe-based blur reduction since it can do 0.5-1ms MPRT, but some of us want the tradeoff of an extremely bright, colorful, HDR-equipped 2-3ms MPRT. And 60fps CRT simulators work more reliably on 240/360/480Hz OLEDs, if you want to blur bust your 60fps material.
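For the curious, the core idea of a CRT simulator running 60fps on a 480Hz OLED (8 refreshes per source frame) can be sketched as a rolling scan: each row is "struck" on one refresh and then decays over the following ones, with emission scaled so each row's average light output still matches the source. This is a heavily simplified toy (linear light, one exponential `decay` factor, no gamma or per-color phosphor handling), not the actual Blur Busters CRT simulator shader; all names and values are hypothetical. The clipping at 1.0 shows why bright content needs a bright display: the struck refresh must be far brighter than the source pixel.

```python
def row_weights(row, rows, refreshes, decay=0.25):
    """Share of one source frame's light that a row emits on each refresh.

    The beam reaches this row on refresh `hit`; afterwards its brightness
    decays by `decay` per refresh, wrapping into the next source frame.
    """
    hit = row * refreshes // rows
    raw = [decay ** ((k - hit) % refreshes) for k in range(refreshes)]
    total = sum(raw)
    return [w / total for w in raw]


def crt_refresh_levels(value, row, rows, refreshes=8, decay=0.25):
    """Per-refresh output for one pixel (linear light, display max = 1.0)."""
    return [min(1.0, value * refreshes * w)
            for w in row_weights(row, rows, refreshes, decay)]


dim = crt_refresh_levels(0.05, row=3, rows=8)
bright = crt_refresh_levels(1.0, row=3, rows=8)
print("dim average:", sum(dim) / len(dim))           # roughly equal to the source value
print("bright average:", sum(bright) / len(bright))  # clipped, so below 1.0
```

More refreshes per source frame give the rolling scan finer steps, one reason such simulators behave better at 240/360/480Hz.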

Now, I finally met a MiniLED display that kept up with the CRT simulator. The new Lenovo 240Hz MiniLED gaming laptop looked amazing with the CRT simulator, so I am very pleased they are fixing MiniLED latencies. LCD is solidly staying in the ballgame; it's having to catch up because of OLED.