It's my understanding that high bit depth is useful for accurately representing intermediate shades, thus preventing banding (especially important in wide color gamuts), and that Frame Rate Control (FRC) is a method used to simulate higher bit depth.

However, I've recently noticed that the majority of monitors use FRC instead of true high bit depth support, from professional high-end 4K monitors that use 8 bits + FRC to budget 1080p monitors that use 6 bits + FRC. There are some standard 1440p and 4K monitors that are true 8 bit, but also others that use FRC even though they barely reach 100% sRGB coverage, and also some 144 Hz monitors that are 8 bit but support FRC at lower refresh rates (I believe this is due to bandwidth limitations).

What is it that makes FRC so attractive for manufacturers to implement? Is it that much cheaper than true high bit depth support? Maybe FRC allows the panel/monitor to receive high bit input even though the pixels don't have the physical capability to reproduce certain shades of color without dithering?

And what advantages does FRC give to standard sRGB monitors? Maybe it improves accuracy by reducing quantization errors?

Why is FRC dithering so widely used?

Last edited by Aldagar on 08 May 2020, 06:54, edited 1 time in total.

- Chief Blur Buster

- Site Admin

- Posts: 12099

- Joined: 05 Dec 2013, 15:44

- Location: Toronto / Hamilton, Ontario, Canada

Re: Why is FRC dithering so widely used?

FRC is essentially temporal dithering to spread color depth over multiple refresh cycles.

Some technologies do temporal dithering in a sub-refresh manner (e.g. 1-bit temporal dithering at 1440Hz for DLP chips, or 600Hz plasma subfields), but LCD FRC is temporal dithering at the full refresh cycle level, which means it intentionally uses multiple refresh cycles to generate improved color depth.
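To make the idea concrete, here is a minimal Python sketch of 6bit+FRC reaching an 8-bit target over four refresh cycles. The 4-frame cycle, the "bump the first N frames" ordering and the names `frc_frames` and `perceived_8bit` are all assumptions for illustration; real panel/TCON firmware uses its own (usually undisclosed) patterns.

```python
CYCLE = 4  # refresh cycles per FRC pattern: 2 missing bits -> 4 frames (assumed)

def frc_frames(target_8bit):
    """Return the 6-bit levels shown over one 4-refresh cycle for an 8-bit target."""
    if not 0 <= target_8bit <= 255:
        raise ValueError("target must be in 0..255")
    # The top 6 bits are the native level; the low 2 bits say how many of the
    # 4 refreshes should show the next-higher native level instead.
    base, remainder = divmod(target_8bit, 4)
    frames = []
    for i in range(CYCLE):
        bump = 1 if i < remainder else 0
        # Clamp at the highest 6-bit level; this simple scheme cannot go above it.
        frames.append(min(base + bump, 63))
    return frames

def perceived_8bit(frames):
    """Time-averaged level, rescaled to the 8-bit range."""
    return 4 * sum(frames) / len(frames)

print(frc_frames(102))                  # [26, 26, 25, 25]
print(perceived_8bit(frc_frames(102)))  # 102.0
```

The eye averages the four refreshes, so alternating between native levels 25 and 26 reads as the in-between shade that the 6-bit panel cannot show directly.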

The easiest explanation is digital bandwidth limitations in the panel. It's an incredible amount of bandwidth at the pixel clock level. Trying to do over one half BILLION pixels per second is an insane Hoover Dam burst at the panel/TCON level that sometimes needs FPGA/ASIC level processing. Reducing bandwidth by 25% (6bit instead of 8bit per channel) can save enough cost to use cheaper electronics in the panel/TCON (that motherboard built into the rear of the LCD panel). It can mean the difference between a $400 monitor and a $500 monitor.

Math: 1920 x 1080 x 240 = about half a billion pixels per second (not including blanking intervals, or subpixels)

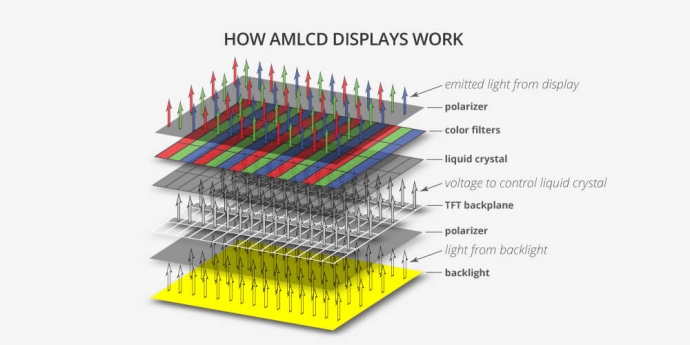

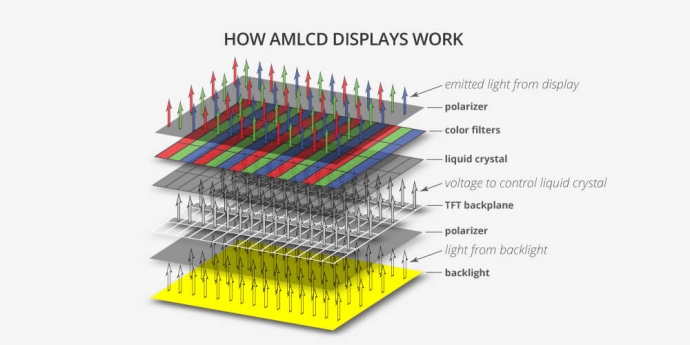

The otherwise-digital LCD pixels are inherently analog at the final molecular level (they're just rotatable liquid crystal molecules between two polarizers to block/unblock light), and the panel/TCON has to essentially do half a billion digital-to-analog conversions per second. It's easier to do a 6-bit digital-to-analog conversion than an 8-bit digital-to-analog conversion when you only have nanoseconds to do it for a single pixel.
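A quick back-of-envelope check of the "nanoseconds per pixel" figure, as a Python sketch. It assumes 1920x1080 at 240Hz, ignores blanking intervals, and treats the pipeline as purely serial, which is a simplification; the variable names are just for illustration.

```python
WIDTH, HEIGHT, REFRESH_HZ = 1920, 1080, 240

pixels_per_second = WIDTH * HEIGHT * REFRESH_HZ   # 497,664,000
subpixels_per_second = pixels_per_second * 3      # R, G and B each need a level
ns_per_pixel = 1e9 / pixels_per_second            # time budget if done one by one

print(pixels_per_second)       # 497664000
print(subpixels_per_second)    # 1492992000
print(round(ns_per_pixel, 2))  # 2.01
```

So "about half a billion pixels per second" works out to roughly 2 nanoseconds per pixel under these assumptions.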

In other words, think of continually readjusting half a billion analog room dimmers per second, literally. Your living room dimmer with the slider or analog knob? Each subpixel is like that, for one refresh cycle. The monitor's chip has to readjust the amount of electricity going through a pixel half a billion times per second. That's tough to do cheaply at full bit depth while also controlling all those analog GtG transitions for every single subpixel, every refresh cycle. It's already an engineering miracle that LCDs are cheaper than $5000 today.

Very old LCDs, like an early portable TV or an old 1994 ThinkPad, had a lot of pixel noise because the electronics weren't able to adjust pixels as accurately as they can today.

LCDs weren't meant to drive screens originally -- they were invented for wristwatches and calculators in the 1970s. Today LCDs drive beautiful retina smartphone screens, fancy 4K UHD TVs, and ultra-high-Hz gaming monitors.

8bit equals 256 levels of grey per color channel (red, green, and blue). Imagine 256 dimming levels for an LCD wristwatch, and having to repeat those 256 levels for each subpixel, hundreds of times per second (240 times per second for 240Hz).

The LCD in a gaming monitor still works on exactly the same law of physics as a 1970s wristwatch. Just way more pixels, and those monochrome LCD pixels filtered to color.

So giving up a few bits to make the firehose easier to manage can save quite a bit of cost: 6bit+FRC (for 8bit) or 8bit+FRC (for 10bit). An algorithm virtually identical to FRC can also be done at the GPU level. NVIDIA GPUs automatically do that (GPU level temporal dithering) when the DVI or DisplayPort link is put into 6bit mode, especially during the 220Hz overclocking of a BenQ XL2720Z.

A pixel clock of half a billion pixels per second is 12 gigabits per second at 24-bits (8+8+8) but only 9 gigabits per second at 18-bits (6+6+6).
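The arithmetic can be checked with a few lines of Python. This is only a sketch of the raw payload rate (pixels x bits), assuming 1920x1080 at 240Hz with no blanking and no link encoding overhead; `payload_gbps` is a made-up helper name.

```python
def payload_gbps(pixels_per_second, bits_per_channel, channels=3):
    """Raw pixel payload in gigabits per second (no blanking, no link overhead)."""
    return pixels_per_second * bits_per_channel * channels / 1e9

rate = 1920 * 1080 * 240  # about half a billion pixels per second

full = payload_gbps(rate, 8)     # 24 bits per pixel
reduced = payload_gbps(rate, 6)  # 18 bits per pixel

print(round(full, 2))      # 11.94
print(round(reduced, 2))   # 8.96
print(reduced / full)      # 0.75
```

Dropping from 8 to 6 bits per channel removes a quarter of the payload, which matches the 25% bandwidth reduction mentioned earlier.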

Banding is visible even at 10-bits on a 10,000nit HDR monitor.

FRC is still advantageous even at 8bits (to generate 10bits) and 10bits (to generate 12bits).

Banding artifacts show up in smoky haze, sky, or other gradients. Adding extra bits can eliminate them.

At 400 nits, having only 8-bits is okay. But if you add 10x more brightness range, you need 10x more levels to keep the steps equally fine... even 10bits is not enough to eliminate banding on a 10,000 nit HDR display. That's why we've started using floating-point color and/or 12-bit color, to eliminate banding in ultrawide gamuts.
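As a rough worked example, the Python sketch below estimates how many bits keep the same nits-per-step when peak brightness grows. It assumes plain linear coding, which is a deliberate simplification: real HDR signals use nonlinear transfer functions such as PQ, so the practical requirement is lower than this. `bits_for_same_step` is a hypothetical helper.

```python
import math

def bits_for_same_step(base_bits, base_nits, peak_nits):
    """Bits needed to keep the same nits-per-step at a higher peak (linear coding assumed)."""
    # Each doubling of the brightness range costs one extra bit.
    return base_bits + math.log2(peak_nits / base_nits)

ten_times = bits_for_same_step(8, 400, 4_000)     # 10x the range of a 400-nit display
hdr_10k = bits_for_same_step(8, 400, 10_000)      # 25x the range

print(round(ten_times, 2))  # 11.32
print(round(hdr_10k, 2))    # 12.64
```

Under this linear assumption, 10x more range costs a bit over 3 extra bits, and a 10,000 nit peak lands above 12 bits, which is in line with 10bits not being enough and 12-bit linear sometimes falling short.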

So FRC is still super-useful even with 10bit HDR displays to generate 12bits.

Temporal artifacts from FRC are much less noticeable for 8bit+FRC than for 6bit+FRC.

You could dynamically shift gamut around (e.g. dynamic contrast) but HDR is superior because it's per-pixel dynamic contrast. For that, you need humongous bitdepth. Imagine 8-bit color, combined with extra bits for extra backlight brightness levels, to create 12-bits, and sometimes 12-bit linear is not enough to eliminate banding in extreme-HDR situations, so you have those new 16bit and floating point color depth systems.

10bit is usually sufficient and good enough, but limitations certainly show, especially if breaking past 1,000 nits to the 10,000 nits territory. I saw demo HDR material on prototype 10,000 nits displays and the effect is quite impressive -- e.g. streetlamps and neon lights in night scenes, to things like sun reflection off a shiny car ("whites far brighter than white on those sun reflections"), and other things.

It's an amazing sight to behold, but it gobbles oodles of color depth. Many of these displays can't do this bitdepth natively and have to use internal FRC-style algorithms to keep up with the expanded HDR color depths. Sometimes it's done at the sub-refresh level (e.g. 120Hz FRC on 60Hz material). That's still much more natural looking than ultra-high-Hz DLP.

Head of Blur Busters - BlurBusters.com | TestUFO.com | Follow @BlurBusters on: BlueSky | Twitter | Facebook

Forum Rules wrote: 1. Rule #1: Be Nice. This is published forum rule #1. Even To Newbies & People You Disagree With!

2. Please report rule violations If you see a post that violates forum rules, then report the post.

3. ALWAYS respect indie testers here. See how indies are bootstrapping Blur Busters research!

Re: Why is FRC dithering so widely used?

Very interesting. So, am I wrong to assume that FRC dithering is affected by resolution and refresh rate, especially considering some LCD technologies like IPS and VA have very slow pixel response times?

Also, I'm wondering if FRC looks worse on motion than on static images. I can see some kind of "noise" in darker shades on my 8 bit + FRC and 6 bit + FRC monitors, both IPS and 60Hz. And from what you have explained, even on a true 8 bit panel receiving an 8 bit source, there can still be dithering caused by software or GPU drivers.

I do not think it's something to worry about since it's very faint and I have to focus to notice it, but I'm interested nonetheless.

- Chief Blur Buster

Re: Why is FRC dithering so widely used?

Human vision already has natural noise (but the brain filters most of it out). The same problem affects camera sensors.

The important job is to make sure FRC/temporal dithering is below the noise floor of human vision. Also, at high resolution, FRC pixels are so fine that it's almost like invisible spatial dithering instead of temporal dithering. And FRC dithers only between adjacent colors in the color gamut, not between widely-spaced colors (as some technologies such as DLP have to).
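One way to picture the "almost like spatial dithering" point is to give neighbouring pixels different phases in the FRC cycle, so that in any single refresh only some of them show the higher level. The Python sketch below assumes a 2x2 phase tile and a 4-frame cycle; the `PHASE` table and `frc_level` are illustrative inventions, not a documented panel algorithm.

```python
# Hypothetical 2x2 tile of phase offsets within the 4-frame FRC cycle.
PHASE = [[0, 2],
         [3, 1]]

def frc_level(target_8bit, x, y, frame):
    """6-bit level shown at pixel (x, y) on a given refresh for an 8-bit target."""
    base, remainder = divmod(target_8bit, 4)
    # Each pixel in the tile is at a different point of the cycle on any frame.
    phase = (PHASE[y % 2][x % 2] + frame) % 4
    bump = 1 if phase < remainder else 0
    return min(base + bump, 63)

target = 101  # between native levels 25 and 26
for frame in range(4):
    tile = [[frc_level(target, x, y, frame) for x in range(2)] for y in range(2)]
    print(frame, tile)
```

With this layout every 2x2 tile averages to the target on every single refresh, and every pixel also averages to the target over four refreshes, so the dither is spread over both space and time.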

Aldagar wrote: ↑08 May 2020, 06:53 Also, I'm wondering if FRC looks worse on motion than on static images. I can see some kind of "noise" in darker shades on my 8 bit + FRC and 6 bit + FRC monitors, both IPS and 60Hz. And from what you have explained, even on a true 8 bit panel receiving an 8 bit source, there can still be dithering caused by software or GPU drivers.

Yes, motion amplifies temporal dithering visibility.

It's a bigger problem for more binary dithering (DLP/plasma) and a much smaller problem for FRC dithering (LCD) because of the distance between dithered color pairs in the color gamut. DLP noisy darks and plasma "christmas tree effect" pixels in darks are usually more noticeable dither noise (at similar viewing distance for a similar size / similar resolution display) than LCD FRC noise in dim shades.
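To put rough numbers on the distance between dithered pairs, this Python sketch compares the swing between the two alternated levels as a percentage of full scale. It works in raw signal levels and ignores gamma and perception, so treat it as an order-of-magnitude illustration only; `dither_swing_percent` is a hypothetical helper.

```python
def dither_swing_percent(native_bits):
    """Gap between adjacent native levels, as a percentage of full scale."""
    return 100.0 / (2 ** native_bits - 1)

print(dither_swing_percent(1))            # 100.0 (binary on/off dithering)
print(round(dither_swing_percent(6), 2))  # 1.59  (6bit+FRC)
print(round(dither_swing_percent(8), 2))  # 0.39  (8bit+FRC)
```

A 1-bit device has to flicker between fully off and fully on, while FRC only alternates between two neighbouring levels, and the gap is about four times smaller again on an 8-bit panel than on a 6-bit one.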

Mind you, turning off FRC (or defeating FRC via software-based black frame insertion) will mean two different near-shades end up looking identical. Slight darn-near-invisible noise is preferable to banding/contouring/identical shades.
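A tiny Python sketch of that collapse, assuming a 6-bit panel that simply drops the two low bits when FRC is off (an assumption; a real panel may round differently):

```python
def to_6bit(value_8bit):
    """Truncate an 8-bit level to the panel's native 6 bits (no FRC)."""
    return value_8bit >> 2

shades = [100, 101, 102, 103]           # four distinct 8-bit shades
native = [to_6bit(s) for s in shades]   # what the panel can actually show

print(native)       # [25, 25, 25, 25]
print(set(native))  # {25}
```

Four neighbouring source shades land on one native level, which is exactly the banding that FRC trades for a little noise.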

Also, the noise in dim colors is not necessarily always FRC-based on all panels. It's almost always the case for 6bit+FRC though. There can be noise in the pixel drivers (poor electronics in the panel driver sometimes creates noise much akin to analog VGA noise, even for a digital input, but this is fortunately rare nowadays). Voltage jitter during voltage inversion algorithms, or other mundane causes, can also produce pixel noise. Early color LCDs (25 years ago) often had a bit of analog-like pixel noise independently of FRC noise, but most of this is now far below the human noise floor.
