Mark Fable wrote: ↑06 Mar 2022, 17:11

TLDR: I'm a VSync user. I use VSync because I hate screen tearing and I'm very sensitive to it. My understanding of VSync is that, given a double buffer scenario, VSync at 60 Hz should not add any more than ~33ms of latency.

Here's my thought process: You have a 60 Hz monitor that takes 1/60th of a second to display a frame before it switches to the next frame. With double buffer VSync (which is the standard) you have a back buffer that holds a frame from the GPU and sends that frame to the monitor when the monitor is done displaying the previous frame. Knowing that, shouldn't the maximum latency being added by VSync be 16.67ms plus 16.67ms to give ~33ms? I see some people claiming that VSync can add more than 50ms, but I don't see how this is possible in this scenario.

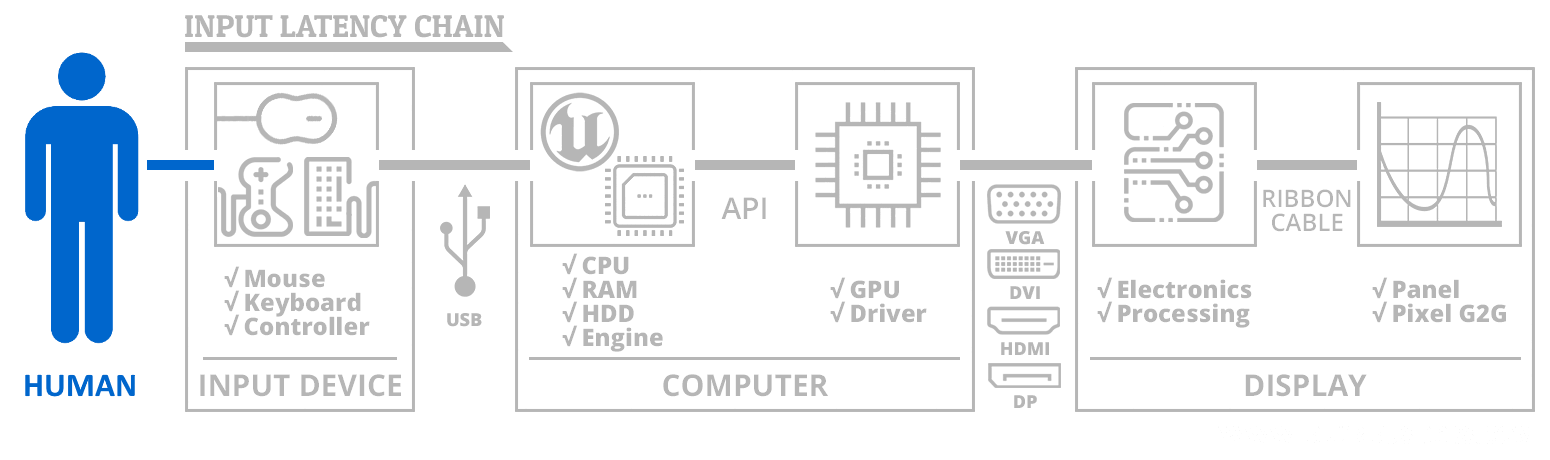

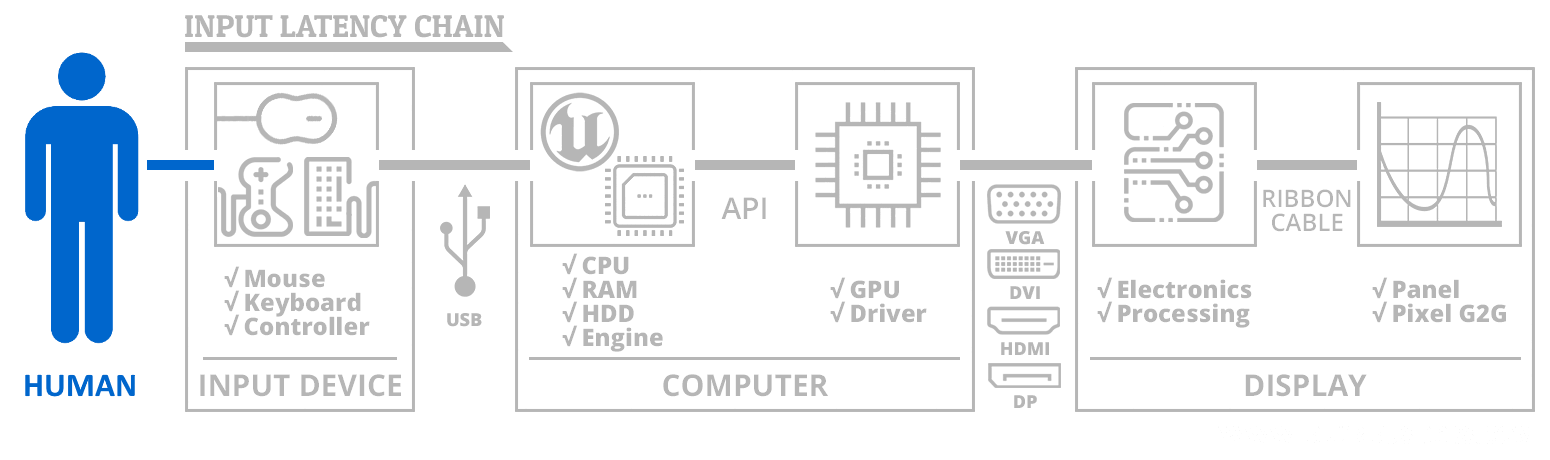

The input lag chain is long, with different latencies in different parts of the VSYNC chain.

That's why in some games, VSYNC ON at 60Hz can exceed 100ms of latency, when all the factors pile up simultaneously. You might not feel that directly, however.

What this means is that if you have terrible lag in each stage, you can have more than 100ms of latency in a double buffer scenario, especially if there are multiple blocking stages.
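To make the pile-up concrete, here is a rough sketch that simply adds up per-stage latencies. The stage names and millisecond values are hypothetical placeholders chosen for illustration, not measurements of any real game or display.

```python
# Illustrative only: every stage name and value below is an assumption.
REFRESH_HZ = 60
FRAME_MS = 1000 / REFRESH_HZ  # one 60Hz refresh cycle, about 16.67ms


def total_latency_ms(stages):
    """Sum the latency contributed by every stage in the chain."""
    return sum(stages.values())


# The "ideal" double buffer picture from the question: wait + scanout.
ideal_chain = {
    "frame waiting in back buffer": FRAME_MS,
    "scanout of that frame": FRAME_MS,
}

# A pessimistic chain where several blocking stages stack up.
piled_up_chain = {
    "mouse polling at 125 Hz (worst case)": 8.0,
    "game simulation + render": FRAME_MS,
    "queued frames waiting for VSYNC (3 frames)": 3 * FRAME_MS,
    "display-side buffering (1 refresh)": FRAME_MS,
    "scanout to bottom of screen": FRAME_MS,
}

if __name__ == "__main__":
    print(f"ideal chain:     {total_latency_ms(ideal_chain):.1f} ms")
    print(f"piled-up chain:  {total_latency_ms(piled_up_chain):.1f} ms")
```

With these made-up numbers the ideal chain lands at the ~33ms from the question, while the piled-up chain passes 100ms without any single stage looking unreasonable.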

A higher framerate on the GPU (e.g. 240fps) can reduce GPU lag to about 4ms per frame, especially if it's run via a "replace-latest-undisplayed-buffered-frame" triple buffer algorithm, or if using a VRR display that refreshes on demand. (A VRR display refreshes immediately on frame presentation; the display is actually waiting for the software to present a frame. So a game doing 52fps means the display is doing a perfect 52Hz.) So VRR is a method of getting a perfect VSYNC ON look and feel (www.testufo.com/vrr) at framerates not integer-divisible by Hz -- and it also erases framerate-transition stutters (60fps-60fps-59fps-60fps-60fps is stutterless on a VRR display, since framedrop stutters are erased by VRR).
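As a rough illustration of why 52fps stutters on a fixed 60Hz VSYNC display but not on VRR, the sketch below uses a deliberately simplified model: frames are assumed to finish at perfectly even 1/52 sec intervals, a fixed-Hz display shows each frame at the first refresh boundary at or after it is ready, and a VRR display shows it immediately. Back-pressure from blocking buffers is ignored, and the function names are my own.

```python
from fractions import Fraction
import math


def frame_time_ms(rate):
    """Duration of one frame (or one refresh cycle) in milliseconds."""
    return 1000.0 / rate


def display_times_fixed(fps, hz, n):
    """Visible time of each frame on a fixed-Hz VSYNC display (simplified)."""
    refresh = Fraction(1, hz)
    # Each frame waits for the first refresh boundary at or after it is ready.
    return [math.ceil(Fraction(i, fps) / refresh) * refresh for i in range(n)]


def display_times_vrr(fps, n):
    """Visible time of each frame on a VRR display: shown as soon as presented."""
    return [Fraction(i, fps) for i in range(n)]


def intervals_ms(times):
    """Gaps between consecutive visible frames, in milliseconds."""
    return [round(float((b - a) * 1000), 3) for a, b in zip(times, times[1:])]


if __name__ == "__main__":
    print(f"240fps frame time: {frame_time_ms(240):.2f} ms")
    print("fixed 60Hz:", intervals_ms(display_times_fixed(52, 60, 12)))
    print("VRR:       ", intervals_ms(display_times_vrr(52, 12)))
```

In this model the fixed 60Hz display alternates between one-refresh and two-refresh gaps (the stutter), while the VRR display produces the same gap for every frame.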

Reducing latency without increasing the frame rate can require several concurrent moves:

- 1000Hz+ mice

- NVIDIA's NULL setting

- Games that don't have their framebuffer queues daisy-chained to the driver's double buffering queue

- Get higher Hz to reduce lag of 60fps, by utilizing VRR or QFT tricks (e.g. "60Hz" refresh cycles refreshed in 1/240sec)

- Choosing a display that has less tapedelay latency. Some displays have to buffer refresh cycles on the opposite end of the cable, while others can stream pixel rows straight to the screen (a video cable is a serialization of 2D data into a 1D cable -- see high speed videos of refresh cycles)

Etc.
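Two of the items above come down to simple arithmetic, sketched here under simplifying assumptions: the mouse delay is the worst-case wait for the next poll, and the scanout delay assumes rows are delivered at a constant rate from top to bottom with blanking intervals ignored. The function names are illustrative, not part of any tool.

```python
def max_polling_delay_ms(polling_hz):
    """Worst-case wait until the next mouse poll, in milliseconds."""
    return 1000.0 / polling_hz


def scanout_delay_ms(row_fraction, scanout_hz):
    """Delay until a pixel row is scanned out.

    row_fraction: 0.0 = top of screen, 1.0 = bottom of screen.
    scanout_hz:   speed of one refresh cycle's delivery, e.g. 240 for a
                  "60Hz" refresh cycle delivered in 1/240 sec via QFT.
    Blanking intervals are ignored in this sketch.
    """
    return row_fraction * 1000.0 / scanout_hz


if __name__ == "__main__":
    for hz in (125, 1000):
        print(f"{hz} Hz mouse: up to {max_polling_delay_ms(hz):.1f} ms")
    for hz in (60, 240):
        print(f"bottom row at 1/{hz} sec scanout: "
              f"{scanout_delay_ms(1.0, hz):.2f} ms")
```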

Mark Fable wrote: ↑06 Mar 2022, 17:11

Now, another question: When using Windows 10 for normal desktop tasks, VSync is always forced by Windows, yet no one complains about latency or notices a delay in clicking response and mouse movement. Isn't this implementation of VSync the same (or probably worse due to triple buffering) as what we get when we enable VSync in a game like PUBG: Battlegrounds? That game takes raw mouse and keyboard input, so it should function the same as Windows.

The Windows DWM compositor is a double-buffered implementation, so it typically has 2 frames of latency at the DWM level. However, individual software can sometimes bypass this (force things to display sooner) or add an additional frame queue (which adds MORE frames of latency). For example, a 2-frame queue in software plus a 2-frame queue in the driver = 4 frames of latency.
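The queue arithmetic can be sketched as follows. This assumes every queued frame costs exactly one refresh cycle, which is a simplification, and the layer lists are hypothetical examples.

```python
def queue_latency_ms(queue_depths, refresh_hz):
    """Latency from chained frame queues, assuming one refresh cycle per queued frame.

    queue_depths: frames queued at each layer, e.g. [2, 2] for a 2-frame
                  software queue feeding a 2-frame driver queue.
    """
    frames = sum(queue_depths)
    return frames * 1000.0 / refresh_hz


if __name__ == "__main__":
    # 2 frames in software + 2 frames in the driver, at different refresh rates.
    for hz in (60, 240):
        print(f"{hz} Hz, queues [2, 2]: {queue_latency_ms([2, 2], hz):.1f} ms")
    # A hypothetical third layer, such as a virtual machine wrapper.
    print(f"60 Hz, queues [2, 2, 2]: {queue_latency_ms([2, 2, 2], 60):.1f} ms")
```

The same 4-frame queue costs about a quarter as much time at 240Hz as it does at 60Hz, because each queued frame lasts a quarter as long.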

These days, things are so abstracted/virtualized that you can have multiple double buffers daisy-chaining off each other, each thinking the next step is the display when it is actually a wrapper. It gets even uglier if you're running things inside a virtual machine; then you may have three layers (or four!) of double buffers daisy-chaining into each other. And the display side might even have its own double buffer too, if it needs to use full framebuffer processing algorithms (all plasmas and DLP required it).

Tons of optimization is necessary to merge double buffering responsibilities to the bare minimum, and VRR is one method.

I've seen this happen, especially with laggy web browsers adding 3-4 frames in the past. But that was fixed with some optimizations merging driver/software responsibilities better.

This, of course, excludes the tapedelay-style latency of many 60Hz screens that have to buffer refresh cycles before displaying them. Most gaming monitors are realtime-streaming-to-scanout (like CRT), with just linebuffered processing.

Mark Fable wrote: ↑06 Mar 2022, 17:11

I am aware that VSync adds latency, but isn't the added latency (while in-game) the same as what you get when you're using Windows normally? In that case, I should have no issues using VSync in-game, as I notice no latency while using Windows. My monitor is 60 Hz and I can't stand screen tearing. I also do not notice any added latency when using VSync. I hope someone can confirm my thoughts on this topic.

You don't have to feel the millisecond to win by the millisecond.

Just like two Olympic athletes can cross a finish line 20ms apart and not know who won until they see the scoreboard.

Likewise, in a game, you and your enemy see each other at the same time in a hallway. You both start shooting with exactly the same human reaction time. The system that delivers button-to-photons 10ms sooner will usually win the day. Even the 60fps-on-240Hz user will frag you first versus a 60fps-on-60Hz user.

See Amazing Human Visible Feats Of The Millisecond, and if you studied at university, you will probably fall in love with the Blur Busters Research Portal (Area51) to learn more about how displays refresh themselves.

Addendum

I presume you are asking this question because you only use 60Hz. Are you aware that increased refresh rates also reduce tearing visibility, too, and also that wider VRR ranges reduce middle-framerate stutter even more (e.g. 30Hz-240Hz VRR range)?

If you hate tearing, then you will absolutely adore VRR, because that's the world's lowest lag "looks-like-VSYNC-ON" technology.

Plus...

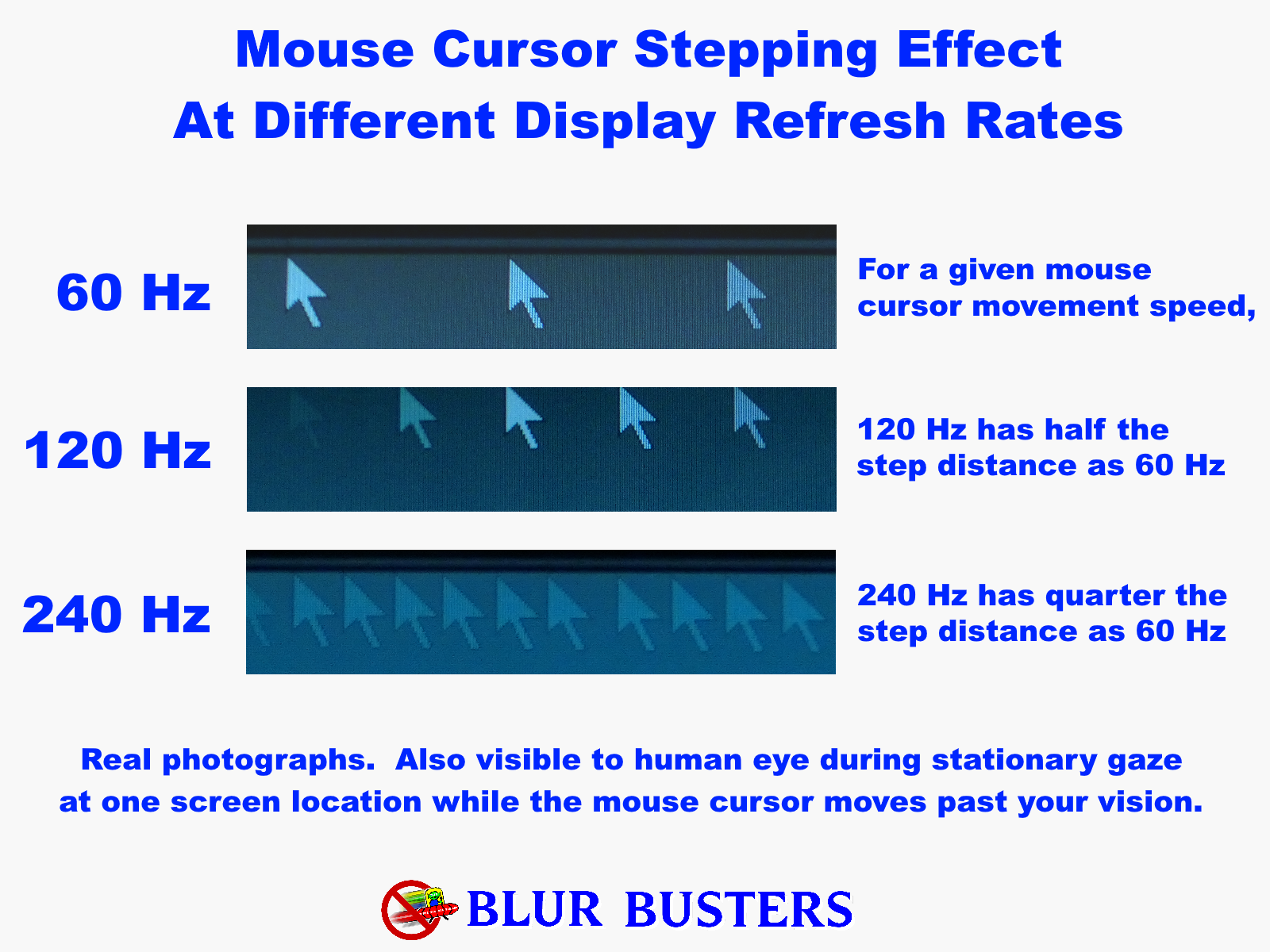

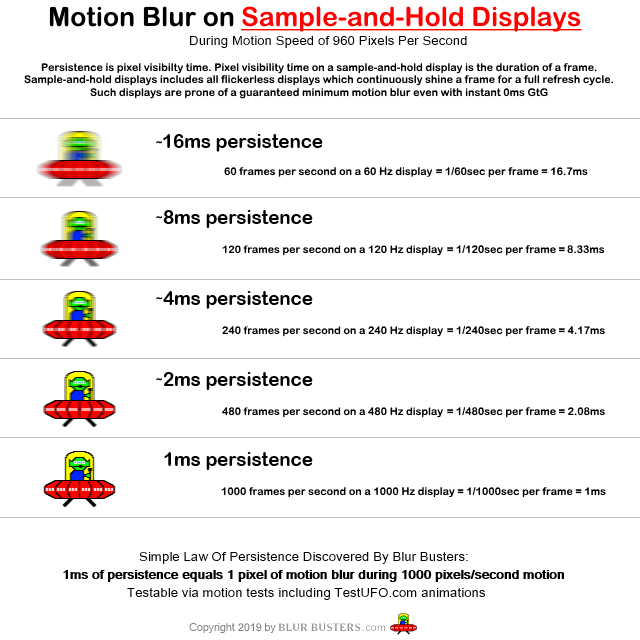

As observed, there's a diminishing curve of returns, which is why I recommend 3x or 4x refresh rate upgrades instead of just 1.5x or 2x refresh rate upgrades, if you don't see much difference in 2x refresh rate upgrades. So there are other reasons to use higher Hz, not just eliminating tearing and stutter.

One common situation is that if you've never tried 240Hz or 360Hz, you don't usually realize how laggy 60Hz is. It's easier to understand the lag of 60Hz when you've tried a system with 1/4th the latency. It's also why even grandmas can notice 60Hz-vs-240Hz; it's more visible than VHS-vs-DVD. Even web browser scrolling has 1/4th the motion blur at 240Hz (while having 1/4th the lag too -- but you notice the reduced motion blur more).

Geometric upgrades on the refresh rate curve are necessary to punch through the diminishing curve of returns, which is why I recommend non-gamers upgrade by multipliers rather than absolute increments.

60 -> 120 -> 240 -> 480 -> 1000 Hz (2x upgrade)

60 -> 144 -> 360 -> 1000 Hz (2.5x upgrade)

60 -> 240 -> 1000 Hz (4x upgrade)
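A quick check of the three ladders above shows that each step is roughly the labelled multiplier. The ladders are copied from the lists above and the helper name is illustrative.

```python
def step_ratios(ladder):
    """Multiplier between each consecutive pair of refresh rates."""
    return [round(b / a, 2) for a, b in zip(ladder, ladder[1:])]


LADDERS = {
    "2x": [60, 120, 240, 480, 1000],
    "2.5x": [60, 144, 360, 1000],
    "4x": [60, 240, 1000],
}

if __name__ == "__main__":
    for label, ladder in LADDERS.items():
        print(f"{label} ladder {ladder}: step ratios {step_ratios(ladder)}")
```

The final jump to 1000 Hz is slightly larger than the nominal multiplier in each ladder, so the labels are approximate rather than exact.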

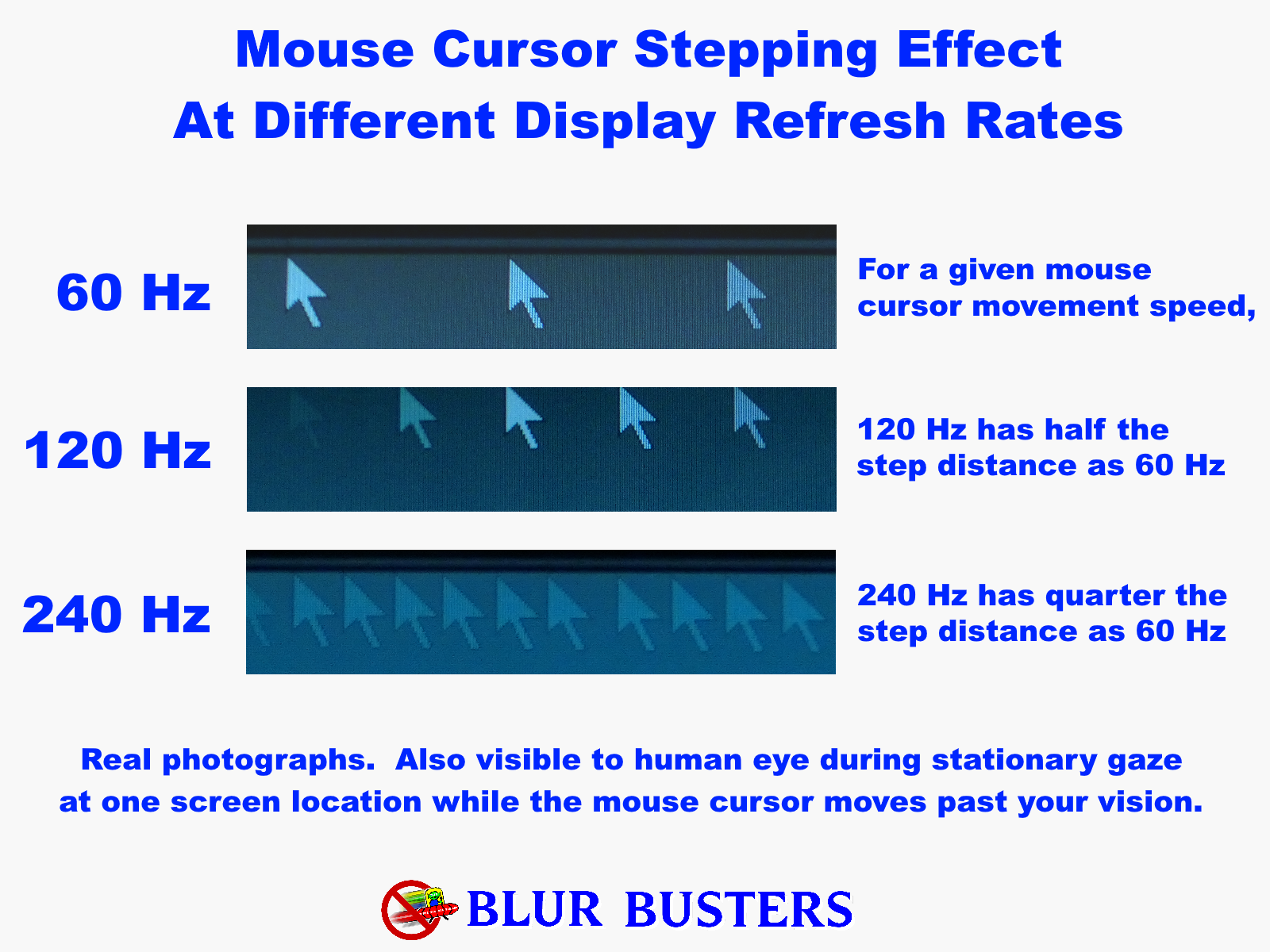

People in esports can go whoopee over small Hz increments (e.g. 240Hz -> 280Hz -> 360Hz), but average laypeople see refresh upgrade benefits when the upgrade is geometric (the situation is similar to spatial resolution steps like 480p -> 1080p -> 4K -> 8K), because of the stroboscopic stepping benefit (see The Stroboscopic Effect of Finite Frame Rate) as well as the motion blur reducing benefit.

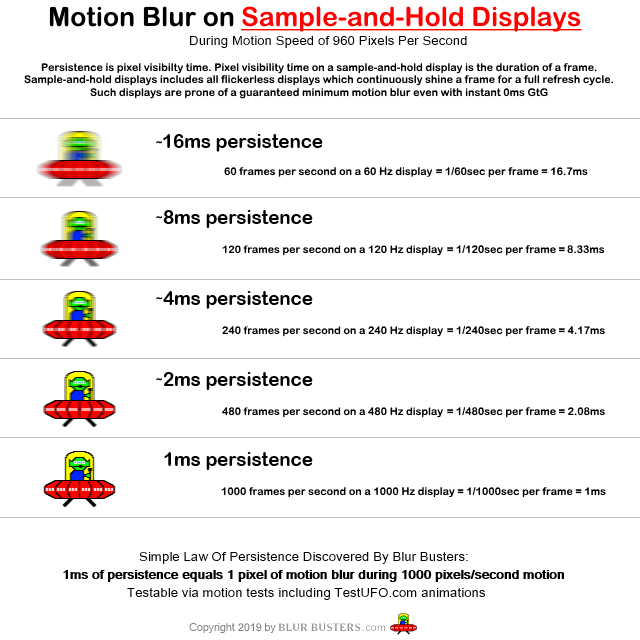

See Blur Busters Law: The Amazing Journey to Future 1000 Hz Displays, as well as Pixel Response FAQ: Two Pixel Response Benchmarks: GtG Versus MPRT. You may have seen these already, but in case you or another reader has not, they are worthwhile reading for people who want to understand the properly researched science. (I'm in over 20 peer reviewed papers.)

The motion blur equivalence is similar to camera shutter, e.g. SLR camera 1/60sec versus 1/240sec. It's harder to see 2x differences in blur, than 4x differences in motion blur trail size, for example.
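The shutter analogy can be put into numbers with a small sketch. It assumes an idealized sample-and-hold display running at framerate=Hz with instant pixel response and no strobing, so each frame stays visible for one full refresh cycle; the 960 pixels/sec scrolling speed is just an example value.

```python
def persistence_ms(refresh_hz):
    """How long each frame stays visible on an idealized sample-and-hold display."""
    return 1000.0 / refresh_hz


def blur_px(speed_px_per_s, refresh_hz):
    """Approximate motion blur trail length for eye-tracked motion.

    Assumes framerate == Hz, instant pixel response, and no strobing,
    so the blur trail is the distance moved during one refresh cycle.
    """
    return speed_px_per_s / refresh_hz


if __name__ == "__main__":
    speed = 960  # example scrolling speed in pixels per second
    for hz in (60, 120, 240):
        print(f"{hz} Hz: persistence {persistence_ms(hz):.2f} ms, "
              f"blur about {blur_px(speed, hz):.0f} px at {speed} px/s")
```

Under these assumptions the blur trail at 240Hz is a quarter the size of the one at 60Hz, matching the 1/60sec versus 1/240sec shutter comparison.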

Also, if your browser properly supports framerate=Hz animation, VRR looks like this (click to go fuller screen):

(This simulated VRR uses frame blending/interpolation to emulate VRR on a non-VRR display)